Apple has taken a significant step in artificial intelligence with Apple MGIE, an open-source AI model that lets users edit images through natural-language instructions. MGIE, short for MLLM-Guided Image Editing, uses multimodal large language models (MLLMs) to interpret a user's command and then carry out the corresponding pixel-level changes.

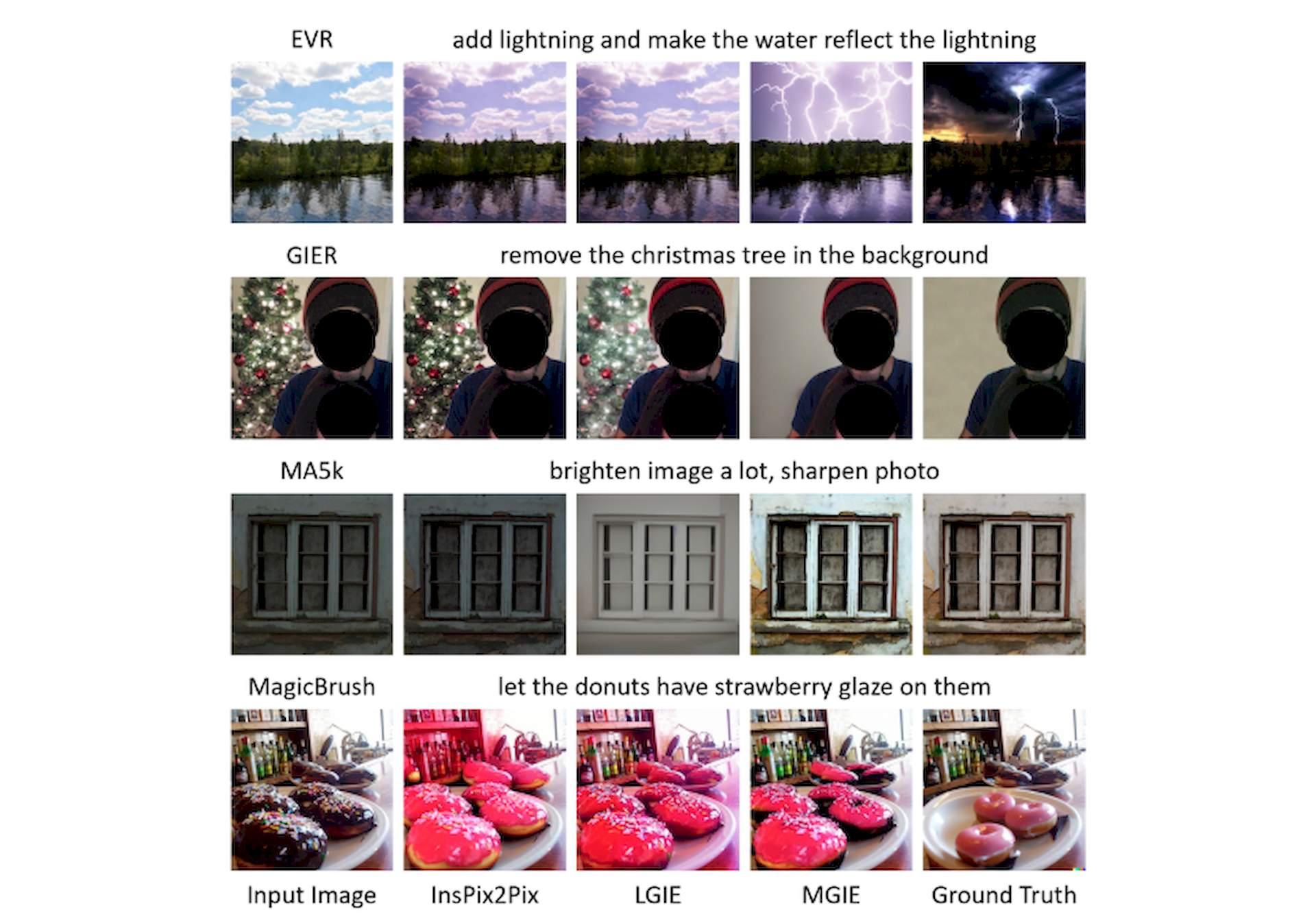

The model covers a broad range of edits: Photoshop-style modifications, global photo optimization (adjustments such as brightness or contrast applied to the whole image), and local editing of specific objects or regions. As a result, users can make substantial changes to an image with a single text command instead of first mastering a photo editor.

MGIE was developed by Apple together with a team of researchers from the University of California, Santa Barbara. The work is described in a research paper accepted at the International Conference on Learning Representations (ICLR) 2024, one of the leading venues for AI research. The paper reports that MGIE improves results on both automatic metrics and human evaluation while keeping inference efficiency competitive.

What is Apple MGIE?

Apple MGIE is an image editing system that uses machine learning to let people edit images with natural-language instructions. Users describe the change they want, and MGIE applies it automatically, with no need to navigate complex editing tools or menus.

Like other AI image tools such as Midjourney, Stable Diffusion, and DALL-E, which are best known for generating images from text prompts, Apple MGIE turns written instructions into visual results. Its focus, however, is modifying an existing image rather than creating one from scratch. Because it relies on multimodal learning, MGIE understands both visual information (the image itself) and textual information (the user's instructions), which allows it to make precise, pixel-level changes.

Apple MGIE could change how people edit images by offering a user-friendly and efficient way to enhance and manipulate them. Professional photographers, graphic designers, and social media influencers alike can use it to produce polished images without specialized editing skills.

How does Apple MGIE work?

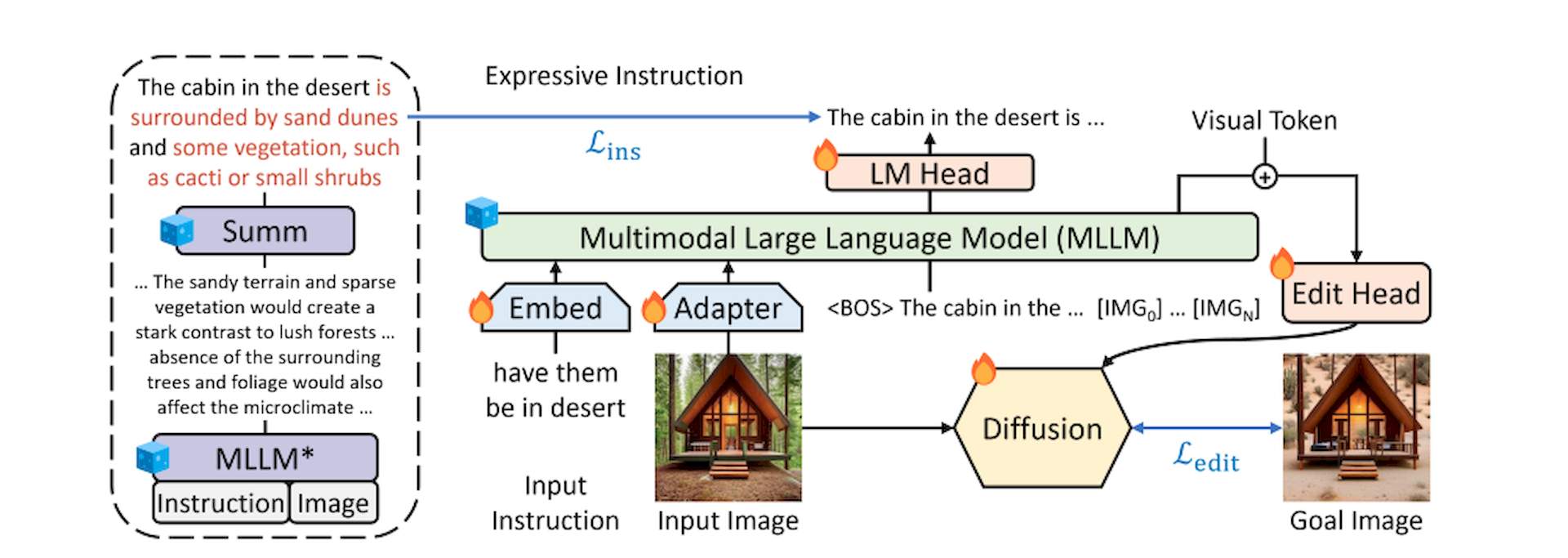

Apple MGIE pairs a multimodal large language model with a diffusion-based image editing model. The MLLM reads both the image and the user's instruction and turns a brief or ambiguous request into more explicit guidance, which then steers the editing model as it produces the modified image.

Here’s a breakdown of the MGIE workflow:

- Inputting commands: The user describes the desired edits in plain English, such as “Make the sky in this image bluer” or “Remove the red car from this photo”

- Understanding intent: MGIE’s advanced language model deciphers the user’s instructions, identifying the specific objects, attributes, and modifications desired

- Visual understanding: Simultaneously, MGIE analyzes the image, identifying key elements and their relationships

- Guided editing: Combining both linguistic and visual understanding, MGIE intelligently manipulates the image to accurately reflect the user’s commands. It doesn’t blindly follow instructions but can interpret context and make sensible adjustments
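The four steps above can be illustrated with a deliberately tiny, rule-based sketch. It is not MGIE's code and does not use its API: in MGIE the intent and visual stages are handled by a multimodal language model and the edit is rendered by a diffusion model. Here, every name (`understand_intent`, `understand_image`, `plan_edit`, `apply_edit`, `EditPlan`) is hypothetical, and the "image" is assumed to be a dictionary of named regions with one RGB colour each. The sketch only shows how linguistic and visual understanding combine into a grounded edit, including refusing an instruction that names something the image does not contain.

```python
from dataclasses import dataclass

# Toy "image" (an assumption for illustration): each named region maps to one
# (R, G, B) colour. A real image would be a pixel array with detected objects.
Image = dict[str, tuple[int, int, int]]

# Hypothetical vocabulary of colour edits this toy understands.
COLOUR_WORDS = {"redder": 0, "greener": 1, "bluer": 2}


@dataclass
class EditPlan:
    target: str   # region the instruction refers to, e.g. "sky"
    channel: int  # 0 = red, 1 = green, 2 = blue
    delta: int    # how much to raise that channel


def understand_intent(instruction: str) -> tuple[str, str]:
    """Stand-in for the language model: extract (target, attribute) from the text."""
    words = instruction.lower().rstrip(".").split()
    attribute = next((w for w in words if w in COLOUR_WORDS), None)
    if attribute is None:
        raise ValueError("no supported colour change in the instruction")
    # Naive assumption: the region is named right after the first "the".
    target = words[words.index("the") + 1]
    return target, attribute


def understand_image(image: Image) -> set[str]:
    """Stand-in for visual understanding: report which regions the image contains."""
    return set(image)


def plan_edit(instruction: str, image: Image, delta: int = 40) -> EditPlan:
    """Combine the linguistic and visual results into a concrete, grounded plan."""
    target, attribute = understand_intent(instruction)
    if target not in understand_image(image):
        # Refuse edits that refer to something the image does not contain.
        raise ValueError(f"no region named {target!r} in this image")
    return EditPlan(target, COLOUR_WORDS[attribute], delta)


def apply_edit(image: Image, plan: EditPlan) -> Image:
    """Guided editing: change only the targeted region and return a new image."""
    edited = dict(image)
    rgb = list(edited[plan.target])
    rgb[plan.channel] = min(255, rgb[plan.channel] + plan.delta)
    edited[plan.target] = (rgb[0], rgb[1], rgb[2])
    return edited


photo = {"sky": (120, 160, 200), "grass": (60, 140, 50)}
result = apply_edit(photo, plan_edit("Make the sky in this image bluer", photo))
print(result)  # {'sky': (120, 160, 240), 'grass': (60, 140, 50)}
```

Keeping planning separate from applying mirrors the workflow: the edit is decided from both the text and the image before any "pixels" change, and the original image is left untouched.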

The goal behind MGIE is to make image editing more accessible and efficient for everyone. Instead of learning a complex toolset, users describe what they want in plain language, which opens up new possibilities for creative expression and communication.

How to use Apple MGIE

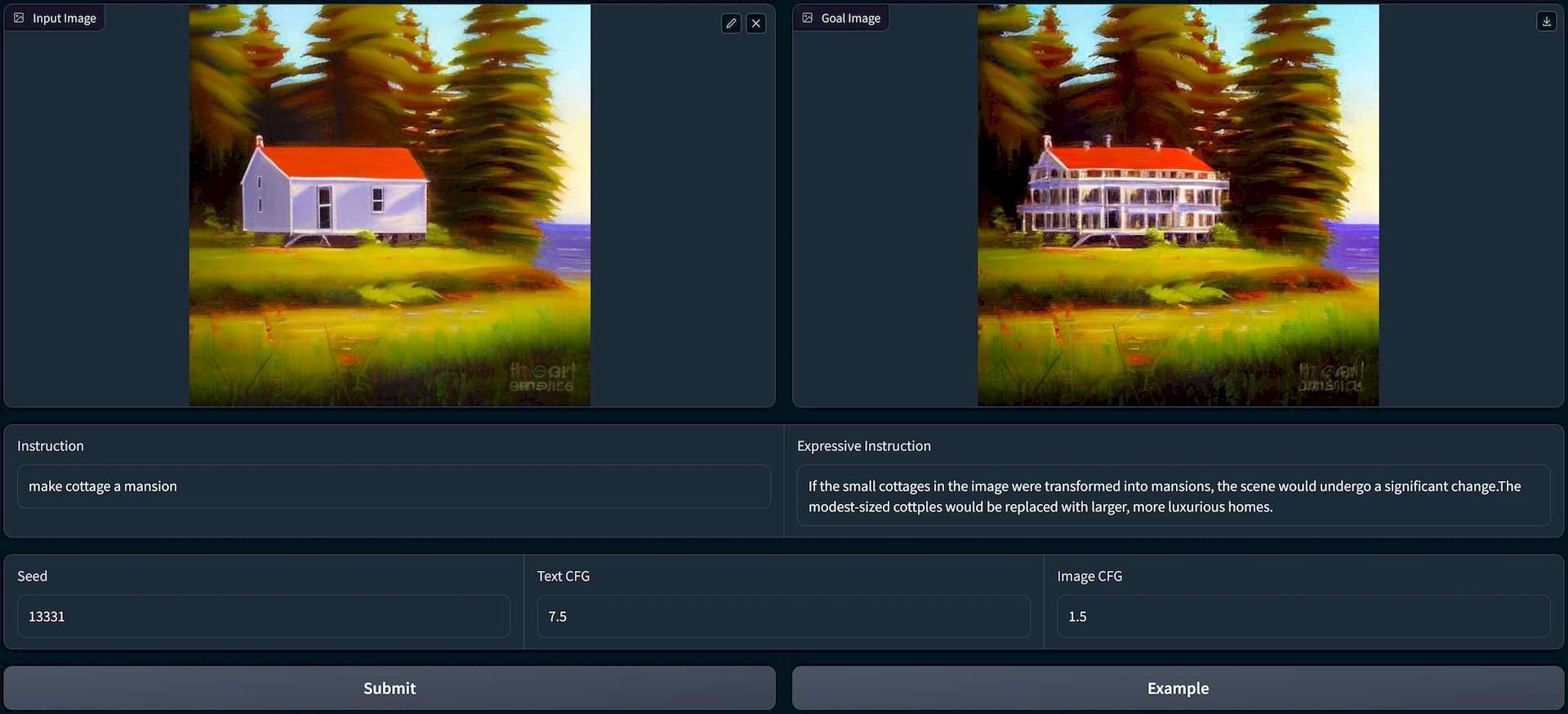

MGIE is available as an open-source project on GitHub, which includes its source code, training data, and pre-trained models. This lets developers and researchers study how it works and potentially contribute improvements. The GitHub project also provides a demo notebook that walks through various editing tasks using natural-language instructions, serving as a practical introduction to MGIE's capabilities.

For a quicker way to try MGIE, users can experiment with a web demo hosted on Hugging Face Spaces, which requires no local setup.

Editing with MGIE can also be iterative: if a result is not quite right, users can refine it with a follow-up instruction or request a different modification altogether. This back-and-forth helps the final edit match the user's artistic vision.
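The refine-or-retry loop described above can be sketched in a few lines. This is a hypothetical illustration, not MGIE's interface: `toy_edit` stands in for a single MGIE call and only understands "brighten <region>" or "darken <region>", and the "image" is assumed to be a dictionary of region brightness values. The point is the control flow: each edit takes the previous result as input, and every version is kept so the user can branch from an earlier one.

```python
from typing import Callable

# Toy "image" (an assumption for illustration): region name -> brightness 0..255.
Image = dict[str, int]
Editor = Callable[[Image, str], Image]


def toy_edit(image: Image, instruction: str) -> Image:
    """Hypothetical stand-in for one MGIE call, e.g. "brighten sky" or "darken face"."""
    verb, region = instruction.lower().split()[:2]
    step = {"brighten": 30, "darken": -30}[verb]
    edited = dict(image)  # never modify the caller's image in place
    edited[region] = max(0, min(255, edited[region] + step))
    return edited


def refine(image: Image, instructions: list[str], editor: Editor = toy_edit) -> list[Image]:
    """Apply instructions in sequence, feeding each result into the next edit.

    Every intermediate version is kept, so the user can return to an earlier
    step and request a different modification instead.
    """
    history = [image]
    for instruction in instructions:
        history.append(editor(history[-1], instruction))
    return history


versions = refine({"sky": 100, "face": 90}, ["brighten sky", "brighten face", "darken sky"])
print(versions[-1])  # {'sky': 100, 'face': 120}

# Not happy with step 2? Branch from version 1 and try something else.
alternative = refine(versions[1], ["darken face"])
print(alternative[-1])  # {'sky': 130, 'face': 60}
```

Passing the editor in as a parameter keeps the loop independent of any particular model, so the same pattern would apply whether the edit comes from a local checkout or a hosted demo.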

MGIE is still under development, but open-sourcing the project makes it accessible to a wide range of users and contributors. Ongoing research and community contributions will shape its future capabilities and applications, making it an exciting and rapidly evolving technology in image editing.

Featured image credit: pvproductions/Freepik.