Google DeepMind has announced Genie 2, a model that generates playable 3D game worlds from a single reference image, which can itself be created from a text prompt. It builds on the first Genie model, which created 2D environments. Genie 2 lets users interact with the generated worlds in real time, making it a tool for creating diverse, interactive experiences.

Google’s DeepMind unveils Genie 2 for 3D game worlds

Genie 2 is designed to construct immersive virtual worlds by simulating animations, physics, and interactions. It starts from an image, and because that image can be generated from a simple text prompt, users are not limited to existing artwork. For instance, a user might request “a cyberpunk Western,” and Genie 2 will produce a corresponding environment. The approach uses generative AI to widen what can be created in virtual spaces.

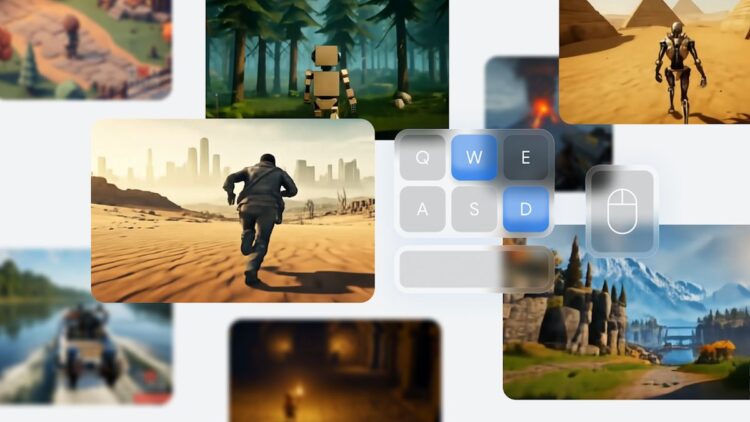

The model works in two steps. It first needs a reference image, which can be generated from text. From that image, Genie 2 extrapolates a complete interactive world. In demonstrations, players moved through these worlds with conventional keyboard controls such as the WASD layout.
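The two-step flow can be illustrated with a minimal Python sketch. Genie 2 has no public API, so every name here (`Frame`, `text_to_image`, `next_frame`, `play`) is a hypothetical stand-in, and the stubs simply track a position instead of generating pixels. The sketch only shows the shape of the loop: text becomes a reference image, and each key press then conditions the next generated frame.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical placeholder for one generated frame. A real system would
# hold pixel data; here a text label and a position stand in for it.
@dataclass(frozen=True)
class Frame:
    description: str
    x: int = 0
    y: int = 0


# WASD keys mapped to (dx, dy) movement. This mapping is an assumption
# made for illustration only.
KEY_TO_MOVE = {"W": (0, 1), "A": (-1, 0), "S": (0, -1), "D": (1, 0)}


def text_to_image(prompt: str) -> Frame:
    """Step 1 (stub): a text-to-image model would produce the reference image."""
    return Frame(description=prompt)


def next_frame(history: List[Frame], key: str) -> Frame:
    """Step 2 (stub): a world model would predict the next frame from the
    frames so far and the key pressed. This stub only moves the position."""
    dx, dy = KEY_TO_MOVE[key]
    last = history[-1]
    return Frame(last.description, last.x + dx, last.y + dy)


def play(prompt: str, keys: str) -> List[Frame]:
    """Run the two steps: build a reference frame, then roll out one frame per key."""
    frames = [text_to_image(prompt)]
    for key in keys:
        frames.append(next_frame(frames, key))
    return frames


if __name__ == "__main__":
    for frame in play("a cyberpunk Western", "WWD"):
        print(frame)
```

Because each new frame depends on the frames before it, small errors can accumulate over a long rollout, which is one plausible reason why generated worlds are hard to keep consistent.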

Despite these advancements, Genie 2 has notable limitations. The model’s consistency degrades after approximately 20 seconds, and the longest simulations last up to a minute. This inconsistency may stem from its ability to produce “counterfactuals”: from the same starting frame, the model can generate different outcomes depending on which actions the player takes, which makes it harder to keep the world coherent over time.

Genie 2 shines in its capacity to accommodate various perspectives, such as first-person or isometric views. It also incorporates elements like realistic water effects and environmental interactions. In one demonstration, a character interacts with a balloon, showcasing physics, gravity, and other dynamics. However, Google has not disclosed specifics such as rendering resolution or polygon counts.

The capabilities of Genie 2 extend beyond user-controlled play. The model can also simulate AI characters that interact within the generated environments. Google illustrated this by showing an AI agent carrying out text instructions inside a generated world. Such features hint at the potential for AI-driven NPCs that behave realistically in future gaming applications.

Legal and ethical questions arise regarding the training data for Genie 2. It has been suggested that the training data may include gameplay videos sourced from platforms like YouTube, raising intellectual property concerns if generated worlds resemble copyrighted material. These issues may draw legal scrutiny of how generative AI uses existing content.

Because generated worlds stay coherent only briefly, Genie 2 may not yet offer a complete gaming experience. DeepMind envisions the model as a resource for research and development rather than a basis for full-fledged gaming products. The focus is on prototyping interactive experiences and evaluating AI agents in simulated environments.

Google has not yet shared specifics on a public release, commercial applications, or the computing resources Genie 2 requires.

Featured image and video credits: Google DeepMind