The news comes amid a rise in AI-driven attacks on Gmail users. With an estimated 2.5 billion Gmail accounts worldwide, it's easy to see why the service is such an attractive target for hackers, and their attacks are increasingly hard to spot. Recently, scammers have posed as Google support, falsely reporting account recovery attempts or other suspicious activity. Their goal is to scare users into handing over sensitive information.

How AI is powering the latest Gmail attacks

Scams are becoming more sophisticated, often combining phishing emails, fake account recovery requests, and phone calls that purport to come from Google. One example is Sam Mitrovic, a Microsoft solutions consultant who almost fell victim to an AI-generated scam. Mitrovic described how fake notifications first sent him a link designed to hijack his account, and then a hacker posing as Gmail support phoned him to convince him that his account had been compromised.

In another case, venture capitalist Garry Tan was targeted by scammers who falsely claimed that a family member had submitted a death certificate in order to gain access to his account. Here, AI helped the attackers write convincing dialogue aimed at extracting personal information. Still, inconsistencies in the scam, such as suspicious recovery details, alerted Tan that it was fraudulent.

Common tactics in AI-driven phishing scams

- Fake Google Support calls: Hackers pose as Google representatives and call to "verify" suspicious activity on your account, or feign urgency to get you to share a code sent by text. They claim the code is for a password reset, but it actually gives them access to your account. The calls are convincing, and AI-generated voices make them even more so.

- Abusing Google Forms: Scammers sometimes use Google Forms to mimic a genuine account recovery tool. Because Google Forms is part of Google Workspace, this lends their attacks an air of legitimacy and makes it harder for users to recognize the scam.

- AI-generated dialogues: AI can make conversations with scammers sound genuine by mimicking natural interactions. Even so, odd phrasing or unnaturally precise pronunciation can reveal that the call was generated by AI.

New anti-scam initiative from Google

To address these ever-changing threats, Google joined the Global Anti-Scam Alliance (GASA) and the DNS Research Federation to create the Global Signal Exchange. The initiative aims to share scam intelligence, signals, and insights across platforms to strengthen fraud detection and prevention at scale. Separately, Google's Advanced Protection Program, which now supports passkeys, provides extra layers of security for high-risk users such as politicians and journalists.

Protecting yourself from AI scams

- Verify sources: Always double-check phone numbers, emails, or forms that claim to be from Google. Never click links or provide credentials without first verifying that the request is authentic.

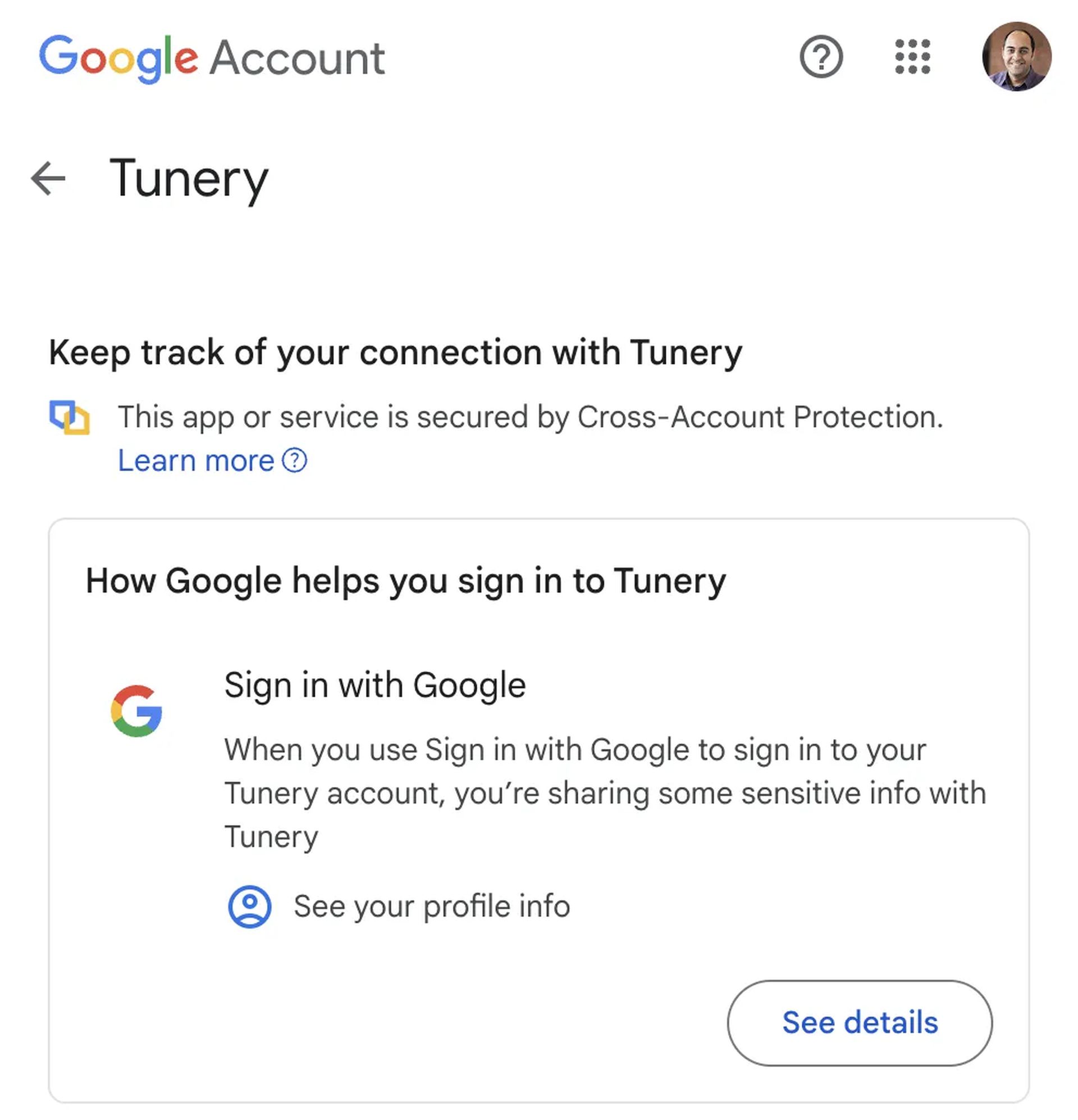

- Use Google's Advanced Protection Program: This program is designed for Gmail users at high risk of targeted phishing attacks. It limits third-party app access and uses passkeys for secure sign-in.

- Stay calm: Attackers exploit a sense of urgency to push users into impulsive decisions. Take a moment before clicking anything or sharing personal information.

Advanced AI-driven attacks are the new challenge in cybersecurity, but by staying alert and keeping your protections in place, you can keep your Gmail account safe.

Image credits: Google Blog