The release of CogVideoX-5B marks a notable shift in AI video generation. Developed by researchers from Tsinghua University and Zhipu AI, the model could change how videos are made and reshape the digital content landscape. So what is CogVideoX-5B, and why is it attracting so much attention?

What sets CogVideoX-5B apart is its combination of accessibility and capability. From a plain text prompt, it can generate high-quality videos up to six seconds long. Because the model is open source, developers anywhere can download, run, and build on it, which lowers the barrier to AI video creation for everyone.

Trying out CogVideoX-5B. https://t.co/e3bNKp3adp

A fluffy white kitten, with a pink ribbon tied around its neck, plays on a cushion by a sunlit window, its soft fur glowing in the light. The scene begins from a slight distance, gradually zooming in as the kitten bats a small… pic.twitter.com/1x1y3mqFA6

— 布留川英一 / Hidekazu Furukawa (@npaka123) August 28, 2024

What makes CogVideoX-5B work?

CogVideoX-5B has 5 billion parameters and produces videos at 720×480 resolution and 8 frames per second. It is not the strongest video generator available, but its output quality is solid, which is especially notable for an open-source model.
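These headline numbers fit together with simple arithmetic: at 8 frames per second, six seconds of video is about 48 frames of 720×480 pixels each. The sketch below makes that concrete. The 49-frame count it uses is the default in the public CogVideoX release, an assumption not stated in this article, and it works out to just over six seconds.

```python
# Arithmetic relating CogVideoX-5B's output specs (resolution, frame rate, length).
WIDTH, HEIGHT = 720, 480  # output resolution, per the article
FPS = 8                   # output frame rate, per the article
NUM_FRAMES = 49           # assumption: default frame count of the public release


def clip_seconds(num_frames: int, fps: int) -> float:
    """Playback length of a clip with `num_frames` frames shown at `fps`."""
    return num_frames / fps


def total_pixels(num_frames: int, width: int, height: int) -> int:
    """Number of pixels the model must produce across the whole clip."""
    return num_frames * width * height


print(f"{NUM_FRAMES} frames at {FPS} fps = {clip_seconds(NUM_FRAMES, FPS)} s")
# -> 49 frames at 8 fps = 6.125 s
print(f"pixels per clip: {total_pixels(NUM_FRAMES, WIDTH, HEIGHT):,}")
# -> pixels per clip: 16,934,400
```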

Several technical choices underpin CogVideoX-5B’s results. The model uses a 3D Variational Autoencoder (VAE) to compress video across both space and time, so the generator works on a much smaller representation and can produce high-quality output more efficiently. It also uses an “expert transformer” with adaptive LayerNorm, which helps the model align the text prompt with the video more closely, resulting in more accurate and coherent videos.
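Both ideas in this paragraph can be sketched in a few lines of plain Python. The first function computes the latent size a 3D VAE produces; the compression factors it uses (4× in time, 8× in each spatial dimension, 16 latent channels, with the first frame encoded on its own) are those reported for CogVideoX’s causal 3D VAE and are assumptions here, not figures from this article. The remaining functions show the general adaptive-LayerNorm pattern (normalize, then apply a shift and scale supplied by a conditioning signal) with separate “expert” shift/scale pairs for text tokens and video tokens. In the real model those values are predicted by learned layers from the diffusion timestep, per the CogVideoX paper; here they are simply passed in, and every function name is hypothetical.

```python
import math


# --- 3D VAE compression (assumed factors: 4x time, 8x8 space, 16 channels) ---
def latent_shape(frames, height, width, t_ratio=4, s_ratio=8, channels=16):
    """Return (latent_frames, channels, latent_height, latent_width)."""
    if (frames - 1) % t_ratio or height % s_ratio or width % s_ratio:
        raise ValueError("input size does not divide evenly by the compression factors")
    # Causal VAE: the first frame is kept, later frames are grouped t_ratio at a time.
    latent_frames = 1 + (frames - 1) // t_ratio
    return (latent_frames, channels, height // s_ratio, width // s_ratio)


# --- Adaptive LayerNorm with separate "expert" modulation per token type ---
def layer_norm(x, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (no learned affine)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def ada_layer_norm(x, shift, scale):
    """LayerNorm whose shift and scale come from a conditioning signal."""
    return [n * (1 + s) + b for n, s, b in zip(layer_norm(x), scale, shift)]


def expert_ada_layer_norm(tokens, is_text, text_mod, video_mod):
    """Modulate text tokens with text_mod and video tokens with video_mod.

    Each *_mod is a (shift, scale) pair of vectors with the token width.
    """
    out = []
    for token, text in zip(tokens, is_text):
        shift, scale = text_mod if text else video_mod
        out.append(ada_layer_norm(token, shift, scale))
    return out


print(latent_shape(49, 480, 720))  # -> (13, 16, 60, 90)
```

Under these assumptions, a 49-frame 720×480 clip becomes a latent of 13 × 16 × 60 × 90 = 1,123,200 values, versus 49 × 480 × 720 × 3 = 50,803,200 RGB values, roughly 45× fewer. That reduction is why generating in latent space is so much cheaper.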

Releasing CogVideoX-5B as open source is a significant move for AI. The researchers at Tsinghua University and Zhipu AI have published both the code and the model weights, giving others direct access to advanced video generation technology. Developers can now experiment with AI-generated video content themselves, and this open approach could lead to new tools and applications across many industries.

Created by CogVideoX-5B! pic.twitter.com/Y22zcg8fBA

— F-AI (@faiAI0) August 28, 2024

CogVideoX-5B: How it compares and who made it

CogVideoX-5B isn’t the first text-to-video model, but it is proving to be one of the most influential. In the developers’ evaluations, it outperformed competitors such as VideoCrafter-2.0 and OpenSora, a result they attribute to the new techniques behind the model. With it, researchers from Tsinghua University and Zhipu AI have created a tool that could change how digital content is produced and consumed.

How to get started with CogVideoX-5B

You can use and experiment with the CogVideoX-5B model for free. Here’s a simple guide to getting started:

- Visit the GitHub repository: The CogVideoX-5B code is on GitHub, and the repository links to the model weights. Download both to your computer.

- Set up your environment: Install the dependencies listed in the repository, which include specific versions of Python and libraries such as PyTorch.

- Run the model: Input text prompts and generate videos using the instructions in the repository.

- Experiment and innovate: Once you know the basics, try different text prompts to see what the model can do.
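For readers who prefer a short script to the repository’s instructions, the Hugging Face `diffusers` library provides a `CogVideoXPipeline` that can load the released weights. The sketch below assumes a recent `diffusers` release, `torch`, and a CUDA GPU. The model ID `THUDM/CogVideoX-5b` and the sampling values (50 steps, guidance 6.0, 49 frames) follow the public model card at release but should be treated as assumptions, and `build_generation_kwargs` is a hypothetical helper, not part of either library.

```python
MODEL_ID = "THUDM/CogVideoX-5b"  # assumption: Hugging Face model ID used at release


def build_generation_kwargs(prompt: str, steps: int = 50) -> dict:
    """Hypothetical helper: collect the sampling settings for the pipeline call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "prompt": prompt,
        "num_inference_steps": steps,  # more steps: slower, often cleaner output
        "num_frames": 49,              # roughly 6 s at 8 fps
        "guidance_scale": 6.0,         # how strongly sampling follows the prompt
    }


def generate(prompt: str, out_path: str = "output.mp4", seed: int = 42) -> str:
    """Generate one clip and save it as an MP4 (needs a CUDA GPU)."""
    # Heavy imports are deferred so the helper above works without torch installed.
    import torch
    from diffusers import CogVideoXPipeline
    from diffusers.utils import export_to_video

    pipe = CogVideoXPipeline.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)
    pipe.enable_model_cpu_offload()  # keep idle submodules on the CPU to save GPU memory
    pipe.vae.enable_tiling()         # decode frames tile by tile to save memory
    generator = torch.Generator(device="cuda").manual_seed(seed)  # fixed seed: repeatable output
    frames = pipe(**build_generation_kwargs(prompt), generator=generator).frames[0]
    export_to_video(frames, out_path, fps=8)
    return out_path
```

On a machine with a CUDA GPU and `torch` plus `diffusers` installed, call `generate("A fluffy white kitten plays on a cushion by a sunlit window.")`. Deferring the heavy imports into `generate` keeps the settings easy to inspect and test without a GPU; the repository’s own scripts may organize this differently.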

CogVideoX-5B (txt2vid) has been added to the free Blender add-on, Pallaidium: #b3d pic.twitter.com/ynBupL2TKT

— tintwotin (@tintwotin) August 27, 2024

How to try CogVideoX-5B online

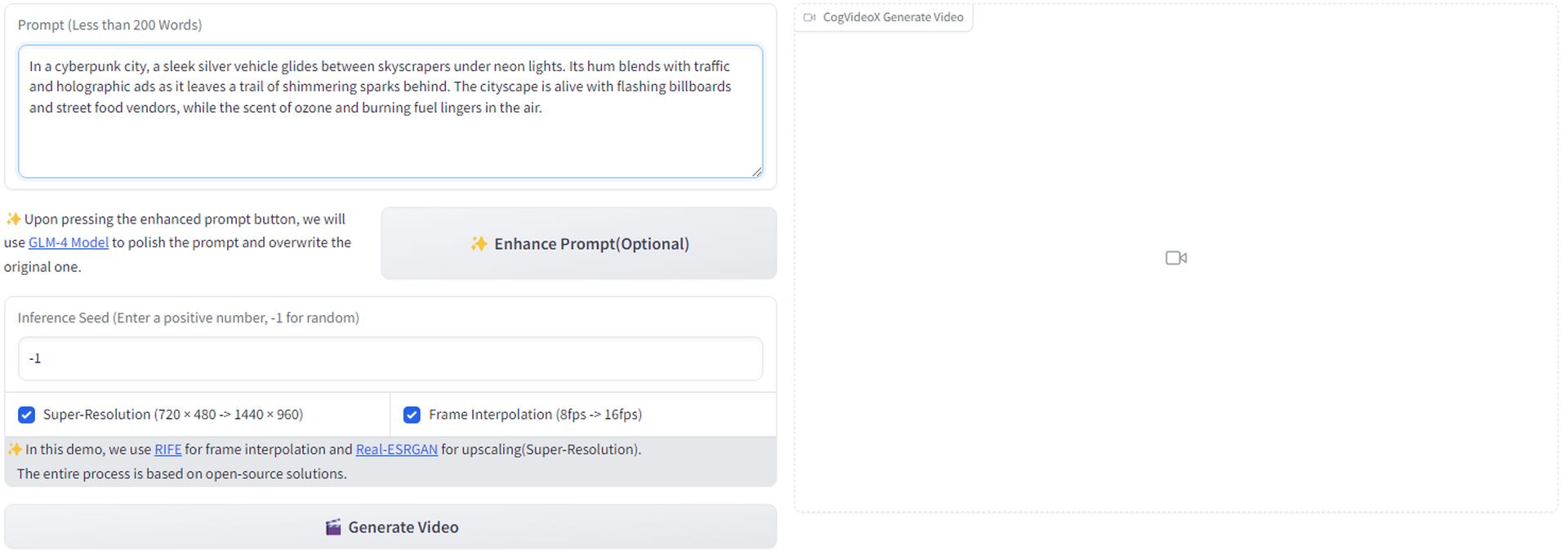

If you don’t want to download CogVideoX-5B, you can try it online through Hugging Face. Here’s a guide on how to use the demo:

- Visit the demo page: Go to the CogVideoX-5B Hugging Face Space.

- Enter your text prompt: In the “Prompt” box, describe the video you want to generate. Keep it under 200 words for the best results.

- Enhance your prompt (optional): Click “Enhance Prompt” to have the demo polish your input. Note that the enhanced version overwrites your original prompt.

- Set an inference seed (optional): To control the randomness of video generation, enter a positive number in the “Inference Seed” box. If you prefer a random seed, leave the value at -1.

- Enable additional features (optional):

  - Super-Resolution: Check this box to upscale the video from 720×480 to 1440×960.

  - Frame Interpolation: Enable this to double the frame rate from 8 FPS to 16 FPS for smoother motion.

- Generate your video: When you’re done, click “Generate Video.” The model will make a short video based on your prompt.

- Review the video: Once generated, preview the video on the page. Adjust inputs and try again to get your desired result.
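The demo options above combine in a predictable way: the seed either stays fixed or is drawn at random, and each optional feature doubles one property of the output. The sketch below models those rules as a small Python dataclass so the resulting resolution and frame rate are explicit. `DemoSettings` and its fields are hypothetical names for illustration, not the Space’s actual code; the numbers come from the steps listed above.

```python
import random
from dataclasses import dataclass

BASE_RESOLUTION = (720, 480)  # width, height of the model's native output
BASE_FPS = 8
MAX_PROMPT_WORDS = 200        # the demo recommends staying under this length


@dataclass
class DemoSettings:
    """Hypothetical mirror of the demo's options (not the Space's real code)."""
    prompt: str
    seed: int = -1                     # -1 means "use a random seed"
    super_resolution: bool = False     # 720x480 -> 1440x960
    frame_interpolation: bool = False  # 8 fps -> 16 fps

    def prompt_ok(self) -> bool:
        """True if the prompt is non-empty and under the recommended length."""
        return 0 < len(self.prompt.split()) < MAX_PROMPT_WORDS

    def resolved_seed(self) -> int:
        """A positive seed is used as-is (repeatable); -1 draws a fresh one."""
        if self.seed == -1:
            return random.randint(1, 2**31 - 1)
        return self.seed

    def output_resolution(self) -> tuple:
        width, height = BASE_RESOLUTION
        return (width * 2, height * 2) if self.super_resolution else (width, height)

    def output_fps(self) -> int:
        return BASE_FPS * 2 if self.frame_interpolation else BASE_FPS


settings = DemoSettings("A kitten bats a ribbon on a sunlit cushion.", seed=42,
                        super_resolution=True, frame_interpolation=True)
print(settings.output_resolution(), settings.output_fps())  # -> (1440, 960) 16
```

Reusing the same seed with the same prompt and settings is what makes a result you like reproducible; leaving it at -1 is better for exploring variations.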

CogVideoX-5B and similar products

CogVideoX-5B joins a growing group of AI models that are changing what’s possible in digital content creation. Other notable options include Runway’s video generation tools, Luma AI, VideoCrafter2, and Pika Labs. Each has its strengths, but CogVideoX-5B is open source, which makes it easier to access and lets more people contribute to its development.

CogVideoX-5B is an important step forward in AI-generated video. Its open-source approach puts it in more hands and invites continued improvement from the community. As more people start using it, video creation is likely to become more diverse, dynamic, and accessible.

Featured image credit: CogVideoX