Fine-tuning is now available for GPT-4o, letting developers customize the model with their own data to improve its performance on specific tasks and applications.

Today, OpenAI announced the availability of fine-tuning for GPT-4o, a feature that developers have eagerly awaited. Developers can now fine-tune the model on their own datasets, which can produce more accurate and contextually appropriate responses. With this capability, they can adjust the structure and tone of GPT-4o’s responses and teach it to follow specific instructions, making it a versatile tool for a wide range of applications. OpenAI is also offering 1 million free training tokens per day to every organization until September 23, making it easier for developers to start exploring the feature.

OpenAI Fine-Tuning GPT-4o: Tailored AI for better results

With the introduction of fine-tuning for GPT-4o, developers can now customize the model’s performance for their specific needs. Whether the task involves coding, creative writing, or another specialized domain, GPT-4o can be trained to perform better and more cost-effectively. Developers can achieve strong results with as few as a few dozen examples in their training datasets, which makes fine-tuning both accessible and powerful for a variety of use cases.
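To make "a few dozen examples" concrete, here is a minimal sketch of assembling and sanity-checking a small training set. The article does not describe the file format, so this assumes the chat-style JSONL layout (one JSON object per line, each holding a `messages` list of role/content pairs). The helper names `make_example`, `to_jsonl`, and `validate_jsonl` are hypothetical and not part of any OpenAI library.

```python
import json

# Assumption: each training example is a chat transcript with these roles.
ALLOWED_ROLES = {"system", "user", "assistant"}


def make_example(system_prompt, user_text, assistant_text):
    """Build one training example in the assumed chat-style layout."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def to_jsonl(examples):
    """Serialize examples as JSONL: one JSON object per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)


def validate_jsonl(text):
    """Return the number of well-formed examples; raise ValueError otherwise."""
    count = 0
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)  # malformed JSON raises a ValueError subclass
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            raise ValueError(f"line {line_no}: missing or empty 'messages' list")
        for message in messages:
            if not isinstance(message, dict):
                raise ValueError(f"line {line_no}: message is not an object")
            if message.get("role") not in ALLOWED_ROLES:
                raise ValueError(f"line {line_no}: unexpected role")
            if not isinstance(message.get("content"), str):
                raise ValueError(f"line {line_no}: content must be a string")
        if messages[-1]["role"] != "assistant":
            raise ValueError(f"line {line_no}: last message should be the assistant reply")
        count += 1
    return count


# Illustrative data only; a real dataset would hold a few dozen or more examples.
examples = [
    make_example("Answer in one short sentence.", "What is JSONL?",
                 "JSONL is a text format with one JSON object per line."),
    make_example("Answer in one short sentence.", "What is fine-tuning?",
                 "Fine-tuning adapts a pretrained model using task-specific examples."),
]
print(validate_jsonl(to_jsonl(examples)), "examples validated")
```

Checking the file locally before uploading it catches structural mistakes, such as an example that does not end with an assistant reply, before any training tokens are spent.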

Fine-tuning GPT-4o isn’t just about improving accuracy; it’s about making the model more effective overall. For instance, developers can fine-tune GPT-4o to follow complex domain-specific instructions or to produce output in particular formats. This ability to customize the model’s behavior is particularly useful for applications that require precise and consistent responses. By training GPT-4o on specific datasets, developers can help the model understand and respond appropriately to nuanced requests, making it a more reliable tool in professional environments.

Real-world applications of GPT-4o fine-tuning

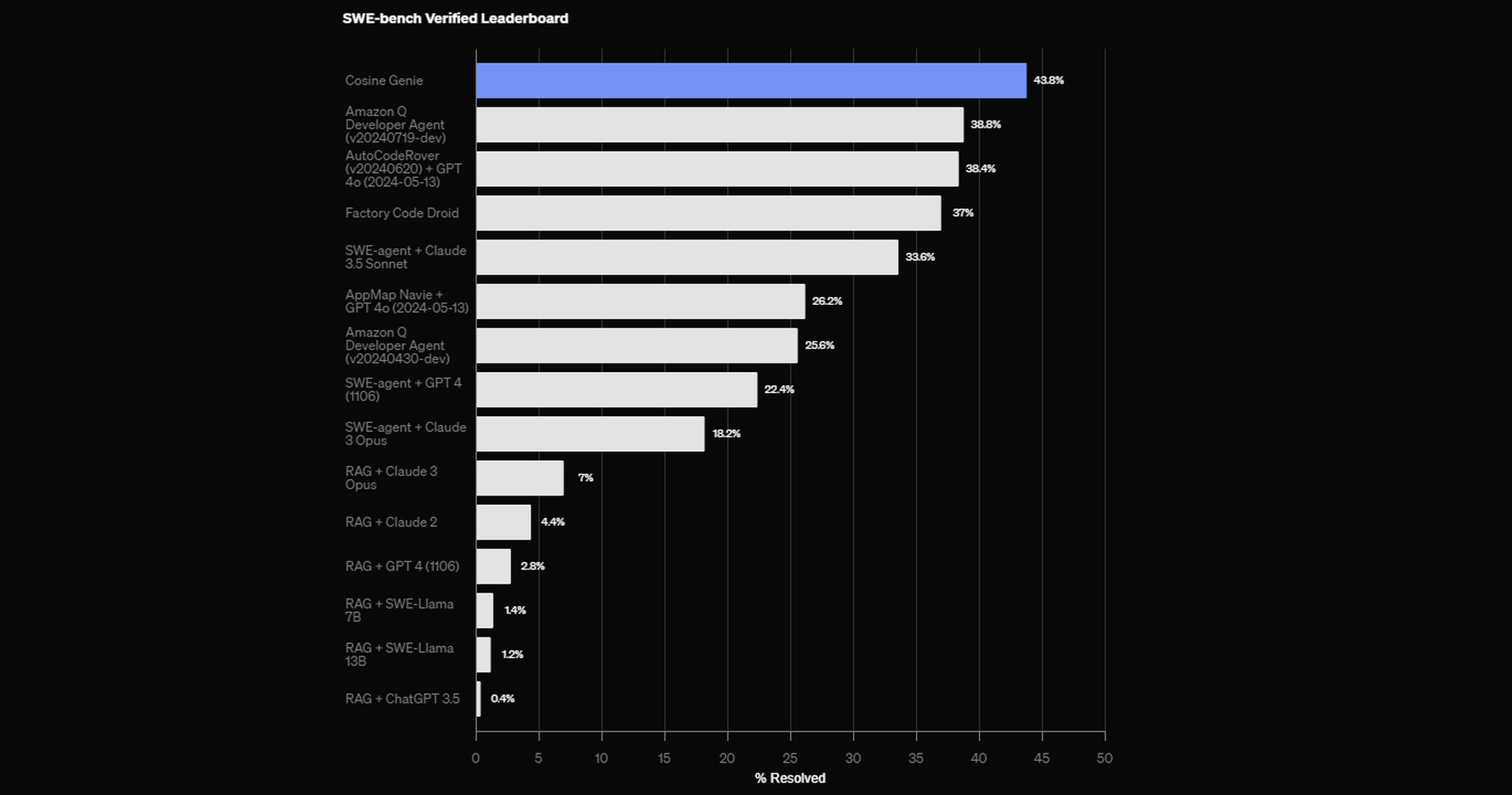

The impact of OpenAI Fine-Tuning on GPT-4o is already being seen in real-world applications. One example is Genie, Cosine’s AI software engineering assistant that uses a fine-tuned GPT-4o model. By training the model on real examples of software engineers at work, Cosine was able to improve Genie’s performance. The fine-tuned model achieved a state-of-the-art (SOTA) score of 43.8% on the SWE-bench Verified benchmark, the highest score on the leaderboard.

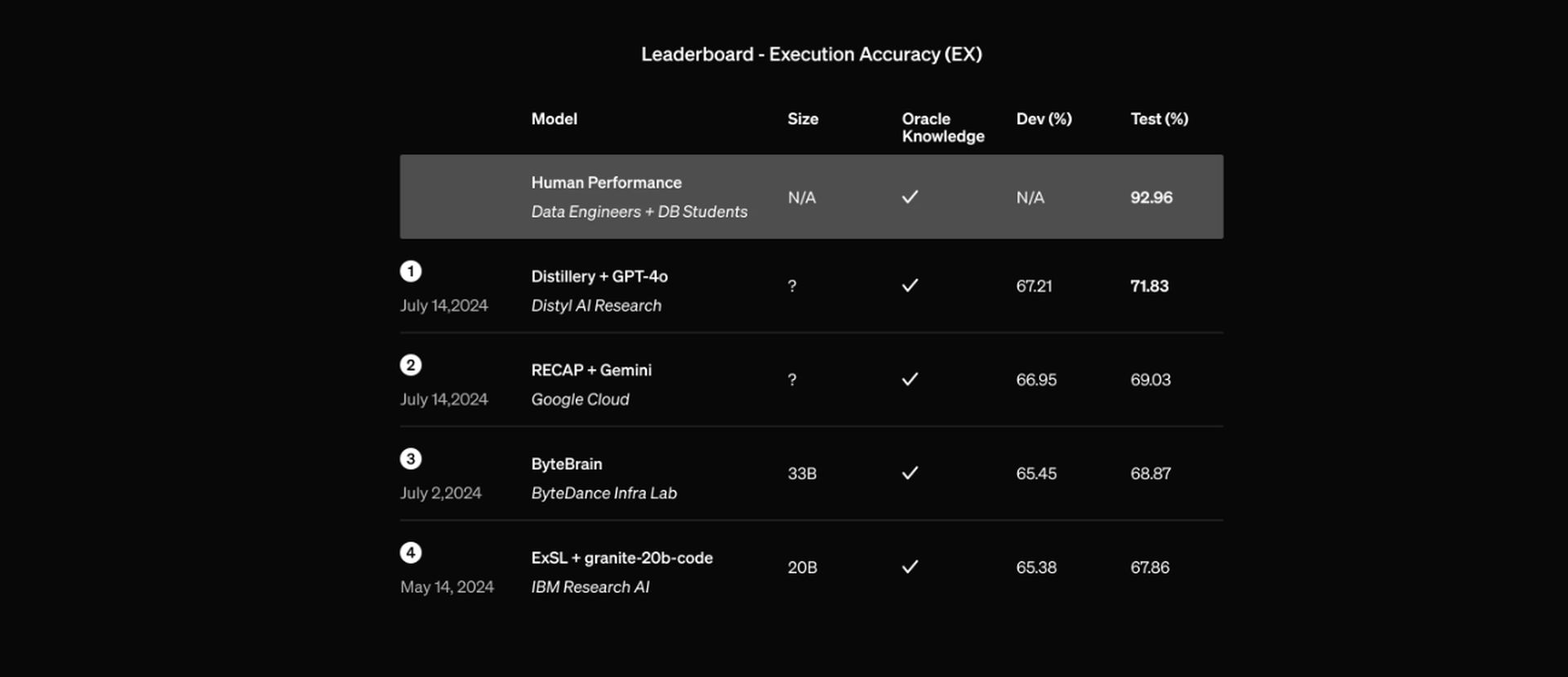

Another success story comes from Distyl, an AI solutions partner to Fortune 500 companies. By fine-tuning GPT-4o, Distyl achieved first place in the BIRD-SQL benchmark, the leading text-to-SQL benchmark. The fine-tuned model achieved an execution accuracy of 71.83%, excelling in tasks such as query reformulation and SQL generation.

Getting started with GPT-4o Fine-Tuning

Fine-tuning for GPT-4o is available to all developers on paid usage tiers. To get started, developers can visit the fine-tuning dashboard, select the GPT-4o base model from the drop-down menu, and begin the training process. Training costs $25 per million tokens, and inference on a fine-tuned model is priced at $3.75 per million input tokens and $15 per million output tokens. GPT-4o mini fine-tuning is also available, with 2 million free training tokens per day until September 23.
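The prices above lend themselves to a quick back-of-the-envelope estimate. The sketch below uses only the GPT-4o figures quoted in this article. The function names are hypothetical, and treating the free daily allowance as a simple subtraction for a job that runs within a single day is an assumption, not a statement of how OpenAI bills.

```python
# GPT-4o prices quoted in the article, in USD per million tokens.
TRAINING_USD_PER_MILLION = 25.00
INPUT_USD_PER_MILLION = 3.75
OUTPUT_USD_PER_MILLION = 15.00

TOKENS_PER_MILLION = 1_000_000


def training_cost(training_tokens, free_tokens=0):
    """Estimate training cost in USD.

    Assumption: free_tokens (for example the 1 million free daily tokens
    offered until September 23) is simply subtracted from the total.
    """
    billable = max(0, training_tokens - free_tokens)
    return billable / TOKENS_PER_MILLION * TRAINING_USD_PER_MILLION


def inference_cost(input_tokens, output_tokens):
    """Estimate inference cost in USD for a fine-tuned GPT-4o model."""
    return (
        input_tokens / TOKENS_PER_MILLION * INPUT_USD_PER_MILLION
        + output_tokens / TOKENS_PER_MILLION * OUTPUT_USD_PER_MILLION
    )


# Hypothetical workload: 3M training tokens, then 2M input and 0.5M output tokens.
print(f"training, no free tokens:    ${training_cost(3_000_000):.2f}")
print(f"training, 1M free tokens:    ${training_cost(3_000_000, free_tokens=1_000_000):.2f}")
print(f"inference, 2M in / 0.5M out: ${inference_cost(2_000_000, 500_000):.2f}")
```

For this hypothetical workload, training comes to $75.00 without the free allowance and $50.00 with it, and inference comes to $15.00.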

One of the key considerations with OpenAI Fine-Tuning is data privacy and safety. OpenAI ensures that fine-tuned models remain under the full control of the developers, with all business data, including inputs and outputs, remaining private. This means that data used for fine-tuning is never shared or used to train other models. Additionally, OpenAI has implemented layered safety measures to monitor and evaluate the use of fine-tuned models, ensuring they adhere to usage policies and are not misused.

Overall, the introduction of OpenAI Fine-Tuning for GPT-4o is good news for developers looking to improve the performance and accuracy of their AI models. OpenAI allows developers to customize the model to their specific needs, making it easier to build more effective and reliable AI applications. With the added assurance of data privacy and security, developers can safely explore the possibilities of fine-tuning to achieve the best possible results with GPT-4o.

Featured image credit: OpenAI Edit: Furkan Demirkaya