The main database design factors that are required for Large Language Model (LLM) training and optimization are examined in this article. It emphasizes how large datasets and carefully designed databases are required for effective development of LLMs. Important topics covered in the essay include scalability, which includes partitioning methods and horizontal and vertical scaling approaches that are essential for efficiently managing massive amounts of data. It also discusses performance optimization techniques like caching and indexing, which are essential for maximizing throughput and reducing latency throughout the iterative training procedures of the LLMs.

To understand and generate human-like text, Large Language Models (LLMs) such as OpenAI’s GPT-4, Google Gemini, and BERT have revolutionized the computer and machine in the field of Artificial Intelligence. Massive datasets are required in the training and fine-tuning of these models and also meticulously designed databases to efficiently process, retrieve, and handle such data. There are some key critical database design considerations necessary for these LLMs model training and fine-tuning. Training and the subsequent fine-tuning directly correlates to the performance of these LLMs.

There are 6 variables to optimize for a better performing LLM.

- Scalability

- Performance

- Data variety

- Data integrity

- Data security

- Cost management

1. Scalability

In the fundamental requirements of database design for training and fine-tuning such LLM models, scalability is one of them, due to the extensive demands for data these models impose. To support the computational needs of LLMs, the data is reaching hundreds of terabytes or even petabytes, databases must efficiently manage and process such massive data. Here, we will explore the strategies of scalability crucial for handling such massive data or datasets. Mainly focusing on the strategies of horizontal and vertical scaling and partitioning.

1.1 Horizontal and vertical scaling

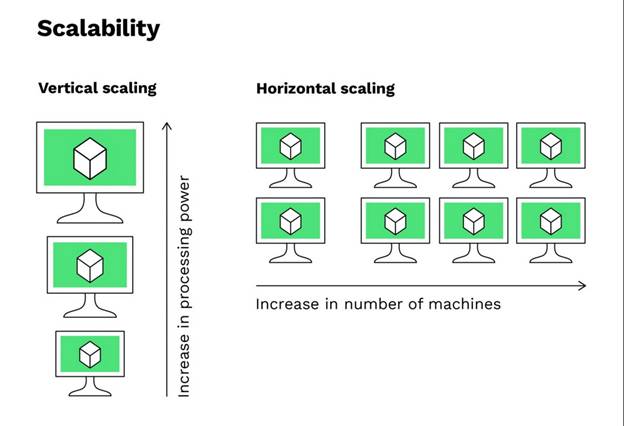

There are two primary approaches in the context of database scalability, horizontal scaling and vertical scaling.

a. Horizontal scaling

To manage increased load, add more machines to the existing pool of resources to manage horizontal scaling. To extend the database capacity by distributing the workload across multiple servers this method is also known as scaling out. This type of scaling is generally preferred for large-scale applications for which LLMs are required because it offers great flexibility and cost-effectiveness. It allows the addition of hardware, which can be cost-effective and minimize downtime [1].

b. Vertical scaling

Vertical/scaling up, is the strategy in which we add more power to existing computers/machines i.e., upgrading CPUs, Increasing memory, etc. It is efficient and cost-effective for moderate increases in database demands. There is a limitation in the vertical scaling, no further scaling can be possible without a complete hardware overhaul, once the maximum capacity of a device is reached, no further scaling [2].

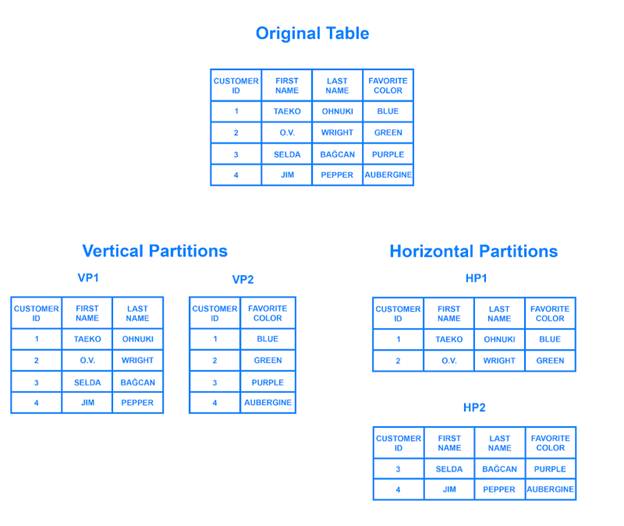

1.2 Partitioning strategies

Through partitioning strategy, we can further enhance data management in horizontal scaling. Data Partitioning is the process of dividing the database into smaller and manageable pieces and distributing these smaller pieces in the server network [3].

Implementation considerations:

Determining the partition keys will maintain a balance between several partitions, handle the loading of each partition, and distribute the data evenly. Under-partitioning might not sufficiently improve performance bottlenecks problems and over-partitioning can lead to increased complexity and management overhead [4].

2. Performance optimization

Another important requirement for databases involved in the training and fine-tuning of LLMs is performance optimization. Because performance optimization directly affects the speed and efficiency of data queries and critically updates the iterative process of model learning. For minimizing latency and maximizing throughput, indexing, and caching effective performance strategies are essential. Here, we explore these strategies in detail.

2.1 Indexing

For the facilitation of quicker retrieval of data by maintaining a separate structure to point to data locations in a database indexing is the fundamental optimization technique. To reduce the need to scan entire tables and are need for performance in large-scale data properly implemented indexing is important.

Inverted indexes

Inverted indexes store a map from content keywords to their locations in the database particularly relevant for text-based data common in LLM training. Searching operations can be sped up by using inverted indexes, instead of scanning the entire database as the model can quickly locate relevant texts based on query terms [5].

![Database design considerations for training and fine-tuning Large Language Models (LLMs) 3 Figure 3: Structure of inverted indexes [6]](https://techbriefly.com/wp-content/uploads/2024/05/fig3.jpg)

2.2 Caching

Another important performance optimization strategy used to enhance database response time is Caching. This strategy reduces the need to repeatedly query the database for the same data for storing copies of frequently accessed data in the faster storage system. Which decreases the load on the database and improves access times.

Data caching

Common queries or hot data segments are frequently accessed data that are stored in the cache. It is effective in such environments where read operations vastly more than write operations, as is often the case in the later stages of LLM training where models may repeatedly query certain data subsets [7].

Query result caching

The results of common queries are stored in this approach. The approach directly fatch the result from the cache for the same queries instead of processing the query again, which saves time and resources [8].

![Database design considerations for training and fine-tuning Large Language Models (LLMs) 4 Figure 4: Query optimization process [9]](https://techbriefly.com/wp-content/uploads/2024/05/fig4.jpg)

3. Data integrity

In the database design for training and fine-tuning LLMs, an important element is data integrity. With the data robustness of the database systems, it ensures the overall performance and effectiveness of the LLMs. Here, we will discuss the important aspects of maintaining data integrity through ACID properties and concurrency control mechanisms.

3.1 ACID properties

To ensure database reliability and data integrity the ACID properties (Atomicity, Consistency, Isolation, and Durability) are the fundamentals, particularly in the environments of handling large-scale and complex datasets used for LLMs.

Atomicity

Atomicity guarantees that every transaction is treated as a single unit, which either completes successfully or does not occur at all, with no partial data updates that lead to data inconsistency.

Consistency

Consistency ensures that any transaction will bring the database from one valid state to another, maintaining all the predefined rules, such as unique constraints and foreign keys.

Isolation

Isolation ensures the safety of concurrent transactions that could corrupt data.

Durability

Even the power loss, crash, or any error, the durability ensures that once a transaction has been committed it will remain so. The implementation of these ACID properties helps in maintaining a robust database system, which is a vital demand of LLMs [10].

3.2 Concurrency control

In the scenarios of multiple processes accessing the database simultaneously, Concurrency control techniques are more important to avoid data inconsistency issues. To ensure the execution of transactions safely and correctly and prevent conflict concurrency control is used.

Mainly two resource locking techniques are used for concurrency control Optimistic Locking [11] and Pessimistic Locking [12].

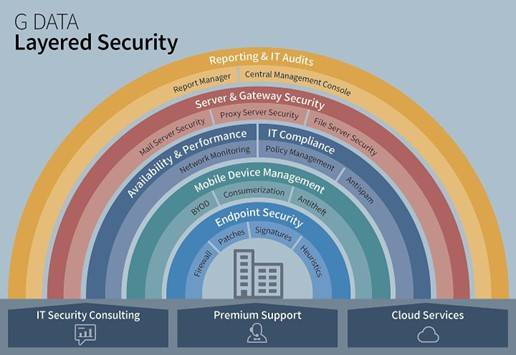

4. Data security

In the database design for training and fine-tuning Large Language Models (LLMs), data security is a critical consideration. Because such databases contain sensitive information and may be personal data. It is important to protect this data from unauthorized access, and breaches and ensure confidentiality, integrity, and availability. Here, we will discuss the key security measures, including encryption and access control, which are integral to secure data in the context of LLMs.

4.1 Encryption

An important security measure that encodes data, after encoding the data is inaccessible without having the corresponding description key. Ensuring the security of such sensitive information from unauthorized access is vital both at rest [13] and in transit [14].

The rest and transit both forms of encryption help in maintaining the security of the data used by LLMs, thus protecting this data against both external and internal threats and breaches.

4.2 Access Control

For defining and enforcing who can view or use resources in a computing environment Access control is important. Effective access control mechanisms ensure that only authorized personnel have access to sensitive data. Which is important in environments handling personal and confidential information.

Role-Based access control

A common approach used to grant access rights according to roles, and users are assigned roles based on their responsibilities and needs. This approach simplifies the management of user permissions and can effectively enforce the principle of least privilege [15].

Audit trails

Keeping detailed logs of who accessed what data and when, helps to maintain access and identify potential security violations. To ensure compliance with data protection regulations and perform security audits it is important to audit [16].

5. Data variety

In the designing of the database for training and fine-tuning of LLMs Data variety is another key consideration. To develop a comprehensive understanding of language patterns, LLMs require diverse datasets that include both structured and unstructured data. This diversity presents different challenges in terms of database architecture and data integration capabilities. Here, we will explore different strategies for managing different data types and integrating heterogeneous data sources effectively.

5.1 Structured and unstructured data

Large Language Models (LLMs) benefit from a rich data source, that can range from organized relational databases to more unstructured formats handled by NoSQL databases.

Structured data is organized in tables and typically stored in relational databases. Structured Query Language (SQL) is used to manage and query structured data, which is important for high precision and clear data relationship tasks [17].

Unstructured data is another type of data that does not fit in traditional database schemes and is stored in NoSQL databases like Oracle, MongoDB, etc. Unstructured data includes text, images, videos, and other forms of data [18].

5.2 Data integration

To provide a unified view of diverse data types Integrating heterogeneous data sources is important. To enhance the comprehensiveness and utility of the training datasets for LLMs effective data integration is required.

Data integration tools

Data visualization, middleware, and ETL (Extract, Transform, Load) technologies important tools for blending data from various sources and that ensure data remains consistent and accessible across different storage formats [19].

Methodology

Schema mapping and data federation approaches are used to integrate data from different sources. These approaches maintain data integrity and reduce redundancies, making diverse datasets more usable for the training of LLMs like complex computational processes [20].

6. Cost management

Another essential consideration of database design for training and fine-tuning LLMs is cost management, which gives extensive resources for these models. An effective cost management approach can help to minimize expenses associated with storage and computing while maintaining optimal performance levels. Here, we will discuss the key considerations for managing storage and compute costs.

6.1 Storage costs

For an overall budget of projects involving LLMs, the cost of data storage is a significant part. Choosing the right storage solutions involves balancing cost with performance needs such as speed and availability of data. The considerations are different storage types [21] and Data lifecycle management policies [22].

6.2 Compute costs

LLMs can lead to significant computing costs, due to the CPU and GPU usage, processing power is required to train and fine-tune these models. Also, efficient query processing [23] and data handling [24] are also important to overcome these costs.

Incorporating these important considerations into the database design process for training and fine-tuning LLMs is essential for building robust, efficient and secure AI infrastructures. As this field continues to grow, staying abreast of the latest technological advancements and incorporating flexible, scalable solutions will be key to success.

References

[1] Abadi, D. J., Marcus, A., Madden, S. R., & Hollenbach, K. (2009). SW-Store: a vertically partitioned DBMS for Semantic Web data management. The VLDB Journal, 18, 385-406.

[2] Hellerstein, J. M., Stonebraker, M., & Hamilton, J. (2007). Architecture of a database system. Foundations and Trends® in Databases, 1(2), 141-259.

[3] Corbett, J. C., Dean, J., Epstein, M., Fikes, A., Frost, C., Furman, J. J., … & Woodford, D. (2013). Spanner: Google’s globally distributed database. ACM Transactions on Computer Systems (TOCS), 31(3), 1-22.

[4] Stonebraker, M., Madden, S., Abadi, D. J., Harizopoulos, S., Hachem, N., & Helland, P. (2018). The end of an architectural era: It’s time for a complete rewrite. In Making Databases Work: the Pragmatic Wisdom of Michael Stonebraker (pp. 463-489).

[5] Zobel, J., & Moffat, A. (2006). Inverted files for text search engines. ACM computing surveys (CSUR), 38(2), 6-es.

[6] Wang, H., Chi, C., Zhang, X., & Zhan, Y. (2022). Inverted Index Construction Algorithms For Large-Scale Data. IAENG International Journal of Computer Science, 49(4).

[7] Larson, P. A., Goldstein, J., & Zhou, J. (2004, April). MTCache: Transparent mid-tier database caching in SQL server. In Proceedings. 20th International Conference on Data Engineering (pp. 177-188). IEEE.

[8] Amiri, K., Park, S., Tewari, R., & Padmanabhan, S. (2003, March). DBProxy: A dynamic data cache for Web applications. In Proceedings 19th International Conference on Data Engineering (Cat. No. 03CH37405) (pp. 821-821). IEEE Computer Society.

[9] Kashem, M. A., Chowdhury, A. S., Deb, R., & Jahan, M. (2010). Query optimization on relational databases for supporting top-k query processing techniques. JCIT, 1(1), 53-58.

[10] Weikum, G., & Vossen, G. (2001). Transactional information systems: theory, algorithms, and the practice of concurrency control and recovery. Elsevier.

[11] Bernstein, P. A., Hadzilacos, V., & Goodman, N. (1987). Concurrency control and recovery in database systems (Vol. 370). Reading: Addison-wesley.

[12] Gray, J., & Reuter, A. (1992). Transaction processing: concepts and techniques. Elsevier.

[13] Popa, R. A., Redfield, C. M., Zeldovich, N., & Balakrishnan, H. (2011, October). CryptDB: Protecting confidentiality with encrypted query processing. In Proceedings of the twenty-third ACM symposium on operating systems principles (pp. 85-100).

[14] Dierks, T., & Rescorla, E. (2008). The transport layer security (TLS) protocol version 1.2 (No. rfc5246).

[15] FERRARIO, D. (1992). Role-Based Access Control. In Proc. of 15th National Computer Security Conference, 1992.

[16] Lead, N. E. C. Definition of Privacy by Design and Privacy Preserving Enablers.

[17] Date, C. J. (2011). SQL and relational theory: how to write accurate SQL code. ” O’Reilly Media, Inc.”.

[18] Stonebraker, M., & Cattell, R. (2011). 10 rules for scalable performance in’simple operation’datastores. Communications of the ACM, 54(6), 72-80.

[19] Halevy, A., Rajaraman, A., & Ordille, J. (2006, September). Data integration: The teenage years. In Proceedings of the 32nd international conference on Very large data bases (pp. 9-16).

[20] Doan, A., Halevy, A., & Ives, Z. (2012). Principles of data integration. Elsevier.

[21] Lee, J. (2013). A view of cloud computing. International Journal of Networked and Distributed Computing, 1(1), 2-8.

[22] Urquhart, C., & Spence, J. (2007). Document Engineering: Analyzing and Designing Documents for Business Informatics and Web Services. Journal of Documentation, 63(2), 288-290.

[23] Graefe, G. (1993). Query evaluation techniques for large databases. ACM Computing Surveys (CSUR), 25(2), 73-169.

[24] Verma, A., Pedrosa, L., Korupolu, M., Oppenheimer, D., Tune, E., & Wilkes, J. (2015, April). Large-scale cluster management at Google with Borg. In Proceedings of the tenth european conference on computer systems (pp. 1-17).