Meta has released ImageBind, a new open-source AI model that ties together many streams of data, including text, audio, visual data, temperature readings, and movement readings.

For now the model is only a research project, with no immediate consumer or practical applications, but it points to a future of generative AI systems that can create immersive, multisensory experiences. It also shows that Meta continues to share its AI research openly, in contrast to competitors like OpenAI and Google, both of which have grown more secretive.

The core idea of the research is to link several types of data into a single multidimensional index (or “embedding space,” to use AI jargon). The concept may sound a little abstract, but it is the same one that underpins the recent boom in generative AI.

What is Meta ImageBind AI?

For instance, a number of AI image generators, including DALL-E, Stable Diffusion, and Midjourney, rely on embedding spaces like this during the training phase. They look for patterns in visual data while linking that information to descriptions of the images. This is what allows these systems to produce images that match users' text inputs. Many AI tools that generate video or audio work in a similar way.

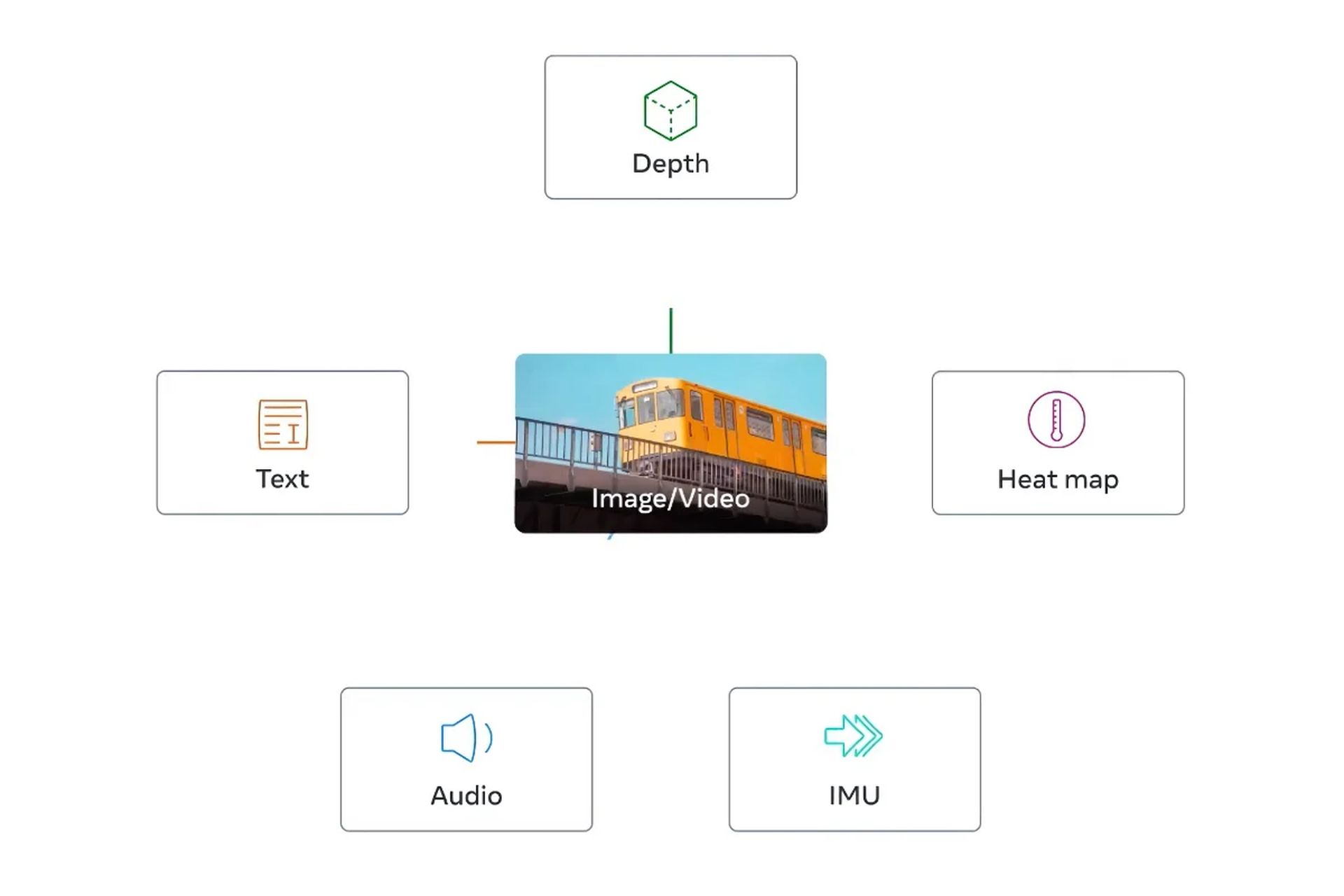

According to Meta, ImageBind is the first model to combine six types of data into a single embedding space. The six types are visual (in the form of both images and video), thermal (infrared images), text, audio, depth information, and, most intriguingly, movement readings generated by an inertial measurement unit, or IMU.

IMUs are used in phones and smartwatches for a variety of tasks, including switching a phone from landscape to portrait mode and distinguishing between different types of physical activity.

The idea is that future AI systems will be able to cross-reference this data in the same way that current AI systems do with text inputs. Imagine, for instance, a futuristic virtual reality device that generates not only audio and visual input but also your environment and movement on a physical stage.

If you asked it to simulate a long sea voyage, it would not only place you on a ship with the sound of the waves in the distance but also reproduce the rocking of the deck under your feet and the cool breeze of the ocean air.
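To make the idea of a shared embedding space more concrete, here is a toy sketch in Python. It is not Meta's code and does not use the ImageBind model: the three-dimensional vectors, the file names, and the `nearest` helper are all invented for illustration. It only shows the general principle that once items from different data types live in the same space, a text query can be matched against audio or motion data by comparing vectors.

```python
import math

# Hypothetical, hand-written embeddings. A real model learns
# high-dimensional vectors from data; these numbers and file names
# are made up purely to illustrate one space shared by several data types.
EMBEDDINGS = {
    ("text", "waves crashing"): [0.9, 0.1, 0.0],
    ("audio", "ocean_surf.wav"): [0.8, 0.2, 0.1],
    ("audio", "city_traffic.wav"): [0.1, 0.9, 0.2],
    ("imu", "rocking_deck.csv"): [0.7, 0.0, 0.3],
    ("imu", "walking.csv"): [0.0, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_key, target_modality):
    """Find the item of the target data type closest to the query item."""
    query = EMBEDDINGS[query_key]
    candidates = [
        (key, vector)
        for key, vector in EMBEDDINGS.items()
        if key[0] == target_modality and key != query_key
    ]
    best_key, _ = max(
        candidates, key=lambda item: cosine_similarity(query, item[1])
    )
    return best_key[1]


query = ("text", "waves crashing")
print(nearest(query, "audio"))  # closest audio clip to the text query
print(nearest(query, "imu"))    # closest motion recording to the text query
```

With these made-up numbers, the text query lands closest to the surf recording and the rocking-deck motion trace, which is the kind of cross-referencing between data types the sea voyage example describes.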

How does Meta ImageBind AI work?

In a blog post, Meta says that future models may incorporate “touch, speech, smell, and brain fMRI signals.” The research, according to the post, “brings machines one step closer to humans’ ability to learn simultaneously, holistically, and directly from many different forms of information.” (Which is fine; it depends on how small these steps are.)

Naturally, all of this is quite speculative, and the immediate applications of this kind of research are likely to be far more limited. For instance, last year Meta showed off an AI model that generates short, blurry videos from text descriptions. Work like ImageBind suggests how future versions of such a system could incorporate additional data streams, for example by generating audio to match the video output.

For those who follow the industry, however, the research is also interesting because Meta is open-sourcing the underlying model, a practice that is being closely watched in the field of AI.

Meta ImageBind AI's open-source approach: Why does it work?

Opponents of open-sourcing, such as OpenAI, argue that the practice harms creators because rivals can copy their work, and that it could even be dangerous, since it might allow malicious actors to exploit cutting-edge AI models.

In response, proponents argue that open-sourcing lets third parties scrutinize the systems for flaws and fix some of their shortcomings. They note that it may even bring a commercial benefit, since it effectively lets companies recruit outside developers as unpaid workers to improve their work.

Although there have been difficulties, Meta has so far stayed firmly in the open-source camp. (For instance, its most recent language model, LLaMA, was leaked online earlier this year.) In many respects, the company's lack of commercial AI success (it doesn't have a chatbot to compete with Bing, Bard, or ChatGPT) has made this strategy possible. In the meantime, Meta is continuing the same strategy with ImageBind.
