Intel and UCSC work on a promising alternative to NVIDIA’s DLSS

NVIDIA marked a turning point with DLSS 2.0, a smart upscaling technology that has inspired Intel and UCSC to develop a very interesting alternative, although it is still at an early stage and has a long way to go.

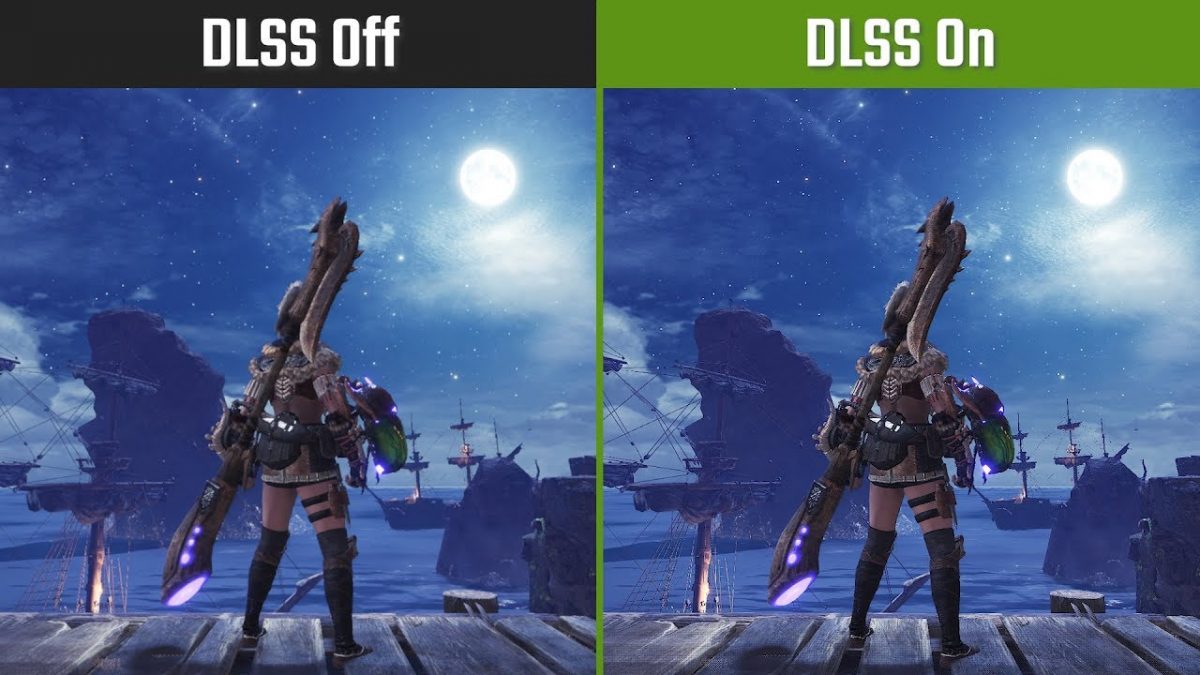

NVIDIA’s DLSS 2.0 technology uses a set of AI-based algorithms to drive a reconstruction process that combines information from several images to produce a high-quality final frame.

A traditional upscaling technique renders fewer pixels and interpolates the rest from the pixels that were rendered. To improve the result, it applies a temporal filter that smooths edges and reduces jagged, sawtooth artifacts (aliasing), but it usually ends up producing a blurry image.
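To make the "interpolate the rest" step concrete, here is a minimal sketch of bilinear upscaling, assuming a single-channel image stored as a list of rows. Bilinear interpolation is one common choice for this step, not necessarily what any particular game uses, and the function name `bilinear_upscale` is hypothetical. Every output pixel is a weighted average of existing samples, so no new detail can appear, which is why purely interpolated results tend to look soft.

```python
def bilinear_upscale(img, out_w, out_h):
    """Upscale a 2D grayscale image (list of rows) with bilinear interpolation.

    Hypothetical helper for illustration: each output pixel blends the four
    nearest input pixels, so it can only average what was already rendered.
    """
    in_h = len(img)
    in_w = len(img[0])
    out = []
    for y in range(out_h):
        # Map the output pixel center back into input coordinates, clamped to the image.
        sy = (y + 0.5) * in_h / out_h - 0.5
        sy = min(max(sy, 0.0), in_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = (x + 0.5) * in_w / out_w - 0.5
            sx = min(max(sx, 0.0), in_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            # Interpolate horizontally on the two neighboring rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

For example, `bilinear_upscale([[0, 1], [0, 1]], 4, 2)` returns `[[0.0, 0.25, 0.75, 1.0], [0.0, 0.25, 0.75, 1.0]]`: the new in-between pixels are simply blends of their neighbors.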

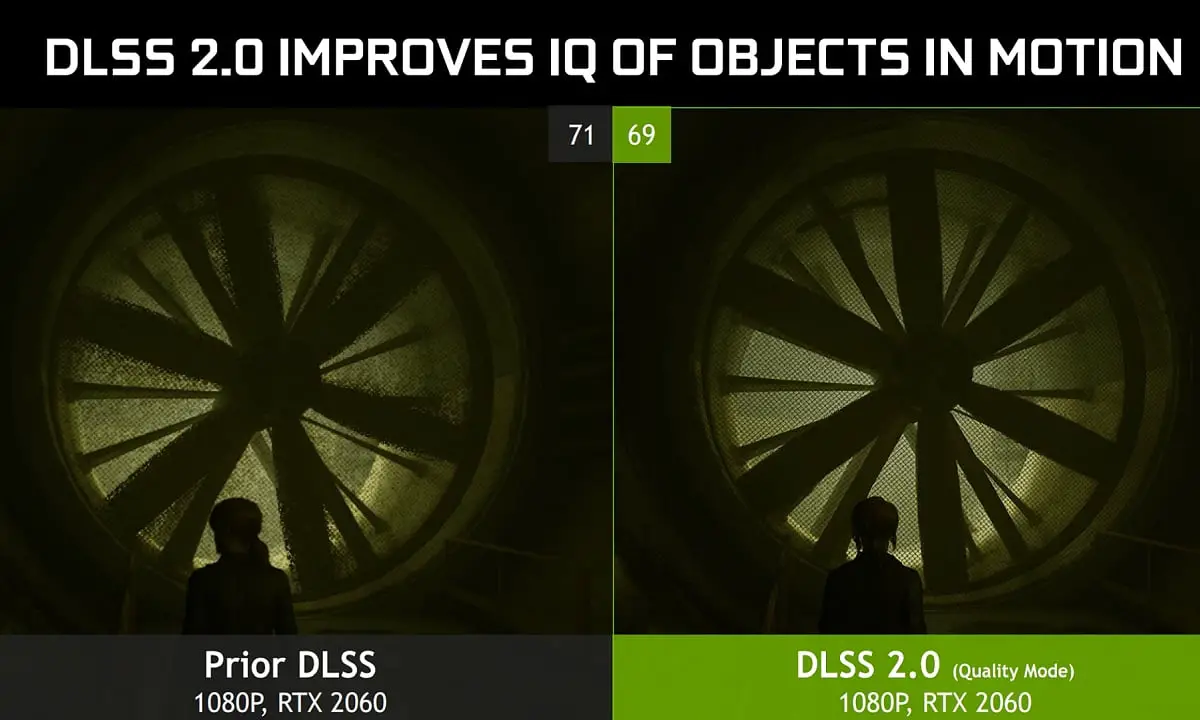

DLSS 2.0 doesn’t work that way. It also starts from a lower resolution, rendering the image at 50% or 67% of the target resolution depending on the selected settings, but it does not simply stretch the image or fill in the missing pixels. Instead, it combines images in real time in a reconstruction process that produces a high-quality image. It is so effective that, as we saw at the time, it can even outperform native resolution with TAA applied.
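The percentages above are easiest to read as per-axis scale factors, which is how DLSS 2.0's Performance (50%) and Quality (about 67%) modes apply them. The small sketch below, whose function names are hypothetical, computes the internal render resolution and the share of target pixels that are actually rendered, assuming the scale applies to both width and height.

```python
def internal_resolution(target_w, target_h, axis_scale):
    """Lower render resolution for a per-axis scale factor (hypothetical helper)."""
    return round(target_w * axis_scale), round(target_h * axis_scale)


def pixel_fraction(axis_scale):
    """Fraction of target pixels rendered: the scale applies to both axes."""
    return axis_scale ** 2
```

For a 3840x2160 target, `internal_resolution(3840, 2160, 0.5)` gives `(1920, 1080)`, a quarter of the pixels, and `internal_resolution(3840, 2160, 2 / 3)` gives `(2560, 1440)`, about 44% of the pixels.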

DLSS 2.0 makes it possible to achieve excellent image quality while rendering only a fraction of the pixels, and to upscale intelligently to resolutions as high as 16K. As many of our readers will know, this has been possible thanks to artificial intelligence and to the Tensor cores in RTX 20 and RTX 30 graphics cards, which are dedicated to accelerating this workload.

Intel and UCSC also want to advance in this field

To that end, Intel has developed, together with UCSC, an intelligent upscaling technique that has already been shown running on “Infiltrator”, a classic Unreal Engine 4 demo that has been around for a few years (it was released in 2013).

Intel’s intelligent upscaling technology takes an approach similar to NVIDIA’s DLSS, using a neural network called QW-Net to carry out the image reconstruction. According to the project’s authors, 95% of the operations needed to complete this process use 4-bit integers.
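To illustrate what "4-bit integer operations" means, here is a minimal sketch of symmetric uniform quantization followed by an integer-only dot product, the basic building block of a quantized network layer. The article does not say which quantization scheme QW-Net uses; per-tensor symmetric scaling to the range [-7, 7] is an assumption chosen for simplicity, and `quantize_int4` and `int4_dot` are hypothetical names.

```python
def quantize_int4(values):
    """Symmetric per-tensor quantization to signed 4-bit codes in [-7, 7].

    A signed 4-bit integer can hold -8..7; this scheme uses -7..7 so that
    the grid is centered on zero. Returns the codes and the float scale.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-7, min(7, round(v / scale))) for v in values]
    return codes, scale


def int4_dot(a, b):
    """Approximate dot product computed on 4-bit codes."""
    qa, sa = quantize_int4(a)
    qb, sb = quantize_int4(b)
    # Multiply-accumulate uses only small integers; hardware would use a wider accumulator.
    acc = sum(x * y for x, y in zip(qa, qb))
    # Convert back to a float once, at the end.
    return acc * sa * sb
```

With `a = [0.7, -0.2, 0.1]` and `b = [1.0, 0.4, -1.0]`, the exact dot product is 0.52, and `int4_dot(a, b)` lands close to it (about 0.51) even though every multiplication was done on 4-bit codes.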

Intel and UCSC have combined two U-shaped networks (U-Nets), each specialized in a different task. The first extracts features from the image, and the second filters and reconstructs the output image. The two networks have clearly separate roles: the first carries the larger computational load, while the second needs fewer calculations but requires higher precision, because it has less tolerance for error.
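The precision split can be illustrated with simulated ("fake") quantization: round a stage's values to the grid its bit width allows and measure the error introduced. The sketch below is not QW-Net's implementation. The two-stage `PIPELINE` and its bit widths (4 for feature extraction, 16 for filtering) are hypothetical values chosen only to show why a low-precision stage tolerates much larger rounding error than a high-precision one, and both stages are simulated on a uniform integer grid for simplicity.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    bits: int  # hypothetical precision for this stage's values


def fake_quantize(values, bits):
    """Round values to a symmetric signed grid with 2**(bits-1)-1 positive levels."""
    levels = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values) or 1.0
    step = max_abs / levels
    return [round(v / step) * step for v in values]


def max_error(values, bits):
    """Largest absolute rounding error introduced at a given bit width."""
    q = fake_quantize(values, bits)
    return max(abs(a - b) for a, b in zip(values, q))


# Hypothetical assignment mirroring the split described above:
# heavy feature extraction at low precision, lighter filtering at high precision.
PIPELINE = [Stage("feature_extraction", 4), Stage("filtering", 16)]

if __name__ == "__main__":
    sample = [i / 10 for i in range(-10, 11)]
    for stage in PIPELINE:
        print(stage.name, stage.bits, "bits, max error:", max_error(sample, stage.bits))
```

At 4 bits the rounding error can reach half a step (1/14 of the value range here), which intermediate features can absorb but which would show up directly in output pixels; at 16 bits it is negligible.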

As one might expect, the network is recurrent: it accumulates information from previous frames, which yields temporally stable results and an output quality that comes close to native rendering with TAA, as the attached video shows. Unfortunately, this technology is not yet ready to run in real time as NVIDIA DLSS 2.0 does, so we should not expect to see it in the short or medium term.
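Why accumulating frames produces stable results can be seen with the simplest form of temporal accumulation: an exponential moving average that blends each new frame into a running history, as TAA-style techniques do. This is a generic sketch, not QW-Net's recurrent network, which the article does not describe in detail. It assumes a static scene, so the motion-vector reprojection a real renderer would need is omitted, and `accumulate` and `alpha` are hypothetical names.

```python
def accumulate(frames, alpha=0.1):
    """Blend each new frame into a running history (exponential moving average).

    frames: list of frames, each a list of pixel values for a static scene.
    alpha: weight given to the current frame; smaller values favor stability.
    Returns the accumulated output after each frame.
    """
    history = None
    outputs = []
    for frame in frames:
        if history is None:
            history = list(frame)  # first frame: nothing to accumulate yet
        else:
            history = [alpha * c + (1 - alpha) * h for c, h in zip(frame, history)]
        outputs.append(list(history))
    return outputs
```

Feeding it a pixel that flickers between 1.0 and 0.0 on alternate frames, the accumulated output settles near 0.5 and swings by only about 0.05 from frame to frame, instead of the full 1.0 of the raw input.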

Despite everything, it is a very interesting solution, and understanding the technical difficulties Intel and UCSC are facing helps us appreciate more fully the enormous advance NVIDIA has achieved with DLSS 2.0.