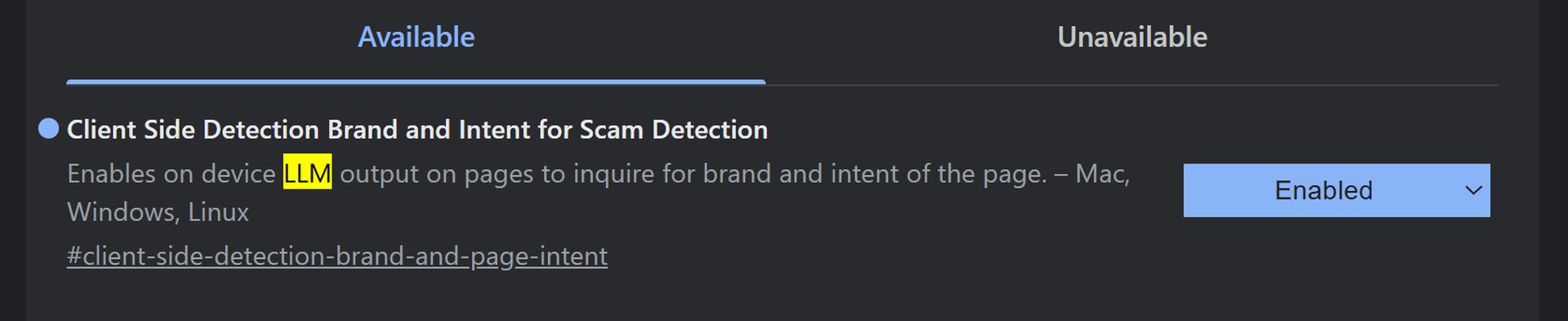

Google Chrome is set to introduce an AI-powered feature designed to protect users against advanced scams and phishing attacks. The move comes as cybercriminal schemes grow more sophisticated, increasing the need for stronger online security measures. The feature was first spotted by Leopeva64 on X (formerly Twitter), who found that the latest Chrome Canary build includes a feature called "Client Side Detection Brand and Intent for Scam Detection."

Google Chrome to launch AI feature for scam protection

The new feature uses an on-device large language model (LLM) to analyze web pages for brand authenticity and intent, with the aim of better protecting users against security threats. Because the analysis runs directly on the user's device, Google hopes to address the privacy concerns typically associated with cloud-based security tools. Keeping the processing local is intended to keep user credentials on the device, easing fears that they could be exposed in transit to the cloud or used to train AI models.

Google's initiative mirrors a similar security enhancement Microsoft has added to its Edge browser. Earlier this month, Leopeva64 spotted a "scareware blocker" in Edge's settings, which also uses AI to detect tech-related scams. Unlike Google's approach, however, Microsoft's feature is turned off by default, so users must enable it manually in the settings.

These efforts have gained urgency following warnings from law enforcement, including the FBI, about criminals' growing use of generative AI. Cybercriminals are using AI to produce more persuasive text and more convincing fake images, making fraudulent websites look legitimate. As traditional methods of spotting scams become less effective, AI-based tools are increasingly needed to strengthen users' defenses against fraud.

As Google pushes ahead with its AI-driven approach to online security, questions remain about how effective these features will be and what they mean for privacy. Both companies say they are working to protect users, but exactly how these technologies will operate in practice, and what challenges they may face, is still unclear.

AI security features in browsers

The arrival of AI security features reflects a broader push among major tech firms to improve user safety. Microsoft has publicly committed to prioritizing security, particularly after suffering significant breaches, such as the attack by the Russian hacker group Nobelium that accessed executive email accounts. During Microsoft's FY24 Q3 earnings call, CEO Satya Nadella said, "Security underpins every layer of the tech stack, and it's our No. 1 priority," underscoring the company's focus on building robust security into its products.

Google's AI scam detection feature is still experimental and available only in Chrome Canary, and there is no confirmed timeline for a wider rollout. Even so, it signals the company's continued investment in online security as threats grow. Both Google and Microsoft are strengthening the security architecture of their browsers to make browsing safer, an effort that matters more as online fraud keeps evolving.

Featured image credit: Growtika/Unsplash