In a lawsuit filed this month, Asian News International (ANI) alleges that OpenAI used its material without permission to train AI models, including ChatGPT. In a legal filing, ANI cited communication from OpenAI's lawyers stating that, since September, ANI's content has been internally blocked from use in training OpenAI's models. ANI said on Monday that it had suspended service to OpenAI. OpenAI responded in a tweet the next day that it had stopped using ANI's content and that its operations comply with the law.

The suit also raises broader questions about copyright and the ethical use of information by AI systems. ANI contends that its published content, which has appeared in ChatGPT's output, remains stored within the model because there is no way to erase such content once it has been used in training. The complaint also claims that OpenAI never obtained a license for ANI's content, even though it has signed licensing agreements with other news organizations, including the Financial Times and the Associated Press.

Is OpenAI crossing ethical lines in AI training?

OpenAI has been vocal about its commitment to building models on publicly available data. The company says this practice complies with fair use principles backed by legal precedent. It has also pointed to its partnerships with several news organizations around the world, suggesting it may be open to forging similar deals, particularly in India.

At the first hearing in the case, the Delhi High Court ordered OpenAI to submit a detailed response to ANI's accusations. The next hearing is scheduled for January 28, 2025.

ANI's lawsuit isn't an outlier; it joins a growing chorus of news organizations suing OpenAI for similar reasons. The company is fighting lawsuits from several publishers, including the Chicago Tribune and The New York Times. In its lawsuit filed in December 2023, The New York Times alleges copyright infringement, saying OpenAI's models were trained on unauthorized content from the publication and then provided that content to users who do not pay for access. In April 2024, the Chicago Tribune and seven other newspapers sued OpenAI and Microsoft, alleging that the companies improperly used large numbers of their copyrighted articles to train AI systems.

These cases are the latest in a series of legal battles over who owns content and what rights come with that ownership, and they raise broader questions about the future of content creation and AI training. As AI capabilities spread into more products, the need for clear guidelines and a legal framework defining how copyrighted material may be used will only grow.

There has also been a recent development in the ongoing lawsuits around OpenAI. In a November 2024 court filing, lawyers representing The New York Times and the Daily News said that OpenAI had deleted potentially crucial evidence. Under an agreement that allowed the publishers' counsel to search OpenAI's training data for their copyrighted material, OpenAI had provided virtual machines for the search, and it inadvertently erased the search data stored on one of them.

The deletion raised further concerns because the recovered data could be used only if the original folder structures and file names could also be restored, adding another complication to an already complex legal process. The publishers' counsel said there was no reason to suspect intentional wrongdoing, but argued that the incident shows OpenAI itself is best placed to search and manage its own datasets.

OpenAI, for its part, maintains that training AI models on publicly available data, including articles from The New York Times and the Daily News, is permissible under fair use. At the same time, it has expanded its licensing partnerships with publishers, including News Corp and Dotdash Meredith; the latter reportedly earns at least $16 million a year from its deal.

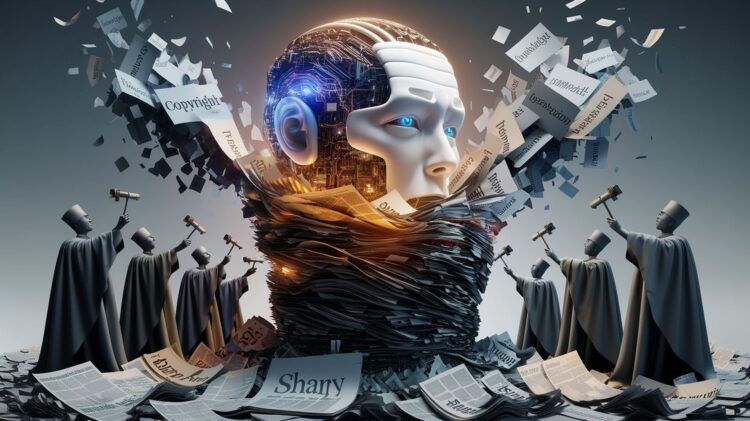

Image credit: Furkan Demirkaya/Flux AI