The OpenAI o1 API changes how AI models approach complex tasks. The o1 series models are built to reason through a problem before answering, which makes them especially strong at scientific and logical reasoning. The models are currently in beta and have posted strong benchmark results, including a high ranking on competitive programming questions and accuracy above human experts on a benchmark of physics, biology, and chemistry problems.

Understanding the models behind the OpenAI o1 API

Unlike previous models, the o1 models use reasoning tokens to think through a problem before they respond. This internal reasoning process lets them tackle more complex tasks, especially in coding, mathematics, and science.

The OpenAI o1 API offers two models: o1-preview and o1-mini. The o1-preview model is designed for hard problems that require broad general knowledge; as an early preview, it shows what the full o1 series can do. The o1-mini model is faster and cheaper, and suits tasks such as coding and science that need precise reasoning but little general knowledge.

Why does the OpenAI o1 API stand out?

The o1 models perform exceptionally well on reasoning tasks. They rank in the 89th percentile on competitive programming questions, score highly on a qualifying exam for the USA Math Olympiad, and exceed human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems, which makes them useful tools for scientific work.

Because the OpenAI o1 API is in beta, developers should be aware of several limitations. The beta accepts text-only input, and some Chat Completions API parameters are not yet available: image inputs, system messages, and streaming are not supported; function calling (tools) and the response_format parameter are unavailable; and settings such as temperature and presence_penalty are fixed.
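To make these limitations concrete, here is a minimal Python sketch that adapts an existing Chat Completions request for an o1 model. The helper name `prepare_o1_request`, the exact set of parameters it drops, and the choice to fold system messages into the first user message are assumptions for illustration, not an official OpenAI workaround. It also assumes message contents are plain strings.

```python
from copy import deepcopy

# Assumption: parameters the o1 beta rejects or fixes, based on the list
# above; confirm against OpenAI's current documentation before relying on it.
UNSUPPORTED_PARAMS = {
    "stream",
    "tools",
    "tool_choice",
    "response_format",
    "temperature",
    "presence_penalty",
}


def prepare_o1_request(request: dict) -> dict:
    """Return a copy of a Chat Completions request adapted for the o1 beta.

    Hypothetical helper: drops unsupported parameters, folds system messages
    into the first user message, and rejects image inputs. Assumes system and
    user message contents are plain strings.
    """
    req = deepcopy(request)  # never mutate the caller's request
    for name in UNSUPPORTED_PARAMS:
        req.pop(name, None)

    system_parts = []
    messages = []
    for msg in req.get("messages", []):
        content = msg.get("content")
        # Content given as a list of parts may include images; the beta is text-only.
        if isinstance(content, list) and any(
            part.get("type") == "image_url" for part in content
        ):
            raise ValueError("o1 beta models accept text-only input")
        if msg.get("role") == "system":
            system_parts.append(content)
        else:
            messages.append(msg)

    if system_parts:
        instructions = "\n\n".join(system_parts)
        for msg in messages:
            if msg.get("role") == "user":
                msg["content"] = f"{instructions}\n\n{msg['content']}"
                break
        else:  # no user message found: send the instructions as one
            messages.insert(0, {"role": "user", "content": instructions})

    req["messages"] = messages
    return req
```

Folding the system text into the user turn keeps the instructions in the conversation instead of silently discarding them when the system message is removed.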

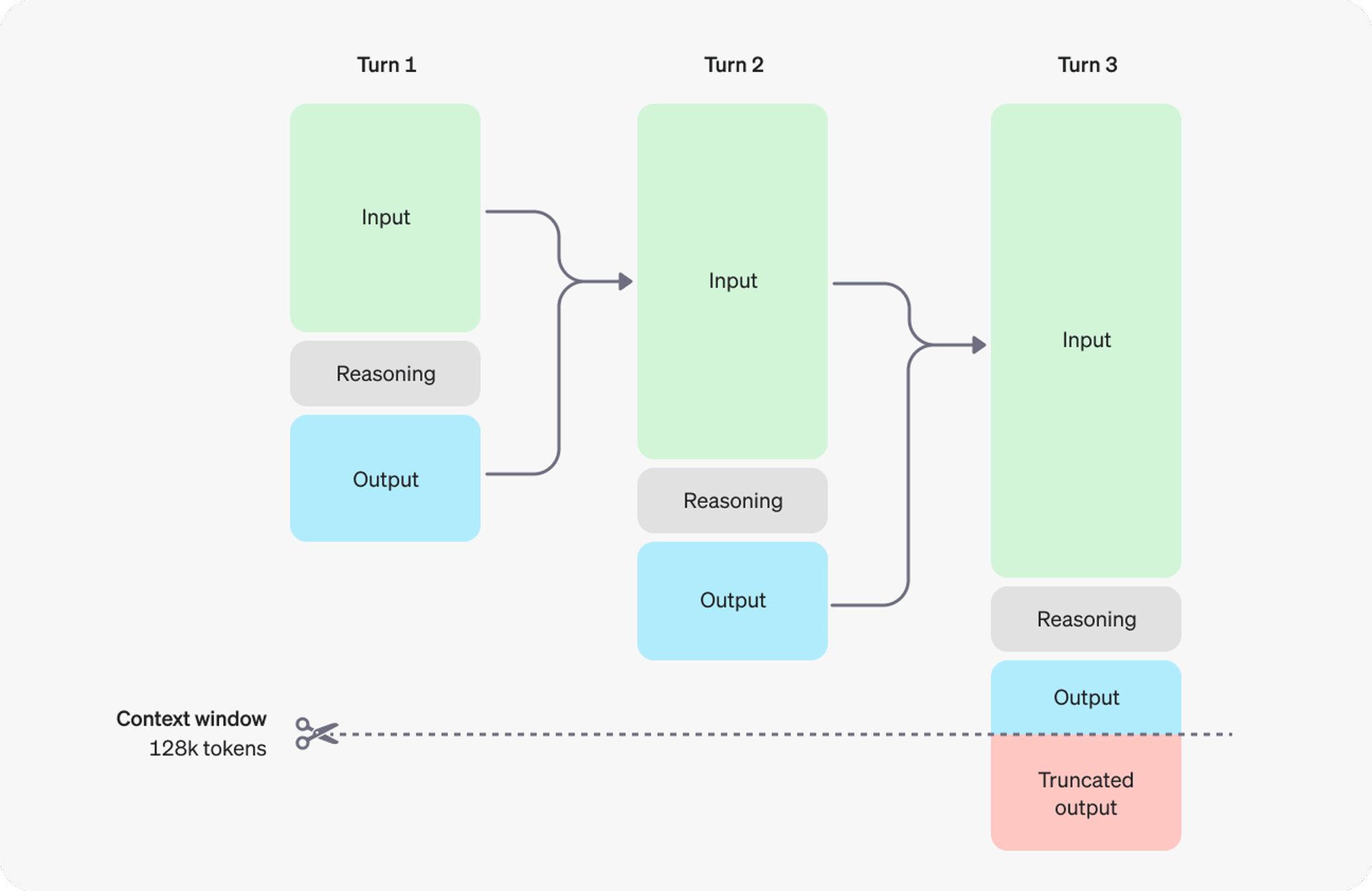

Despite these limitations, both models offer a generous context window of up to 128,000 tokens, with different maximum output limits: o1-preview can generate up to 32,768 tokens and o1-mini up to 65,536. Because hidden reasoning tokens also occupy the context window, this large window is especially useful for complex tasks that need substantial reasoning.
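These limits can be checked before a request is sent. The sketch below is a hypothetical helper (`check_token_budget` is not part of any OpenAI library); it hard-codes the figures quoted in this article and assumes the context window has to hold the prompt plus every generated token, reasoning and visible alike.

```python
# Limits as quoted in this article for the beta; treat them as assumptions
# and confirm against OpenAI's model documentation.
CONTEXT_WINDOW = 128_000
MAX_OUTPUT_TOKENS = {"o1-preview": 32_768, "o1-mini": 65_536}


def check_token_budget(model: str, prompt_tokens: int, max_completion_tokens: int) -> None:
    """Raise ValueError if a request cannot fit within the model's limits."""
    limit = MAX_OUTPUT_TOKENS.get(model)
    if limit is None:
        raise ValueError(f"unknown model: {model}")
    # The output cap covers hidden reasoning tokens as well as visible text.
    if max_completion_tokens > limit:
        raise ValueError(f"{model} can generate at most {limit} tokens")
    # Assumption: prompt plus all generated tokens must fit in the window.
    total = prompt_tokens + max_completion_tokens
    if total > CONTEXT_WINDOW:
        raise ValueError(f"{total} tokens exceeds the {CONTEXT_WINDOW}-token context window")
```

For example, a 60,000-token prompt to o1-mini with `max_completion_tokens=65_536` fits (125,536 tokens), while a 100,000-token prompt with a 30,000-token output budget does not.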

Managing costs and token limits

A key challenge with the OpenAI o1 API is managing the cost of the many tokens the reasoning process consumes. The o1 models generate both reasoning tokens (invisible to the user) and completion tokens (the visible output), and both are billed as output tokens. To help developers control these costs, OpenAI introduced the max_completion_tokens parameter, which caps the total number of tokens the model generates, reasoning and visible combined.

This parameter matters because reasoning tokens can outnumber the visible completion tokens, raising costs without a corresponding visible output. Adjusting max_completion_tokens lets developers stay within budget while still benefiting from the o1 models' reasoning. If the limit is set too low, however, the model may use it up while reasoning and return little or no visible text.
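Because reasoning tokens are billed but never shown, it helps to log how each response's output splits between the two kinds. This sketch works on a plain dict shaped like the `usage` object in a Chat Completions response, assuming `completion_tokens_details.reasoning_tokens` reports the hidden tokens and that `completion_tokens` already includes them. The per-token price is a placeholder you pass in, not a published rate.

```python
def split_completion_tokens(usage: dict) -> tuple[int, int]:
    """Return (reasoning_tokens, visible_tokens) from a usage dict.

    Assumes completion_tokens already counts the hidden reasoning tokens.
    """
    completion = usage["completion_tokens"]
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    return reasoning, completion - reasoning


def estimate_output_cost(usage: dict, price_per_million_output: float) -> float:
    """Estimate output cost; the price argument is a placeholder you supply.

    Reasoning and visible tokens are both billed as output tokens, so the
    estimate uses the full completion_tokens count.
    """
    return usage["completion_tokens"] * price_per_million_output / 1_000_000
```

With `completion_tokens=1200` and `reasoning_tokens=1000`, only 200 tokens are visible, yet the cost estimate covers all 1,200.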

Best practices for prompting the o1 models

When working with the OpenAI o1 API, keep prompts simple and direct. The models perform best with straightforward instructions, and because they reason internally, chain-of-thought prompts such as asking them to "think step by step" are usually unnecessary. Delimiters such as triple quotation marks or XML tags can also help the model interpret the distinct parts of the input accurately.
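As a minimal sketch of the delimiter advice, the hypothetical `build_prompt` helper below keeps the instruction short and wraps each input in XML-style tags. Since the beta does not accept system messages, the instruction and the data go into a single user message; the tag names are arbitrary.

```python
def build_prompt(instruction: str, sections: dict[str, str]) -> str:
    """Build one user prompt: a short instruction, then tagged input sections.

    Each dict key becomes a tag name, so the model can tell where each
    piece of input starts and ends.
    """
    parts = [instruction.strip()]
    for name, text in sections.items():
        parts.append(f"<{name}>\n{text.strip()}\n</{name}>")
    return "\n\n".join(parts)


prompt = build_prompt(
    "Find the bug in the code and explain the fix.",
    {"code": "def add(a, b):\n    return a - b", "error": "add(2, 2) returned 0"},
)
```

Note that the instruction asks directly for the result and does not tell the model how to reason.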

As the OpenAI o1 API evolves, future updates are expected to add features such as multimodality and tool use. For now, developers can experiment with the o1 models' reasoning capabilities to build new applications.

How to access the OpenAI o1 API

Accessing the API is currently limited due to its beta status. If you’re a developer interested in using the o1 models, follow these steps to gain access:

- Check your usage tier: Access to the o1 models is restricted to developers in tier 5. You can verify your usage tier on OpenAI’s platform.

- Request access: If you are eligible, request access through OpenAI’s developer portal. You may need to provide details about your intended use case.

- Understand the rate limits: During the beta phase, the API has a low rate limit of 20 requests per minute (RPM). Plan your usage accordingly.
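One way to plan around the 20 RPM limit is to throttle on the client side. The class below is a generic sliding-window limiter, a sketch rather than anything provided by OpenAI; the server still enforces its own limits, so production code should also handle rate-limit errors and retry with backoff. The injectable `clock` makes the limiter easy to test.

```python
import time
from collections import deque
from typing import Callable


class SlidingWindowLimiter:
    """Allow at most max_requests calls in any rolling window of seconds.

    The default of 20 per 60 s mirrors the beta limit described above.
    """

    def __init__(self, max_requests: int = 20, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window = window
        self.clock = clock
        self.sent = deque()  # timestamps of requests still inside the window

    def wait_time(self) -> float:
        """Seconds until another request is allowed (0.0 if allowed now)."""
        now = self.clock()
        # Forget requests that have aged out of the window.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        if len(self.sent) < self.max_requests:
            return 0.0
        return self.window - (now - self.sent[0])

    def try_acquire(self) -> bool:
        """Record a request and return True if a slot is free, else False."""
        if self.wait_time() > 0:
            return False
        self.sent.append(self.clock())
        return True

    def acquire(self) -> None:
        """Block (sleeping in real time) until a slot is free."""
        while not self.try_acquire():
            time.sleep(self.wait_time())
```

Calling `limiter.acquire()` before each API request spaces requests so that no more than 20 start in any 60-second span.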

Once you have access, you can start experimenting with the o1-preview and o1-mini models through the chat completions endpoint. Keep in mind that additional features and broader access may become available as the API moves out of beta.
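A minimal call through the Chat Completions endpoint might look like the sketch below. It assumes the official `openai` Python package, where `client.chat.completions.create` accepts `model`, `messages`, and `max_completion_tokens` and returns `choices` with `message.content` and `finish_reason`. The function name `ask_o1` and its default token budget are illustrative choices, and the client is passed in so the function can be exercised without network access.

```python
def ask_o1(client, prompt: str, model: str = "o1-mini",
           max_completion_tokens: int = 25_000) -> str:
    """Send one user message to an o1 model and return the visible answer.

    `client` is assumed to be an openai.OpenAI instance (or any object with
    the same chat.completions.create method). Only parameters the beta
    accepts are passed: no system message, temperature, or streaming.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        max_completion_tokens=max_completion_tokens,  # caps reasoning + visible tokens
    )
    choice = response.choices[0]
    content = choice.message.content or ""
    # A "length" finish with no text likely means the whole budget went to
    # hidden reasoning; raise so the caller can retry with a larger limit.
    if choice.finish_reason == "length" and not content:
        raise RuntimeError(
            f"max_completion_tokens={max_completion_tokens} was used up before any visible output"
        )
    return content
```

With the SDK installed and `OPENAI_API_KEY` set, you could call `ask_o1(OpenAI(), "Prove that the square root of 2 is irrational.")` after `from openai import OpenAI`. Raising on an empty `length` finish, rather than returning an empty string, makes a too-small budget visible instead of silently wasting a paid request.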

Image credit: OpenAI