The Megalopolis trailer is in hot water after it was revealed that some of its review quotes were fake and generated by AI. Lionsgate has since parted ways with Eddie Egan, the marketing consultant responsible for the trailer.

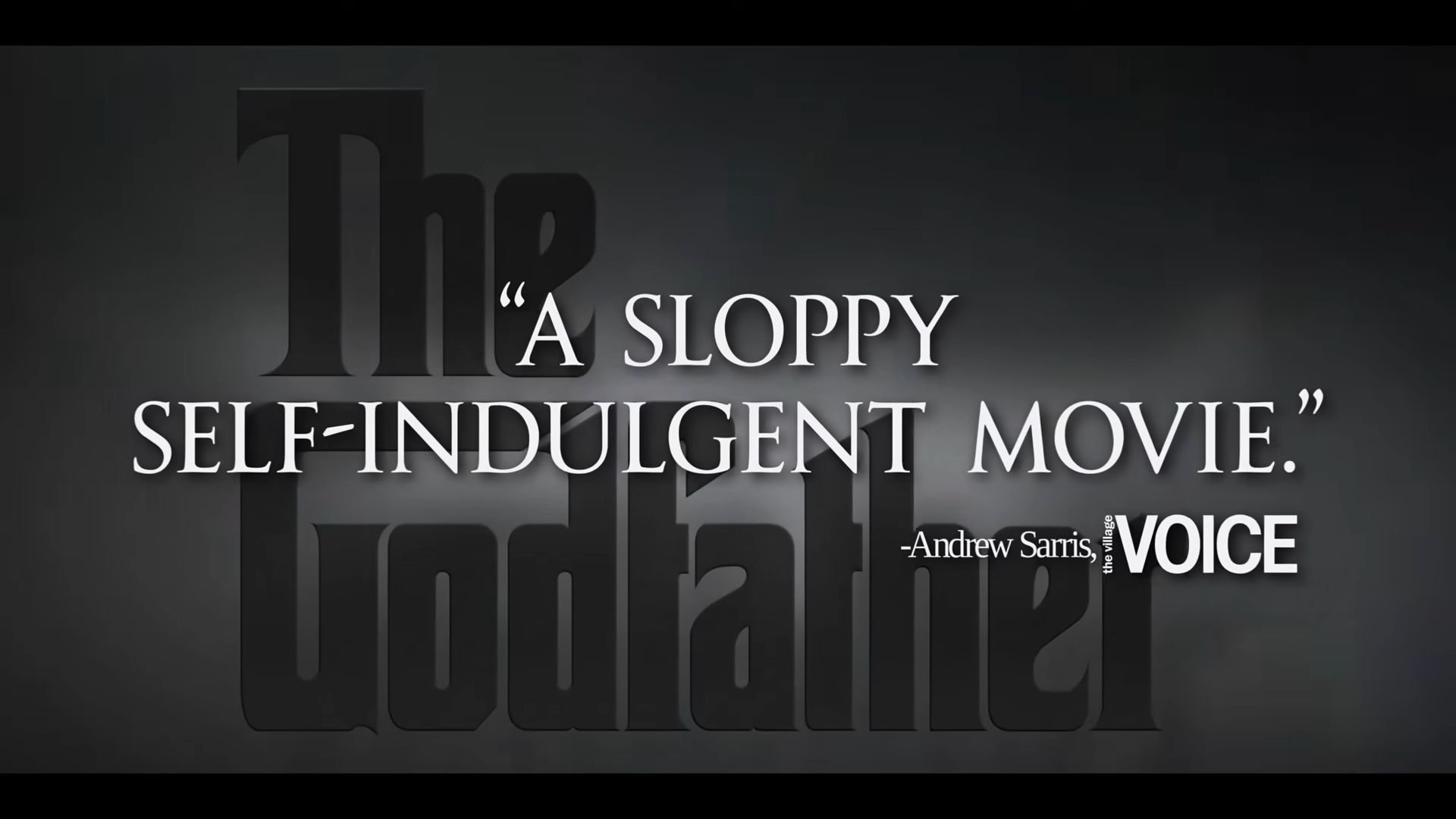

The trailer included made-up review quotes that attacked Francis Ford Coppola's classic films. It falsely claimed that critics had called "The Godfather" a "sloppy, self-indulgent movie" and "Apocalypse Now" "an epic piece of trash." In reality, the cited reviews contained no such lines and were largely positive.

This situation highlights a growing problem with AI: it can hallucinate. The issue goes well beyond movie trailers, because AI errors have also found their way into legal filings. Michael Cohen's lawyer submitted citations to court cases that did not exist, and lawyers suing the Colombian airline Avianca made the same mistake. Rapper Pras Michél has even argued that he lost his case partly because his lawyer used an AI-generated closing argument.

What are AI hallucinations? The Megalopolis trailer scandal shows how they work

AI hallucinations occur when an artificial intelligence system generates information that sounds convincing but is false or misleading. The "Megalopolis" trailer scandal has brought renewed attention to the term.

In this case, the quotes disparaging "The Godfather" and "Apocalypse Now" did not match what the critics actually wrote. The AI "hallucinated" them, producing statements that were believable but false.

AI hallucinations happen because language models generate text by predicting likely words from patterns learned in their training data, not by checking claims against a reliable source. As a result, they can mix up facts, introduce errors, or invent material outright, which is what happened with the misleading reviews in the trailer.

This incident shows the risks of relying on AI without proper checks. As the "Megalopolis" case demonstrates, where fake quotes had serious consequences for those involved, AI hallucinations can cause confusion and real damage if they go unchecked. AI can be powerful, but its output needs to be verified before it is published.

Featured image credit: IMDb