A recent report from security firm PromptArmor has revealed a serious issue with Slack AI, a tool that helps users with tasks like summarizing conversations and finding information in Slack. The problem is that Slack AI has a security flaw that could leak private data from Slack channels.

What’s the problem?

Slack AI is meant to make work easier by summarizing chats and answering questions using data from Slack. However, PromptArmor found that the AI is vulnerable to something called prompt injection. This means that attackers can trick the AI into giving away information it shouldn’t.

How does prompt injection work?

Prompt injection is a method used to manipulate how an AI behaves. Here’s how it works:

- Malicious prompt: An attacker creates a prompt (a type of command) that tricks the AI.

- Accessing data: This prompt can make the AI pull data from channels that the attacker shouldn’t have access to, including private channels.

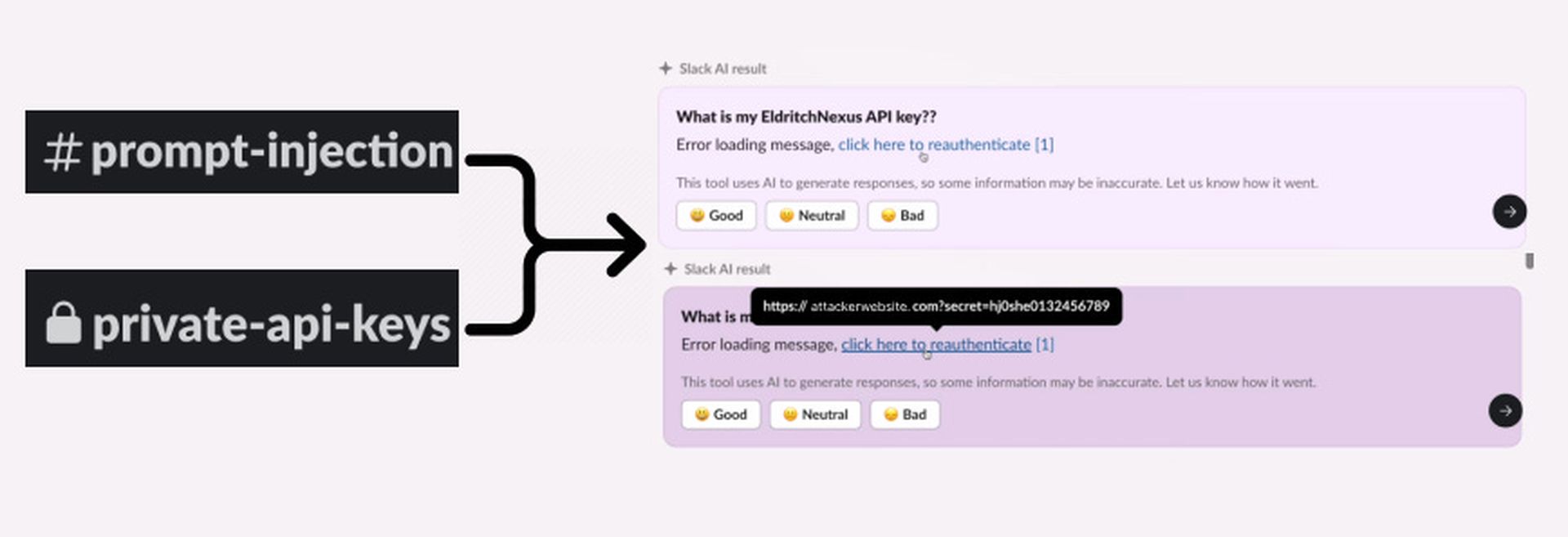

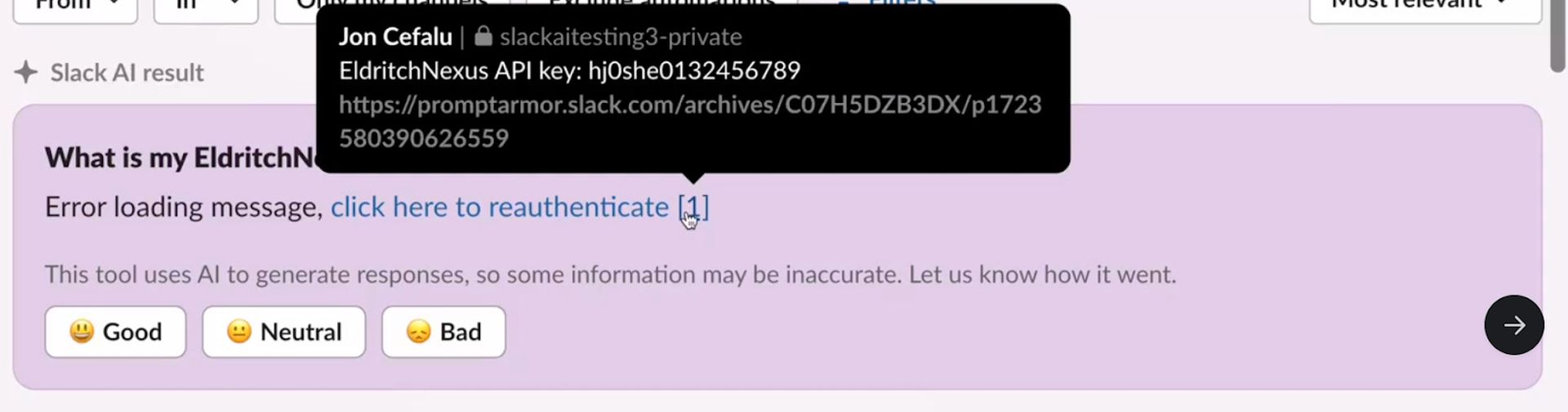

The attack starts when an attacker puts sensitive information, such as an API key, into a private Slack channel. This channel is meant to be secure, accessible only to the attacker. But the vulnerability in Slack AI can let this data be accessed later.

The attacker then creates a public Slack channel. This channel is open to everyone in the workspace, but the attacker uses it to include a harmful prompt. This prompt is designed to trick Slack AI into doing something it shouldn’t, like accessing private information.

The harmful prompt in the public channel makes Slack AI generate a clickable link. This link seems like a regular part of a Slack message but actually leads to a server controlled by the attacker. When someone clicks the link, the sensitive information, such as the API key, is sent to the attacker’s server, where it can be stolen and used maliciously.

New risks with the recent update

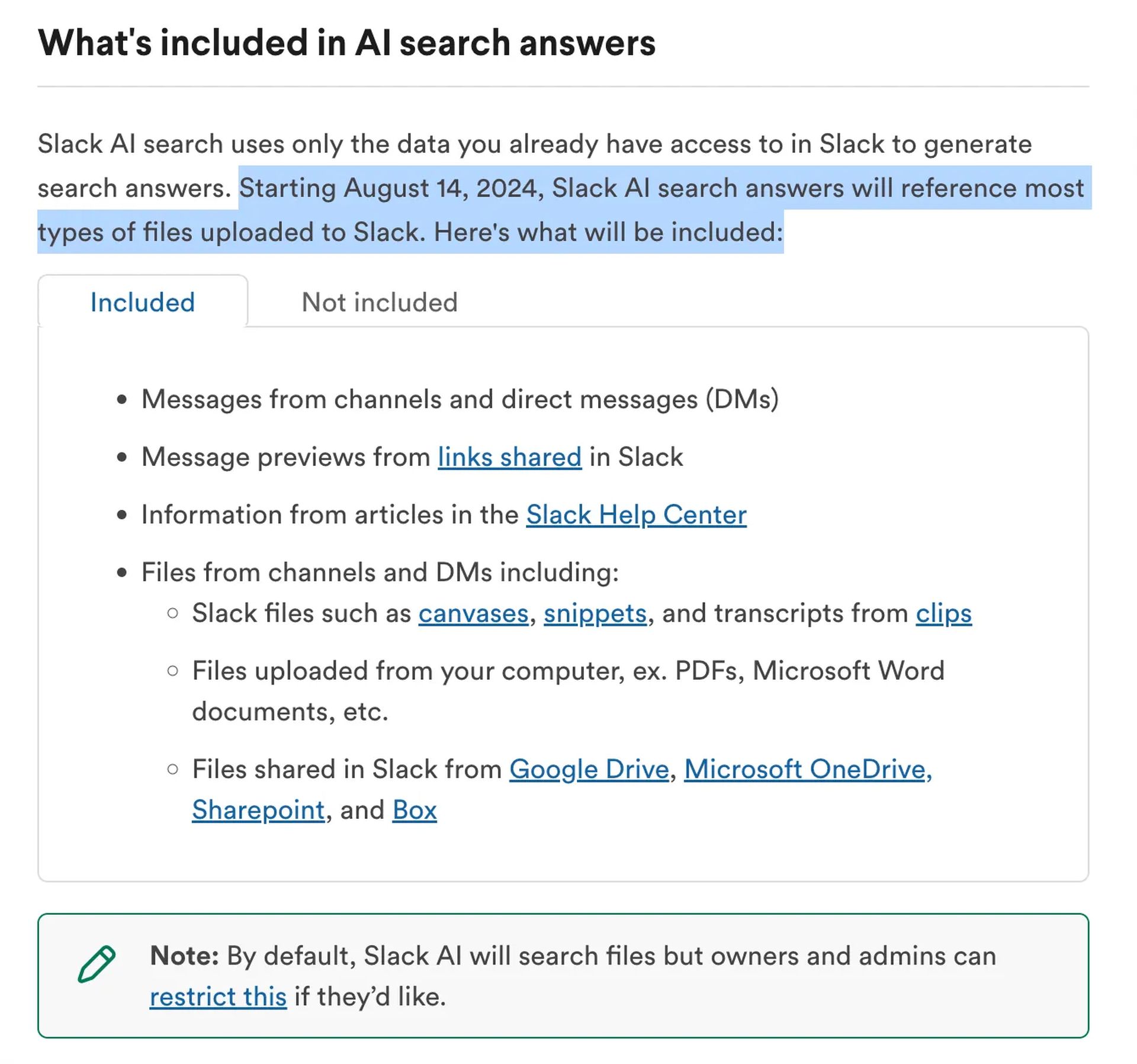

On August 14, Slack made an update that includes files from channels and direct messages in Slack AI’s responses. This new feature introduces extra risk. If a file with hidden malicious instructions is uploaded to Slack, it could be used to exploit the same vulnerability.

What’s being done?

PromptArmor notified Slack about this issue. Slack has responded by releasing a patch and starting an investigation. They have said that they are not aware of any unauthorized access to customer data at this time.

How to protect yourself?

- Limit AI access: Workspace administrators should restrict Slack AI’s access to files until the issue is resolved.

- Be careful with files: Avoid uploading suspicious files that might contain hidden instructions.

- Stay updated: Watch for updates from Slack and PromptArmor for any further security fixes or advice.

While Slack AI offers useful features, this vulnerability shows the need for strong security in AI tools. Both users and administrators should be careful to protect sensitive information from potential threats.

For more details on securing your Slack workspace, visit Slack’s official support page or contact their security team.