Artificial intelligence (AI) has long fascinated some people and drawn criticism from others, and it has become difficult to predict what AI can and cannot do. A group of academics at the University of British Columbia (UBC) in Vancouver has come up with something that may sound like science fiction: a fully autonomous AI scientist. The system does more than analyze data; it also designs and runs its own experiments. The development has implications that are fascinating and, for some, disturbing.

The idea of an AI scientist may seem far-fetched, but the UBC team has made it a reality in partnership with the University of Oxford and Sakana AI. The working papers the system generates may not contribute much to science at first. Still, the fact that they come from a machine that learns from its own experiments, and that those experiments actually work, represents an advance in AI research.

The AI scientist that never stops learning

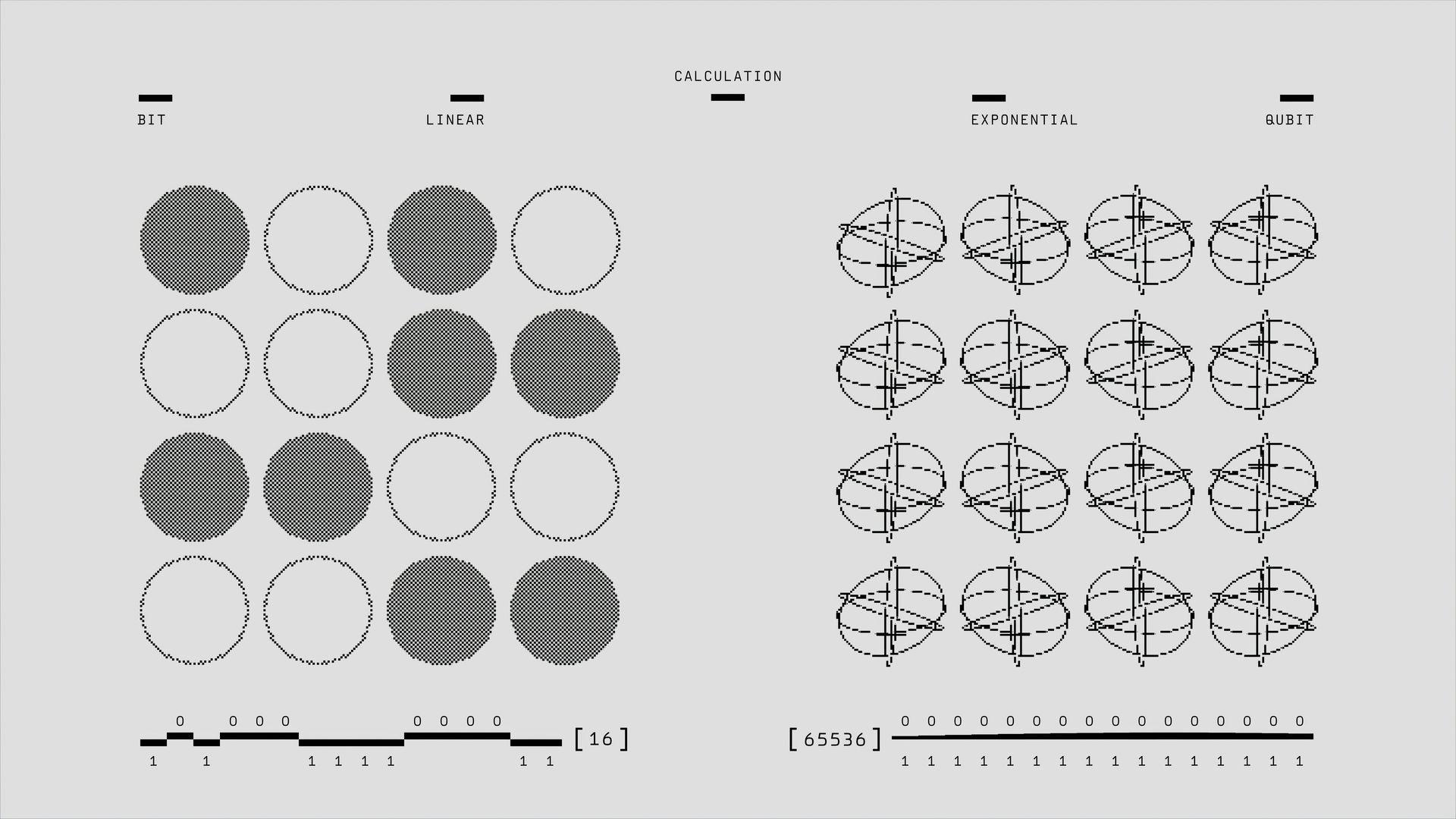

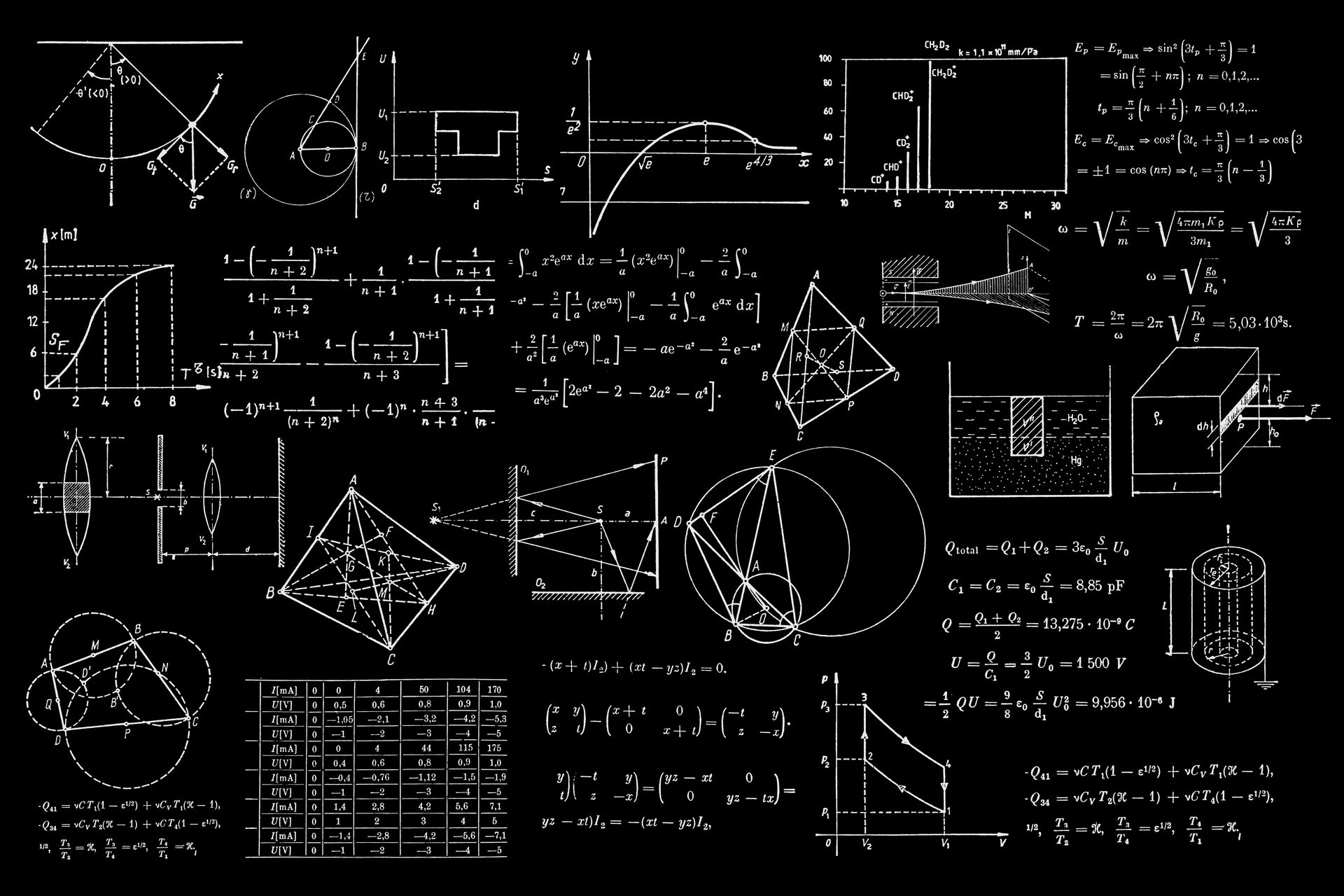

The work centers on an approach called open-ended learning. Traditional AI depends on vast amounts of human-created data; the new AI scientist instead learns by trying new things, investigating them, and refining them. With this technique, AI can push past the boundaries of current knowledge and perhaps find insights that human researchers have missed.

One of the first tasks the AI scientist tackled was improving existing machine learning techniques, such as diffusion modeling and methods for accelerating deep neural networks. These may not seem like earth-shattering advances, but they demonstrate AI’s ability to develop and test ideas autonomously. Early AI systems could do very little; today the technology is making its way into nearly every aspect of our lives.

Jeff Clune, the professor who directs the UBC lab, acknowledged that the results are not necessarily a success, but said they are promising. The AI scientist’s approach to learning, in which it constantly refines its experiments and looks for things it finds “interesting,” is a fresh departure from the more rigid methods traditionally used in AI research.

AI scientists code, test, and refine their theories independently

Clune’s lab has been experimenting with open-ended learning for a while. In previous projects, the lab developed AI programs designed to explore virtual environments and generate behaviors based on what they find interesting. Those earlier programs had to be carefully guided with hand-coded instructions, but the introduction of large language models (LLMs) has changed the game. Now, these AI programs can independently determine what’s worth investigating, making them more autonomous and potentially more creative.

One of the AI scientist’s most fascinating attributes is its capacity to write the code needed to validate its theories and then run the tests itself. This allows the AI to continually refine its strategy, becoming increasingly effective and perhaps more perceptive. Clune likens the process to discovering a new continent: both involve a spirit of exploration, a venture into the unknown, and the possibility of big surprises along the way.
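The propose, test, and refine cycle described above can be illustrated with a deliberately tiny sketch. It is not the UBC team's system: where the real AI scientist reportedly relies on an LLM to propose ideas and write code, this toy substitutes random variation, and every name here (`run_experiment`, `is_interesting`, `open_ended_search`) is a hypothetical stand-in chosen for illustration.

```python
import random


def run_experiment(step_size: float) -> float:
    """Toy 'experiment': minimise (x - 3)^2 with 20 gradient steps; return final loss."""
    x = 0.0
    for _ in range(20):
        x -= step_size * 2 * (x - 3)  # gradient of (x - 3)^2 is 2 * (x - 3)
    return (x - 3) ** 2


def is_interesting(candidate: float, archive: list, min_gap: float = 0.01) -> bool:
    """Assumed novelty rule: a candidate is worth testing only if it is
    at least min_gap away from every idea already tried."""
    return all(abs(candidate - tried) >= min_gap for tried, _ in archive)


def open_ended_search(rounds: int = 50, seed: int = 0):
    """Propose a variation, test it, record it, and build on it if it is better."""
    rng = random.Random(seed)
    best = 0.01                       # starting idea: a small step size
    best_loss = run_experiment(best)
    archive = [(best, best_loss)]     # everything tried so far, with its result
    for _ in range(rounds):
        candidate = best + rng.gauss(0, 0.1)  # random stand-in for an LLM proposal
        if candidate <= 0 or not is_interesting(candidate, archive):
            continue                  # skip invalid or already-explored ideas
        loss = run_experiment(candidate)
        archive.append((candidate, loss))
        if loss < best_loss:          # refine: future proposals start from the better idea
            best, best_loss = candidate, loss
    return best, best_loss, archive


if __name__ == "__main__":
    best, best_loss, archive = open_ended_search()
    print(f"tried {len(archive)} ideas; best step size {best:.3f}, loss {best_loss:.6f}")
```

The archive plays the role of the system's memory of past experiments, and the novelty check is one simple way to keep a search from repeating itself; a real system would need far richer notions of both.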

For all their promise, such systems are not yet reliable. Tom Hope, a researcher at the Allen Institute for AI, says that however remarkable the AI scientist may seem, its output is still highly derivative and unreliable. This skepticism reflects a wider concern that AI overpromises and underperforms, especially when it comes to creativity and true innovation.

Managing potential and risk

The creation of the AI scientist has also raised concerns about the direction of scientific research. When AI can create and evaluate theories on its own, where does that leave human researchers? How can we ensure that these technologies are used appropriately? Could a malicious person one day train an AI scientist at home and use it to cause harm?

The AI scientist is already making progress in creating AI agents, which are autonomous programs with predetermined functions. Clune’s group has created AI-designed agents that outperform their human-designed counterparts at tasks such as math and reading comprehension. This success points to a future in which AI contributes to innovation in ways that are still unclear, rather than simply assisting with everyday activities.

But this advance carries hazards. One serious concern is that these AI systems could produce agents that misbehave, whether by design or by accident. Clune and his group say they are aware of the risks and are working on ways to prevent such outcomes. They contend that the key is careful oversight of how these systems are built, so that they are both capable and safe.

While it’s unclear where this will lead, one thing is certain: AI scientists are here, and they’re not going to stop getting smarter. AI is about to enter a new phase, and everyone will be watching closely to see whether it leads to groundbreaking findings or to new problems. As AI evolves, it will be vital for academics to keep ethical concerns front and center and to ensure that these systems are built responsibly. Ultimately, how we handle the complex relationship between technology and humans will determine how AI affects society.

Featured image credit: National Cancer Institute / Unsplash