Gemma 2 2B has just burst onto the AI scene and is causing quite a stir. This tiny model proves that good things come in small packages and is sparking a real conversation in the tech world.

Google’s latest release, Gemma 2 2B, is a compact language model with just 2.6 billion parameters. Despite its small size, it holds its own against its bigger siblings, matching and in some evaluations outperforming models ten times its size.

Gemma 2 2B’s sparkling performance

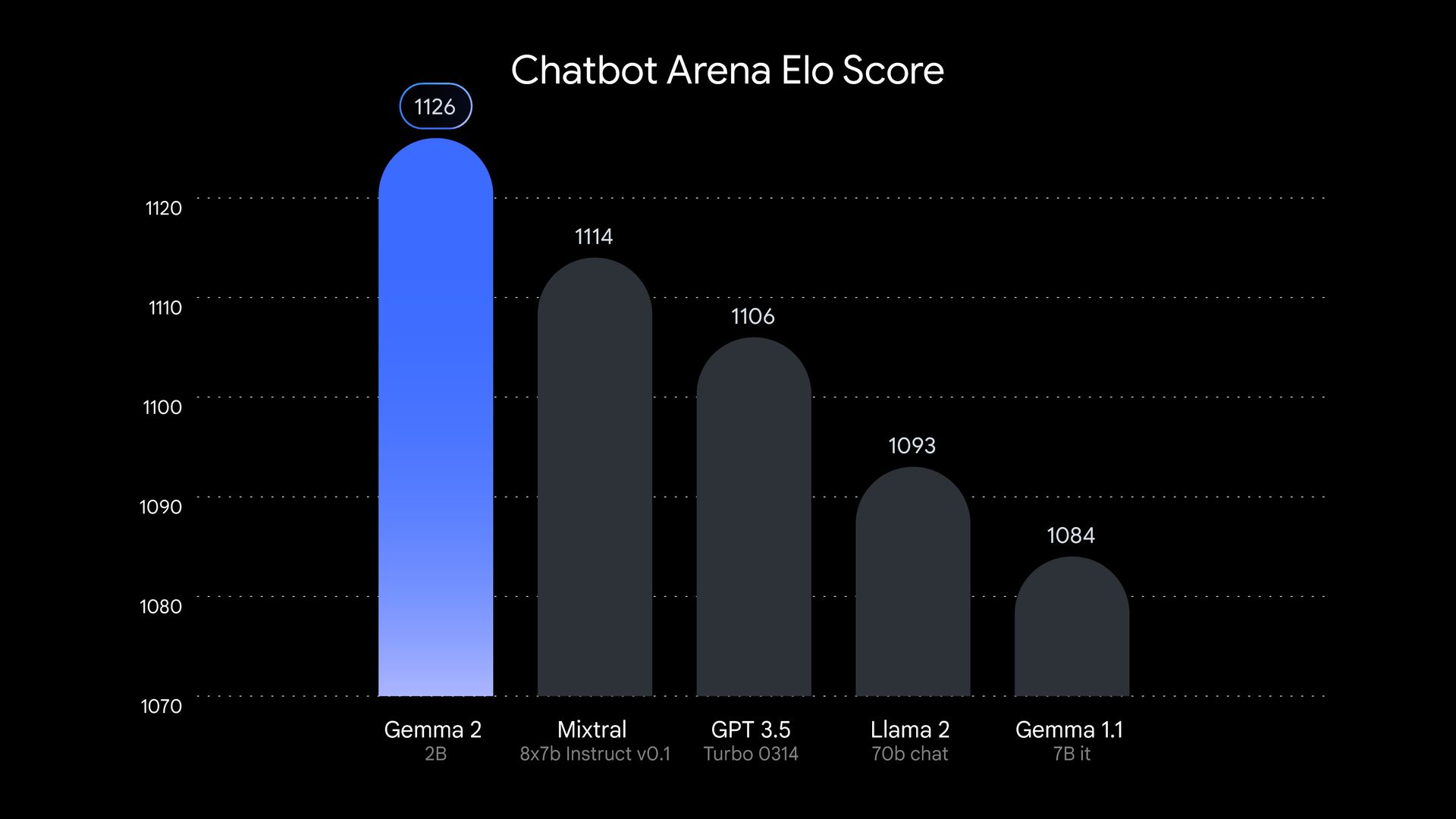

Let’s be clear: Gemma 2 2B is not just another face in the AI crowd. This model was put to the test and came out shining. In the Chatbot Arena run by LMSYS, an independent AI research organization, Gemma 2 2B scored 1130 points. That’s right, this little gem ranked above some big names like GPT-3.5-Turbo-0613 and Mixtral-8x7B.

But Gemma 2 2B doesn’t stop there. It holds up in other areas too. In the MMLU benchmark, which tests a model’s ability to understand and reason about a wide range of topics, Gemma 2 2B scored 56.1 points. When it comes to coding, it scored 36.6 points in the MBPP test. These figures represent a substantial improvement over its predecessor.

So how did Google create this little wonder? Gemma 2 2B was trained on a massive dataset of 2 trillion tokens using Google’s advanced TPU v5e hardware. This training process allowed the model to pack a lot of information into its compact frame. Gemma 2 2B is also multilingual, expanding its potential use cases worldwide. This makes it a versatile tool for developers and researchers working on international projects.

Gemma 2 2B’s success challenges the idea that bigger is always better in AI. Its performance shows that with the right training techniques, efficient architectures, and high-quality data, smaller models can punch far above their weight class. This development could shift the focus in AI research from building ever-larger models to improving smaller, more efficient ones, a change that could have far-reaching implications for the field and potentially make AI more accessible and environmentally friendly.

Polishing the future of AI

Gemma 2 2B represents a growing trend in AI towards more efficient models. As concerns grow over the environmental impact and accessibility of large language models, tech companies are looking for ways to create smaller systems that can run on everyday hardware.
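
To put “everyday hardware” in perspective, here is a rough back-of-the-envelope estimate of how much memory the model’s weights alone would need at different numeric precisions. It uses the 2.6 billion parameter figure quoted above; the function name and the list of precisions are illustrative assumptions, and the estimate ignores activations, caches, and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter count taken from the article; precisions are common choices, not a
# statement about how Gemma 2 2B is actually shipped or served.
PARAMS = 2.6e9
PRECISIONS = [("float32", 4), ("bfloat16", 2), ("int8", 1), ("int4", 0.5)]

for name, nbytes in PRECISIONS:
    print(f"{name}: {weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

At 16-bit precision that works out to roughly 5.2 GB of weights, which is why a model of this size is a plausible fit for a consumer GPU or a recent laptop, while a model ten times larger generally is not.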

The success of Gemma 2 2B also highlights the importance of model compression and distillation techniques. By effectively condensing knowledge from larger models into smaller ones, researchers can create more accessible AI tools without sacrificing performance.
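
For readers curious what “condensing knowledge” can look like in practice, below is a minimal sketch of the classic soft-target distillation loss, in which a small student model is trained to match the output distribution of a larger teacher. This is a generic textbook formulation, not a description of Google’s actual training recipe; the function names, the temperature value, and the toy logits are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Scaled by temperature**2, as is conventional, so the loss magnitude stays
    comparable across different temperature settings.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Toy example: scores over a 3-token vocabulary (made-up numbers).
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, close_student))  # small: student agrees with teacher
print(distillation_loss(teacher, far_student))    # larger: student disagrees
```

The loss is zero when the student reproduces the teacher’s distribution exactly and grows as the two diverge, so minimizing it pushes the smaller model towards the larger model’s behaviour.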

This approach not only reduces the computational power required to run these models but also addresses concerns about the environmental impact of training and running large AI systems. This is a win-win situation that could shape the future of AI development.

Gemma 2 2B proves that when it comes to AI, it’s not the size that matters, but how you use it. This small but powerful model challenges our assumptions about AI and paves the way for a new generation of efficient, powerful, and accessible AI systems. It’s clear that this little gem will shine in the world of AI.

Featured image credit: Google