With ChatGPT Advanced Voice Mode, OpenAI is paving the way for realistic conversations with chatbots. We can already correspond with AI as we would with a person; now the company wants us to talk to it like one, too. Let’s take a look at ChatGPT Advanced Voice Mode, which is currently only available to a limited number of Plus members.

This cutting-edge feature, which will revolutionize the way we interact with AI, is generating a debate almost as interesting as the technology itself. Hey, Jarvis, you there?

We’re starting to roll out advanced Voice Mode to a small group of ChatGPT Plus users. Advanced Voice Mode offers more natural, real-time conversations, allows you to interrupt anytime, and senses and responds to your emotions. pic.twitter.com/64O94EhhXK

— OpenAI (@OpenAI) July 30, 2024

ChatGPT Advanced Voice Mode: More than just talk

OpenAI’s latest product is no ordinary voice assistant. ChatGPT Advanced Voice Mode is said to have hyper-realistic voice responses that blur the line between humans and AI. Unlike its predecessor, which chained three separate models together to process voice input, GPT-4o (the engine behind this new feature) is multimodal and does the whole job itself. Imagine a single model that handles voice-to-text conversion, prompt processing, and text-to-speech output in one go. Fluent conversations that make you forget you’re talking to a robot are not far away.
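To make the difference concrete, here is a toy sketch of the two designs. It is an illustration only, not OpenAI’s code or API: the `Audio` type and all four functions are hypothetical stand-ins, and the "models" are stubs. The point it shows is that a chain of three stages hands only text from one stage to the next, so anything that is not text (such as tone of voice) is dropped along the way, while a single model that receives the audio directly can still react to it.

```python
from dataclasses import dataclass


@dataclass
class Audio:
    """Hypothetical stand-in for an audio clip: the words plus how they were said."""
    transcript: str
    tone: str  # e.g. "excited", "sad", "neutral"


def speech_to_text(audio: Audio) -> str:
    # Stage 1 of the chained design: only the words survive transcription.
    return audio.transcript


def generate_reply(prompt: str) -> str:
    # Stage 2: a text-only model answers the text prompt (stubbed here).
    return f"You said: {prompt}"


def text_to_speech(text: str) -> Audio:
    # Stage 3: the reply is voiced without knowing the speaker's tone.
    return Audio(transcript=text, tone="neutral")


def chained_voice_mode(audio: Audio) -> Audio:
    """Three separate models, each seeing only the previous stage's text."""
    return text_to_speech(generate_reply(speech_to_text(audio)))


def single_model_voice_mode(audio: Audio) -> Audio:
    """One multimodal model sees the audio itself, so tone stays available.

    Mirroring the tone back is a toy behaviour chosen for this sketch.
    """
    return Audio(transcript=f"You said: {audio.transcript}", tone=audio.tone)


if __name__ == "__main__":
    clip = Audio(transcript="I got the job", tone="excited")
    print(chained_voice_mode(clip))
    print(single_model_voice_mode(clip))
```

In the chained version the reply always comes back in a neutral tone, because the tone never reached the stage that produced the speech.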

But wait, there’s more! This AI chatbot is said to detect emotional intonation; it can tell if you’re feeling sad or excited. It can even join you for a karaoke session – but don’t expect it to sing any copyrighted tunes. Let’s first take a look at how to use ChatGPT Advanced Voice Mode.

How to Use ChatGPT Advanced Voice Mode

Ready to give your fingers a break and your vocal cords a workout? Here’s how to start chatting it up with OpenAI’s latest creation. ChatGPT Advanced Voice Mode is currently available to a limited number of ChatGPT Plus users, so not every Plus member has access yet. If you are among them, here is what to do:

- Update your app: First of all, make sure you are running the latest version of the ChatGPT app. For Android users, this is version 1.2024.206 or higher. For iOS users, you will need version 1.2024.205 or higher and your device must be running iOS 16.4 or higher. No old technology is allowed at this futuristic party!

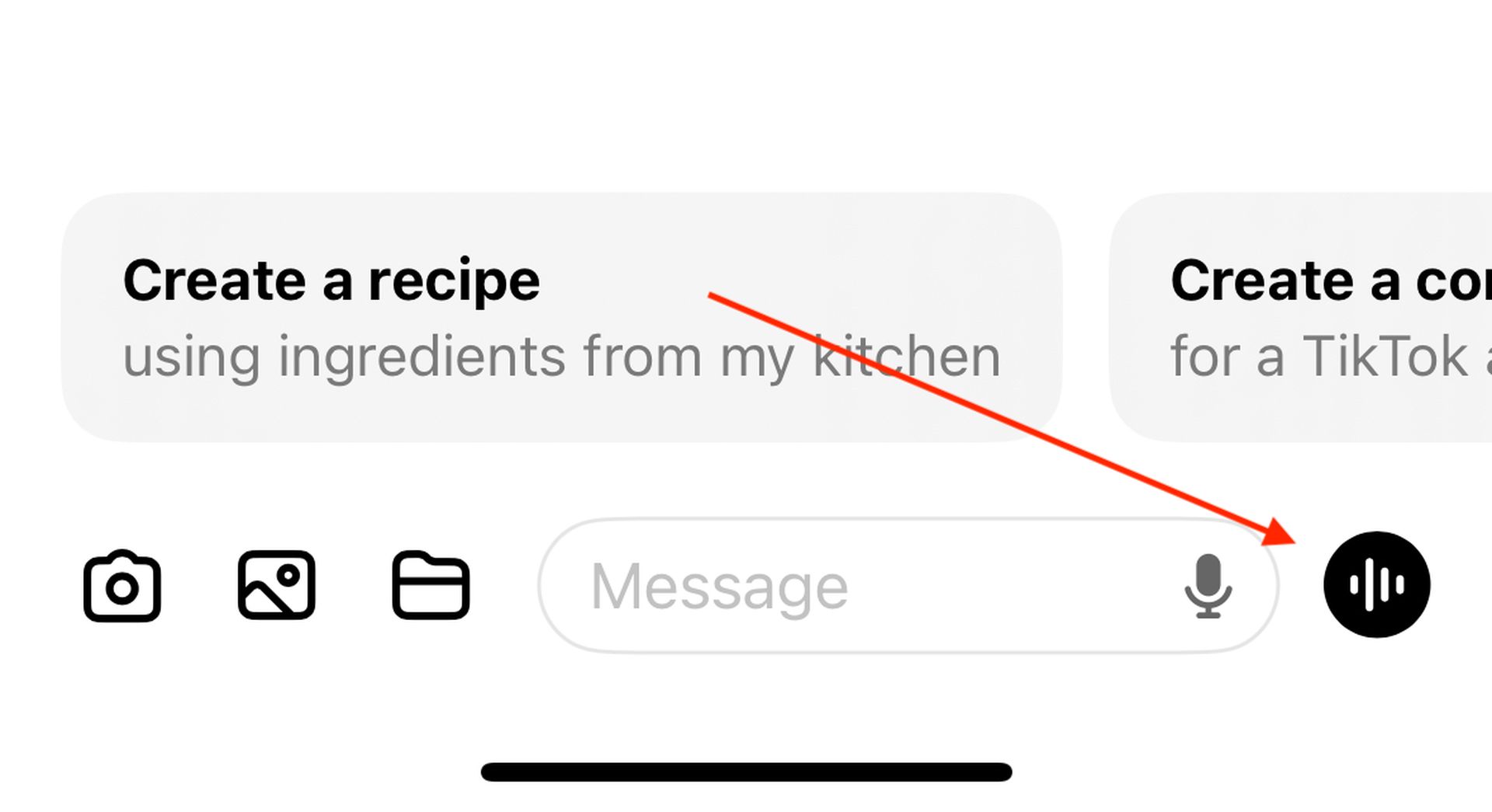

- Find your voice: Once you’re all updated, look for the voice icon lurking in the bottom-right corner of your screen. Give it a tap, and you’re ready to roll.

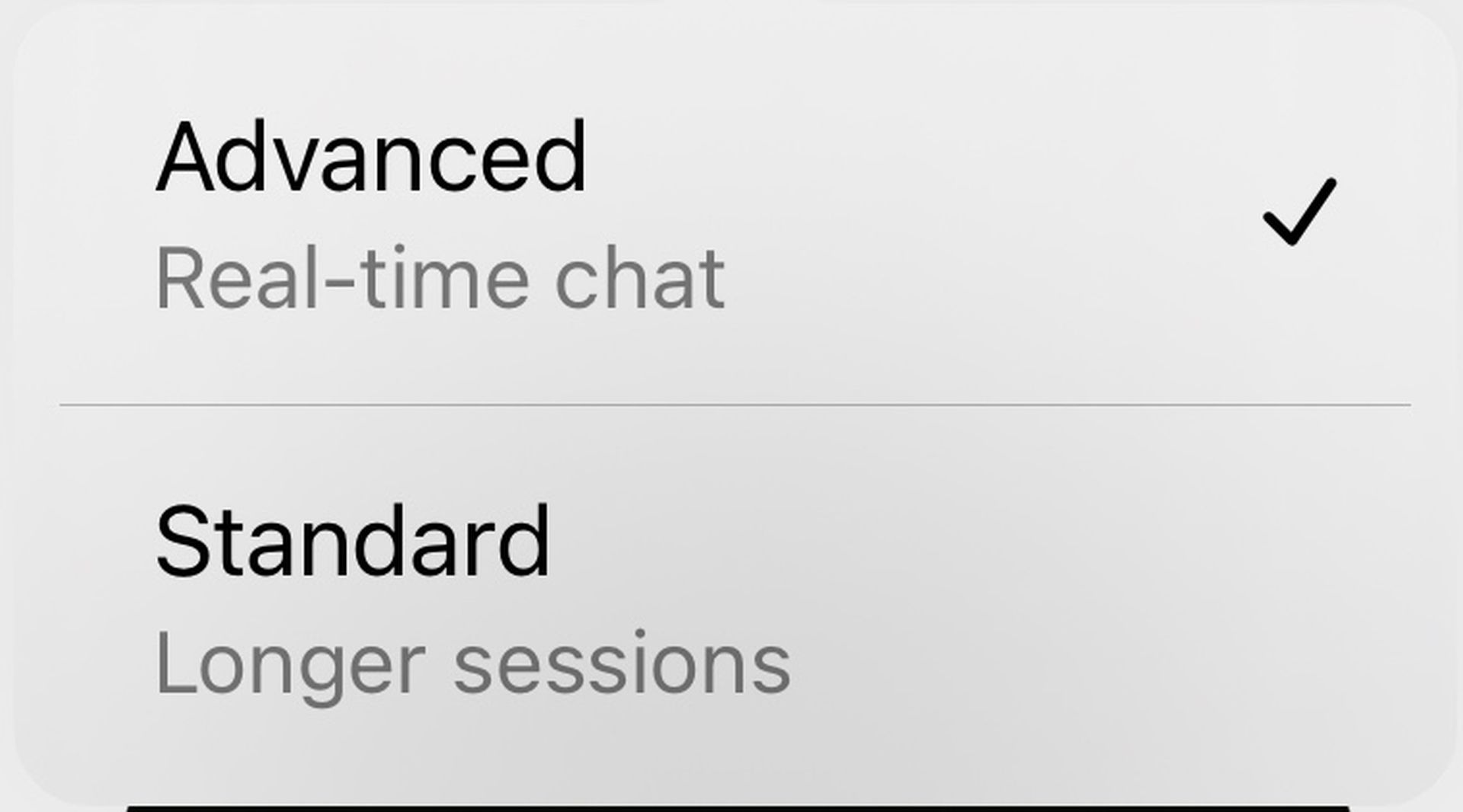

- Choose your fighter: You’ll be presented with a choice between standard Voice Mode and the shiny new Advanced Voice Mode. Pick “Advanced” to experience the full power of GPT-4o.

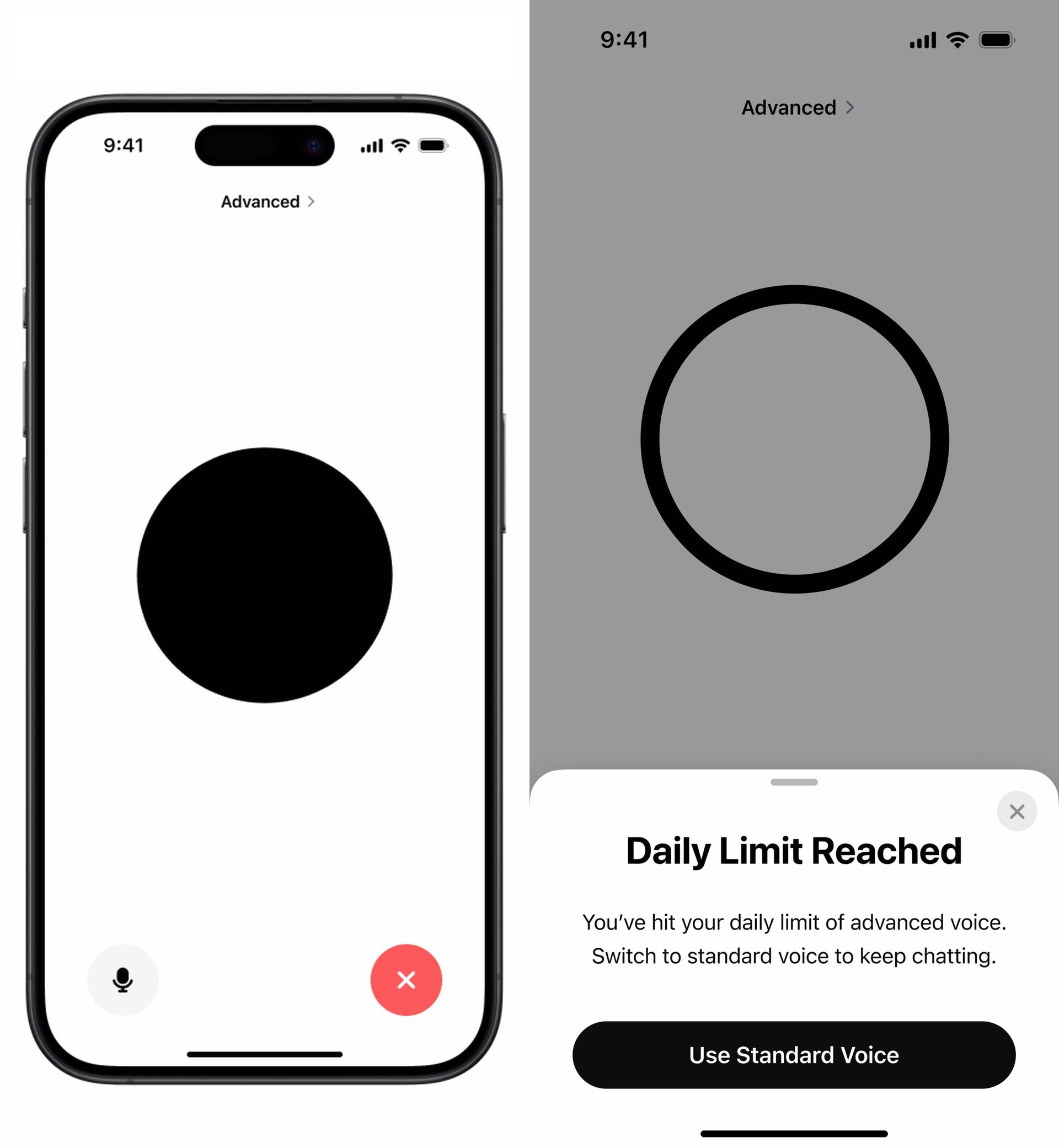

- Speak up: Your microphone should be on by default, but if you are getting the silent treatment, check the microphone icon at the bottom left of the screen. Tap to switch if necessary.

- Chat away: Start talking to your AI friend as naturally as you would talk to a human friend. Remember, it can understand your emotions, so feel free to let your personality shine through.

- Wrap it up: When you’re done chatting with your new AI conversation partner, press the red icon at the bottom right to end the conversation.
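The version requirements from the first step can be checked mechanically. The sketch below is a minimal illustration, assuming version strings are dot-separated numbers as quoted above; the function and constant names are made up for this example and are not part of any OpenAI tool. It only checks versions and cannot tell whether your account is part of the limited rollout.

```python
from typing import Optional, Tuple

# Minimum versions as stated in the article.
MIN_APP_VERSION = {"android": "1.2024.206", "ios": "1.2024.205"}
MIN_IOS_VERSION = "16.4"


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '1.2024.206' into (1, 2024, 206) so parts compare numerically."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_requirements(
    platform: str, app_version: str, os_version: Optional[str] = None
) -> bool:
    """Return True if the app (and, on iOS, the OS) is new enough."""
    platform = platform.lower()
    if platform not in MIN_APP_VERSION:
        return False  # the article only lists Android and iOS
    if parse_version(app_version) < parse_version(MIN_APP_VERSION[platform]):
        return False
    if platform == "ios":
        # iOS additionally needs the operating system at 16.4 or higher.
        if os_version is None:
            return False
        if parse_version(os_version) < parse_version(MIN_IOS_VERSION):
            return False
    return True


if __name__ == "__main__":
    print(meets_requirements("android", "1.2024.206"))
    print(meets_requirements("ios", "1.2024.205", os_version="16.3"))
```

Comparing tuples of integers rather than raw strings matters here: as plain text, "16.10" would sort before "16.4", even though it is the newer release.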

Pro tip: For the best experience, pop in some headphones. And iPhone users, enable Voice Isolation mic mode to avoid any unwanted interruptions. After all, three’s a crowd when you’re trying to have a heart-to-heart with your AI assistant!

Remember, this feature is still in its alpha stage, so it might have a few quirks. But hey, even humans aren’t perfect conversationalists 100% of the time, right?

Scarlett Johansson: A voice of controversy

While OpenAI has been boasting about the advanced capabilities of its new voice feature, ChatGPT Advanced Voice Mode, the road to its release has not been without bumps. Remember the jaw-dropping demo back in May? It turned out that one of the voices, Sky, bore an uncanny resemblance to that of a Hollywood star.

Scarlett Johansson, known for voicing an AI assistant in the movie “Her”, reportedly turned down multiple requests from OpenAI CEO Sam Altman to use her voice. When a demo featuring a voice that sounded suspiciously similar to her own was released, Johansson was quick to hire legal counsel to defend her likeness. OpenAI denied using her voice but removed the controversial voice from its product.

Safety first, starlets second

In response to the controversy, OpenAI put the brakes on the release, taking the time to strengthen its safety measures. The company claims to have tested GPT-4o with more than 100 external red team members speaking 45 different languages. The result? A safer system with four preset voices, created in collaboration with paid voice actors:

- Juniper

- Breeze

- Cove

- Ember

OpenAI spokesperson Lindsay McCallum says that ChatGPT “cannot mimic the voices of others, both individuals and public figures, and will block output that differs from one of these preset voices.” So, if you were hoping to chat with a virtual Scarlett Johansson, you’re out of luck.

As OpenAI gradually rolls out ChatGPT Advanced Voice Mode to all Plus users this fall, the tech world watches with bated breath. Will this be the conversational AI we’ve all been waiting for, or will it open up a new can of worms in the ongoing debate about AI ethics and copyright issues?

Featured image credit: X / OpenAI Edit: Furkan Demirkaya