Mistral Large 2 has arrived, bringing a new level of sophistication to language models. With 123 billion parameters and a 128,000-token context window, the model can handle lengthy texts and complex conversations. It is designed to reduce hallucinations (plausible-sounding but incorrect output) and excels at coding and multilingual tasks.

Dive into how Mistral Large 2’s advanced capabilities are pushing the boundaries of what AI can do, from solving mathematical problems to supporting a wide range of programming languages.

Mistral Large 2: A comprehensive overview

Mistral Large 2 is a significant advance in language models, combining substantial scale with strong performance. Here is why.

Model scale and context window

Mistral Large 2 is distinguished by its 123 billion parameters. Parameters are the learned weights of a language model: they are adjusted during training so the model can capture patterns in its training data and generate text from them. A larger parameter count generally gives a model more capacity to understand and generate complex, nuanced text.

The model also features an extensive 128,000-token context window. A token is a word or a fragment of a word, and the context window is the total amount of text, prompt plus response, that the model can take into account at once. This large window lets Mistral Large 2 stay coherent over very long passages, making it effective for lengthy documents and detailed conversations.
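To make the 128,000-token figure concrete, here is a minimal Python sketch that estimates whether a document fits in the window while leaving room for the model's reply. It assumes roughly four characters per token, a common rule of thumb for English text, not Mistral's actual tokenizer; the function names and the 4.0 ratio are illustrative assumptions, so use the real tokenizer when exact counts matter.

```python
import math

CONTEXT_WINDOW = 128_000  # tokens, as stated for Mistral Large 2


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count; chars_per_token is an assumed heuristic, not the real tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, max_output_tokens: int,
                    context_window: int = CONTEXT_WINDOW,
                    chars_per_token: float = 4.0) -> bool:
    """True if the estimated prompt plus the reserved reply budget fits in the window."""
    # The window is shared by the prompt and the generated reply.
    return estimate_tokens(text, chars_per_token) + max_output_tokens <= context_window


report = "lorem ipsum " * 20_000  # 240,000 characters, roughly 60,000 estimated tokens
print(fits_in_context(report, max_output_tokens=4_000))  # True
```

Reserving the output budget up front matters because a prompt that fills the whole window leaves the model no room to answer.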

Reducing hallucinations

One major challenge with language models is the generation of plausible-sounding but incorrect information, known as hallucinations. Mistral Large 2 has been specifically trained to reduce this issue. It is designed to acknowledge when it does not have sufficient information, rather than generating potentially misleading content. This improvement enhances the accuracy and reliability of the model.

Performance on benchmarks

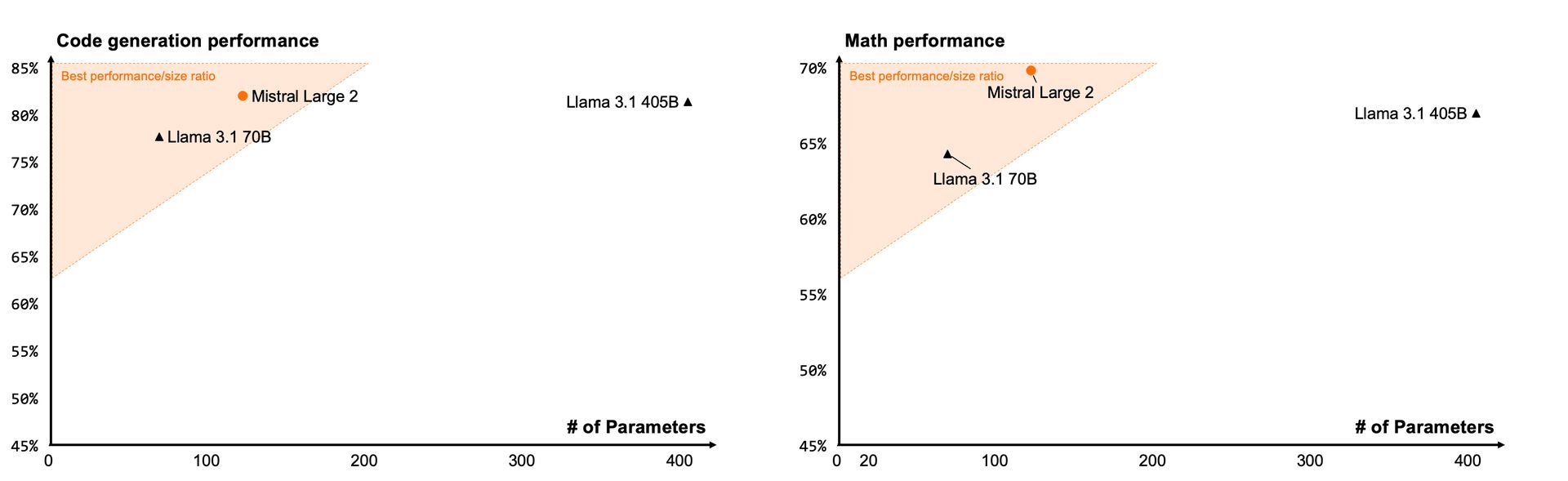

Mistral Large 2 has demonstrated strong performance across various benchmarks:

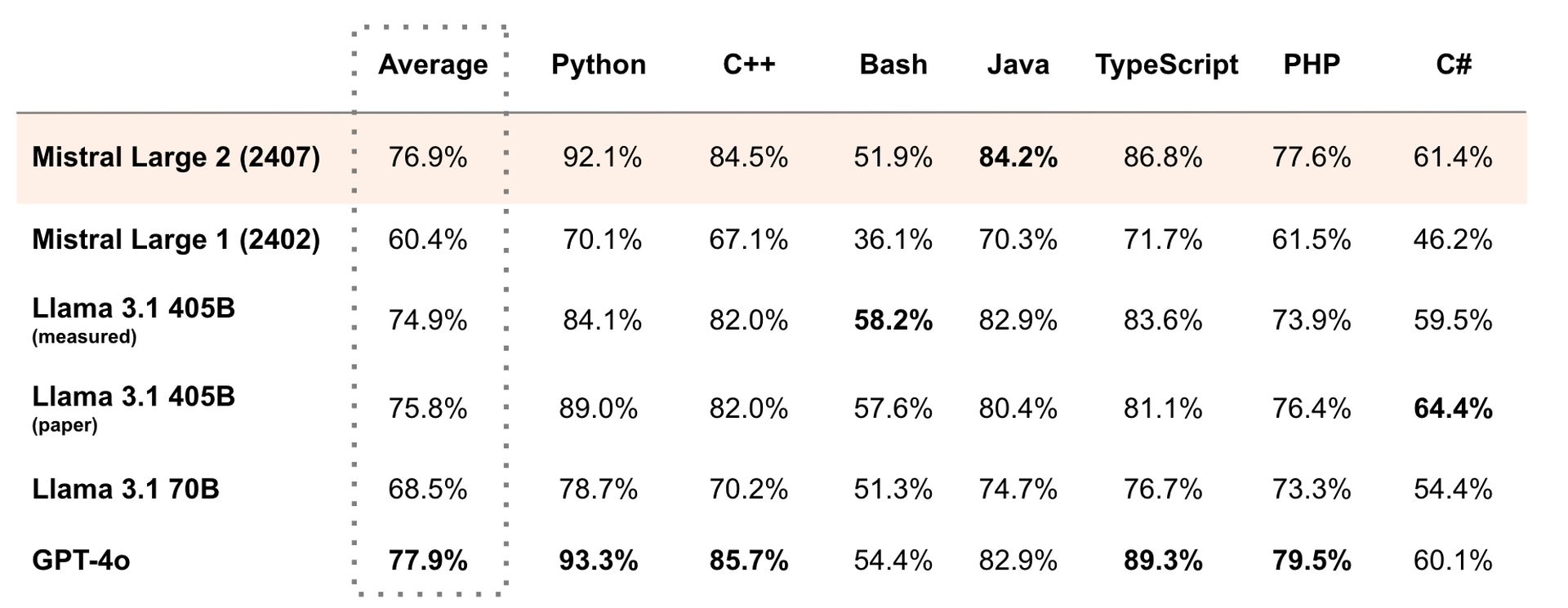

- Coding tasks: On the HumanEval benchmark, which tests programming skills, Mistral Large 2 shows high proficiency, performing comparably to leading models like GPT-4. This indicates its capability to understand and generate code effectively.

- Mathematical problem solving: The model performs well on the MATH benchmark, which evaluates mathematical problem-solving abilities. Although it ranks just behind GPT-4, its performance reflects its capacity to handle complex calculations and logical tasks.

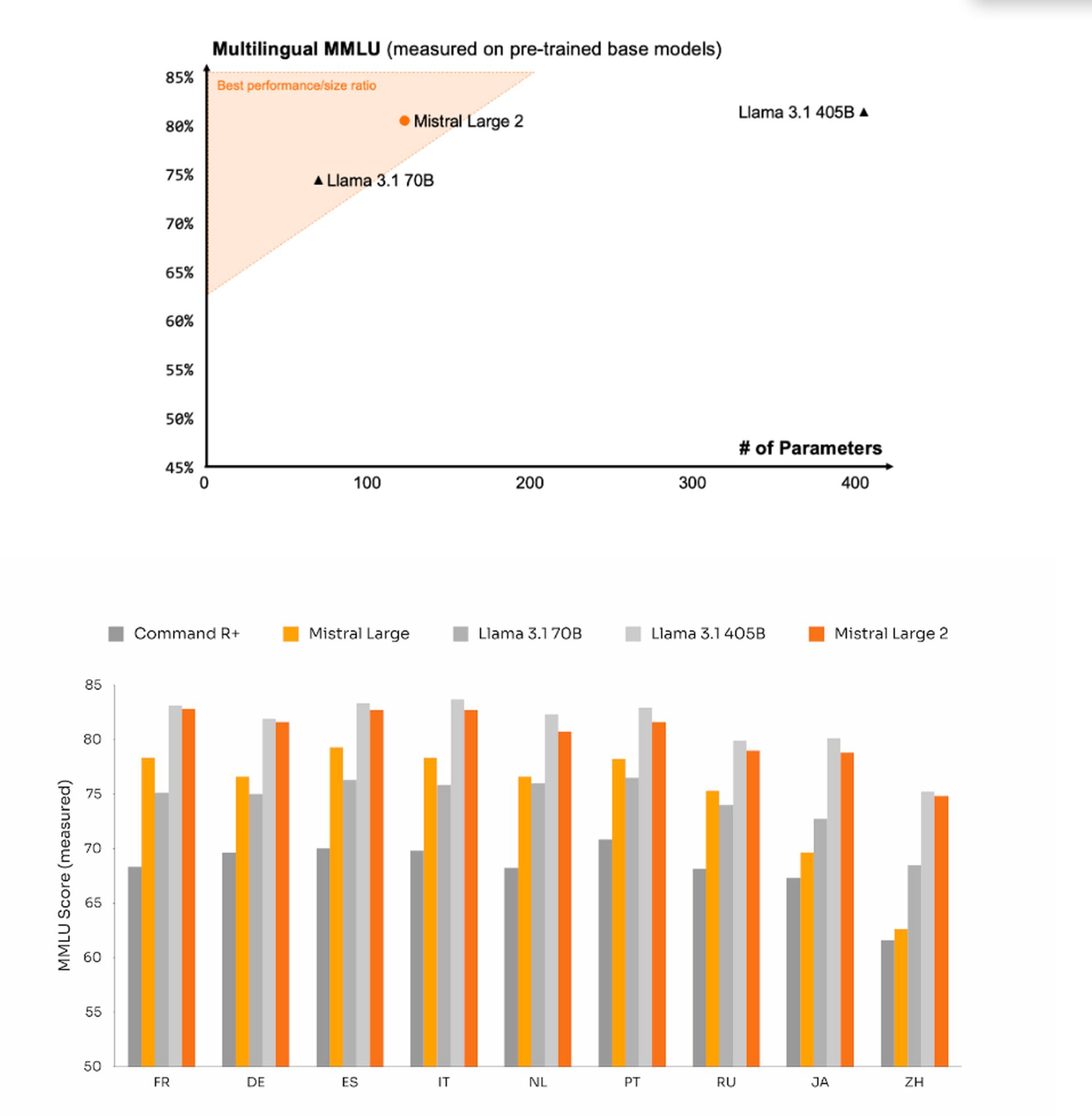

- Multilingual performance: In the Multilingual MMLU test, Mistral Large 2 excels in multiple languages, demonstrating its ability to process and generate text in various linguistic contexts.
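HumanEval results like those above are usually reported as pass@1: the share of problems for which a generated solution passes the problem's unit tests. The sketch below implements the unbiased pass@k estimator from the paper that introduced HumanEval, where n samples are generated per problem and c of them pass. It illustrates how the metric is computed and is not Mistral's evaluation code; the helper names are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every possible set of k samples contains at least one correct sample.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement are all incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Three problems, 10 samples each: all pass, half pass, none pass.
print(benchmark_score([(10, 10), (10, 5), (10, 0)], k=1))  # 0.5
```

For k = 1 the estimator reduces to c / n per problem, so the benchmark score is simply the average fraction of correct samples.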

Technical specifications

Mistral Large 2 is designed to operate efficiently despite its large scale. It is built for single-node inference, meaning it can be served from one multi-GPU machine rather than a cluster, which is significant given its size. Keeping inference on a single node simplifies deployment and suits long-context applications that need to process large amounts of text quickly.
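A back-of-the-envelope calculation shows why single-node deployment is feasible but still demanding. The sketch below counts only the memory needed to hold the weights, assuming 4 bytes per parameter for fp32, 2 for 16-bit formats such as bfloat16, and 1 for 8-bit quantization. It ignores the KV cache for long contexts and other runtime overhead, and the 80 GB per-GPU figure is an illustrative assumption, not a stated hardware requirement.

```python
import math

PARAMS = 123e9  # Mistral Large 2 parameter count

# Assumed bytes per parameter for common storage formats (weights only).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus(params: float, bytes_per_param: float, gpu_memory_gb: float = 80.0) -> int:
    """Fewest GPUs whose combined memory holds the weights (ignores KV cache and overhead)."""
    return math.ceil(weight_memory_gb(params, bytes_per_param) / gpu_memory_gb)


for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt}: {weight_memory_gb(PARAMS, nbytes):.0f} GB of weights, "
          f"at least {min_gpus(PARAMS, nbytes)} x 80 GB GPUs")
```

In bf16 the weights alone take about 246 GB, so a single server with eight 80 GB GPUs holds them with headroom left for the KV cache, which is consistent with serving the model on one node.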

Coding capabilities

The model supports over 80 programming languages, including popular ones like Python, Java, C, C++, JavaScript, and Bash. This broad support is a result of extensive training focused on programming tasks, making Mistral Large 2 a versatile tool for developers and those working with code.

Multilingual capabilities

Mistral Large 2 is capable of processing and generating text in numerous languages, including:

- European languages: French, German, Spanish, Italian, Portuguese, Russian

- Asian and Middle Eastern languages: Arabic, Hindi, Chinese, Japanese, Korean

This extensive language support allows the model to handle various multilingual tasks and applications.

The missing part

Mistral Large 2 does not currently offer multimodal capabilities, which involve processing both text and images simultaneously. This is an area where other models, such as those from OpenAI, currently have an advantage. Future developments may address this gap.

How to use Mistral Large 2

Mistral Large 2 is available through Mistral's own API platform, la Plateforme, as well as through several major cloud providers.

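For experimentation, Mistral also offers access through its ChatGPT competitor, le Chat. The model's weights are published, which makes it more accessible than many closed competitors, but it is not open source: the weights are released under the Mistral Research License, which permits research and non-commercial use, and commercial use requires a paid license.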
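For programmatic access through Mistral's API, a request is a JSON POST to a chat-completions endpoint. The minimal sketch below uses only Python's standard library. The endpoint URL and the model name mistral-large-2407 reflect Mistral's public API around the model's release and should be checked against Mistral's current API documentation; the helper names and the MISTRAL_API_KEY environment variable are hypothetical choices for this example.

```python
import json
import os
import urllib.request

# Assumed endpoint and model identifier; verify against Mistral's API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request with one user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    # MISTRAL_API_KEY is a hypothetical environment variable name.
    request = build_chat_request(prompt, os.environ["MISTRAL_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumes the common chat-completions response shape: choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the key set, a call such as ask("Write a Bash one-liner that counts the lines in all .py files.") sends the request and returns the reply text. Separating request construction from sending keeps the payload easy to inspect and test without network access.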

So, is Mistral’s new Large 2 model large enough?

Mistral's Large 2 model is indeed quite large, with 123 billion parameters, making it one of the larger language models available. This scale allows it to handle complex text generation tasks and maintain coherence over long passages. Its 128,000-token context window further enhances its ability to process and generate detailed, lengthy documents.

In addition to its sheer size, Mistral Large 2 has been optimized to minimize issues like generating incorrect information, improving its reliability. It also performs well across various benchmarks, including coding and mathematical problem-solving, and supports multiple languages. So, yes, Mistral Large 2 is impressively large and capable, meeting the needs of many advanced AI applications.