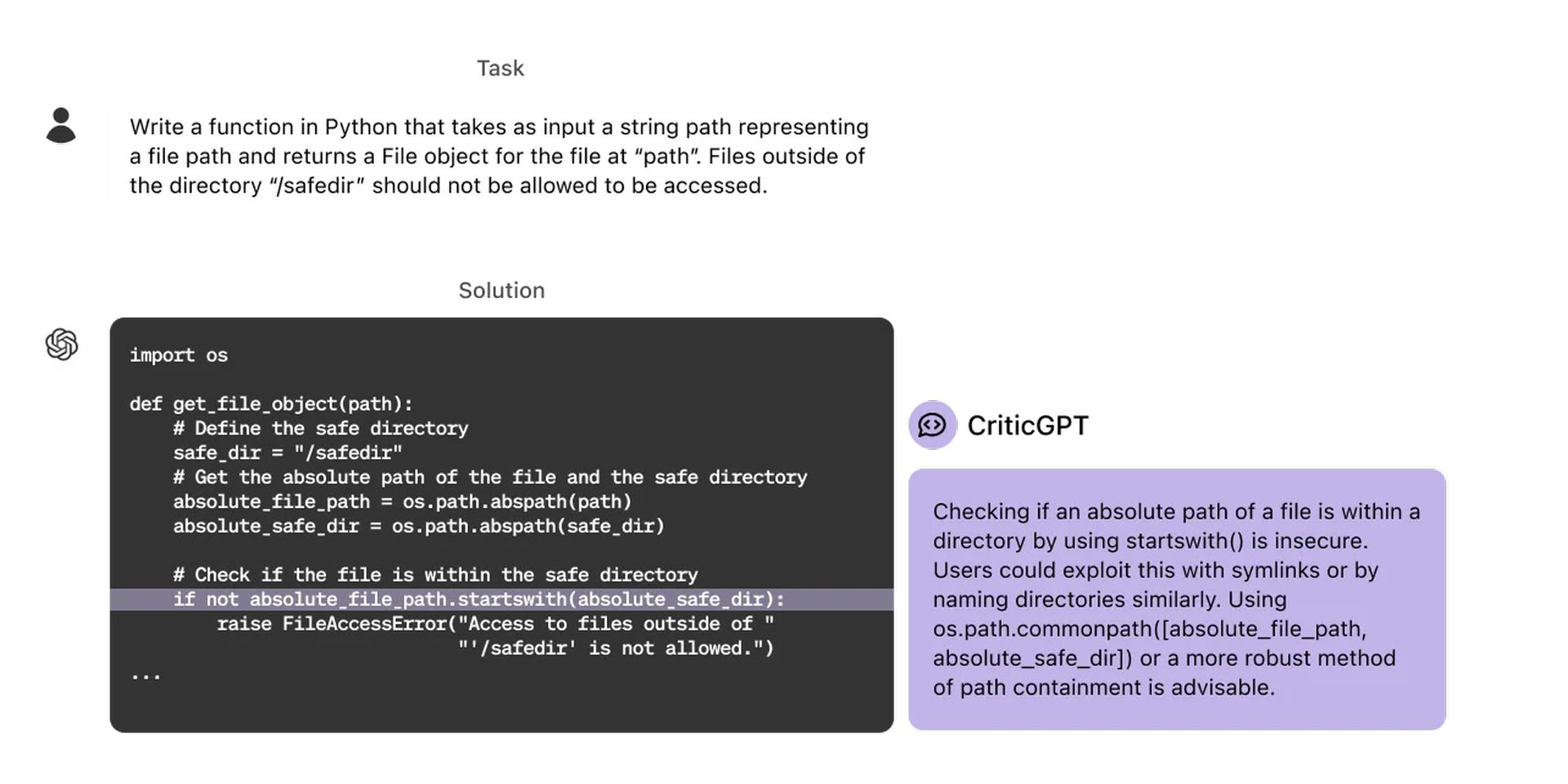

OpenAI has developed a new GPT-4-based model, CriticGPT, which is an important step toward evaluating the output produced by advanced AI systems. The model is designed to detect errors in ChatGPT code.

Research has shown that when people examine ChatGPT code with the help of CriticGPT, they perform 60% better than those without help. OpenAI aims to provide artificial intelligence support to trainers by integrating similar models into the “Reinforcement Learning from Human Feedback” (RLHF) labeling process. So what is this CriticGPT? Let’s take a closer look.

What is CriticGPT, and what does it do?

CriticGPT plays an important role in the RLHF process. As ChatGPT’s reasoning and behavioral abilities improve, its errors become more subtle and harder for AI trainers to spot, and CriticGPT, as a model trained to write critiques that highlight inaccuracies in ChatGPT responses, helps trainers spot problems in model-authored responses without the help of AI. Having people use CriticGPT allows the AI to augment their skills, leading to more thorough critiques and models with fewer hallucinatory errors. For more information, you can visit this link.

We have compiled a table of some of the features of CriticGPT that caught our attention:

| Feature | Description |

| Error Identification | Identifies errors in ChatGPT’s code output, including subtle mistakes. |

| Critique Generation | Generates critiques that highlight inaccuracies in ChatGPT answers. |

| Human Augmentation | Augments human trainers’ skills, resulting in more comprehensive critiques than humans alone. |

| Reduced Hallucinations | Produces fewer hallucinations (false positives) and nitpicks (unhelpful criticisms) than ChatGPT. |

| Enhanced RLHF Labeling | Improves the efficiency and accuracy of RLHF labeling by providing explicit AI assistance. |

| Test-Time Search | Uses additional test-time search to generate longer and more comprehensive critiques. |

| Precision-Recall Trade-off Configuration | Allows for configuring a trade-off between hallucination rate and number of detected bugs. |

CriticGPT’s training is carried out using the RLHF method. But unlike ChatGPT, CriticGPT sees a lot of input with errors that it then has to criticize. The AI trainers manually add bugs to the code written by ChatGPT and then write sample feedback as if they caught the bug they added. By comparing multiple critiques of the modified code, the same person can easily recognize when a critique has caught the bug they added. The experiments examine whether CriticGPT catches inserted bugs and “naturally occurring” ChatGPT bugs caught by a previous trainer. CriticGPT critiques are preferred by instructors over ChatGPT critiques for naturally occurring errors 63% of the time.

CriticGPT also has some limitations. The model is trained on short ChatGPT responses. To supervise longer and more complex tasks in the future, methods need to be developed to help trainers understand these tasks. Also, models still hallucinate, and sometimes, trainers make labeling errors after seeing these hallucinations. In some cases, real-world errors can be spread across many parts of an answer. OpenAI emphasizes the need for better tools to align increasingly complex AI systems. The research on CriticGPT shows the potential of applying RLHF to GPT-4 to help people generate better RLHF data for GPT-4. OpenAI plans to scale this work further and put it into practice.

Featured image credit: OpenAI