Last April, Meta announced Meta Llama 3, the latest generation of its large language model. The model introduces several improvements over earlier versions and is intended to make AI technology available to a wider range of users and applications.

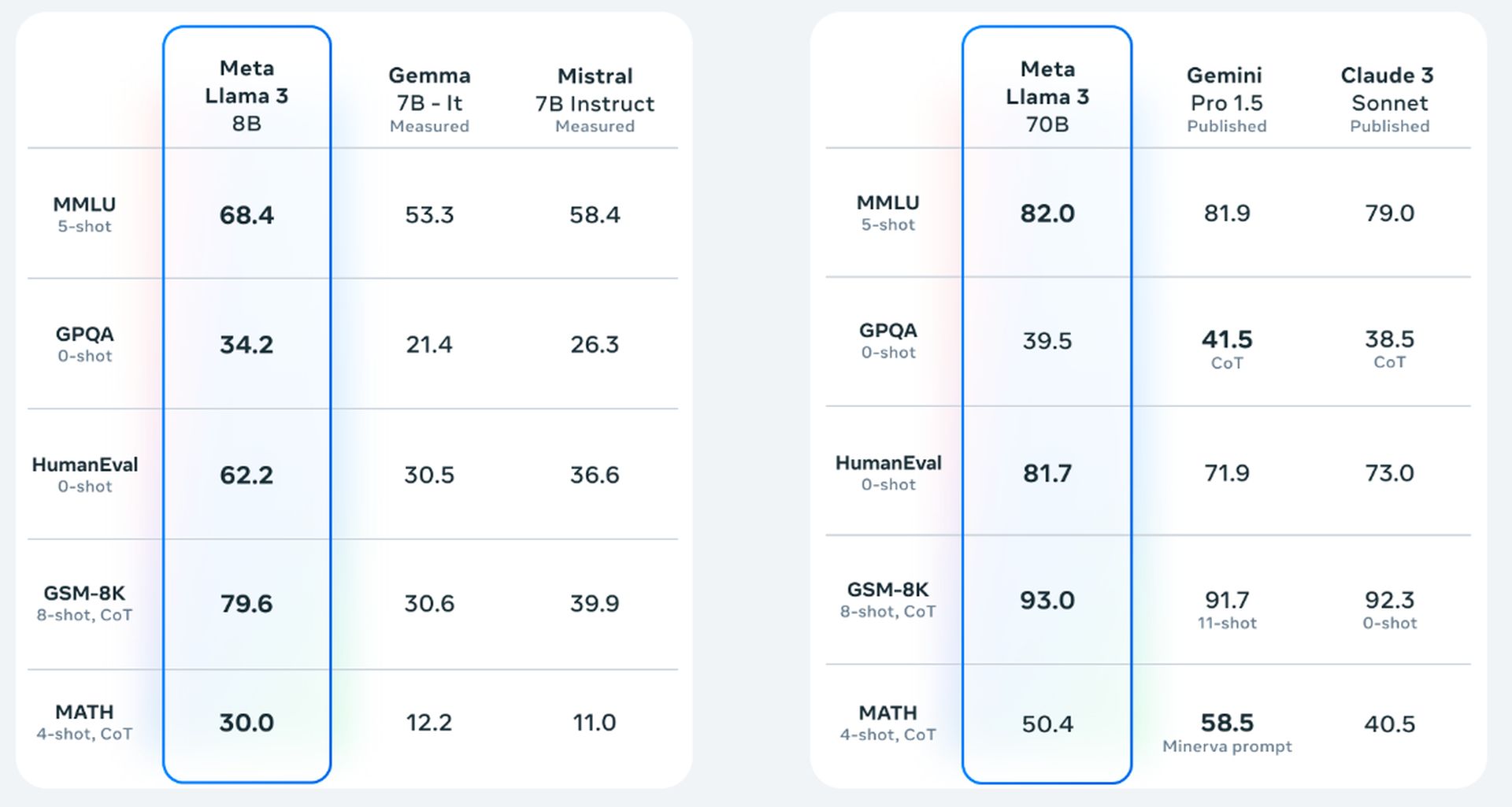

We covered the Llama 3 benchmark results last month. Now we have more comprehensive information.

Here is all we know about Llama 3…

Llama 3: Highlights and innovations

Improving performance and capacity

Llama 3 is available in two sizes, with 8 billion and 70 billion parameters. These models are designed for stronger language processing, text generation, and complex problem-solving than their predecessors. In particular, they aim to improve accuracy and speed, so the models can answer more challenging questions and deliver a better user experience.

Expanding application areas

Llama 3 gives developers pre-trained models that they can fine-tune to their own needs. For example, in areas such as e-commerce, healthcare, and customer service, a fine-tuned model can draw on Llama 3’s natural language processing capabilities to give more accurate and human-like responses to user queries.

Open-source approach

Meta’s open-sourcing of Llama 3 allows the global AI community to study the technology, adapt it to their own projects, and develop innovative solutions. Providing an open-source model makes it easier for researchers and developers to share knowledge with each other, contributing to the faster advancement of AI technologies.

Enhanced security features

Llama 3 comes with several security features designed to prevent abuse. Tools such as Llama Guard 2, Code Shield, and CyberSec Eval 2 have been developed to ensure the model can be used safely. These tools specifically detect potential malicious uses of the model, creating a secure AI environment.

Future plans and improvements

Meta plans to continuously improve Llama 3 and expand the model’s capabilities. In particular, the company aims to add features such as multimodality and multilingualism, as well as a longer context window and stronger general capabilities. Such enhancements would let the model work with more complex and diverse data and support a wider range of uses.

Llama 3 architecture

The development of Llama 3 is based on key elements such as model architecture, pre-training datasets, scaling, and instruction-based fine-tuning. The large-scale datasets and advanced algorithms used during the model’s training significantly improve its performance. In addition, innovative techniques applied during the model’s training enable the AI to learn faster and more effectively.

Llama 3 system requirements

You can see the system requirements in the table we have prepared for you below:

| Component | Requirement |
|---|---|
| CPU | Modern CPU with at least 8 cores |
| GPU | Nvidia GPUs with CUDA architecture (RTX 3000 series or later) |
| RAM | 16 GB (for 8B model), 32 GB or more (for 70B model) |
| Disk Space | Several terabytes of SSD storage for larger models (70B) |
| Operating System | Linux (preferred for large-scale operations) or Windows |
| Python | Python 3.7 or above |
| Machine Learning Frameworks | PyTorch (recommended) or TensorFlow |
| Additional Libraries | Hugging Face Transformers, NumPy, Pandas |
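
As a rough cross-check on the RAM and disk figures, the size of the weights alone can be estimated as parameter count times bytes per parameter. The sketch below is illustrative only: the precision options and the helper name `weight_size_gb` are our assumptions, not Meta guidance, and real-world usage adds overhead for activations, the KV cache, and any datasets or checkpoints.

```python
# Weights-only size estimate: parameters x bytes per parameter.
# The precision options below are illustrative assumptions.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}

# Nominal parameter counts for the two Llama 3 sizes named in the article.
MODELS = {"8B": 8e9, "70B": 70e9}


def weight_size_gb(num_params, bytes_per_param):
    """Return the approximate size of the weights in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def estimate_all(models=MODELS, precisions=BYTES_PER_PARAM):
    """Return {model: {precision: size_in_gb}} for every combination."""
    return {
        name: {p: weight_size_gb(n, b) for p, b in precisions.items()}
        for name, n in models.items()
    }


if __name__ == "__main__":
    for model, sizes in estimate_all().items():
        for precision, gb in sizes.items():
            print(f"{model} @ {precision}: ~{gb:.0f} GB")
```

By this estimate, the 70B weights at 16-bit precision come to roughly 140 GB, so lower-precision formats or multiple GPUs are typically needed to load the larger model.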

Llama 3 license

The Llama 3 license is a custom license created by Meta that allows research and commercial use, subject to its terms. It grants a non-exclusive, worldwide, non-transferable, and royalty-free limited license to use, reproduce, distribute, copy, create derivative works from, and modify the Llama 3 models and related materials.

For more information, please visit Meta’s official license page.

Llama 3 function calling

Llama 3 function calling is a feature that allows the model to trigger specific functions while producing its response. This is a significant advancement from previous versions, as it enables Llama 3 to perform tasks like:

- Code generation and execution: Llama 3 can generate code snippets and pass them to a connected execution tool to run, making it a valuable tool for developers. It can automate coding tasks, generate boilerplate code, and suggest improvements.

- Database queries: The function calling feature allows Llama 3 to interact with databases, fetching relevant information and incorporating it into its responses.

- API interactions: Llama 3 can call external APIs to access real-time information or perform actions, broadening its capabilities and applications.

How does it work?

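Llama 3 function calling uses a structured approach. The model identifies when the user’s request calls for a specific function and emits a structured call naming the function and its arguments. The application hosting the model executes that call and passes the result back, and the model integrates it into its final response.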
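
A minimal sketch of this loop follows, under stated assumptions: the model is replaced by a stub (`fake_model`), the tool call is encoded as a JSON object with `name` and `arguments` keys, and `get_order_status` is a hypothetical tool. None of these names or formats come from Meta; they only illustrate the identify, execute, and integrate steps.

```python
import json


def get_order_status(order_id):
    """Hypothetical tool standing in for a database query or API call."""
    orders = {"A100": "shipped", "A101": "processing"}
    return orders.get(order_id, "unknown")


# Registry of functions the application is willing to run for the model.
TOOLS = {"get_order_status": get_order_status}


def fake_model(messages):
    """Stub standing in for Llama 3 (assumption, not a real API).

    Emits a JSON tool call first, then a final answer once a tool
    result is the most recent message.
    """
    last = messages[-1]
    if last["role"] == "tool":
        return f"Your order is {last['content']}."
    return json.dumps(
        {"name": "get_order_status", "arguments": {"order_id": "A100"}}
    )


def parse_tool_call(text):
    """Return (name, arguments) if text is a JSON tool call, else None."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if (
        isinstance(data, dict)
        and "name" in data
        and isinstance(data.get("arguments"), dict)
    ):
        return data["name"], data["arguments"]
    return None


def run_turn(user_text, model=fake_model, tools=TOOLS, max_steps=3):
    """Run one user turn: call the model, execute tool calls, return the answer."""
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        reply = model(messages)
        call = parse_tool_call(reply)
        if call is None:
            return reply  # plain text, so this is the final answer
        name, args = call
        if name in tools:
            result = str(tools[name](**args))
        else:
            result = f"error: unknown tool {name}"
        # Feed the call and its result back so the model can use them.
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "tool", "content": result})
    return "error: too many tool calls"


if __name__ == "__main__":
    print(run_turn("Where is order A100?"))
```

Keeping execution in the application rather than the model means the application decides which functions are exposed and can validate arguments before anything runs.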

Meta’s Llama 3 language model is poised to have a major impact on the future of AI technologies. With its advanced features, wide usage areas, and open-source approach, Llama 3 enables artificial intelligence to reach a wider audience and technology to develop faster. Meta aims to consolidate its leadership in AI by continuously improving this model and adding new features.

Featured image credit: Dima Solomin / Unsplash