Even as Apple prepares to unveil its broader AI (artificial intelligence) strategy at its Worldwide Developers Conference (WWDC) in June, the company highlighted the AI technologies already available on its devices at its iPad event last Tuesday.

The company promoted the new iPad Pro and iPad Air, calling each "an incredibly powerful device for AI," and mentioned AI-powered features such as image recognition, topic extraction, and live text capture. It also discussed the M4 chip in the new iPad Pro, which includes a neural engine dedicated to speeding up AI workloads.

So the question is: where has Apple been in the AI race all this time? Was it quietly competing all along, or was it caught off guard and late to the race, as many users and analysts assumed?

Apple and the state of artificial intelligence

During its latest iPad event, Apple showcased the new iPad Pro and iPad Air, branding each "an incredibly powerful device for AI." The iPad Pro uses the new M4 chip and the iPad Air uses the M2, and both feature neural engines designed to accelerate AI workloads. Capabilities such as image recognition, topic extraction from text, and live text capture are likely only the beginning. These features suggest that Apple is committed to integrating more sophisticated AI technologies into its devices.

However, the question that looms large is why Apple seemed to lag in the AI race. Apple has built a neural processing unit (NPU), which it calls the Neural Engine, into its chips since the A11 Bionic in 2017, long before AI became a buzzword. The NPUs in the M1 and M2 chips, for instance, were seen as giving these processors an edge over competitors in on-device machine learning tasks. Yet Apple's strategy appeared reactive rather than proactive, especially as the industry's focus shifted from hardware innovation to software such as AI-driven chatbots.

When Apple wasn't referring to its own AI technology, it pointed to third-party solutions. For example, when talking about the iPad Air, Apple praised Pixelmator's Photomator, which uses AI models trained on 20 million professional images to improve photos with a single click.

This is where the secrecy comes in. Some of it lifted with the cancellation of the Apple Car project, after which many of the engineers who had worked on it were reportedly moved to the unit developing Apple's own artificial intelligence. Another possible explanation for the secrecy is that Apple has not been doing this work entirely in-house but relying on companies it has invested in, and that those efforts have not yet produced sufficient results. Microsoft followed a similar path, but its investment in OpenAI paid off visibly and quickly.

Can Apple keep up with the AI race?

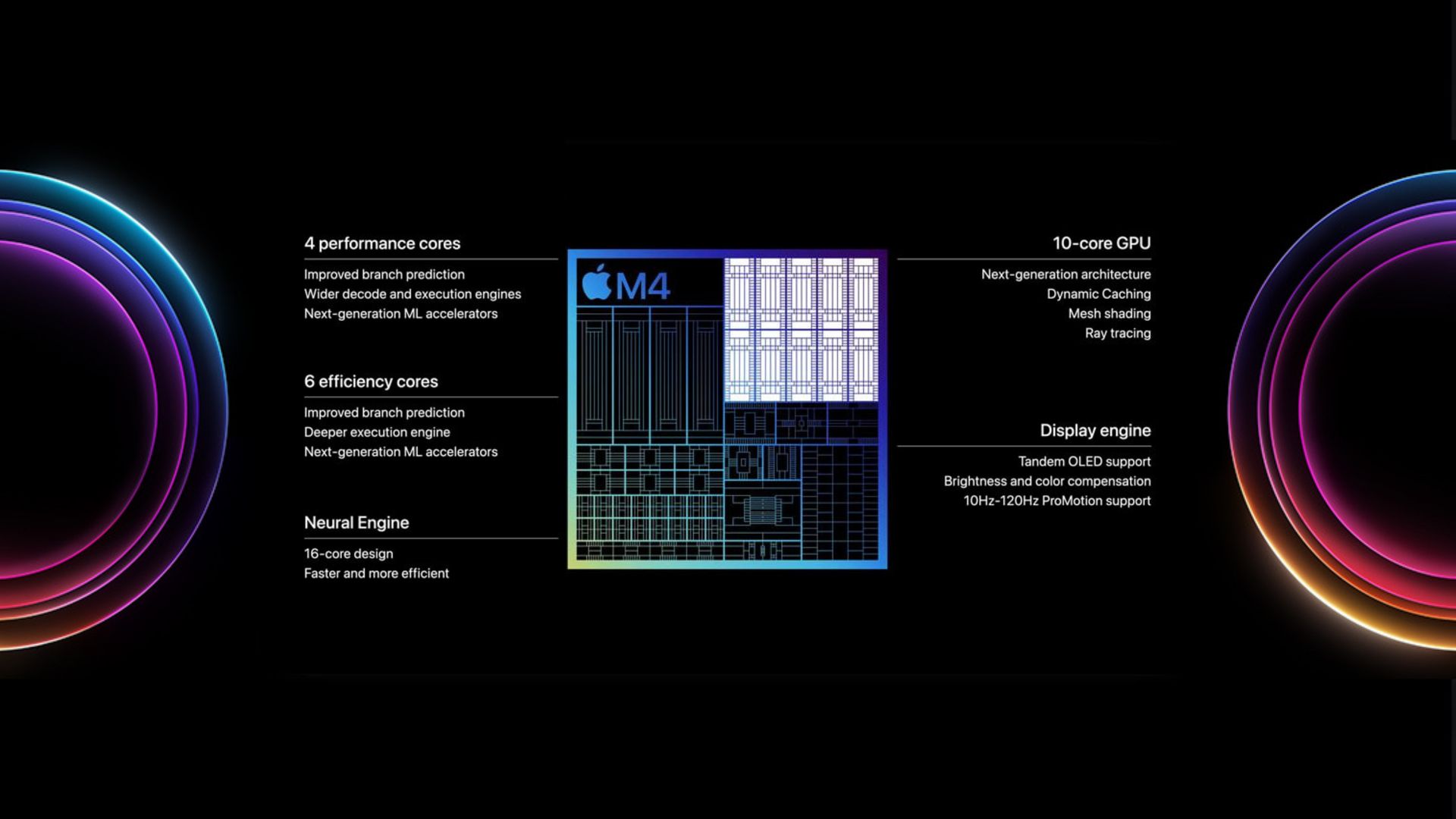

Meanwhile, the iPad Pro has moved from the M2 to the new M4. Apple claims this next-generation processor, which features a new CPU, a next-generation GPU, and next-generation machine learning (ML) accelerators, delivers up to 50% faster CPU performance than the M2. The company also highlighted the chip's neural engine, or NPU, which is dedicated to accelerating AI workloads.

John Ternus, Apple's senior vice president of Hardware Engineering, said during the event, "Right now the chip industry is starting to add NPUs to their processors, while we've been building our industry-leading neural engine into our chips for years."

Users are curious about possible use cases for these hardware advances. Apple has yet to elaborate, but it may use WWDC to introduce an iPadOS release with new AI features or other developer-focused announcements.

But as noted earlier, what matters is not whether a chip has an NPU but how that NPU is used. Consider Siri: if it were a Microsoft product, backed by Microsoft's partnership with OpenAI, would it still look like the Siri Apple offers today?

Everything points to June

Although Apple tries not to look as though it is behind, its "Let Loose" iPad event and WWDC mark the start of its real test in artificial intelligence. If the company's scale and its track record of visionary work carry over to its AI efforts, we could see very successful products. After all, this is the company that accelerated the shift to the smartphone.

While Apple only briefly touched on existing iPadOS features, such as the Stage Manager multitasking view and Reference Mode, a display mode designed for creative professionals, it hinted that improved AI capabilities will soon be available to iPadOS app developers. The company noted that iPadOS offers advanced frameworks such as Core ML and that developers can tap the neural engine to deliver "powerful AI capabilities" on the device.

Apple also described problems that AI can solve in areas such as photography. "We've all experienced that it's difficult not to cast shadows when scanning a document in certain lighting conditions," Ternus said. He added that the new iPad Pro solves this problem: AI automatically detects documents such as forms and, if shadows get in the way, instantly takes multiple photos. The result, he said, is a much better scan.

As WWDC 2024 approaches, all eyes will be on Apple to see how it articulates its AI strategy. Will Apple introduce a groundbreaking AI-powered feature, or a new partnership that could shift industry dynamics? What seems clear is that Apple intends not just to enter the AI race but to redefine it, in keeping with its legacy of innovation and quality. Expectations for June are high, and Apple's next moves could set the stage for the next generation of AI applications across its wide range of devices.

Featured image credit: Apple