Artificial Intelligence (AI) has made incredible progress in recent years. Yet understanding what an AI hallucination is, and how to overcome it, is still an ongoing process for LLM creators.

The technology of today and tomorrow continues to fascinate us all, especially when it comes to how AI can understand and generate human language. Huge leaps have been made with Large Language Models (LLMs), computer programs trained on massive amounts of text. These LLMs can write many kinds of text, such as poems, code, scripts, musical pieces, emails, and letters.

However, these impressive models have a peculiar problem: they sometimes “hallucinate”.

What is an AI hallucination?

Just as humans can imagine or dream up things that aren’t real, AI can do something similar. An AI hallucination happens when an LLM produces text that seems believable but is factually wrong. Hallucinations range from minor errors to completely made-up stories and nonsensical statements.

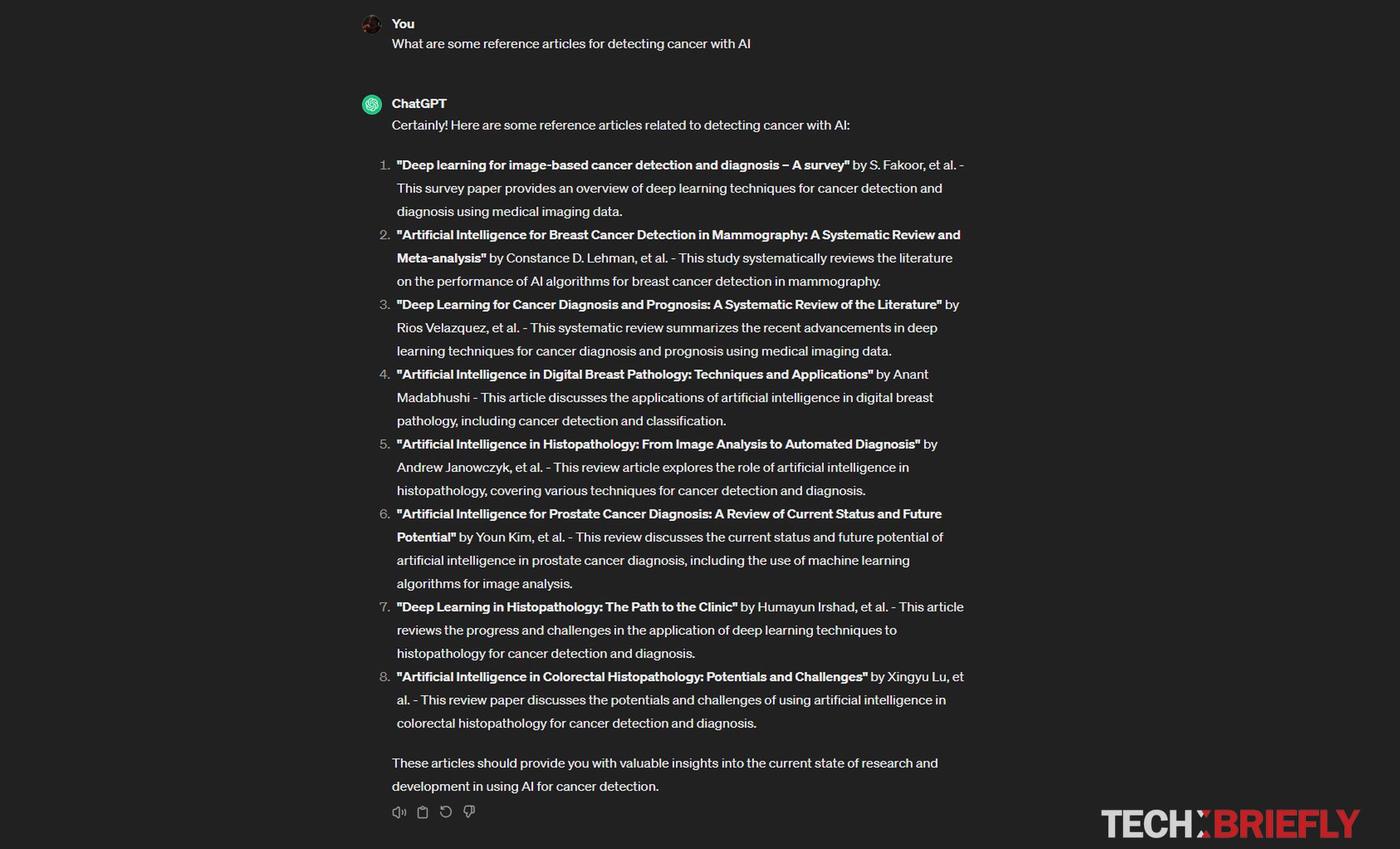

To get a better grip on this issue, let’s look at some examples:

- Mixing up facts: An AI asked to write a news article about an event might include incorrect dates or locations, or misrepresent the people involved.

- Creating false information: An AI tasked with writing a product description could invent features that the product doesn’t actually have.

- Being confidently incorrect: The trickiest thing about AI hallucinations is that they’re often delivered in the same fluent, confident tone as accurate answers, which makes them hard to tell apart from real information.

Why are hallucinations a problem for LLMs?

As the examples above suggest, hallucinations are a significant problem for LLMs for several reasons. First, if people can’t trust the text an LLM generates, they lose faith in the overall usefulness and reliability of the technology. Imagine you’re reading an online summary of a news story written by an LLM. If the summary contains factual errors, you might stop using that source altogether. This is a major problem for any application that relies on LLMs to generate text, such as chatbots, virtual assistants, or automated content creation tools.

Second, AI hallucinations could contribute to the spread of misinformation. In an age where fake news is already a major concern, AI-generated text that seems believable could easily be mistaken for real information. This could harm everything from public discourse to important decision-making processes. For instance, imagine an LLM creating social media posts about a political candidate that contain false information. These posts could mislead voters and sway the outcome of an election. Concerns like this are why Google has restricted Gemini from responding to election-related queries.

Third, businesses or organizations that use LLMs could face reputational damage if their AI systems produce inaccurate or harmful content. Let’s say a company uses an LLM to write product descriptions for its online store. If the LLM invents features that the products don’t actually have, it could lead to customer dissatisfaction and damage the company’s reputation.

Why do AI hallucinations happen?

Now that you have an idea of what an AI hallucination is, why do hallucinations happen? Several factors contribute to this issue:

- How they are trained: LLMs are fed enormous amounts of text data. This data often contains errors, biases, and outdated information, and the model learns from these flawed examples just as it does from correct ones.

- Understanding vs creating: LLMs are good at picking up patterns in language, but they don’t always have a solid grasp of real-world concepts or logic. They can generate something that sounds plausible without it actually being true.

- The quest for fluency: LLMs are designed to produce text that sounds natural and human-like. Because they generate text by predicting likely next words, they tend to “fill in the blanks” with plausible-sounding content where there are gaps in their knowledge, rather than admitting they don’t know. Those filled-in blanks can lead the AI to make things up.

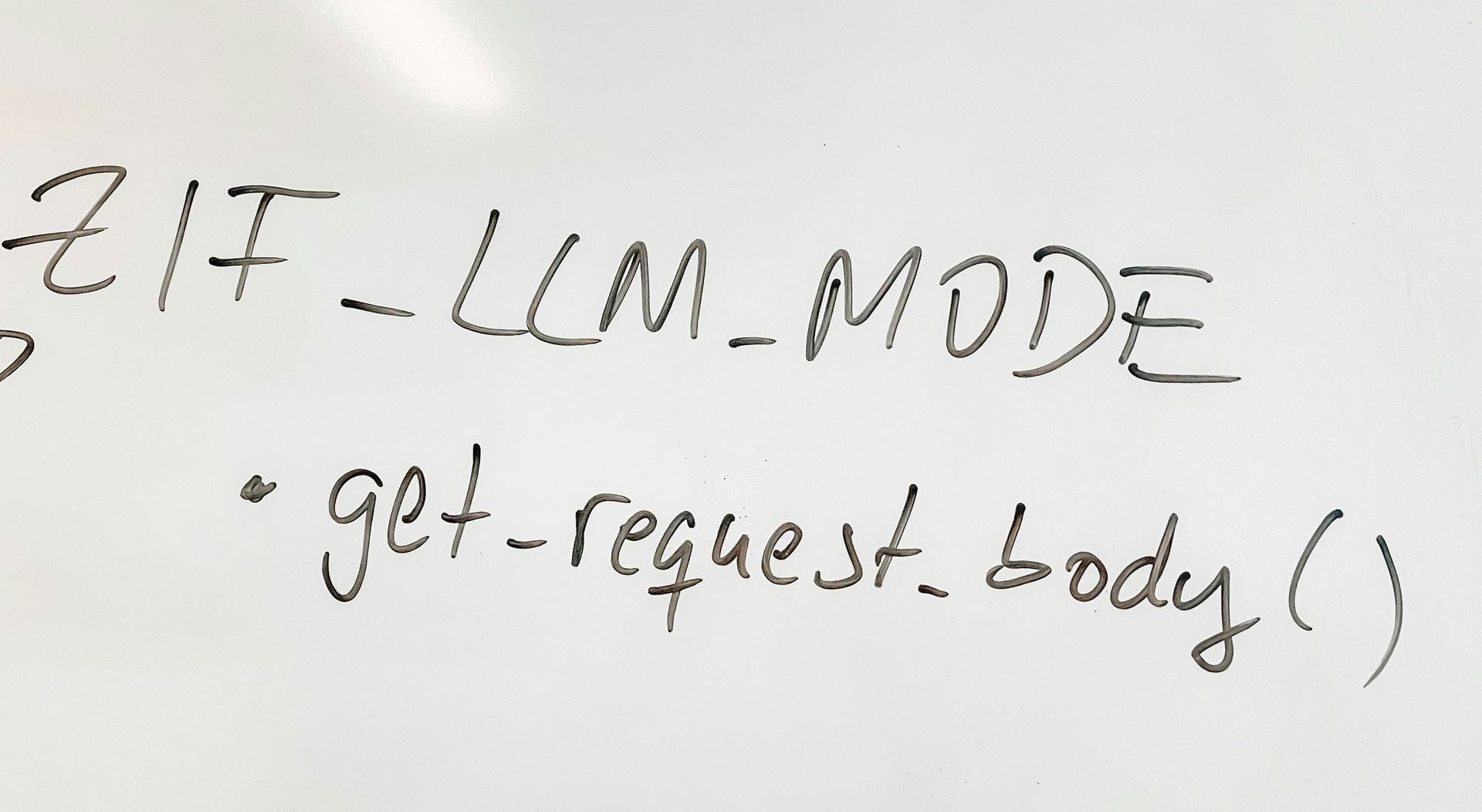

What’s being done to solve this?

Researchers and developers in the AI community are actively working on ways to reduce AI hallucinations.

Some of the approaches include:

- Better training data: Creating carefully curated datasets that are less likely to contain errors and biases, so models learn fewer mistakes in the first place.

- Fact-checking: Developing techniques to cross-reference AI-generated text with reliable sources, helping to verify its accuracy.

- Explainability: Designing LLMs that can explain their reasoning process, which makes it easier to spot potential errors.

- Human-AI collaboration: Building systems where humans and AI work together, making it easier to identify and correct hallucinations.

Meanwhile, Nvidia CEO Jensen Huang has said he believes AI hallucinations are a solvable problem.

AI hallucinations are a complex challenge, but a crucial one to overcome if LLMs are to reach their full potential. As techniques to reduce hallucinations become more sophisticated, they should make LLMs a much more trustworthy and valuable tool for communication, creativity, and knowledge tasks.

Featured image credit: vackground.com/Unsplash