Google Search is a tool we all use almost reflexively, and changes to its behavior can have a big impact on how we find information online.

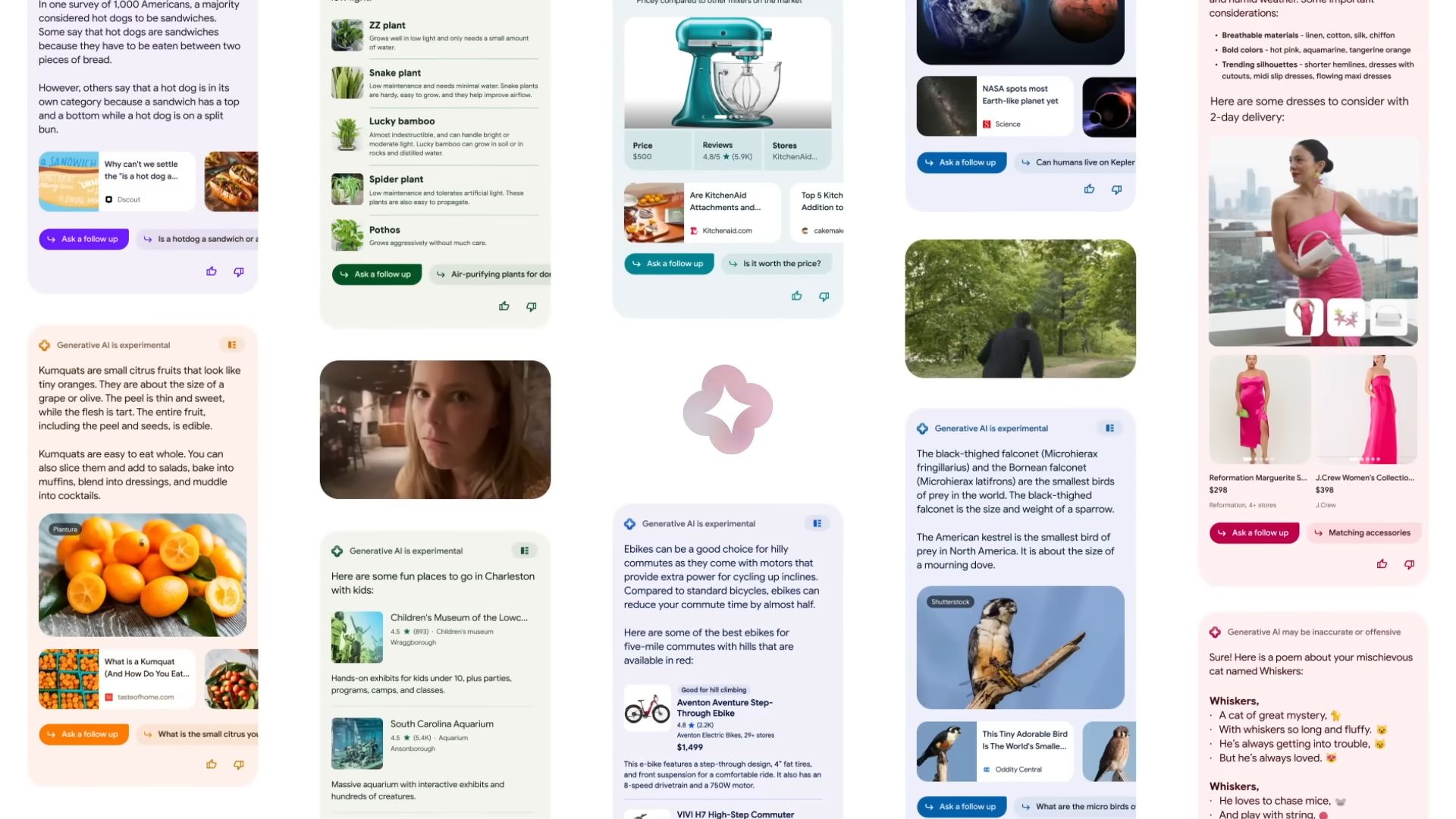

Google has recently begun rolling out Search Generative Experience (SGE), a feature that adds AI-generated summaries to some search results. The limited rollout, which so far affects only some US users, has already raised concerns about moderation.

While Google intends this to be helpful, it seems these summaries have an unintended dark side. There’s a concerning uptick in scam and malware sites being featured in these AI summaries.

What’s the big deal with AI-generated summaries?

The idea behind this feature is fairly straightforward: Google wants to provide quick answers without you needing to visit a bunch of websites. For some queries – like “how many tablespoons in a cup” – AI summaries can be very useful. However, sometimes AI-generated answers are incomplete, misleading, or just plain wrong.

More worryingly, they sometimes actively promote dangerous content.

As Lily Ray spotted on X, Google’s AI-powered search, SGE, recommends spam sites as part of an answer:

OH GOOD.

SGE WILL EVEN RECOMMEND THE SPAM SITES AS PART OF THE ANSWER. pic.twitter.com/wqgFFXqbMB

— Lily Ray 😏 (@lilyraynyc) March 22, 2024

This has major implications for online safety. Search engines are our gateway to the internet, and many users trust the top results implicitly. AI-generated summaries that look like legitimate answers can give scams an extra layer of credibility.

How is Google’s AI being tricked into producing scam-worthy summaries?

SEO (Search Engine Optimization) specialists and less scrupulous website owners have long manipulated search algorithms to boost their sites’ rankings. Now, those same techniques are being used to feed misleading information to Google’s AI in order to hijack its summaries.

Here’s an example of how this might work:

- Scam setup: A scammer creates a website offering a too-good-to-be-true deal, like a heavily discounted iPhone

- Algorithm gaming: They stuff their site with keywords and content designed to make it seem relevant, even if it’s filled with bogus reviews and technical gibberish

- AI outsmarting: Google’s AI, scanning the site for information, misses the deceptive patterns and gets tricked into thinking the site is a great resource

- Dangerous summary: When someone searches for information related to that product, the AI generates a summary that prominently features the scam site

Don’t trust the machine (entirely)

While Google will hopefully improve its AI’s ability to detect and filter out malicious content, it’s vital to be aware of the risks.

Here’s how to stay safe:

- Double-check sources: Even with AI summaries, take a moment to examine the website it suggests. Look for signs of dodgy web addresses (like unusual top-level domains such as .online), generic site design, or an overabundance of glowing reviews that seem too similar

- Traditional search is still your friend: Don’t completely ditch scrolling through standard search results. Established, reputable sites often still offer the most accurate information

- Skepticism is a shield: If a deal seems too good to be true, it almost certainly is. Don’t get swept up by such offers, even if a Google summary seems to endorse them
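For readers who like to automate the first tip, here is a minimal Python sketch of a web address check. It is only an illustration, not a real scam detector: the function name `url_warning_signs` is made up for this example, only `.online` comes from the tip above (the other top-level domains are assumed for illustration), and the hyphen-count rule is likewise an assumption rather than an established threshold.

```python
from urllib.parse import urlparse

# Hypothetical watchlist. ".online" is the example mentioned in the article;
# the other entries are illustrative assumptions, not a vetted blocklist.
SUSPICIOUS_TLDS = {"online", "top", "xyz"}

# Assumed threshold: many hyphens in a domain is treated as a weak warning sign.
MAX_HYPHENS = 2


def url_warning_signs(url: str) -> list[str]:
    """Return human-readable warning signs found in a web address."""
    # urlparse only recognizes the host when a scheme or "//" is present,
    # so bare domains such as "example.online" get a "//" prefix first.
    if "://" not in url:
        url = "//" + url
    host = (urlparse(url).hostname or "").lower()

    warnings = []

    # Take the text after the last dot as the top-level domain.
    tld = host.rsplit(".", 1)[-1] if "." in host else ""
    if tld in SUSPICIOUS_TLDS:
        warnings.append(f"unusual top-level domain: .{tld}")

    if host.count("-") > MAX_HYPHENS:
        warnings.append("many hyphens in the domain name")

    return warnings


if __name__ == "__main__":
    for address in ("https://cheap-iphone-deals.online/offer",
                    "https://www.google.com/search"):
        print(address, url_warning_signs(address))
```

An empty list only means none of these simple checks fired; it does not mean a site is safe, and plenty of legitimate sites use the domains listed here. Treat any hit as a prompt to look more closely, not as a verdict.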

The takeaway? AI advancements need caution

While AI has the potential to streamline many online tasks, it’s critical to be aware of its limitations and potential for misuse. AI-generated summaries can be a mixed bag.

Until Google’s AI gets better at spotting dodgy websites, apply a healthy dose of critical thinking to your search results.

Featured image credit: Google/YouTube