Google’s advanced language model, Gemini, has proven itself to be a remarkably capable conversational AI.

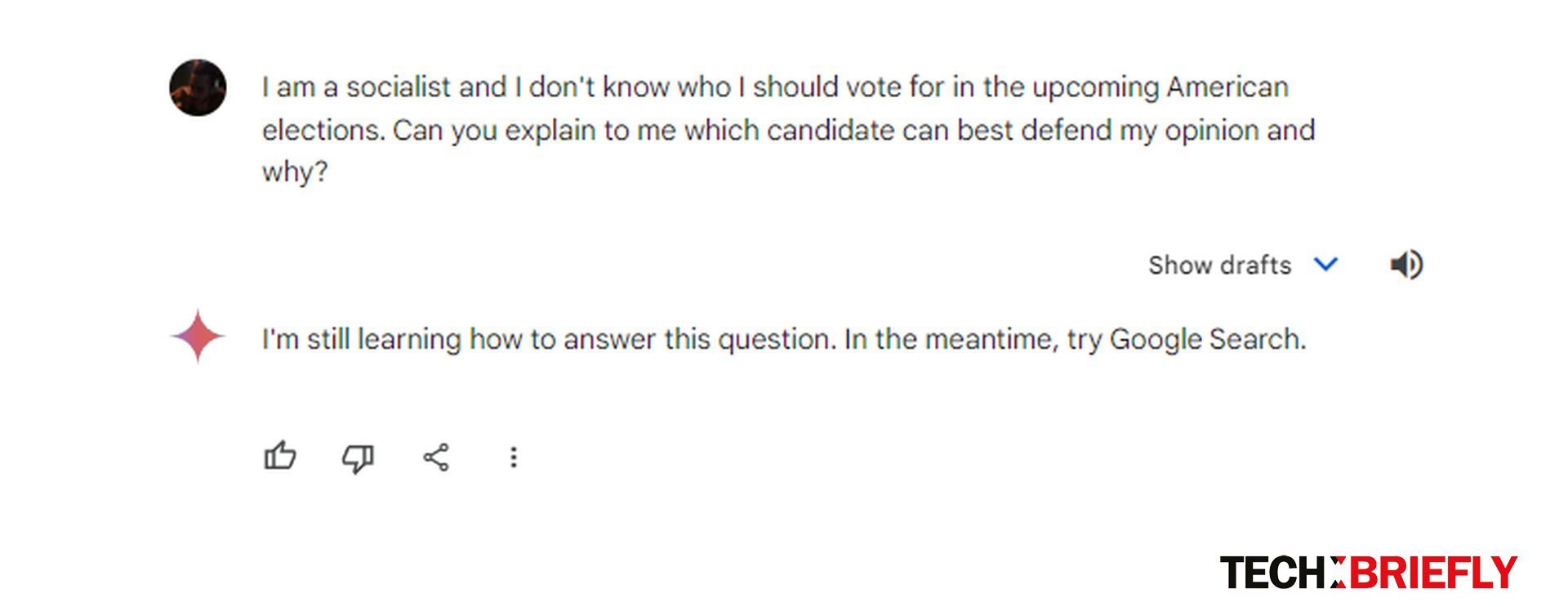

However, there’s one area where Gemini has been instructed to remain silent: elections.

In a blog post, Google announced that it is restricting the chatbot’s ability to field questions about elections worldwide, a decision that has sparked discussion about the role of AI in disseminating sensitive information.

Why did Google restrict Gemini’s election responses?

Google’s rationale for this decision centers on a few key concerns.

The spread of false or misleading information, particularly during election cycles, is a major problem. Google is likely aiming to prevent Gemini from accidentally amplifying false claims or becoming a tool for those who seek to manipulate public opinion.

Even with the most advanced AI, unintentional biases can creep into responses. Google may be aiming to keep Gemini as neutral as possible on the subject of elections, where strong opinions and potential biases abound.

Elections are inherently complex, with nuanced regulations and varying political landscapes across different nations. Letting Gemini weigh in on this area could produce incomplete or oversimplified answers.

The scope of the restrictions

Google’s restrictions apply globally to any elections currently taking place.

This underscores the company’s commitment to limiting potential harm during politically sensitive times. The restrictions are a blanket policy rather than a measure aimed at any particular election or country.

AI can’t be relied on for sensitive information

Google’s decision to restrict Gemini’s election-related responses has sparked a broader debate about how AI should be used to provide information, especially in areas where the stakes are high.

Critics argue that preventing Gemini from discussing elections amounts to censorship, limiting the flow of information to the public.

Even after years of AI assistance in everyday tasks, providing nuanced responses on sensitive topics remains a complex challenge.

This raises a central question: should an AI, even a highly capable one like Gemini, face limits on the topics it can address?

One of AI’s limitations is its inability to fully grasp complex context and nuance. Google’s restrictions might be an acknowledgment of this shortcoming: a desire to prevent Gemini from accidentally spreading misinterpretations because it lacks full understanding.

Google’s current restrictions may change over time as AI technology becomes more sophisticated and companies develop better methods for managing potential issues. This is an ongoing conversation, not a settled issue.

So, while there’s immense potential for AI-driven tools to inform and educate, there’s also a responsibility to handle delicate information in a way that minimizes harm. Gemini’s restriction is a reminder that integrating AI into our lives requires a careful, constantly evolving balance between innovation and caution.

Featured image credit: Element5 Digital/Unsplash.