In a recent blog post, Google unveiled a family of language models under the name Google Gemma AI. These models pack impressive power into a surprisingly compact form, opening the door to innovation in areas where large, resource-hungry models were previously impractical.

Let’s explore what Google Gemma AI is, what it offers, what it costs, and how you can put it to work.

What is Google Gemma AI?

Google Gemma AI is a family of lightweight, open language models whose weights are freely available. Built on the same research and technology as Google’s renowned Gemini models, Gemma’s primary strengths are:

- Accessibility: Runs on laptops, cloud environments, and other less powerful hardware

- Performance: Rivals larger models on certain tasks

- Responsible AI: Prioritizes safety and ethics with built-in tools

Gemma models come in two sizes:

- Gemma 2B: 2 billion parameters, perfect for efficiency-focused applications

- Gemma 7B: 7 billion parameters, ideal for more complex scenarios
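As a rough, back-of-the-envelope check of what "compact" means in practice, the memory needed just to hold a model's weights is about its parameter count times the bytes stored per parameter. The sketch below uses the nominal 2B and 7B counts from the list above. That is an assumption: the released checkpoints are somewhat larger once embedding parameters are counted, and real usage adds activations, the KV cache, and framework overhead on top.

```python
# Nominal parameter counts from the model names (assumption: real
# checkpoints are somewhat larger once embeddings are included).
MODELS = {"gemma-2b": 2e9, "gemma-7b": 7e9}

# Bytes needed to store one parameter in common numeric formats.
# int4 packs two parameters into a single byte.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (1e9 bytes).

    Ignores activations, the KV cache, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Print a small table: every model at every precision.
    for name, params in MODELS.items():
        for dtype in BYTES_PER_PARAM:
            print(f"{name} @ {dtype}: ~{weight_memory_gb(params, dtype):.1f} GB")
```

At bfloat16 this gives about 4 GB for Gemma 2B and 14 GB for Gemma 7B, which suggests why 8-bit or 4-bit quantized formats are attractive on phones and laptops.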

Google Gemma AI’s standout feature is its compact size. This enables developers to deploy cutting-edge AI solutions on devices with limited resources, like smartphones or smart home appliances. It also expands AI’s reach into environments where less powerful hardware is the norm. Despite this efficiency, Gemma models maintain impressive performance levels, often rivaling those of larger language models.

Another key feature is instruction tuning. Each Gemma size is released in an instruction-tuned variant trained to understand and follow instructions, and the models can be fine-tuned further for specific tasks. This makes Gemma highly adaptable for task completion and opens the door to AI-powered tools and assistants that go beyond simple text completion.
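Instruction-tuned Gemma checkpoints expect conversations wrapped in turn markers. The helper below is a minimal sketch that assumes the `<start_of_turn>` / `<end_of_turn>` format described in Gemma's model documentation, with `user` and `model` as the only roles; `build_gemma_prompt` is a hypothetical name. In real code, prefer the tokenizer's built-in chat template (for example, `apply_chat_template` in Hugging Face transformers), which also handles the beginning-of-sequence token.

```python
def build_gemma_prompt(turns: list[tuple[str, str]]) -> str:
    """Format a conversation for an instruction-tuned Gemma model.

    `turns` is a list of (role, text) pairs where role is "user" or "model".
    The result ends with an open model turn so the model writes the reply.
    Assumes Gemma's <start_of_turn>/<end_of_turn> markers; the BOS token is
    left for the tokenizer to add.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    # Leave the model's turn open so generation continues from here.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    print(build_gemma_prompt([("user", "Summarize this email in one sentence.")]))
```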

Finally, Google Gemma AI is built with responsible AI in mind. Google has included tools to help developers ensure their Gemma-based applications prioritize safety, ethics, and fairness. This focus aligns with the growing emphasis on creating trustworthy and beneficial AI systems.

Google Gemma AI pricing

Because Gemma's model weights are openly released, the models themselves are free to use, subject to Google's Gemma terms of use. However, consider the associated costs:

- Compute resources: Cloud instances or local hardware needed to run the models

- Customization and fine-tuning: Potential costs for adapting models to specific tasks
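Since the models are free but the compute is not, a quick estimate helps when budgeting. This sketch assumes you rent a single cloud instance billed by the hour and fine-tune on that same instance; `ComputePlan` and every rate you pass in are hypothetical placeholders, not actual Google Cloud prices.

```python
from dataclasses import dataclass


@dataclass
class ComputePlan:
    """Hypothetical usage plan; all rates are placeholders, not real prices."""

    hourly_rate_usd: float         # assumed price per instance-hour
    hours_per_day: float           # how long the instance runs each day
    days: int                      # length of the billing period
    fine_tune_hours: float = 0.0   # one-off fine-tuning time on the same instance

    def total_cost(self) -> float:
        """Serving cost for the period plus one-off fine-tuning, in USD."""
        serving = self.hourly_rate_usd * self.hours_per_day * self.days
        tuning = self.hourly_rate_usd * self.fine_tune_hours
        return round(serving + tuning, 2)


if __name__ == "__main__":
    plan = ComputePlan(hourly_rate_usd=1.5, hours_per_day=8, days=30, fine_tune_hours=10)
    print(plan.total_cost())
```

Under those made-up numbers the plan costs `375.0` USD. For local hardware, you would replace the hourly rate with the purchase price spread over the machine's expected lifetime, plus electricity.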

How strong is Google Gemma AI compared to competitors?

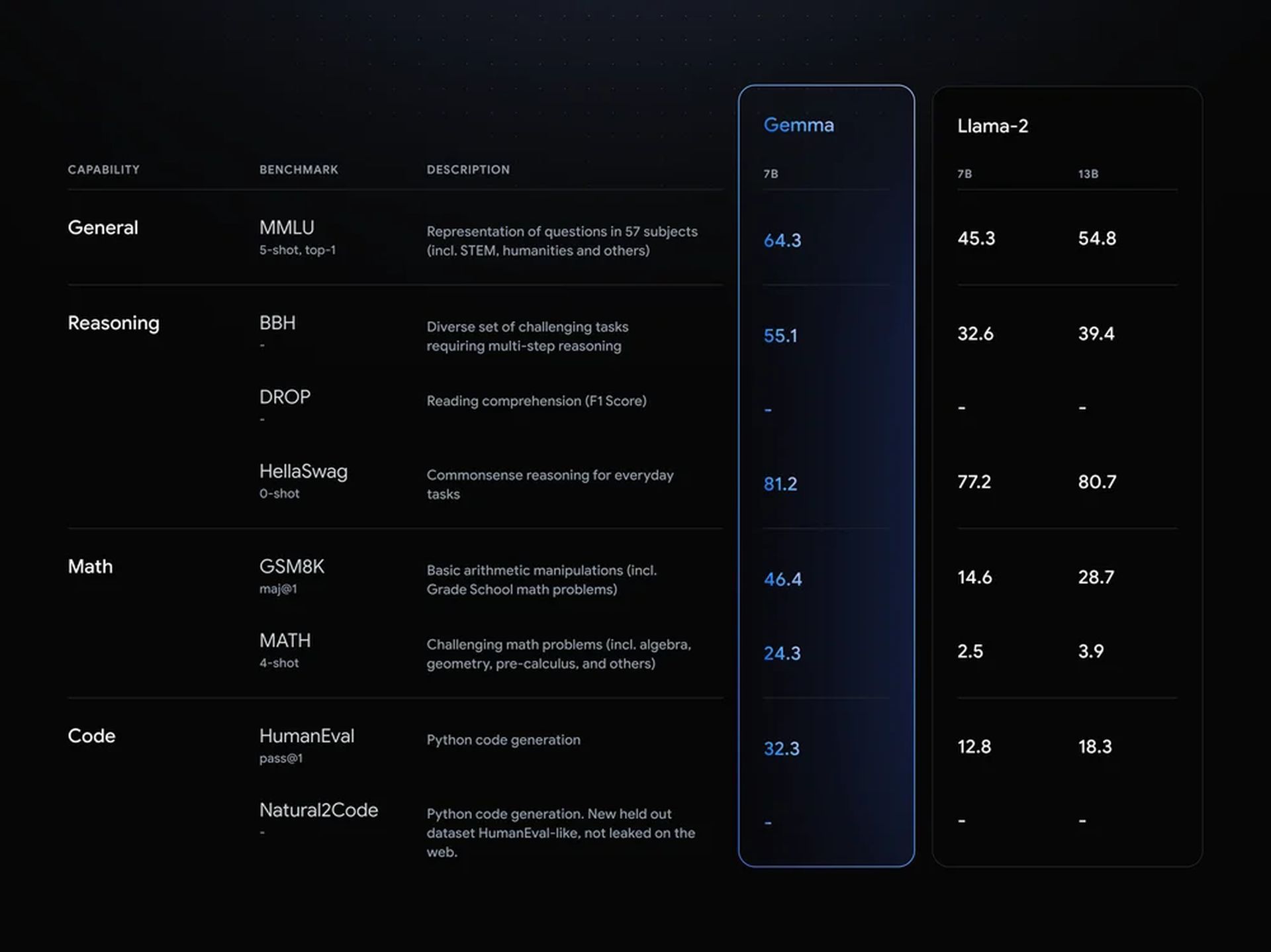

Google Gemma AI’s core strength lies in its balance between size and performance. It’s smaller than many leading language models, meaning it can run on devices with less power, like your phone or laptop. Surprisingly, Gemma still performs exceptionally well on many tasks. This efficiency advantage makes it a compelling choice for projects where hardware limitations are a concern.

Comparing language models is tricky. The “best” model depends on your specific needs. If your AI tool needs to translate languages, a translation-focused model will likely outperform a general-purpose one, regardless of size.

Gemma’s open release also sets it apart. Openly available weights let a community improve and customize the models over time. Additionally, Google’s focus on safe and responsible AI aligns well with ethical application development.

To judge how well Gemma fits your project, you’ll need to:

- Clearly define your project’s requirements (task, hardware, accuracy goals)

- Test Gemma against similar-sized competitors on your specific task

- Check community forums to see whether others have had success with Gemma on similar projects
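Testing Gemma against competitors can start as a small harness that runs every model on the same labelled prompts. The sketch below assumes each model is wrapped in a plain `generate(prompt) -> str` function (a hypothetical interface; you would write these wrappers around Gemma and its competitors yourself) and scores them with case-insensitive exact match, which only suits tasks with short, well-defined answers.

```python
from typing import Callable, Iterable


def exact_match_accuracy(
    generate: Callable[[str], str],
    examples: Iterable[tuple[str, str]],
) -> float:
    """Fraction of prompts whose normalized output equals the expected answer."""
    total = correct = 0
    for prompt, expected in examples:
        total += 1
        # Normalize whitespace and case before comparing.
        if generate(prompt).strip().lower() == expected.strip().lower():
            correct += 1
    return correct / total if total else 0.0


def compare_models(
    models: dict[str, Callable[[str], str]],
    examples: list[tuple[str, str]],
) -> dict[str, float]:
    """Score every model on the same examples so results are comparable."""
    return {name: exact_match_accuracy(fn, examples) for name, fn in models.items()}


if __name__ == "__main__":
    # Stub "models" stand in for real Gemma / competitor wrappers.
    examples = [("2+2?", "4"), ("Capital of France?", "Paris")]
    stubs = {"always_four": lambda p: "4", "lookup": lambda p: dict(examples)[p]}
    print(compare_models(stubs, examples))
```

Swap exact match for a metric that fits your task, such as BLEU for translation, while keeping the same loop.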

Getting Started with Google Gemma AI

Start your Gemma journey on the official Google Gemma AI website. It’s your go-to resource for accessing the models themselves, finding comprehensive tutorials, and exploring in-depth documentation. This website provides the foundation to understand Gemma’s capabilities and potential applications.

For a hands-on, experiment-driven approach, try Google’s Colab notebooks. These browser-based environments offer pre-written code examples and allow you to run Gemma models directly without any complex setup. Colab is a fantastic way to experience the power of Gemma firsthand and gain a rapid understanding of how it works.

Here is your roadmap to Google Gemma AI:

- Framework & Environment: Choose from JAX, PyTorch, or Keras, and run on cloud environments such as Google Cloud or locally

- Model Selection: Gemma 2B for efficiency, Gemma 7B for complexity. Consider pre-trained vs. instruction-tuned models based on your use case

- Resources:

  - Google AI Gemma website

  - Hugging Face, NVIDIA tools, Kaggle, and Colab for integration and experimentation
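If you go the Hugging Face route, the first-generation checkpoints are published under IDs such as `google/gemma-2b` and `google/gemma-7b-it`, where the `-it` suffix marks the instruction-tuned variant. The sketch below maps the size and variant choice to an ID and then loads the model with the `transformers` library. `gemma_model_id` and `generate_text` are hypothetical helper names, and downloading the weights assumes you have installed `transformers` and PyTorch, accepted Gemma's license on the Hub, and authenticated.

```python
def gemma_model_id(size: str = "2b", instruction_tuned: bool = True) -> str:
    """Return the Hugging Face Hub ID for a first-generation Gemma checkpoint."""
    if size not in ("2b", "7b"):
        raise ValueError("size must be '2b' or '7b'")
    suffix = "-it" if instruction_tuned else ""
    return f"google/gemma-{size}{suffix}"


def generate_text(prompt: str, model_id: str, max_new_tokens: int = 64) -> str:
    """Generate a completion with Hugging Face transformers.

    Needs transformers + PyTorch installed and access to the gated weights.
    """
    # Imported lazily so gemma_model_id works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is returned.
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    model_id = gemma_model_id("2b", instruction_tuned=False)
    print(generate_text("The three primary colors are", model_id))
```

For instruction-tuned checkpoints, results are usually better when the prompt is wrapped in the chat format (for example with the tokenizer's `apply_chat_template`) rather than passed as raw text.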

Google Gemma AI empowers developers to push the boundaries of language-based AI applications. Its lightweight nature, coupled with impressive capabilities and a focus on responsible use, makes it a game-changer for the future of AI innovation.

Featured image credit: Google.