Meta AI’s release of the Video Joint Embedding Predictive Architecture (V-JEPA) marks a notable step in artificial intelligence research, one that could shape how future systems learn about the world.

Today could indeed be a turning point for AI. Following Google’s announcement of Gemini 1.5 Pro and OpenAI’s Sora, another tech giant has dropped a bombshell.

Inspired by the pioneering work of Yann LeCun, V-JEPA is a bold step toward machines that can learn and understand the world around them with something closer to human intuition.

How does Meta’s V-JEPA mirror human learning?

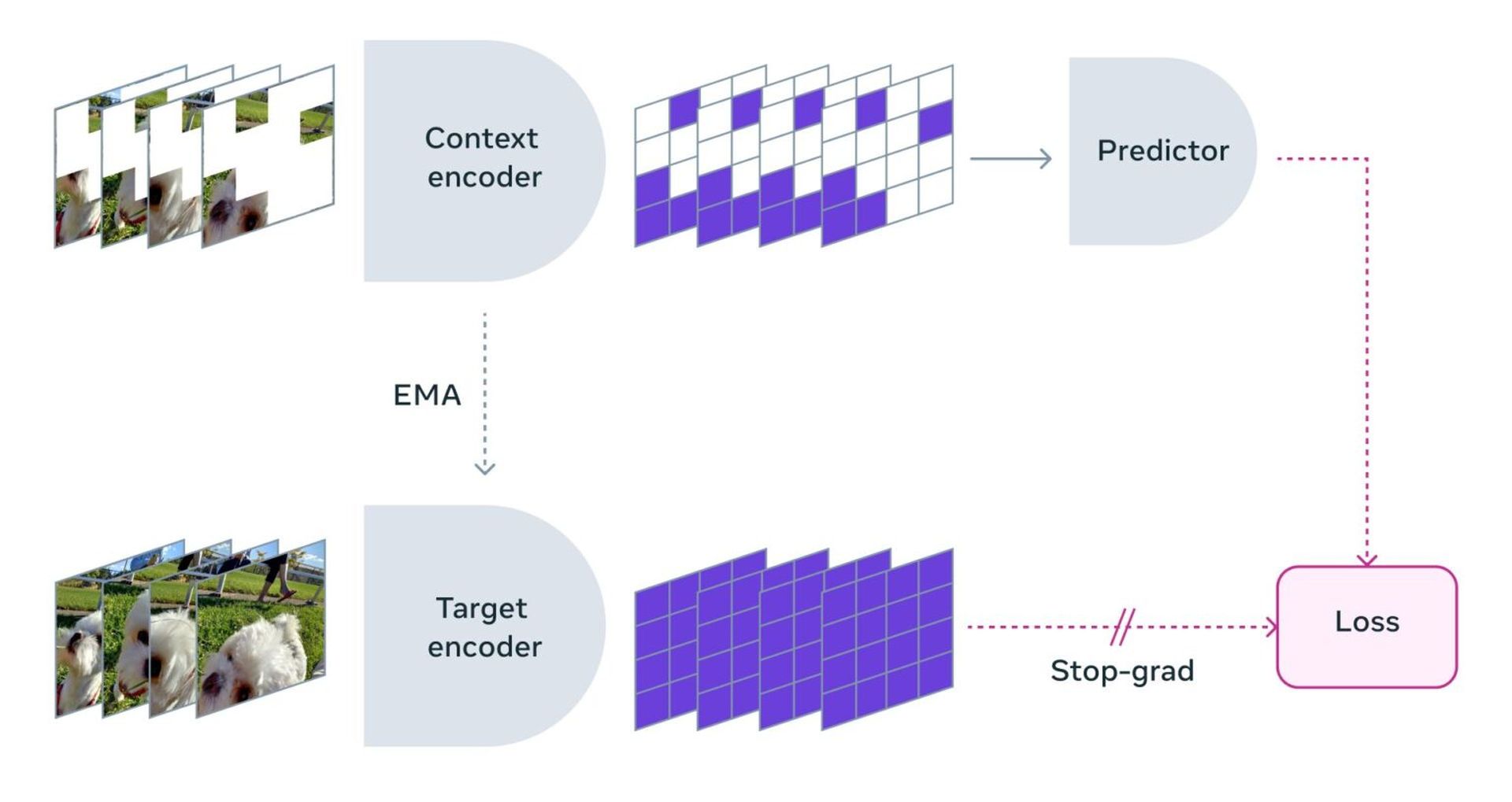

Much like an infant learns through observation, passively taking in sights and sounds to decipher patterns and relationships, V-JEPA absorbs information from videos. However, rather than modeling individual pixels, it analyzes videos at a higher, more abstract level. It seeks to capture the relationships between objects, the flow of events, and the underlying rules governing physical interactions.

What makes V-JEPA distinctive is its predictive nature. The model is trained on videos in which sections have been deliberately masked out. Its task is to predict what is missing, not pixel by pixel but as an abstract representation of what is happening within the scene. This pushes the model to develop a strong internal representation of the world it observes.
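The masked-prediction idea described above can be illustrated with a toy sketch. This is not Meta's implementation: `make_mask`, `encode`, `predict_masked`, and `latent_loss` are hypothetical names, the "encoder" is a hand-written stand-in, and mean absolute error is assumed as the distance. The only point it shows is that the prediction is scored in an embedding space rather than against raw pixels.

```python
import random


def make_mask(num_patches, mask_ratio, seed=0):
    """Choose which patch indices are hidden from the model."""
    rng = random.Random(seed)
    num_masked = int(num_patches * mask_ratio)
    return sorted(rng.sample(range(num_patches), num_masked))


def encode(patch):
    """Hypothetical stand-in encoder: patch (list of pixel values) -> small embedding."""
    mean = sum(patch) / len(patch)
    spread = max(patch) - min(patch)
    return [mean, spread]


def predict_masked(context_embeddings, num_masked):
    """Toy predictor: guess every masked embedding as the average of the visible ones."""
    dim = len(context_embeddings[0])
    average = [
        sum(e[d] for e in context_embeddings) / len(context_embeddings)
        for d in range(dim)
    ]
    return [list(average) for _ in range(num_masked)]


def latent_loss(predicted, target):
    """Mean absolute error between embeddings, not between pixels."""
    diffs = [abs(a - b) for p, t in zip(predicted, target) for a, b in zip(p, t)]
    return sum(diffs) / len(diffs)


# Eight fake "patches" of four pixel values each (illustrative data only).
patches = [[float(i + j) for j in range(4)] for i in range(8)]
masked = make_mask(len(patches), mask_ratio=0.5)
visible = [i for i in range(len(patches)) if i not in masked]

context = [encode(patches[i]) for i in visible]  # what the model sees
targets = [encode(patches[i]) for i in masked]   # what it must predict
predictions = predict_masked(context, len(masked))
loss = latent_loss(predictions, targets)
print(f"masked patches: {masked}, latent loss: {loss:.3f}")
```

In a real system the encoder and predictor would be neural networks, and training would adjust them to drive this loss down across many videos.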

Today we’re releasing V-JEPA, a method for teaching machines to understand and model the physical world by watching videos. This work is another important step towards @ylecun’s outlined vision of AI models that use a learned understanding of the world to plan, reason and… pic.twitter.com/5i6uNeFwJp

— AI at Meta (@AIatMeta) February 15, 2024

Efficiency and adaptability equal innovation

V-JEPA’s key innovations lie in the way it learns and how it applies its knowledge:

- Self-supervised learning: It can be trained on massive amounts of unlabeled video data. It doesn’t require hand-labeled examples, reducing the cost and time needed to achieve strong results.

- Selective prediction: It is designed to ignore less relevant details and focus on the big picture, which makes it more efficient than models that try to fill in every missing pixel.

- Adaptability: After initial training, it can be quickly adapted to specific tasks using a small amount of labeled data. This opens the door to flexible AI systems that can take on new tasks without being retrained from scratch.
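The adaptability point can be sketched in a few lines. This is a minimal illustration under stated assumptions, not V-JEPA's actual procedure: `frozen_encoder` is a hypothetical hand-written stand-in for a pretrained model that is never updated, and `fit_head` fits a deliberately tiny nearest-centroid classifier on just four labeled clips.

```python
def frozen_encoder(clip):
    """Hypothetical pretrained encoder, kept fixed: clip (per-frame brightness) -> features."""
    motion = sum(abs(b - a) for a, b in zip(clip, clip[1:])) / (len(clip) - 1)
    brightness = sum(clip) / len(clip)
    return [motion, brightness]


def fit_head(labeled_clips):
    """Fit a tiny head: one centroid per label, computed from frozen features."""
    sums, counts = {}, {}
    for clip, label in labeled_clips:
        features = frozen_encoder(clip)
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(clip, centroids):
    """Assign the label whose centroid is closest in feature space."""
    features = frozen_encoder(clip)

    def squared_distance(label):
        return sum((f - c) ** 2 for f, c in zip(features, centroids[label]))

    return min(centroids, key=squared_distance)


# A handful of labeled examples is all the head needs (illustrative data only).
labeled_clips = [
    ([0.5, 0.5, 0.5, 0.5], "static"),
    ([0.2, 0.2, 0.2, 0.2], "static"),
    ([0.1, 0.9, 0.1, 0.9], "moving"),
    ([0.0, 1.0, 0.0, 1.0], "moving"),
]
centroids = fit_head(labeled_clips)
print(classify([0.3, 0.3, 0.3, 0.3], centroids))
print(classify([0.2, 0.8, 0.2, 0.8], centroids))
```

Because only the small head depends on the labels, the same encoder could in principle be reused for a different task by fitting a new head on a new handful of examples.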

The new model is designed to build a detailed understanding of complex visual events. V-JEPA can pick apart fine-grained interactions between multiple objects, even when actions are subtle or unfold over extended periods. This could prove vital for tasks like detailed video analysis or robotic manipulation.

By understanding what’s happening in a scene, V-JEPA builds a powerful knowledge base for real-world problem-solving. This contextual awareness could revolutionize assistive technologies and AI agents.

Who is Yann LeCun?

Yann LeCun is a giant in the world of computer science and artificial intelligence. He’s widely recognized as one of the founding fathers of deep learning, particularly for his groundbreaking work on convolutional neural networks (CNNs). CNNs have completely changed the way machines see the world, driving major advances in computer vision, image recognition, and countless applications like self-driving cars and medical diagnostics. LeCun’s influence extends beyond deep learning, shaping broader machine learning approaches with his ongoing research in areas like reinforcement learning and unsupervised learning.

Currently, LeCun holds the prestigious role of Vice President and Chief AI Scientist at Meta (formerly Facebook). There, he guides a team of top-tier AI researchers who are exploring the next frontiers of technology for Meta’s products and services. LeCun’s academic roots remain strong as he also serves as a Silver Professor at New York University. In this role, he mentors and inspires the next generation of AI innovators.

LeCun’s contributions to the field have not gone unnoticed. He shared the 2018 ACM A.M. Turing Award with Geoffrey Hinton and Yoshua Bengio. This award, often called the “Nobel Prize of Computing,” is the highest honor in computer science and reflects the transformative impact of their deep learning research.

Featured image credit: Meta.