Nvidia is pushing personal AI forward with Nvidia Chat with RTX AI. At a time when AI technology is advancing at an unprecedented pace, this demo app lets users personalize their own chatbot, powered by a large language model (LLM) that is connected to their personal content.

Leveraging retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, Chat with RTX AI provides fast and secure access to contextually relevant answers. Here’s what we know.

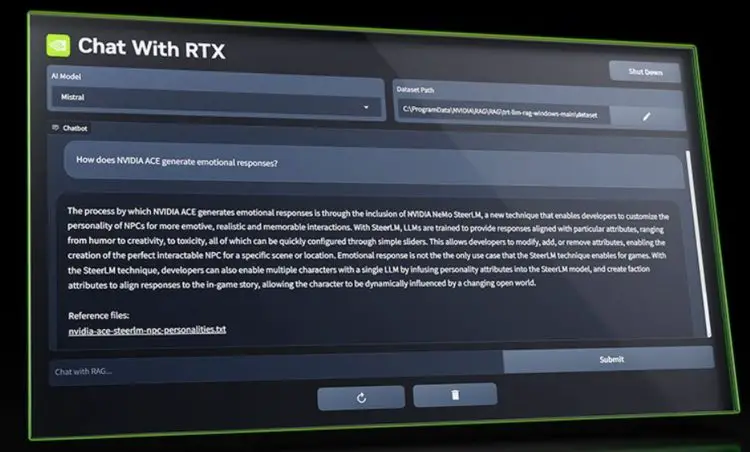

What is Nvidia Chat with RTX AI?

Nvidia Chat with RTX AI is a demo app that allows users to create a custom chatbot tailored to their specific needs and preferences. Users can query the chatbot using their own content, such as documents, notes, videos, or other data, and get fast and accurate responses. This is made possible by combining RAG, TensorRT-LLM, and RTX acceleration, which together provide a seamless and efficient AI experience.

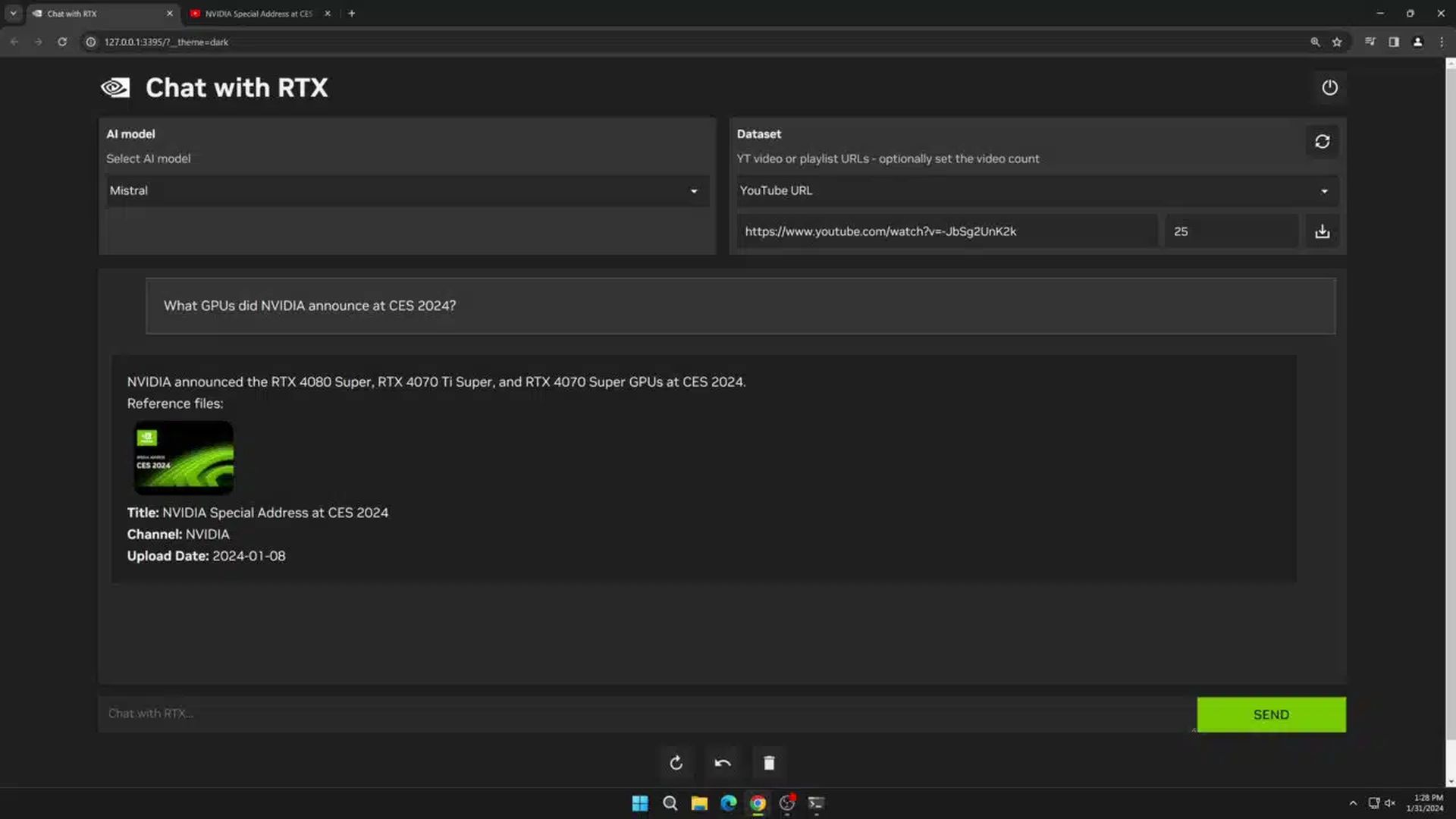

How does Nvidia Chat with RTX AI work?

Nvidia Chat with RTX AI works by connecting users’ local content to an open-source LLM like Mistral or Llama 2. This allows users to ask contextual questions and get relevant answers without the need for an internet connection or cloud-based services. The app supports various file formats such as .txt, .pdf, .doc/.docx, and .xml and can also integrate information from YouTube videos and playlists.
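
To make the file-format point concrete, here is a minimal sketch of how an app might gather supported files from a local folder. This is not Nvidia's code; the function name `find_supported_files` and the constant `SUPPORTED_EXTENSIONS` are hypothetical, and only the extension list comes from the article.

```python
from pathlib import Path

# Extensions the article lists as supported; the constant name is our own.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder):
    """Return sorted paths under `folder` whose extension is in the supported set."""
    root = Path(folder)
    return sorted(
        path
        for path in root.rglob("*")  # walk the folder and all subfolders
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

The extension check is case-insensitive, so a file named `REPORT.PDF` would be picked up alongside `notes.txt`.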

Users can type queries such as “What was the name of the music store my partner recommended in California?” and Chat with RTX AI will scan local files and provide the answer with context. This personalized chatbot is perfect for a variety of applications, such as research, education, and customer service.
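
The retrieval step behind a query like this can be illustrated with a toy example. The sketch below is not how Chat with RTX AI is implemented: it swaps in a simple bag-of-words cosine similarity for whatever retriever the app actually uses, stops at building the prompt a real app would pass to the LLM, and uses invented sample documents. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the names of the top_k documents most similar to the query."""
    query_vector = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda name: cosine_similarity(query_vector, Counter(tokenize(documents[name]))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Combine the retrieved text with the question into a single LLM prompt."""
    names = retrieve(query, documents, top_k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Invented sample content standing in for a user's local files.
documents = {
    "trip_notes.txt": "My partner recommended a music store in California called Example Records.",
    "recipes.txt": "Preheat the oven and whisk the eggs with sugar.",
}
```

Calling `build_prompt` with the music store question would place the text of `trip_notes.txt` in the context, which is the "answer with context" behavior described above in miniature.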

Nvidia Chat with RTX AI system requirements

The system requirements are listed in the table below:

| Requirement | Details |
| --- | --- |
| Platform | Windows |
| GPU | NVIDIA GeForce™ RTX 30 or 40 Series GPU or NVIDIA RTX™ Ampere or Ada Generation GPU with at least 8GB of VRAM |
| RAM | 16GB or greater |
| OS | Windows 11 |
| Driver | 535.11 or later |
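
For readers who want to compare their own machine against these numbers, the thresholds can be expressed as a small check. This is only a sketch: the `SystemSpecs` class and `unmet_requirements` function are hypothetical, the specs must be entered by hand, the GPU generation is not checked (only VRAM), and the driver comparison assumes a simple `major.minor` version string.

```python
from dataclasses import dataclass

# Thresholds taken from the requirements table; the constant names are our own.
REQUIRED_OS = "Windows 11"
MIN_VRAM_GB = 8
MIN_RAM_GB = 16
MIN_DRIVER = (535, 11)


@dataclass
class SystemSpecs:
    os_name: str
    vram_gb: float
    ram_gb: float
    driver_version: str  # assumed "major.minor" format, e.g. "546.33"


def parse_driver(version):
    """Turn a 'major.minor' driver string into a comparable (major, minor) tuple."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def unmet_requirements(specs):
    """Return a list of human-readable requirements the given specs fail to meet."""
    problems = []
    if specs.os_name != REQUIRED_OS:
        problems.append(f"OS must be {REQUIRED_OS}")
    if specs.vram_gb < MIN_VRAM_GB:
        problems.append(f"GPU needs at least {MIN_VRAM_GB}GB of VRAM")
    if specs.ram_gb < MIN_RAM_GB:
        problems.append(f"System needs at least {MIN_RAM_GB}GB of RAM")
    if parse_driver(specs.driver_version) < MIN_DRIVER:
        problems.append("Driver must be 535.11 or later")
    return problems
```

An empty list means every checked threshold is met; otherwise each entry names one requirement to fix.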

Benefits of Nvidia Chat with RTX AI

One of the key benefits of Chat with RTX AI is its fast and secure performance. Since the application runs natively on Windows RTX PCs and workstations, users can access their data quickly and efficiently without relying on cloud-based services. It is especially useful for those who need to handle sensitive data because it stays on their devices and is not shared with third parties.

Another benefit of Chat with RTX AI is that it can deliver contextually relevant responses. Unlike traditional chatbots, which often rely on predefined answers, Chat with RTX AI uses retrieval-augmented generation to create answers based on the user’s specific query and the content it retrieves from their files.

Building LLM-based applications with RTX

Chat with RTX AI is built from the TensorRT-LLM RAG developer reference project available on GitHub. This project gives developers a starting framework for building their own RAG-based applications accelerated with TensorRT-LLM for RTX.

Join the NVIDIA Generative AI on NVIDIA RTX Developer Contest

To encourage innovation and creativity in the field of generative AI, Nvidia is hosting the NVIDIA Generative AI on NVIDIA RTX Developer Contest. The contest runs through Friday, February 23, and invites developers to create their own LLM-based applications accelerated with TensorRT-LLM for RTX. Participants can submit their entries for a chance to win prizes, including a GeForce RTX 4090 GPU, a full, in-person conference ticket to NVIDIA GTC, and more.

Chat with RTX AI is a groundbreaking demo app that showcases the potential of personalized chatbots powered by NVIDIA RTX AI. With fast and secure performance, contextually relevant responses, and the ability to integrate with various file formats and YouTube videos, Chat with RTX AI is an exciting development in the world of generative AI.

Featured image credit: NVIDIA