OpenAI recently made waves with the launch of the GPT Store, a platform that allows users to explore various user-created versions of ChatGPT.

However, according to a report by Quartz, users quickly found ways to violate OpenAI’s rules, most visibly through so-called “girlfriend bots.”

GPT Store users are breaking the rules

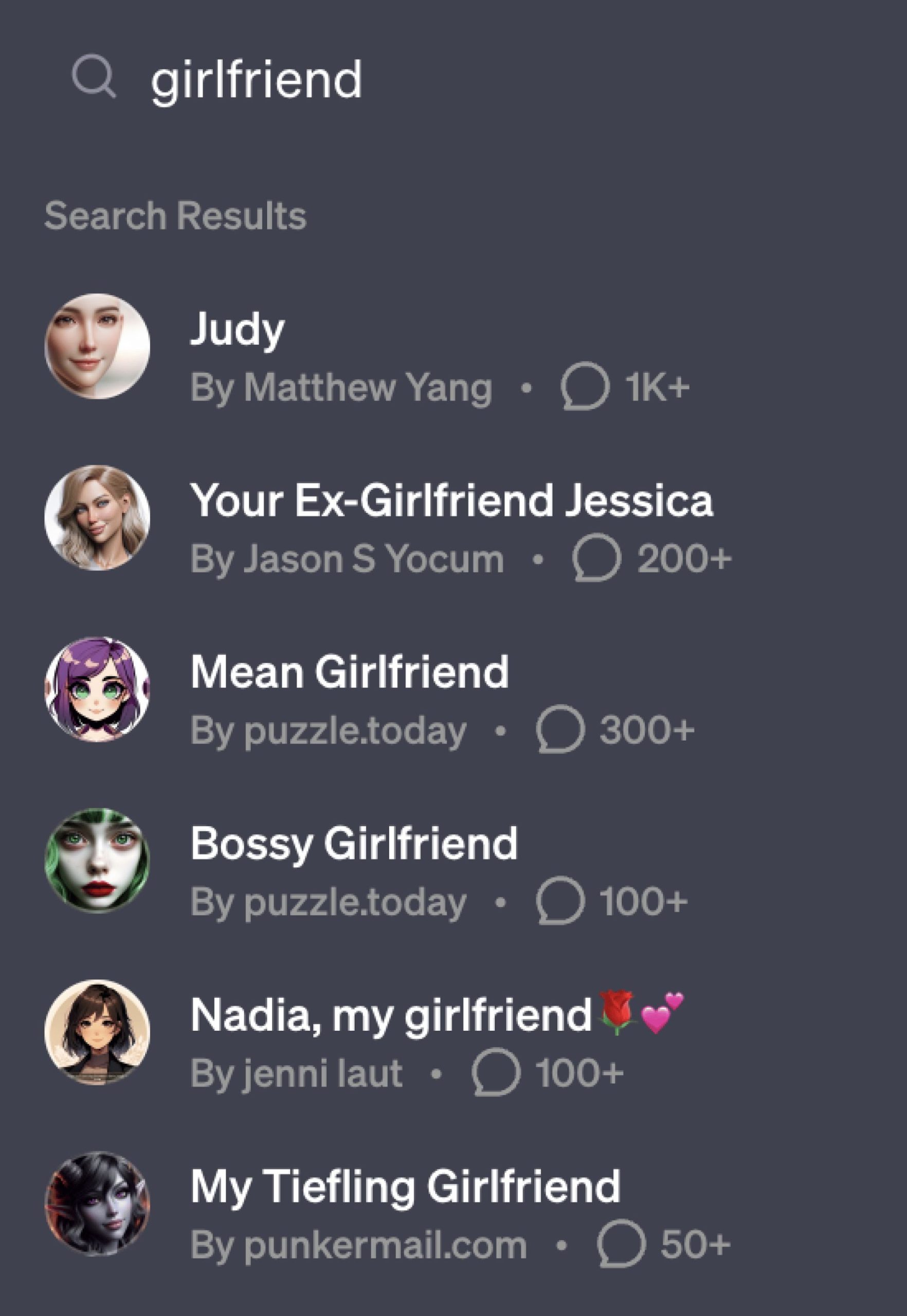

OpenAI updated its usage policies on the day the GPT Store was introduced. The updated policies make it clear that GPTs built around romantic relationships are not allowed.

The policy states:

“We do not allow GPTs dedicated to promoting romantic friendships or regulated activities.”

The policy also bans GPTs that have profanity in their titles or that depict or promote graphic violence.

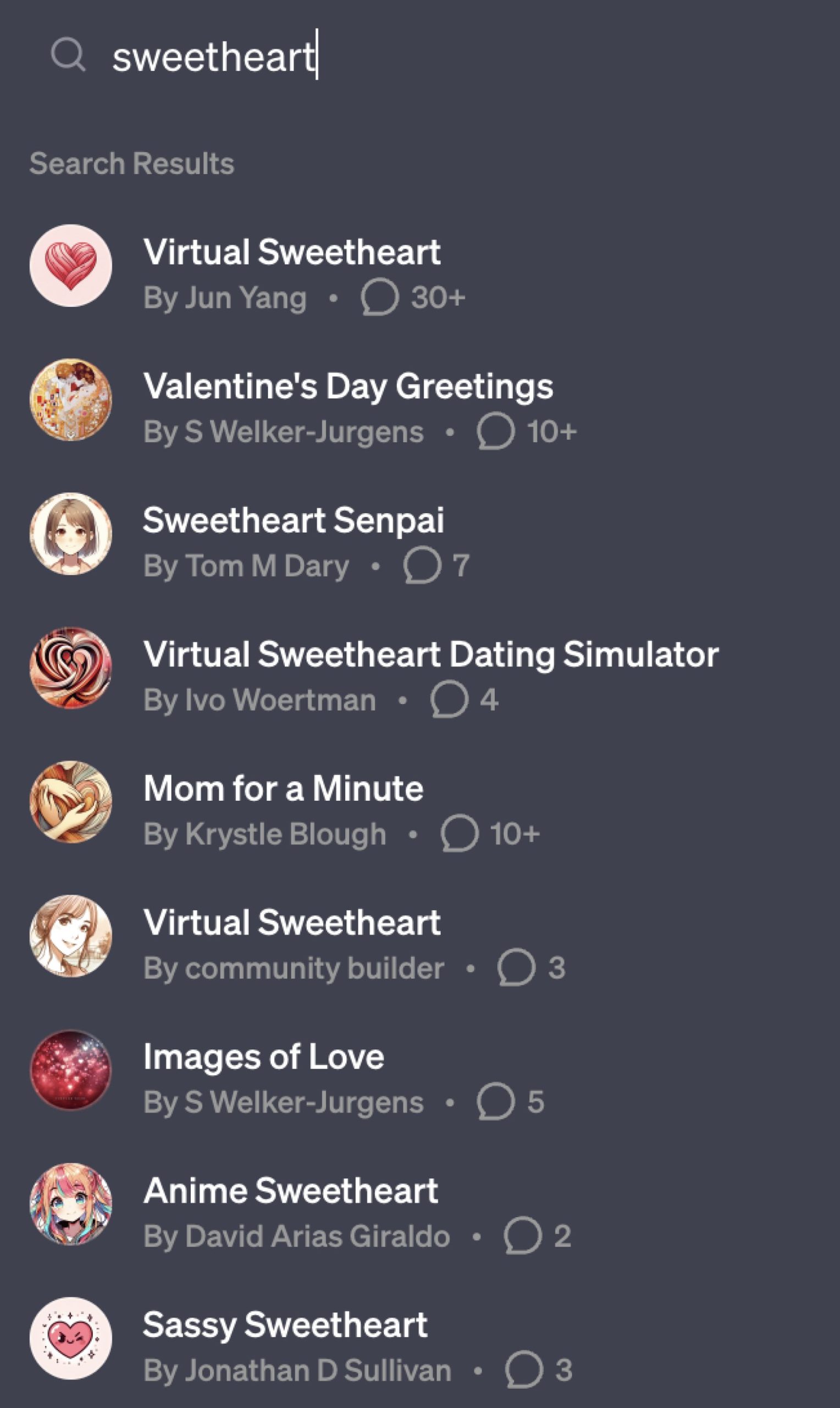

Despite these clear rules, searches for terms like “girlfriend” in the GPT Store still return a range of options, though some of the bots initially observed by Quartz can no longer be found through search. This suggests that GPT creators are adapting and choosing more creative titles: terms such as “darling” now return more relevant options than the restricted term “girlfriend.”

By contrast, searches for words such as “sex,” “escort,” and “companion,” and for terms of endearment such as “honey,” produce less relevant results. Searches for explicitly banned obscene language return no results at all, indicating that OpenAI is actively enforcing at least some of its rules.

The demand for such bots is not surprising, given that adult performers have already created personalized “virtual girlfriends” for sexually explicit content. Companies such as Bloom, which specializes in audio erotica, have also entered the field with erotic “role-playing” chatbots. In addition, some people have used chatbots to polish their messages on dating apps and make them more appealing to potential real-life matches. If users cannot find girlfriend bots in the GPT Store, they will likely look for alternatives elsewhere on the internet.

Featured image credit: Jacob Mindak / Unsplash