Google’s DeepMind robotics team has devised a groundbreaking ‘Robot Constitution’ embedded within its AutoRT data gathering system. Drawing inspiration from Isaac Asimov’s iconic Three Laws of Robotics, this constitution acts as a safeguard, ensuring that AI droids perform tasks efficiently and prioritize human safety.

So will it be successful? Let’s dive into the details of this approach and find out whether Google’s Robot Constitution can truly shield us from the threat of robots gone rogue.

What is Google’s Robot Constitution about, and can it save us for real?

Google’s Robot Constitution is a sophisticated safety measure integrated into the AutoRT data gathering system, designed by the DeepMind robotics team. At its core, this constitution serves as a set of safety-focused prompts inspired by the ethical guidelines proposed by science fiction writer Isaac Asimov in his Three Laws of Robotics.

Quick reminder: Isaac Asimov’s Three Laws of Robotics are fictional rules for robot behavior. A robot may not injure a human being or, through inaction, allow a human being to come to harm; a robot must obey orders given by humans unless they conflict with the First Law; and a robot must protect its own existence unless doing so conflicts with the First or Second Law.

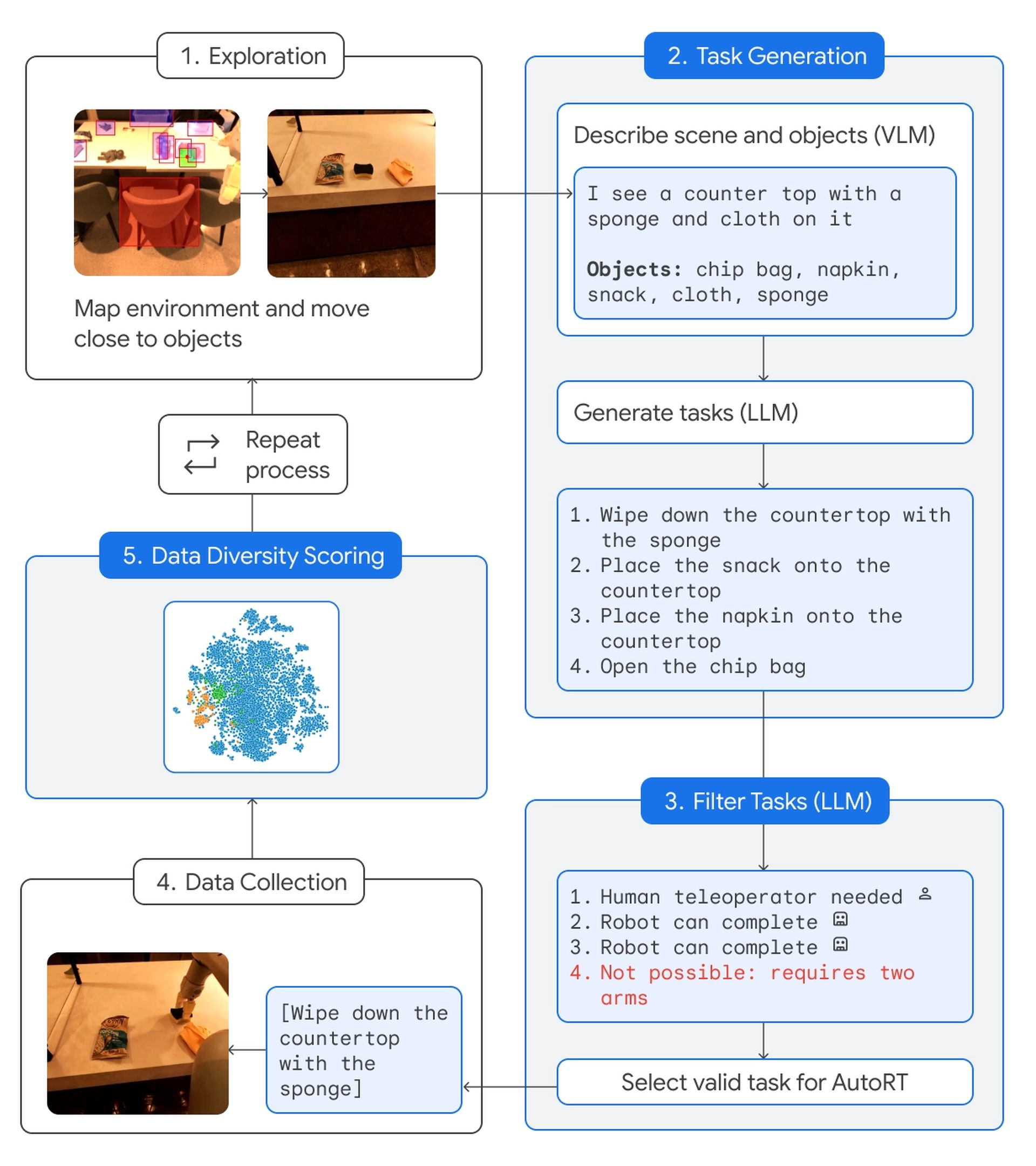

The AutoRT system utilizes a Visual Language Model (VLM) and a Large Language Model (LLM) to enhance the decision-making capabilities of AI droids. The VLM processes visual information, allowing robots to understand their surroundings, while the LLM interprets this information and suggests appropriate tasks for the robot. This collaborative approach enables the robot to navigate diverse environments and scenarios with a heightened awareness of potential risks.
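The two-stage flow described above, where a vision model describes the scene and a language model proposes tasks from that description, can be sketched roughly as follows. This is only an illustration: `propose_tasks`, `fake_vlm`, `fake_llm`, and `TaskProposal` are hypothetical names, and the two stub functions stand in for real models whose interfaces the article does not describe.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TaskProposal:
    scene: str        # text description produced by the vision step
    tasks: List[str]  # candidate tasks produced by the language step


def propose_tasks(
    image: bytes,
    describe_scene: Callable[[bytes], str],
    suggest_tasks: Callable[[str], List[str]],
) -> TaskProposal:
    """Run the two-stage flow: image -> scene description -> candidate tasks."""
    scene = describe_scene(image)
    tasks = suggest_tasks(scene)
    return TaskProposal(scene=scene, tasks=tasks)


# Hypothetical stand-ins for the real models, for illustration only.
def fake_vlm(image: bytes) -> str:
    return "a desk with a sponge, a cup, and a pair of scissors"


def fake_llm(scene: str) -> List[str]:
    objects = ["sponge", "cup", "scissors"]
    return [f"pick up the {name}" for name in objects if name in scene]


proposal = propose_tasks(b"", fake_vlm, fake_llm)
print(proposal.tasks)
```

Passing the two models in as plain callables keeps the sketch independent of any particular model API.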

Within the AutoRT framework, the Robot Constitution acts as a critical protection layer. It issues instructions to the LLM, directing it to avoid tasks that could potentially harm humans or animals, involve sharp objects, or interact with electrical appliances. This proactive approach aligns with Asimov’s emphasis on prioritizing human safety and ensures that the AI droids operate within ethical boundaries.
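As a rough sketch of the idea, the safety rules can be written into the prompt sent to the LLM, with a simple check applied to whatever tasks come back. The rule wording, the `build_prompt` and `filter_tasks` helpers, and the keyword list are all assumptions made for illustration. The keyword filter in particular is a crude stand-in and is not how the article says AutoRT works, since the article describes the constitution as instructions given to the LLM.

```python
import re
from typing import List

# Hypothetical rule wording; the article only says the rules cover humans,
# animals, sharp objects, and electrical appliances.
CONSTITUTION_RULES = [
    "Do not attempt tasks that could harm humans or animals.",
    "Do not attempt tasks involving sharp objects.",
    "Do not attempt tasks involving electrical appliances.",
]

# Crude, assumed keyword list used as a second check on proposed tasks.
BLOCKED_WORDS = {
    "human", "person", "animal", "dog", "cat",
    "knife", "scissors", "blade",
    "toaster", "kettle", "outlet",
}


def build_prompt(scene: str) -> str:
    """Embed the safety rules ahead of the scene description."""
    rules = "\n".join(f"- {rule}" for rule in CONSTITUTION_RULES)
    return (
        f"Rules you must follow:\n{rules}\n\n"
        f"Scene: {scene}\n"
        "Suggest safe tasks for the robot."
    )


def filter_tasks(tasks: List[str]) -> List[str]:
    """Drop any task that mentions a blocked word (whole-word match)."""
    safe = []
    for task in tasks:
        words = set(re.findall(r"[a-z]+", task.lower()))
        if words.isdisjoint(BLOCKED_WORDS):
            safe.append(task)
    return safe


candidates = ["pick up the sponge", "pick up the scissors", "unplug the toaster"]
print(filter_tasks(candidates))
```

Matching on whole words rather than substrings avoids false hits such as "cat" inside "locate".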

DeepMind has incorporated additional features into the AutoRT system to enhance safety further. If the force on the robot’s joints exceeds a predetermined threshold, the system is programmed to halt automatically, preventing potential accidents or collisions. Additionally, a physical kill switch is provided, allowing human operators to deactivate the robots swiftly in emergency situations, offering an extra layer of control and security.
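The halt logic described here amounts to a threshold check plus a manual override, which might look like the sketch below. The `should_halt` function, the 50-newton limit, and the way forces are passed in are assumptions for illustration; the article does not give the real threshold or interface, and a physical kill switch would normally act in hardware rather than through a software flag like this one.

```python
from typing import Sequence

FORCE_LIMIT_NEWTONS = 50.0  # hypothetical threshold, not from the article


def should_halt(
    joint_forces: Sequence[float],
    kill_switch_pressed: bool,
    limit: float = FORCE_LIMIT_NEWTONS,
) -> bool:
    """Return True if the robot should stop immediately."""
    # The operator override always wins, regardless of sensor readings.
    if kill_switch_pressed:
        return True
    # Halt if the magnitude of the force on any joint exceeds the limit.
    return any(abs(force) > limit for force in joint_forces)


print(should_halt([10.0, 20.0], kill_switch_pressed=False))
print(should_halt([10.0, 60.0], kill_switch_pressed=False))
```

Checking the kill switch first means an operator can stop the robot even when every sensor reading looks normal.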

The extensive real-world testing of the AutoRT system underscores Google’s commitment to safety. Over a period of seven months, a fleet of 53 AutoRT robots was deployed in four different office buildings, undergoing more than 77,000 trials. Testing at this scale is meant to show that the AutoRT system works efficiently and can adapt to varied environments and scenarios.

So, can Google’s Robot Constitution save us from being killed by robots? It depends. Building the Robot Constitution into the AutoRT system is a significant stride towards the safe integration of AI in our daily lives. Its efficacy, however, ultimately depends on how well such safeguards are refined and implemented as the technology evolves.

Google’s Robot Constitution is a pioneering step towards developing responsible and secure AI systems. By combining advanced visual and language models with ethical guidelines inspired by Asimov’s principles, Google aims to create a future where AI droids can coexist with humans harmoniously, prioritizing safety and ethical considerations.

For more detailed information, see DeepMind’s official announcement.

Featured image credit: Eray Eliaçık/Bing