Nvidia has launched the NVIDIA HGX H200, a new AI computing platform built on the NVIDIA Hopper™ architecture. At its core is the NVIDIA H200 Tensor Core GPU, whose advanced memory is designed to handle the large volumes of data involved in generative AI and high-performance computing (HPC) workloads.

In this article, we look at what the launch includes and cover what is known so far about the new NVIDIA H200.

The power of NVIDIA H200

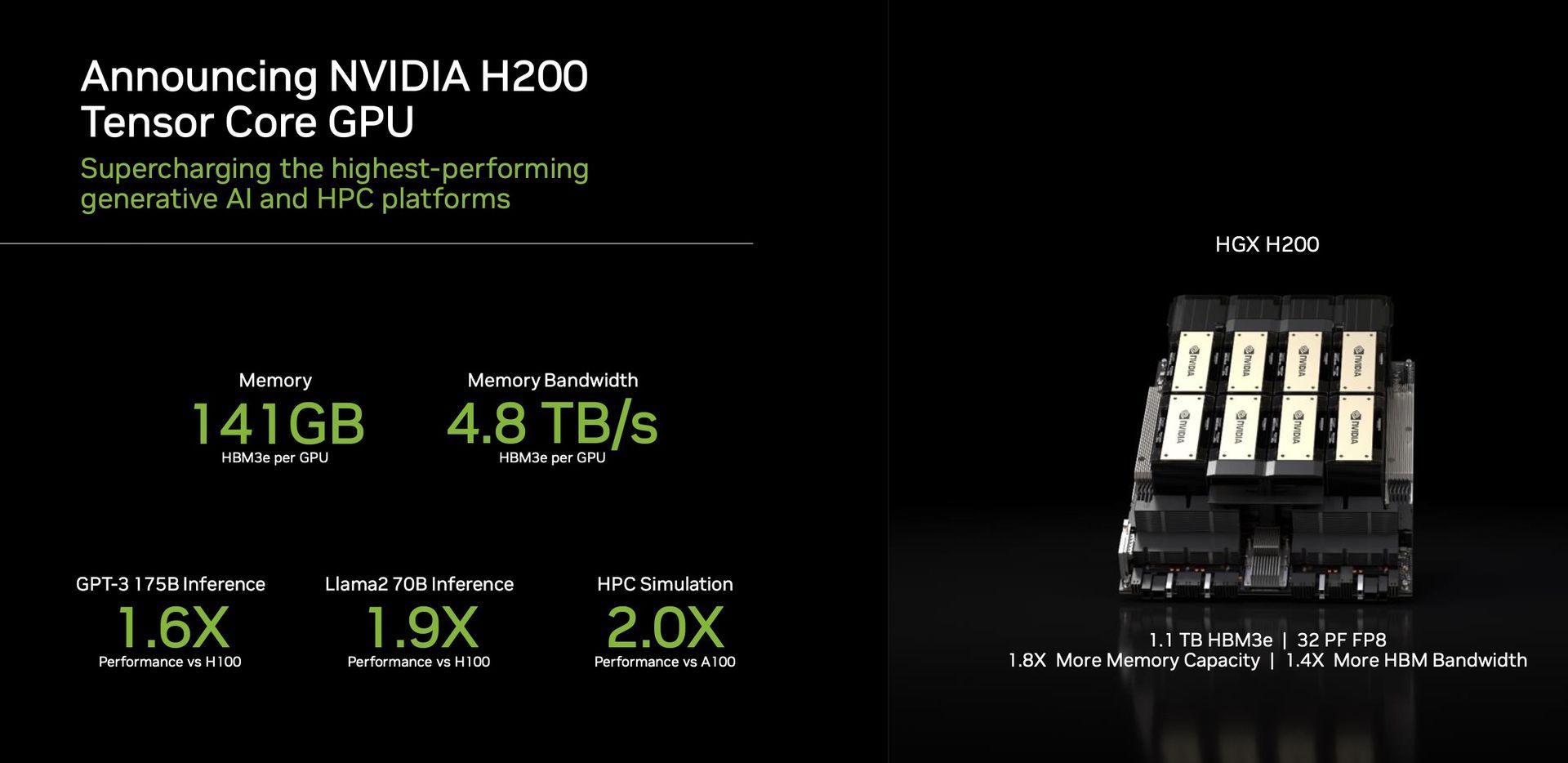

A standout feature of the H200 is its memory: it is the first GPU to use HBM3e, a faster and larger type of memory. This is meant to accelerate generative AI and large language models while also advancing scientific computing for HPC workloads. With HBM3e, the H200 offers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4 times the bandwidth of the earlier NVIDIA A100.
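The ratios quoted above can be checked with simple arithmetic. The sketch below assumes A100 reference figures of 80GB of memory and roughly 2.0 TB/s of bandwidth (the 80GB A100 variant); those reference numbers are our assumption and do not come from the announcement. It also computes how long the H200 would need to read its entire memory once at the peak rate, as a rough illustration of what 4.8 TB/s means.

```python
# Published H200 figures from the announcement.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TB_S = 4.8

# Assumed A100 reference figures (80GB variant, ~2.0 TB/s); not from the article.
A100_MEMORY_GB = 80
A100_BANDWIDTH_TB_S = 2.0


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TB_S, A100_BANDWIDTH_TB_S)

# Time to stream all 141GB once at peak bandwidth: GB / (GB/s), converted to ms.
full_sweep_ms = H200_MEMORY_GB / (H200_BANDWIDTH_TB_S * 1000) * 1000

print(f"capacity: {capacity_ratio:.2f}x")
print(f"bandwidth: {bandwidth_ratio:.1f}x")
print(f"full-memory read at peak: {full_sweep_ms:.1f} ms")
```

With these assumed reference figures, capacity comes out at about 1.76x (the "nearly double" in the announcement) and bandwidth at 2.4x, and a full sweep of memory takes under 30 ms at peak. Real workloads rarely sustain peak bandwidth, so treat these as upper-bound numbers.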

Performance and applications

Connected through NVIDIA NVLink™ and NVSwitch™ high-speed interconnects, the H200 targets a wide range of application workloads, including large language model (LLM) training and inference on models with more than 175 billion parameters. An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory for generative AI and HPC applications.
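The aggregate memory figure follows from multiplying the per-GPU capacity by eight, and the board-level compute figure can be divided back down to a rough per-GPU value. The sketch below also checks whether the weights of a 175-billion-parameter model fit in that aggregate memory. It assumes 1 byte per parameter for FP8 and 2 bytes for BF16, and it counts only the weights; activations, KV cache, and optimizer state are deliberately ignored, so this is a lower bound on real memory needs.

```python
GPUS_PER_BOARD = 8          # eight-way HGX H200
MEMORY_PER_GPU_GB = 141     # HBM3e per H200
BOARD_FP8_PFLOPS = 32       # "over 32 petaflops" of FP8, per the announcement

# Assumed bytes per parameter for common weight formats (weights only).
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def aggregate_memory_gb(gpus: int, per_gpu_gb: float) -> float:
    """Total HBM across all GPUs on the board."""
    return gpus * per_gpu_gb


def weights_gb(num_params: float, fmt: str) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


total_gb = aggregate_memory_gb(GPUS_PER_BOARD, MEMORY_PER_GPU_GB)
per_gpu_pflops = BOARD_FP8_PFLOPS / GPUS_PER_BOARD

print(f"aggregate HBM: {total_gb} GB")        # about 1.1 TB
print(f"FP8 per GPU: ~{per_gpu_pflops} PFLOPS")

for fmt in BYTES_PER_PARAM:
    need = weights_gb(175e9, fmt)
    print(f"175B weights in {fmt}: {need:.0f} GB, fits: {need <= total_gb}")
```

Eight GPUs at 141GB each give 1,128GB, which is the "1.1TB" figure, and the weights of a 175B model fit in either format under these simplifying assumptions.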

Nvidia’s GPUs have become central to both developing and deploying generative AI models. They are designed for the massively parallel computation that training and running these models requires, and they excel at tasks such as image generation and natural language processing. Because their architecture performs many calculations simultaneously, they substantially speed up generative AI workloads.

The H200 continues Nvidia’s pattern of steady performance gains on the Hopper architecture. Ongoing software enhancements, including recently released open-source libraries such as NVIDIA TensorRT™-LLM, keep improving the platform’s performance. Nvidia expects the H200 to nearly double inference speed on Llama 2, a 70-billion-parameter LLM, compared with its predecessor, the H100.
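A back-of-the-envelope calculation helps put Llama 2 70B on a single H200 in context. The sketch below estimates the weight footprint at 2 bytes (FP16) and 1 byte (FP8) per parameter, using the nominal 70 billion parameters, plus a rough ceiling on single-stream decoding speed. That ceiling rests on a common simplifying assumption: at batch size 1, generating each token means reading every weight once from memory at the 4.8 TB/s peak. These are illustrative assumptions, not Nvidia's benchmark methodology; they ignore the KV cache, kernel efficiency, and batching, and say nothing direct about the "nearly double" figure.

```python
PARAMS = 70e9                 # Llama 2 70B, nominal parameter count
H200_MEMORY_GB = 141
H200_BANDWIDTH_GB_S = 4800    # 4.8 TB/s peak


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Weight footprint in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def batch1_tokens_per_s_upper_bound(weight_gb: float, bandwidth_gb_s: float) -> float:
    """Memory-bound ceiling, assuming each token reads all weights once."""
    return bandwidth_gb_s / weight_gb


for name, nbytes in [("fp16", 2), ("fp8", 1)]:
    w = weights_gb(PARAMS, nbytes)
    bound = batch1_tokens_per_s_upper_bound(w, H200_BANDWIDTH_GB_S)
    headroom = H200_MEMORY_GB - w
    print(f"{name}: weights {w:.0f} GB, headroom {headroom:.0f} GB, "
          f"<= {bound:.0f} tokens/s at batch 1")
```

In FP16 the weights alone nearly fill the 141GB, leaving almost no room for the KV cache, while in FP8 roughly half of the memory stays free for longer contexts or larger batches.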

Versatility and availability

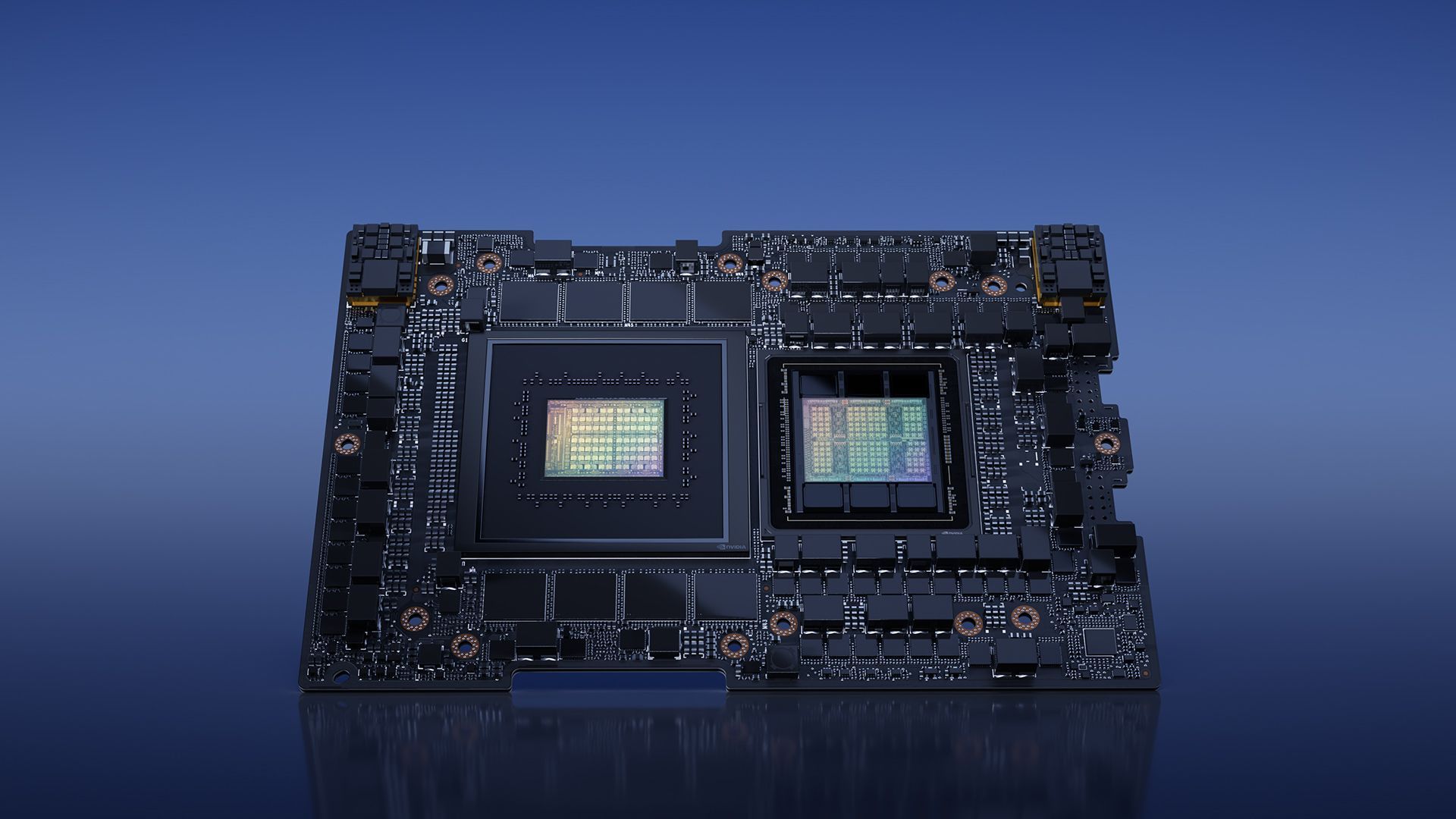

Nvidia offers the H200 in several form factors, including NVIDIA HGX H200 server boards in four- and eight-way configurations that are compatible with both the hardware and software of HGX H100 systems. The H200 is also available in the NVIDIA GH200 Grace Hopper™ Superchip with HBM3e. Together, these options suit different data center setups, including on-premises, cloud, hybrid-cloud, and edge deployments. Nvidia’s global ecosystem of partner server makers can also upgrade their existing systems with the H200, which should make it widely accessible.

HGX H200 systems are expected to reach the market in the second quarter of 2024. Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are among the first cloud service providers set to deploy H200-based instances starting in 2024.

In conclusion, the NVIDIA H200’s larger, faster memory and performance gains make it a significant step forward for AI computing. Once it becomes available in the second quarter of 2024, the H200 should help developers and enterprises worldwide build and deploy AI applications, from speech to hyperscale inference.


Featured image credit: NVIDIA