Meta, the tech giant known for shaping the metaverse, has recently unveiled a remarkable advancement in artificial intelligence: Emu AI, short for Expressive Media Universe.

This cutting-edge AI model is a text-to-image generator: it produces images from textual descriptions, and Meta positions it as a major step up in the visual quality of such images.

Quality tuning with Emu AI

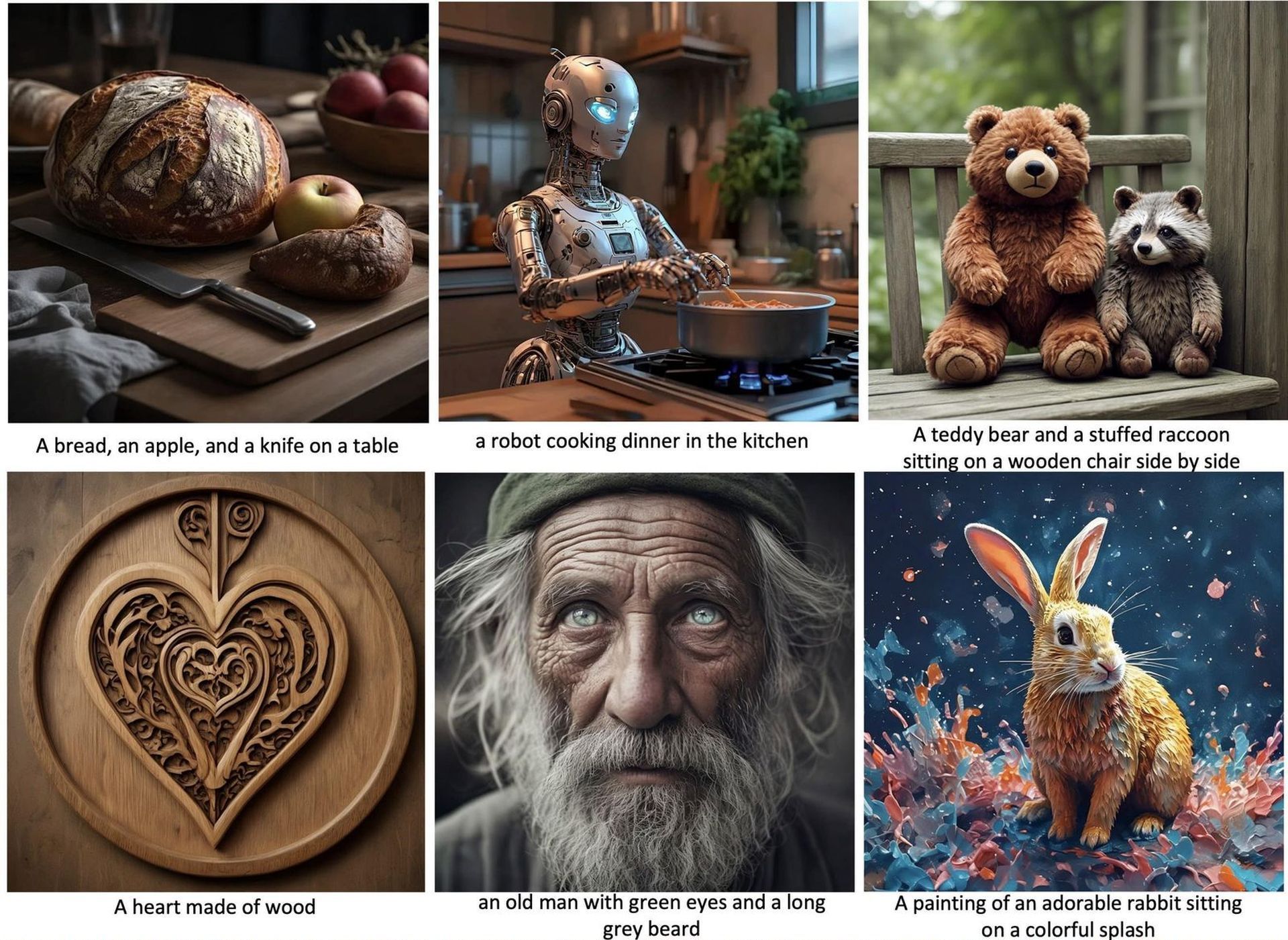

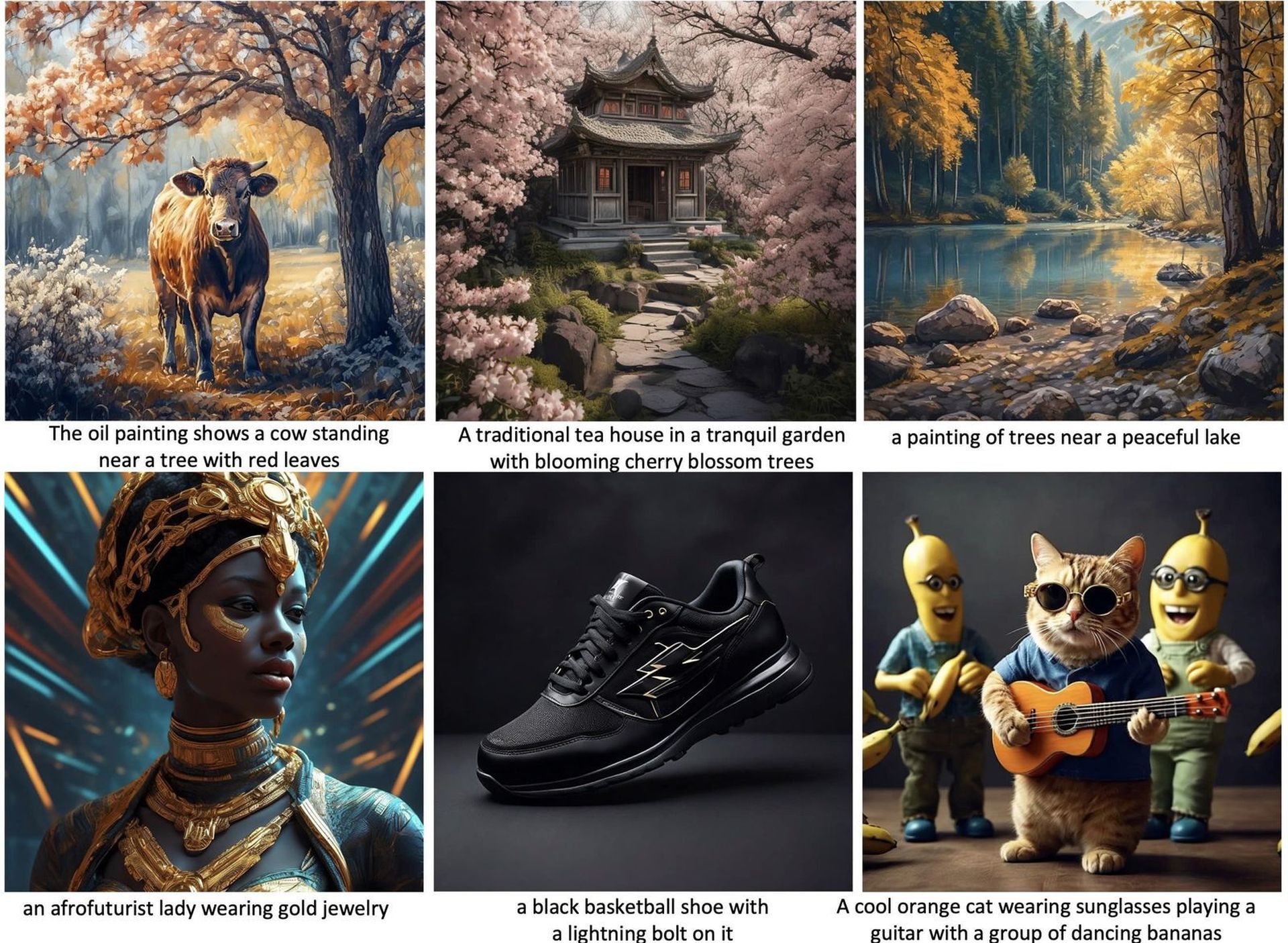

At the heart of Emu lies a technique known as “quality tuning”: fine-tuning a pre-trained text-to-image model on a small, carefully curated set of exceptionally appealing images. This approach markedly enhances the visual appeal of the generated images, and the results remain faithful to the provided text.

Meta’s AI team began by pre-training a latent diffusion model on a colossal dataset of 1.1 billion image-text pairs. The true breakthrough, however, emerged during the fine-tuning stage, where the model was trained on a curated selection of just 2,000 meticulously chosen, high-quality images.
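To put those two figures side by side, here is a minimal arithmetic sketch in Python. It uses only the numbers quoted above; the constant and function names are illustrative, and the comparison is one of set sizes only, since the article does not say whether the curated images were drawn from the pre-training data.

```python
# Figures quoted in the article.
PRETRAIN_PAIRS = 1_100_000_000   # image-text pairs used for pre-training
QUALITY_TUNE_IMAGES = 2_000      # curated images used for quality tuning


def size_ratio(curated: int, total: int) -> float:
    """Size of the curated set as a fraction of the pre-training set size."""
    return curated / total


ratio = size_ratio(QUALITY_TUNE_IMAGES, PRETRAIN_PAIRS)
pairs_per_curated_image = PRETRAIN_PAIRS // QUALITY_TUNE_IMAGES

print(f"Curated set is {ratio:.6%} the size of the pre-training set")
print(f"About one curated image per {pairs_per_curated_image:,} pre-training pairs")
```

In other words, the fine-tuning set is several orders of magnitude smaller than the pre-training set, which is what makes the reported effect notable.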

Merging technology with human expertise

This process, described as finding “photogenic needles in a haystack,” marries state-of-the-art technology with the indispensable human touch. The starting pool was expansive, spanning billions of images. A series of automatic filters narrowed it down, screening for offensive content, image-text alignment, and text overlay. Automated filtering has clear limits, however, which made human annotators a critical part of the process.

Annotators, ranging from generalists to specialists, played a pivotal role in the selection process. Their discerning eyes ensured that only the crème de la crème, the images that went beyond ‘good’ to ‘exceptional’, made the final cut. In the end, a mere 2,000 images remained, each possessing an undeniable allure.
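The two-stage curation described above, automatic filters followed by human review, can be sketched roughly as follows. This is a minimal illustration, not Meta’s pipeline: the `Candidate` fields, the score ranges, the thresholds, and the “all annotators must agree” rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Candidate:
    image_id: str
    offensive_score: float     # hypothetical: 0 = safe, 1 = offensive
    alignment_score: float     # hypothetical: image-text alignment, higher is better
    text_overlay_area: float   # hypothetical: fraction of the image covered by text
    annotator_votes: Tuple[bool, ...] = ()  # human "is this exceptional?" judgments


# Illustrative thresholds; these values are assumptions, not figures from Meta.
MAX_OFFENSIVE = 0.1
MIN_ALIGNMENT = 0.3
MAX_TEXT_OVERLAY = 0.05


def passes_automatic_filters(c: Candidate) -> bool:
    """Stage 1: cheap automatic checks applied to the whole pool."""
    return (
        c.offensive_score <= MAX_OFFENSIVE
        and c.alignment_score >= MIN_ALIGNMENT
        and c.text_overlay_area <= MAX_TEXT_OVERLAY
    )


def passes_human_review(c: Candidate) -> bool:
    """Stage 2: keep an image only if it was reviewed and every annotator approved."""
    return len(c.annotator_votes) > 0 and all(c.annotator_votes)


def curate(pool: List[Candidate]) -> List[Candidate]:
    shortlisted = [c for c in pool if passes_automatic_filters(c)]
    return [c for c in shortlisted if passes_human_review(c)]


pool = [
    Candidate("a", 0.0, 0.8, 0.0, (True, True)),    # passes both stages
    Candidate("b", 0.6, 0.9, 0.0, (True, True)),    # rejected: offensive content
    Candidate("c", 0.0, 0.7, 0.0, (True, False)),   # rejected: annotators disagree
    Candidate("d", 0.0, 0.9, 0.4, (True, True)),    # rejected: heavy text overlay
]
selected = curate(pool)
print([c.image_id for c in selected])
```

Running the cheap automatic checks first means the expensive human judgment is spent only on images that have already cleared the basic bar.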

A moniker of distinction

The choice of ‘Emu’ as the moniker for this model is also symbolic. Beyond the acronym, it nods to the emu, a bird known for its distinctive, attention-grabbing nature, reflecting the model’s ability to capture attention and stand out in the realm of image generation.

Emu AI’s triumph over the state of the art

Emu AI’s prowess extends beyond photorealistic settings, as it also excels at generating sketches and cartoons. Comparative assessments against the state-of-the-art SDXL v1.0 model yielded remarkable results. Emu emerged as the preferred choice: it was preferred for visual appeal 68.4% of the time on the PartiPrompts benchmark, and an even more impressive 71.3% of the time on Meta’s own Open User Input benchmark.
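A preference rate of this kind is simply the share of head-to-head comparisons that one model wins. The sketch below shows that arithmetic on a toy set of ten invented votes; the vote labels, the function name, and the absence of ties are assumptions, and the data is not taken from Meta’s evaluation.

```python
from collections import Counter
from typing import List


def preference_rate(votes: List[str], model: str) -> float:
    """Share of pairwise comparisons in which `model` was the preferred output."""
    if not votes:
        raise ValueError("at least one vote is required")
    return Counter(votes)[model] / len(votes)


# Toy data, invented for illustration: each entry is the winner of one comparison.
votes = ["emu"] * 7 + ["sdxl"] * 3

rate = preference_rate(votes, "emu")
print(f"Emu preferred in {rate:.0%} of comparisons")
```

A rate above 50% means the model wins more comparisons than it loses, so both reported figures put Emu well ahead of the baseline.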

Meta’s researchers attribute Emu’s exceptional performance not only to the model architecture but also, crucially, to the quality and diversity of the data used for fine-tuning. Surprisingly, fine-tuning on as few as 100 high-quality images already had a substantial impact on Emu AI’s generation capabilities. This underscores the potency of a small set of exemplary images in aligning AI creativity with human aesthetics.

Emu’s multifaceted artistry

One of Emu’s most laudable features is its versatility. It demonstrates the capacity to depict a vast array of concepts, ranging from portraits to sweeping landscapes and even abstract art. This versatility positions Emu as a powerful tool for artists, designers, and creators across a spectrum of visual disciplines.

Pioneering the future of AI-driven creativity

Emu represents a significant leap for Meta towards AI that can seamlessly transform ideas into visually captivating content. It serves as a testament to the value of meticulous curation in machine learning datasets. Furthermore, it provides a tantalizing glimpse into a future where text alone may be sufficient to materialize our imaginative visions.

Emu’s functionality will soon be accessible through the Meta AI chatbot, promising to democratize the creation of visually stunning content across a myriad of applications and devices.

Featured image credit: Meta