Apple’s virtual assistant, Siri, has become an indispensable part of the iOS ecosystem, but its voice recognition struggles in noisy environments and with distorted voices. A recent Apple patent application suggests the company is exploring a way around these limitations: giving Siri the ability to read lips.

The proposed feature would use motion sensors, such as accelerometers or gyroscopes, to detect subtle facial movements and improve the accuracy of voice commands. While the patent hints at interesting possibilities, it remains unclear when, or whether, Apple will implement it.

The logic behind Apple’s lip-reading patent for Siri

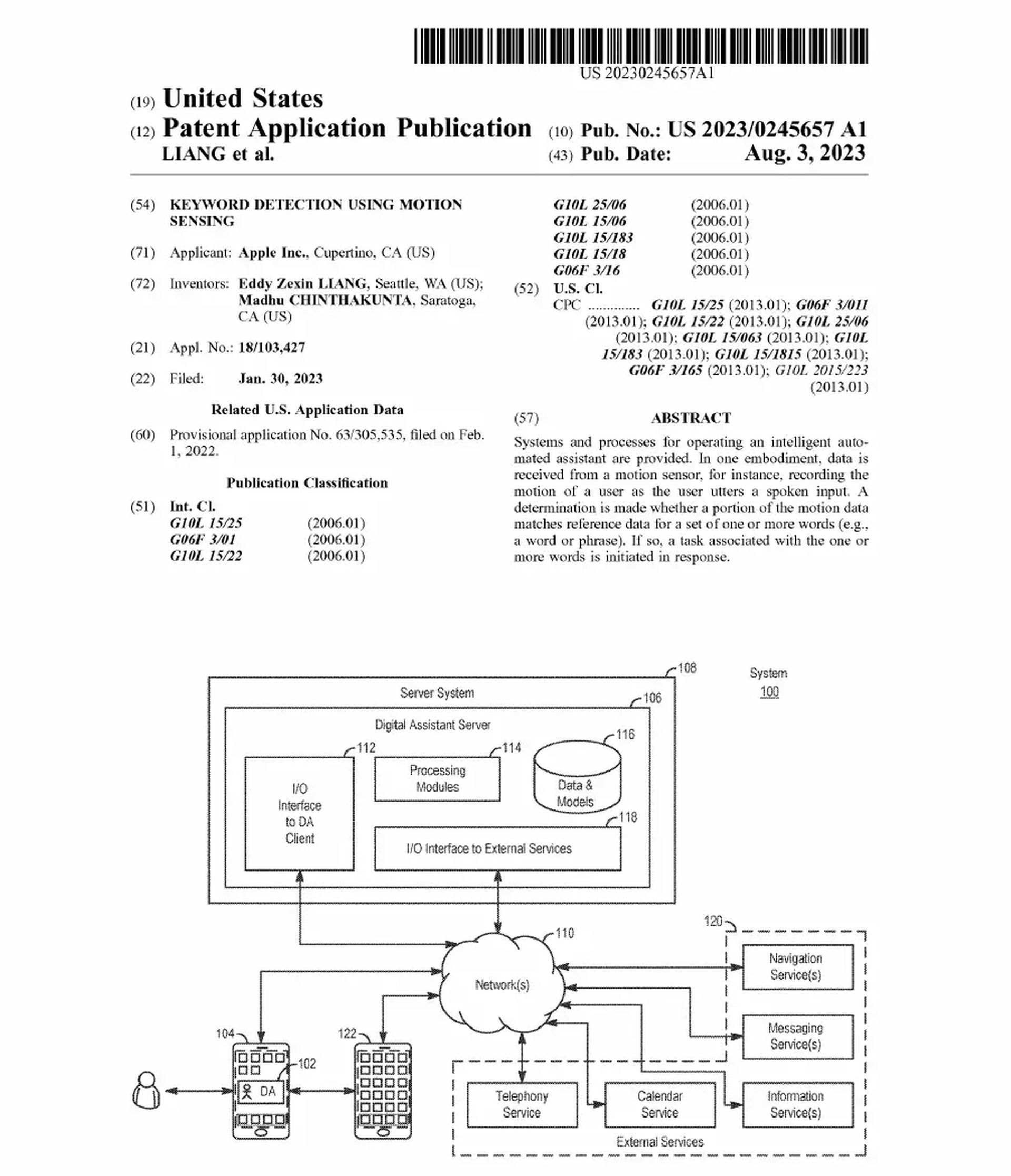

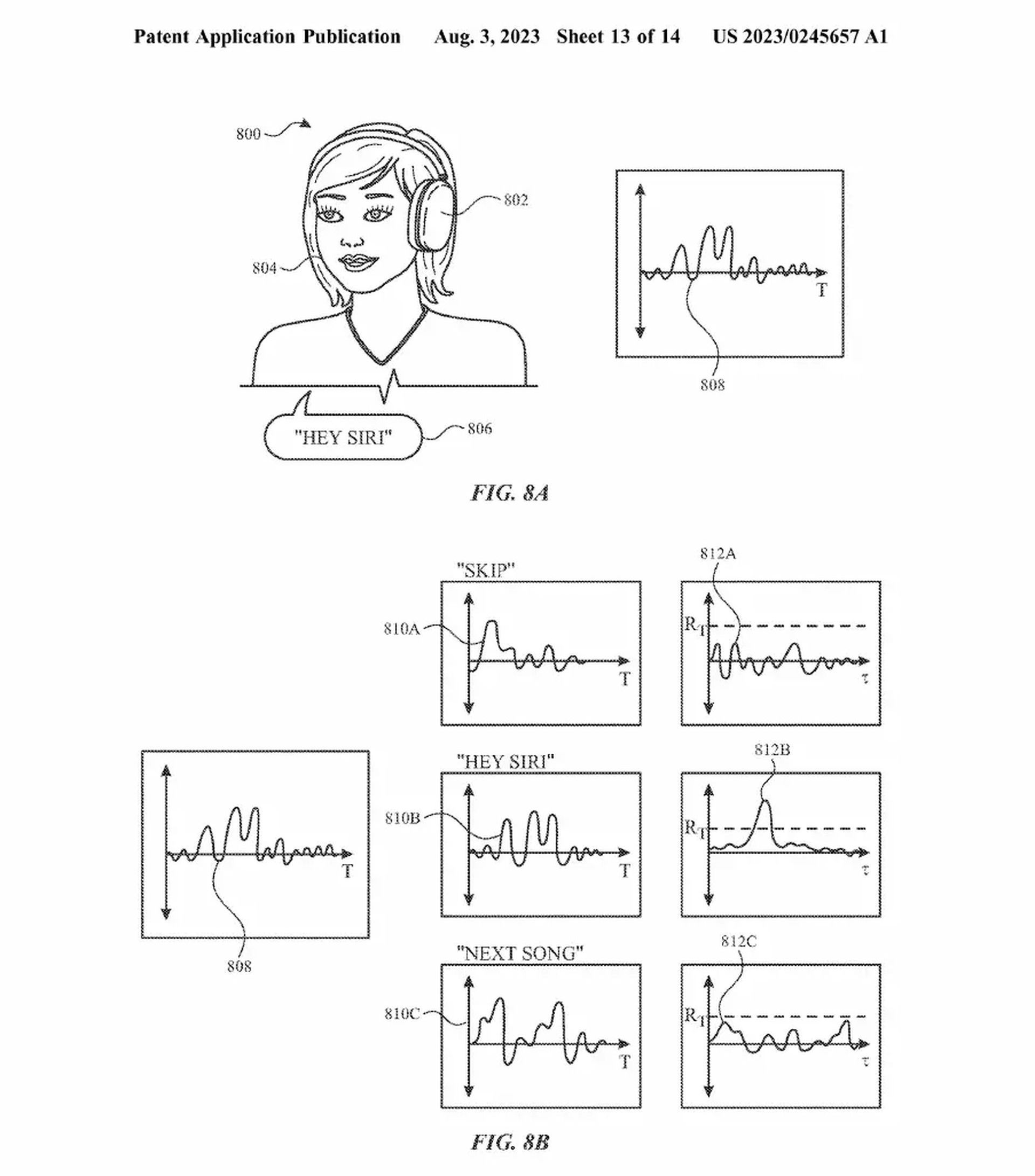

The patent application, filed in January, outlines a system that uses motion data to determine whether a user’s mouth movements match particular spoken words or phrases. Traditional voice recognition systems can be affected by background noise and can drain device resources. Rather than relying on them alone, Apple’s proposed method also observes facial muscle vibrations, head motions, and the movements of other parts of the mouth. Because accelerometers and gyroscopes register physical motion rather than sound, this approach could overcome many of the problems that noise causes for existing voice recognition.
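The patent’s actual algorithm is not described in this article, so the Python snippet below is only a hypothetical illustration of the general idea of checking motion data against an expected phrase, not Apple’s method. It assumes the motion sensor delivers a stream of acceleration readings and that a hypothetical per-window template marks when a trigger phrase should produce mouth movement (1.0) or stillness (0.0). The function names, window size, and 0.7 threshold are all invented for illustration.

```python
import math
from typing import Sequence


def energy_envelope(samples: Sequence[float], window: int) -> list[float]:
    """Root-mean-square energy of each non-overlapping window of motion samples."""
    envelope = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / window))
    return envelope


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series; 0.0 if either is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def movement_matches_phrase(
    motion: Sequence[float],
    expected_activity: Sequence[float],
    window: int = 4,
    threshold: float = 0.7,
) -> bool:
    """Hypothetical check: does sensed movement rise and fall where the phrase expects it?"""
    envelope = energy_envelope(motion, window)
    n = min(len(envelope), len(expected_activity))
    if n < 2:
        return False  # too little data to compare
    return pearson(envelope[:n], expected_activity[:n]) >= threshold


# Usage: vibration bursts in the second and fourth windows match a template
# that expects mouth movement there, but not the opposite template.
motion = [0.0] * 4 + [1.0, -1.0, 1.0, -1.0] + [0.0] * 4 + [0.8, -0.8, 0.8, -0.8]
print(movement_matches_phrase(motion, [0.0, 1.0, 0.0, 1.0]))  # True
print(movement_matches_phrase(motion, [1.0, 0.0, 1.0, 0.0]))  # False
```

Comparing a coarse energy envelope instead of processing raw audio keeps the computation light, which echoes the patent’s concern about resource use; a real system would more likely rely on a trained model.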

Implications beyond smartphones

While the patent primarily mentions iPhones, it suggests a broader scope. Apple envisions extending the technology to other devices, including AirPods and even “smart glasses,” which would open up many more potential applications. However, given that Apple has discontinued its smart glasses project, the more likely target seems to be its Vision Pro headset, many details of which remain unknown.

The quest for data

To develop this lip-reading capability, Apple would need substantial amounts of data on human mouth movements. Having users create a “voice profile” could help meet this need. Apple’s recent accessibility features on iOS, including Live Speech and its companion Personal Voice feature, already let users create voice profiles. Such profiles could provide a foundation for training a language model that learns to recognize facial movements from large datasets. Applying a “transformer language model” to lip reading would also fit the company’s habit of integrating AI quietly into its features.

The road ahead

Although the patent application points to a notable advance in voice recognition, whether it will ever reach Apple’s products remains uncertain. Well-known Apple supply chain analyst Ming-Chi Kuo has pointed out that the company lags behind competitors in generative AI and that there is no immediate sign of such deep learning models being built into its hardware products anytime soon. Still, Apple’s reported development of an internal chatbot, codenamed “Apple GPT,” could hint at AI-related improvements for Siri.

Apple’s lip-reading patent application points to a possible new direction for voice recognition. By exploring motion sensing as a way to improve voice command accuracy, Apple shows continued interest in refining the user experience and in bringing AI into its products.

The implementation timeline remains unclear, but the concept could significantly change voice-assisted interactions across Apple devices. For now, Apple users will have to wait to see whether their virtual assistant will one day read their lips as well as hear their commands.

Featured image credit: Omid Armin / Unsplash