The rise of hate speech and harmful content on social media platforms has become a pressing concern for users, advertisers, and tech companies alike. At the center of this debate is X Corp, the company behind the platform formerly known as Twitter, which Elon Musk acquired in October 2022. X Corp is now locked in a legal battle with the Centre for Countering Digital Hate (CCDH).

The CCDH has published reports alleging a rise in hate speech on the platform since Musk’s takeover, prompting X Corp to take legal action against the group. This article examines the dispute, the motivations behind X Corp’s move, and the potential implications for both the company and the fight against hate speech on social media.

CCDH reports and X Corp’s response

The CCDH has been vocal in its claims, reporting a significant rise in hate speech after Elon Musk’s acquisition of the platform. In one report, the group highlighted an increase in slurs against Black and transgender people. The CCDH also accused Twitter of lax enforcement against rule-breaking tweets from Twitter Blue subscribers and against tweets that pair references to the LGBTQ+ community with ‘grooming’ slurs.

X Corp’s response to these allegations has been twofold. On the one hand, the company has adopted a new enforcement approach, titled ‘Freedom of Speech, Not Reach,’ which favors limiting the visibility of rule-breaking tweets over removing them. On the other hand, X Corp is seeking to counter the CCDH’s claims in court and to uncover the identity of the group’s backers.

Who is funding this organization? They spread disinformation and push censorship, while claiming the opposite. Truly evil.

— Elon Musk (@elonmusk) July 18, 2023

The impact on brand safety

A core motivation behind X Corp’s legal action is the perceived impact of the hate speech reports on the platform’s brand safety. High-profile reports on the proliferation of harmful content may deter potential advertisers from partnering with the platform. Consequently, Musk and his legal team are aiming to refute these claims to reassure brand partners and safeguard the platform’s reputation.

Challenges of third-party assessments

Both X Corp and Meta (formerly known as Facebook) have criticized the CCDH’s reports as limited in scope. Third-party researchers cannot access the full pool of posts on a platform, so their conclusions rest on samples that may be incomplete or skewed. Nevertheless, media coverage of these reports has shaped public perception and raised concerns among advertisers.

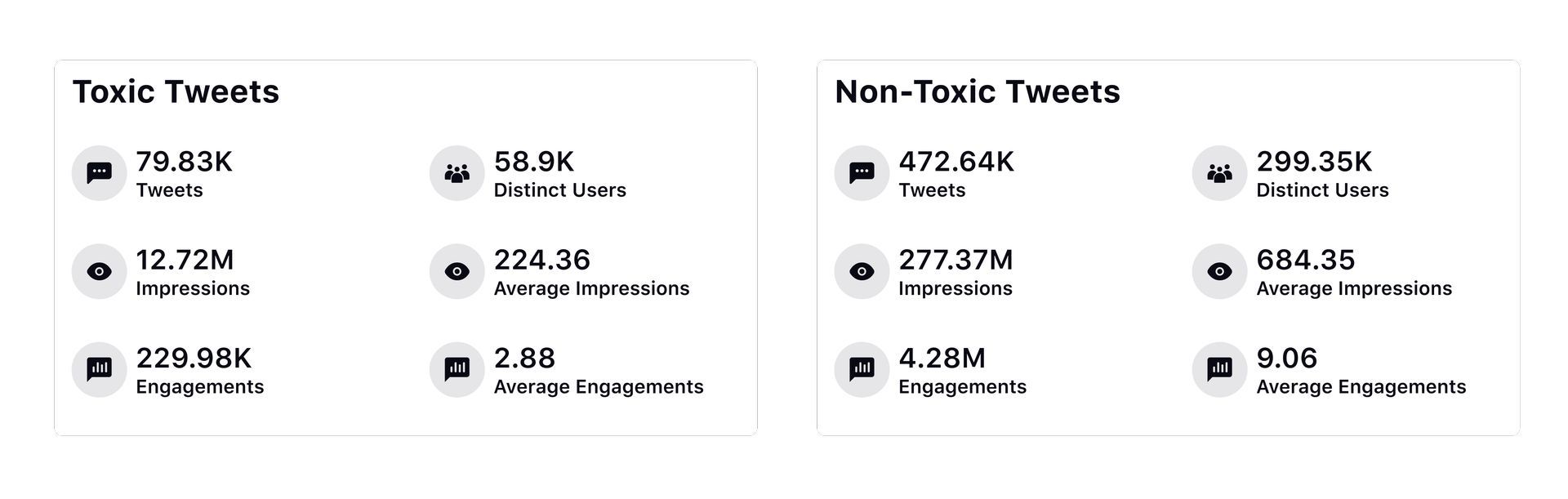

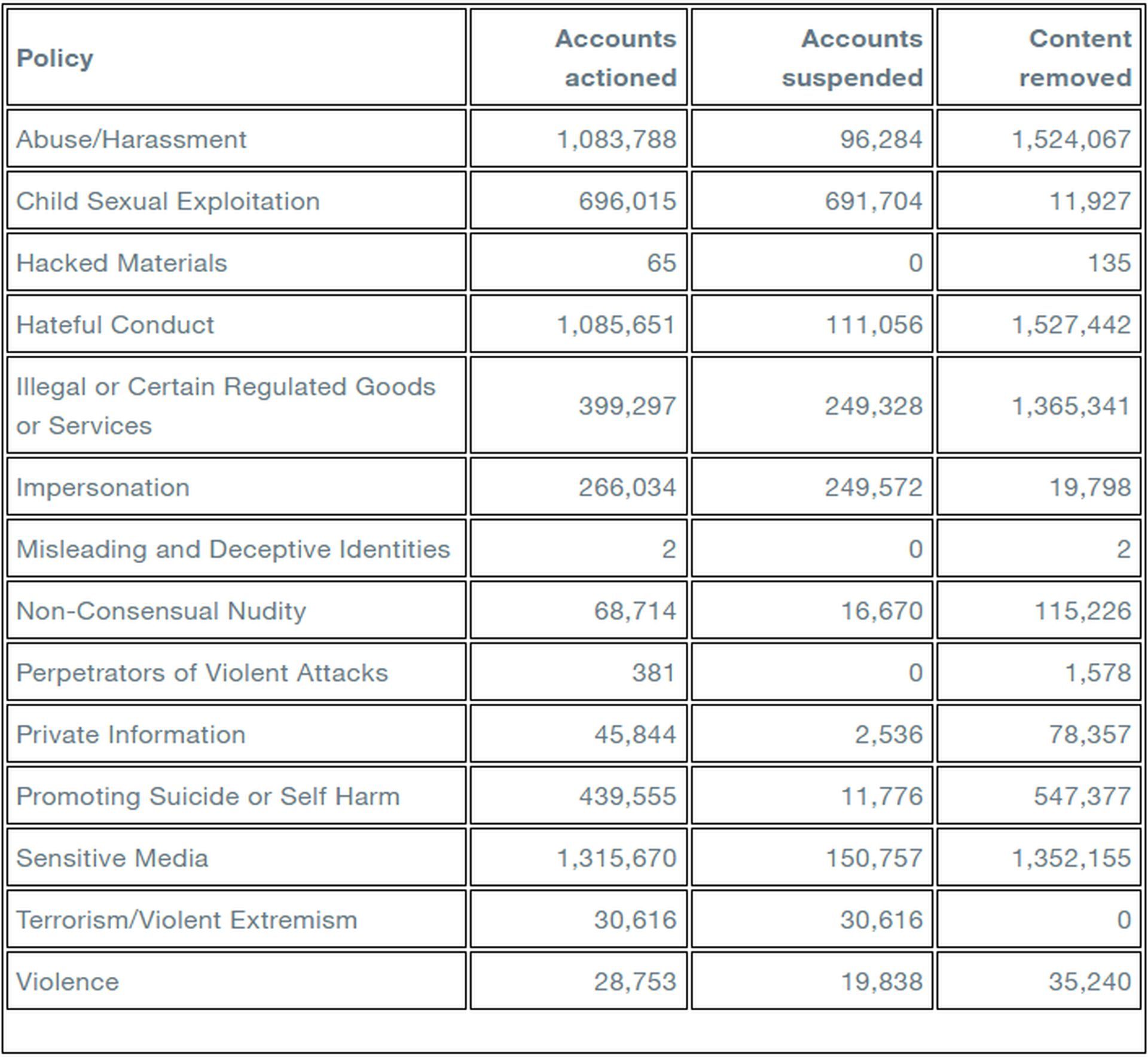

X Corp claims that its efforts have drastically reduced hate speech impressions, stating that 99.99% of tweet impressions are ‘healthy.’ However, the company has not published detailed data or a comprehensive report to back up this assertion. This lack of transparency has fueled skepticism about the validity of its claims.

Despite what others may claim, the truth is that Twitter is the safest it’s ever been and the numbers speak for themselves – 99.99% of Tweet impressions are healthy. And we’re achieving this while defending our users’ right to free speech.

To learn more about the work we’re… https://t.co/DM8YnXYdnL

— Safety (@Safety) July 19, 2023

Part of the dispute centers on how hate speech is defined and assessed. X Corp’s assessment partner, Sprinklr, takes a more nuanced approach, analyzing the context in which hate terms are used to determine whether they are genuinely harmful. Using this methodology, Sprinklr found that 86% of posts containing hate speech terms were not intended to cause harm.

The call for transparency

While X Corp pushes back against outside assessments, the call for transparency remains strong. Critics argue that the company should provide a detailed report to support its claims and counter the CCDH’s findings effectively. Transparency and open dialogue could help build trust among stakeholders and facilitate a more constructive approach to addressing hate speech concerns.

The legal battle between X Corp and the CCDH shines a spotlight on the challenges posed by hate speech on social media platforms. It underscores the need for more comprehensive and transparent reporting mechanisms to address this issue effectively. As the world grapples with the complexities of online speech and open dialogue, data-driven insights should form the bedrock of strategies to combat hate speech and harmful content on social media.
