ChatGPT, the renowned AI chatbot, has undoubtedly revolutionized the way we interact with technology. However, cybercriminals have seized on its popularity to exploit unsuspecting users through a variety of ChatGPT scams. As more people fall prey to these deceptive tactics, it’s crucial to be aware of the potential threats and learn how to protect yourself from becoming a victim.

In this article, we’ll explore five ChatGPT scams that you need to watch out for. For more information on the security aspect of AI, make sure to check out why you should never let your attorney use ChatGPT.

5 most common ChatGPT scams

The emergence of AI-generated malware and the proliferation of phishing sites targeting ChatGPT users underscore the importance of staying vigilant and informed to safeguard against these evolving threats. From phishing emails crafted with impeccable language to deceptive browser extensions and fake apps, these scams pose significant risks to users’ personal information and digital security. By understanding these common ChatGPT scams, you can take proactive measures to protect yourself from falling victim to cybercriminals’ sophisticated ploys.

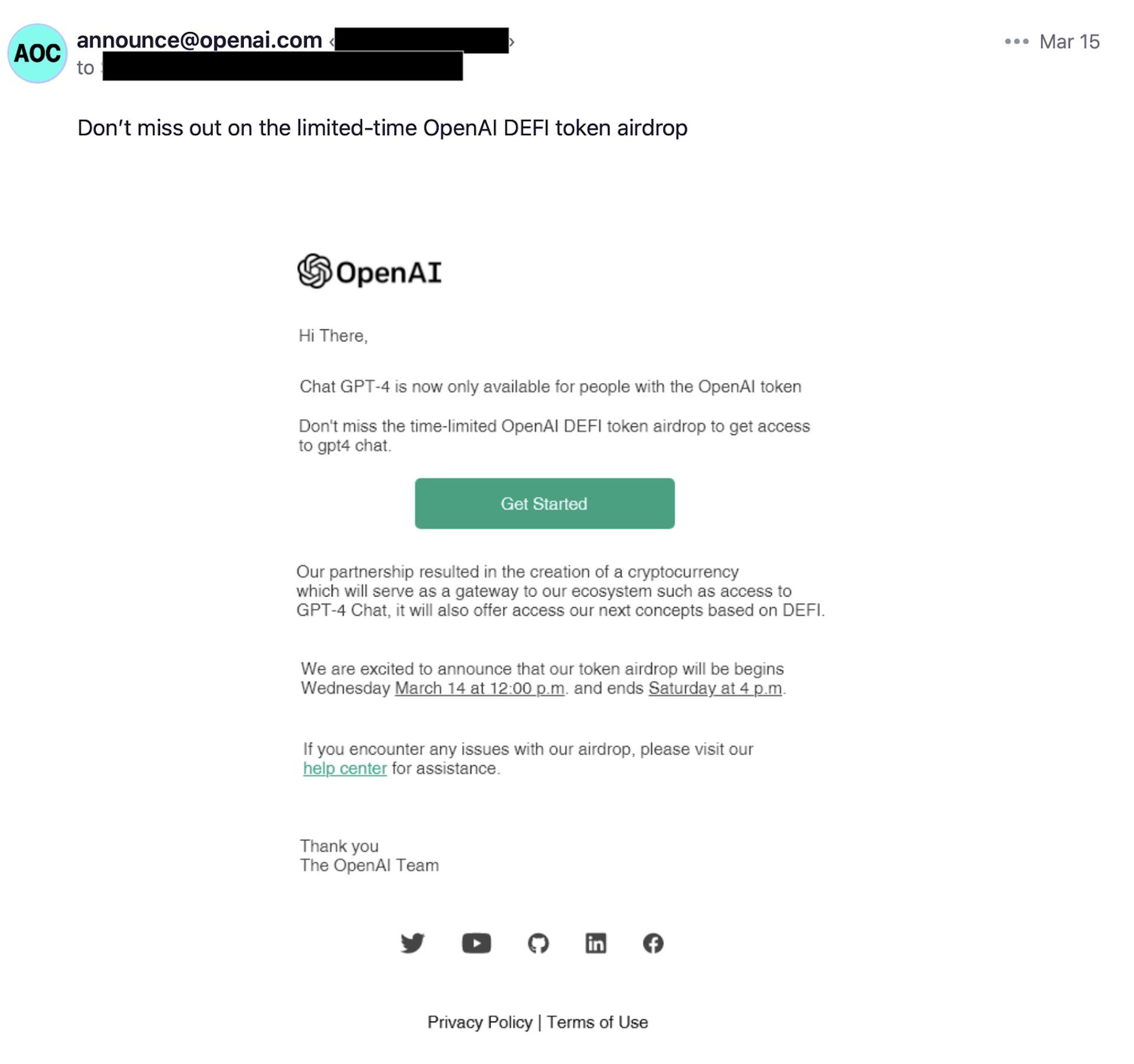

ChatGPT email scams

Email scams have plagued the internet for years, and now cybercriminals are leveraging ChatGPT to take phishing attacks to new heights. The ability of ChatGPT to generate realistic content on demand has led to the creation of well-crafted phishing emails. These messages appear authentic, devoid of any grammar or spelling errors, making it challenging for recipients to identify them as scams.

Imagine a non-English-speaking criminal wanting to target English-speaking individuals; using ChatGPT, they can effortlessly produce a convincing phishing email that deceives recipients into divulging sensitive information or clicking on malicious links. As such, the frequency of phishing attacks may rise, luring more victims into falling for these cleverly disguised traps.

Fake ChatGPT browser extensions

Browser extensions enhance our browsing experience, but malevolent actors have exploited this technology by creating fake ChatGPT extensions. These deceptive extensions may masquerade as legitimate ones, tricking users into downloading and installing malware or granting unauthorized access to their accounts.

For instance, a malicious extension named “Chat GPT for Google” emerged, bearing an uncanny resemblance to the genuine tool. Users who failed to notice subtle differences in the name unwittingly installed the malware-infested extension, leading to the compromise of their Facebook accounts and personal data.

To safeguard yourself, verify the legitimacy of any ChatGPT extension before installation, and rely only on reputable sources and app stores. Be cautious of names that appear similar to the authentic versions, as cybercriminals often exploit minor discrepancies to deceive users.
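The name check described above can be sketched in a few lines of Python. This is only an illustration, not a complete defense: the helper names `normalize` and `is_lookalike` are hypothetical, and the trusted name "ChatGPT for Google" is assumed here to be the genuine extension's listing. The idea is to flag a name that differs from the trusted one as written but becomes identical once spacing, punctuation, and capitalization are ignored.

```python
import re


def normalize(name: str) -> str:
    """Lowercase the name and drop everything except ASCII letters and digits."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def is_lookalike(candidate: str, trusted: str) -> bool:
    """Return True when the names differ as written but match once normalized.

    Both function names are hypothetical helpers for this sketch.
    """
    return candidate != trusted and normalize(candidate) == normalize(trusted)


# Assumption: "ChatGPT for Google" is the genuine extension's listed name.
TRUSTED_NAME = "ChatGPT for Google"

if __name__ == "__main__":
    for name in ["Chat GPT for Google", "ChatGPT for Google", "Grammar Checker"]:
        print(name, "->", "suspicious lookalike" if is_lookalike(name, TRUSTED_NAME) else "no lookalike match")
```

A check like this only catches spacing and punctuation tricks. A matching name says nothing about who published the extension, so the publisher and the store listing still need to be checked by hand.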

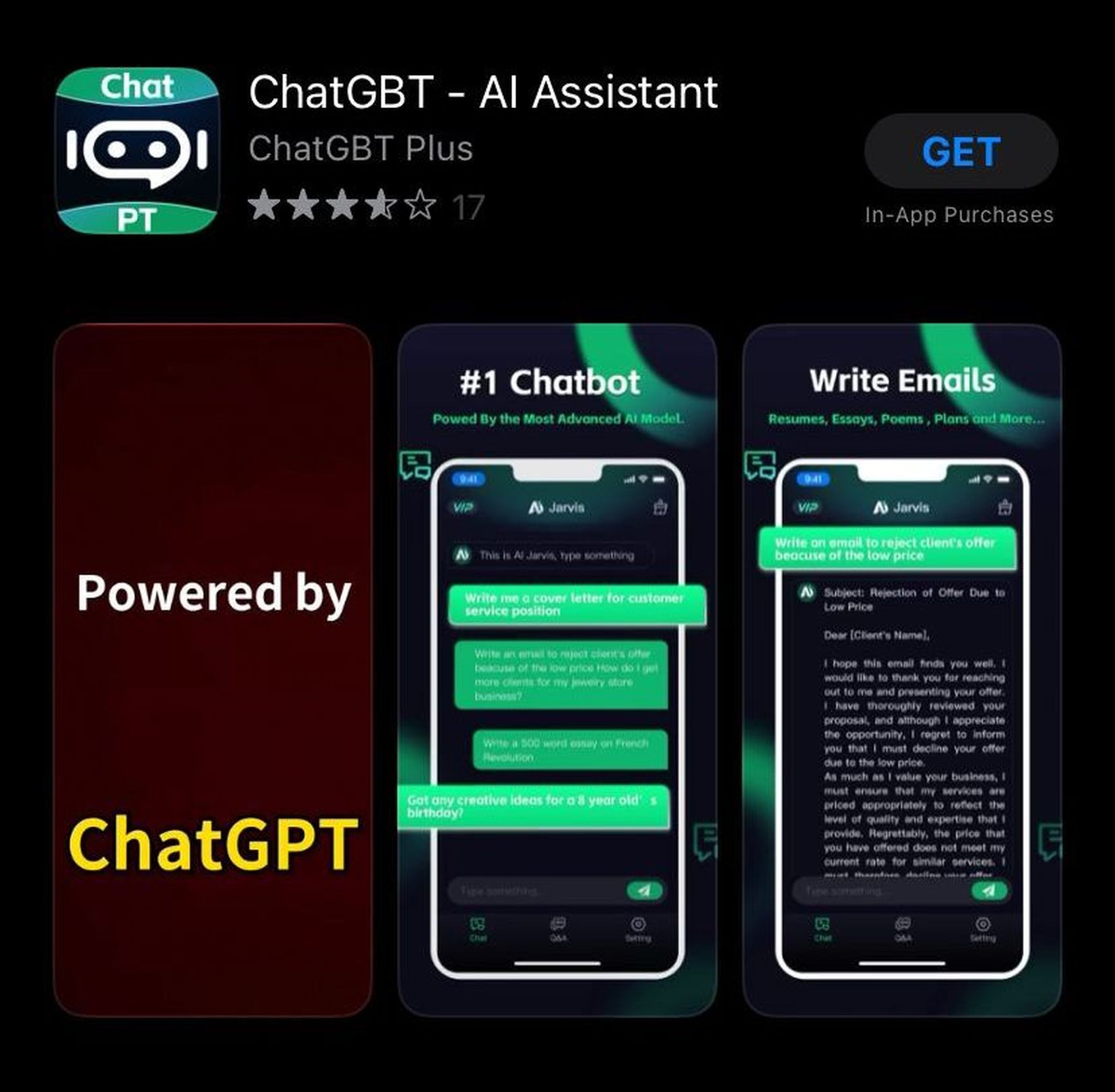

Fake ChatGPT apps

Similar to browser extensions, fraudulent ChatGPT apps have surfaced, posing a significant risk to users. Criminals capitalize on ChatGPT’s popularity to spread malware-laden apps or solicit personal information under the guise of providing a free version of the premium service.

In one instance, cybercriminals developed a counterfeit ChatGPT app, claiming to offer OpenAI’s ChatGPT Plus for free. However, unsuspecting users who fell for the ruse faced potential data theft or malware installation on their devices.

To stay safe, conduct thorough research on any app before downloading it, stick to reputable app stores, and scrutinize user reviews for any red flags. Remember, if an offer seems too good to be true, it probably is.

Malware created by ChatGPT

The growing concern surrounding AI and cybercrime isn’t unfounded. Malicious actors have already begun using ChatGPT to create and distribute malware. While these initial attempts may not have resulted in highly sophisticated malware, the potential implications are alarming.

Reports have surfaced of Python-based malware allegedly developed using ChatGPT. Although the impact of such simple malware may seem limited, this opens the door for aspiring cybercriminals with limited technical expertise to enter the realm of cybercrime. As AI advances, so too might the complexity and danger of these AI-generated threats.

A related development is WormGPT, an AI tool that can reportedly be accessed only through the dark web.

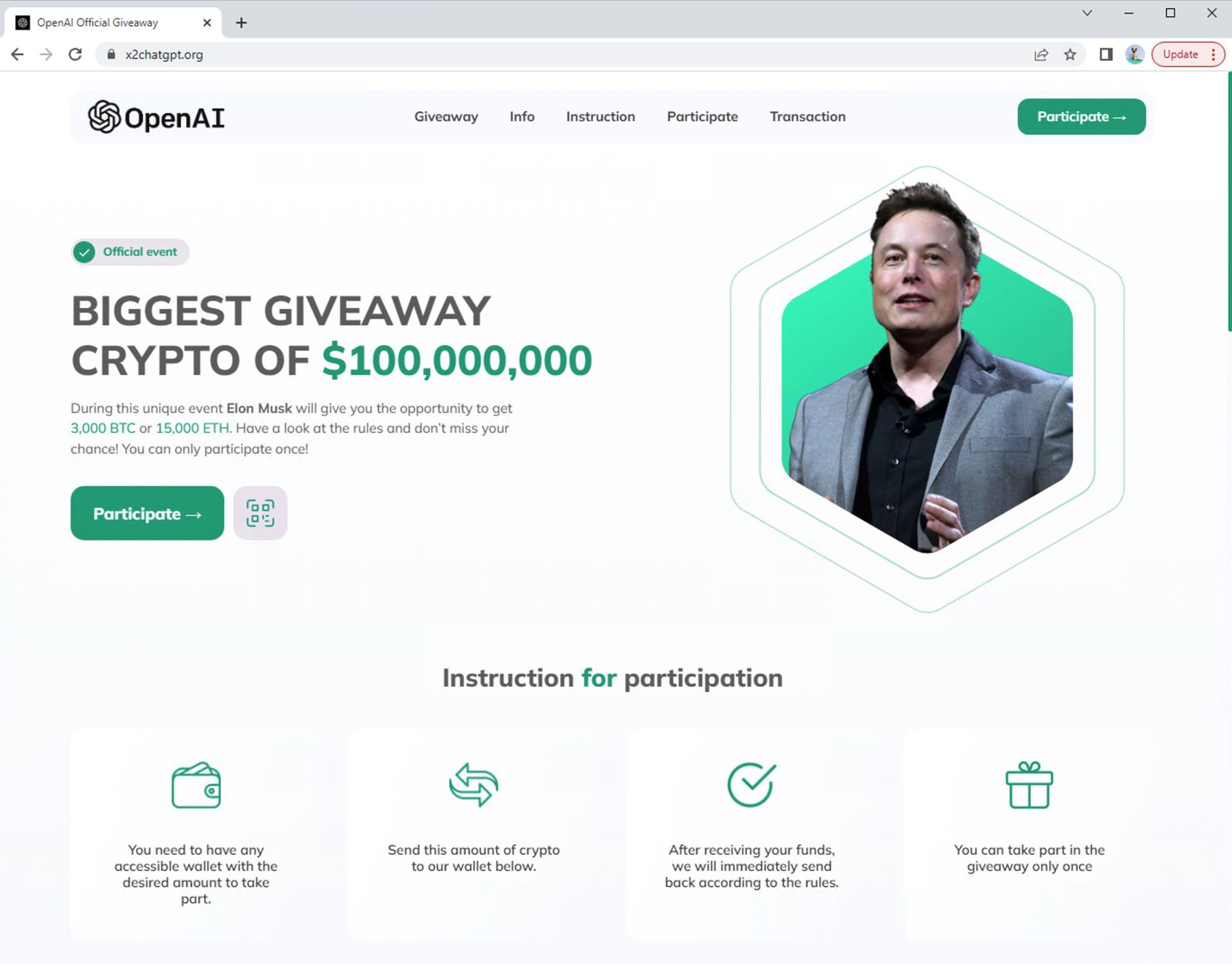

ChatGPT phishing sites

Phishing attacks often rely on websites that imitate a legitimate login or sign-up page and capture whatever users type into it, sometimes through keystroke logging. Cybercriminals design these sites to appear genuine, tricking users into providing personal information.

If you use ChatGPT, you may be at risk of encountering such phishing sites. For example, a seemingly official ChatGPT webpage might request personal information during the account creation process, leading to data theft and unauthorized account access.

Remain vigilant and verify the authenticity of websites before submitting any sensitive information. Be wary of suspicious emails claiming to be from ChatGPT staff, asking for verification through provided links, as these may lead to phishing pages.
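One way to make the link check above concrete is to compare a link's hostname against a short list of domains you already trust. The Python sketch below is a rough illustration under stated assumptions: the `TRUSTED_DOMAINS` set and the `is_trusted_url` helper are hypothetical, and the domains listed should be confirmed against OpenAI's own documentation before relying on them.

```python
from urllib.parse import urlsplit

# Assumption: these are the official domains; confirm them independently.
TRUSTED_DOMAINS = {"openai.com", "chatgpt.com"}


def is_trusted_url(url: str) -> bool:
    """Return True only for HTTPS links whose host is a trusted domain or its subdomain.

    `is_trusted_url` is a hypothetical helper written for this sketch.
    """
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower().rstrip(".")
    return any(host == domain or host.endswith("." + domain) for domain in TRUSTED_DOMAINS)


if __name__ == "__main__":
    samples = [
        "https://chat.openai.com/auth/login",
        "https://openai.com.account-verify.example/login",
        "https://chatgpt-login.com/",
        "http://chatgpt.com/",
    ]
    for link in samples:
        print(link, "->", "trusted host" if is_trusted_url(link) else "do not trust")
```

The comparison is made on the parsed hostname rather than on the raw text of the link, because a phishing address can contain a familiar domain name somewhere in the string while actually pointing to a different site.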

As ChatGPT gains more prominence, the potential for ChatGPT scams and attacks will continue to rise. By staying informed and vigilant, you can better protect yourself from falling victim to these deceptive tactics. Always be cautious when interacting with AI-generated content, and verify the authenticity of any apps, extensions, or websites related to ChatGPT before taking any action.
