- Stability AI unveiled FreeWilly, two new large language models (LLMs) trained on synthetic data.

- FreeWilly1 and FreeWilly2 were created in collaboration with CarperAI, based on Meta’s LLaMA and LLaMA 2 open-source models.

- These LLMs excel at solving complex problems in law and mathematics and at recognizing subtle language distinctions.

- Released under a non-commercial license, FreeWilly aims to promote research and open access in the AI community.

- FreeWilly2 outperforms GPT-3.5 in various tasks, including common sense and language inference, while FreeWilly1 demonstrates impressive performance despite being smaller and more energy-efficient.

Stability AI has just made public FreeWilly, a pair of new large language models (LLMs) trained on “synthetic” data. The models were created by CarperAI, Stability AI’s research lab, and are now publicly available.

The two new LLMs were unveiled on Friday by Stability AI, the company behind the Stable Diffusion image generation AI. The company was founded by former UK hedge fund manager Emad Mostaque, who has been accused of fabricating his resume. Both LLMs are based on versions of Meta’s LLaMA and LLaMA 2 open-source models but were trained on a completely new, smaller dataset that includes synthetic data.

Both models are quite good at resolving challenging issues in technical disciplines like law, mathematics, and fine language distinctions.

The FreeWillys were released by CarperAI, a division of Stability, under the terms of a “non-commercial license,” which forbids using them for commercial, corporate, or profit-making purposes. They are instead meant to advance research and encourage open access within the AI community.

https://twitter.com/carperai/status/1682479222579765248

What is FreeWilly?

On July 21, 2023, Stability AI released FreeWilly1 and its successor FreeWilly2 in collaboration with the AI development lab CarperAI. FreeWilly1 builds on Meta’s LLaMA 65B large language model and was tuned via supervised fine-tuning (SFT) on synthetically generated datasets. FreeWilly2, in turn, was built on LLaMA 2 70B.

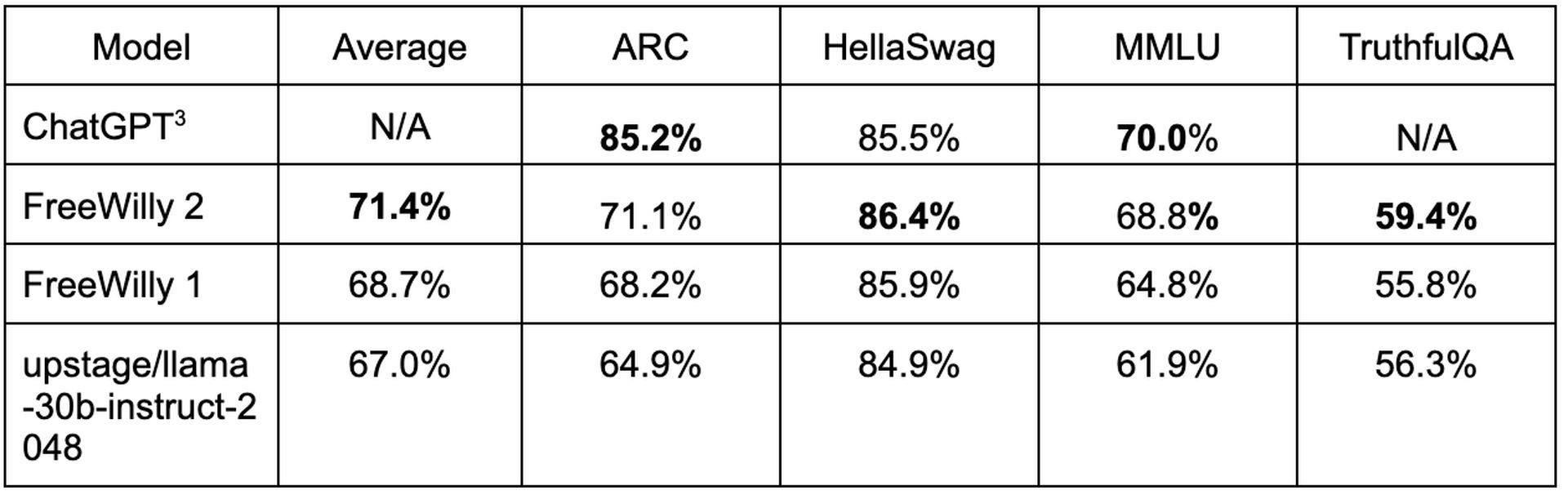

FreeWilly2 reportedly performs on par with GPT-3.5 on several tasks. On “HellaSwag,” a natural language inference benchmark that assesses common sense, FreeWilly2 scored 86.4% accuracy, surpassing the 85.5% achieved by ChatGPT running GPT-3.5, according to independent benchmark tests conducted by Stability AI researchers.
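For readers unfamiliar with how an accuracy figure such as 86.4% on HellaSwag is typically derived, the following is a minimal sketch. It assumes the common multiple-choice setup in which a model assigns a score (for example, a log-likelihood) to each candidate ending and the highest-scoring ending counts as its answer. The function names and the scores below are hypothetical and purely illustrative. They are not taken from Stability AI's evaluation, whose exact scoring details (such as length normalization) may differ.

```python
from typing import Sequence


def pick_ending(scores: Sequence[float]) -> int:
    """Return the index of the highest-scoring candidate ending."""
    return max(range(len(scores)), key=lambda i: scores[i])


def multiple_choice_accuracy(
    all_scores: Sequence[Sequence[float]], gold: Sequence[int]
) -> float:
    """Fraction of questions where the top-scoring ending is the correct one."""
    if len(all_scores) != len(gold):
        raise ValueError("need exactly one gold label per question")
    if not gold:
        raise ValueError("need at least one question")
    correct = sum(pick_ending(s) == g for s, g in zip(all_scores, gold))
    return correct / len(gold)


# Hypothetical per-ending scores for four questions (higher is better).
example_scores = [
    [-12.1, -9.4, -15.0, -11.2],   # model picks ending 1
    [-8.0, -8.5, -7.9, -10.3],     # model picks ending 2
    [-20.5, -18.2, -19.9, -18.9],  # model picks ending 1
    [-5.5, -6.1, -7.0, -6.8],      # model picks ending 0
]
example_gold = [1, 2, 3, 0]        # hypothetical correct endings

accuracy = multiple_choice_accuracy(example_scores, example_gold)
print(f"{accuracy:.1%}")  # prints 75.0% (3 of 4 correct)
```

Under this setup, a gap such as 86.4% versus 85.5% means roughly nine more correct answers per thousand questions.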

When tested against the large language model benchmark “AGIEval,” FreeWilly2 outperformed GPT-3.5 in most tasks, with the exception of “SAT Math,” the mathematics section of the US college admission exam.

Stability AI also places a strong emphasis on the cautious release of the FreeWilly models and on the rigorous risk analysis carried out by an internal, specialized team. The company actively encourages outside feedback to improve its safety procedures.

FreeWilly was trained using the “Orca” method

The names of the models are puns on the “Orca” AI training method developed by Microsoft researchers, which allows “smaller” models (those exposed to less data) to perform just as well as large foundation models trained on far larger datasets. (This does not allude to the orcas that sank a boat.)

With the help of four datasets created by Enrico Shippole, FreeWilly1 and FreeWilly2 were trained on 600,000 data points, which is only 10% of the size of the original Orca dataset. As a result, compared to the original Orca model and most other leading LLMs, both were much cheaper to train and more environmentally friendly (consuming less energy and leaving a smaller carbon footprint). The models still performed very well, occasionally matching or even outperforming ChatGPT running GPT-3.5.

The researchers combined EleutherAI’s lm-eval-harness with AGIEval to evaluate these models. The findings show that both FreeWilly models do remarkably well at complex reasoning, at recognizing linguistic subtleties, and at problem-solving in specialized fields like law and mathematics.

According to the team, the two models advance natural language understanding and open up possibilities that were previously unfeasible. They look forward to seeing new artificial intelligence applications built on these models.

Featured image credit: Stability.