WormGPT, a chatbot designed to assist internet criminals, was built by a hacker.

SlashNext, an email security firm that tested the chatbot, believes that the developer of WormGPT is selling access to the program on a well-known hacker forum. According to the company’s blog, “We see that malicious actors are now creating their own custom modules similar to ChatGPT, but easier to use for nefarious purposes.”

The hacker appears to have first introduced the chatbot in March before launching it last month. Unlike ChatGPT or Google’s Bard, WormGPT has no safeguards to prevent it from responding to malicious requests.

WormGPT: What is it?

WormGPT is a recently released malicious alternative to ChatGPT. It responds to requests for dangerous content that other well-known generative AI tools, such as ChatGPT or Bing, are designed to refuse.

It is critical to remember that WormGPT is a malicious chatbot designed to help cybercriminals commit crimes, so it is not recommended for any purpose. Understanding the risks associated with WormGPT and its potential consequences underscores the importance of using technology ethically and responsibly. Let’s look at WormGPT’s properties, how it differs from other GPT models, and the risks it poses.

Advances in artificial intelligence (AI) technologies such as OpenAI’s ChatGPT have given business email compromise (BEC) attacks a new vector. ChatGPT is a powerful AI model that generates human-like text based on its input. Cybercriminals can raise the success rate of their attacks by automating the creation of fake emails that are highly convincing and tailored to the recipient.

WormGPT: How to use it?

If you still wish to access and use WormGPT after reading the above, you may visit its Hack Forums page at your own risk. This means that if WormGPT causes any damage or problems for you or anybody else, you are responsible.

WormGPT: How to access?

WormGPT can be accessed through the official forum URL; however, using it for phishing or any other nefarious purpose is strictly forbidden. For general and lawful inquiries, it is far preferable to use the regular ChatGPT, since WormGPT does not differ from it in how it responds to such questions.

In theory, WormGPT could be used for legitimate purposes. But it’s important to remember that WormGPT was developed and distributed with malicious intent. Any use of it raises ethical dilemmas and legal risks.

WormGPT: Drawbacks and hazards

Using WormGPT for malicious purposes means using it to commit crimes such as data theft, hacking, and other cybercrimes. This is very risky and harmful, and it is illegal: offenders may face legal consequences.

To avoid harming people or breaking the law, it is crucial to always use technology ethically and responsibly. The main drawbacks and hazards of WormGPT are:

- Dangerous and illegal.

- Attacks from malware and phishing.

- Modern cyberattacks.

- Fostering illegal activity.

- Legal implications.

Let’s take a closer look.

Dangerous and illegal

Using WormGPT for illicit purposes is illegal and may result in severe repercussions. Such use violates laws pertaining to data theft, hacking, and other unlawful conduct.

Attacks from malware and phishing

WormGPT can be used to create malware and phishing attacks that harm people and businesses. These attacks can steal money and personal information and cause other kinds of damage.

Modern cyberattacks

Additionally, WormGPT gives criminals the ability to carry out sophisticated attacks that can seriously damage networks and computer systems. Businesses, governments, and other organizations may be affected by these attacks.

Fostering illegal activity

WormGPT also makes it easier for cybercriminals to commit crimes, endangering the safety of innocent individuals and institutions. This includes fraud, identity theft, and other forms of online crime.

Legal implications

Cybercriminals who use WormGPT may face legal repercussions, including criminal prosecution. Penalties may include fines, jail time, or other consequences.

Conclusion

WormGPT is a dangerous chatbot developed with malicious intent. Its use for nefarious purposes poses significant risks to individuals, businesses, and society as a whole. This malicious chatbot can facilitate phishing attacks, the spread of malware, and other cybercrimes.

Such activities not only harm innocent victims but also have legal implications, potentially resulting in severe penalties for those involved. It is crucial to recognize the ethical and legal responsibilities associated with using technology and to always prioritize the well-being and security of others when engaging with AI tools like WormGPT.


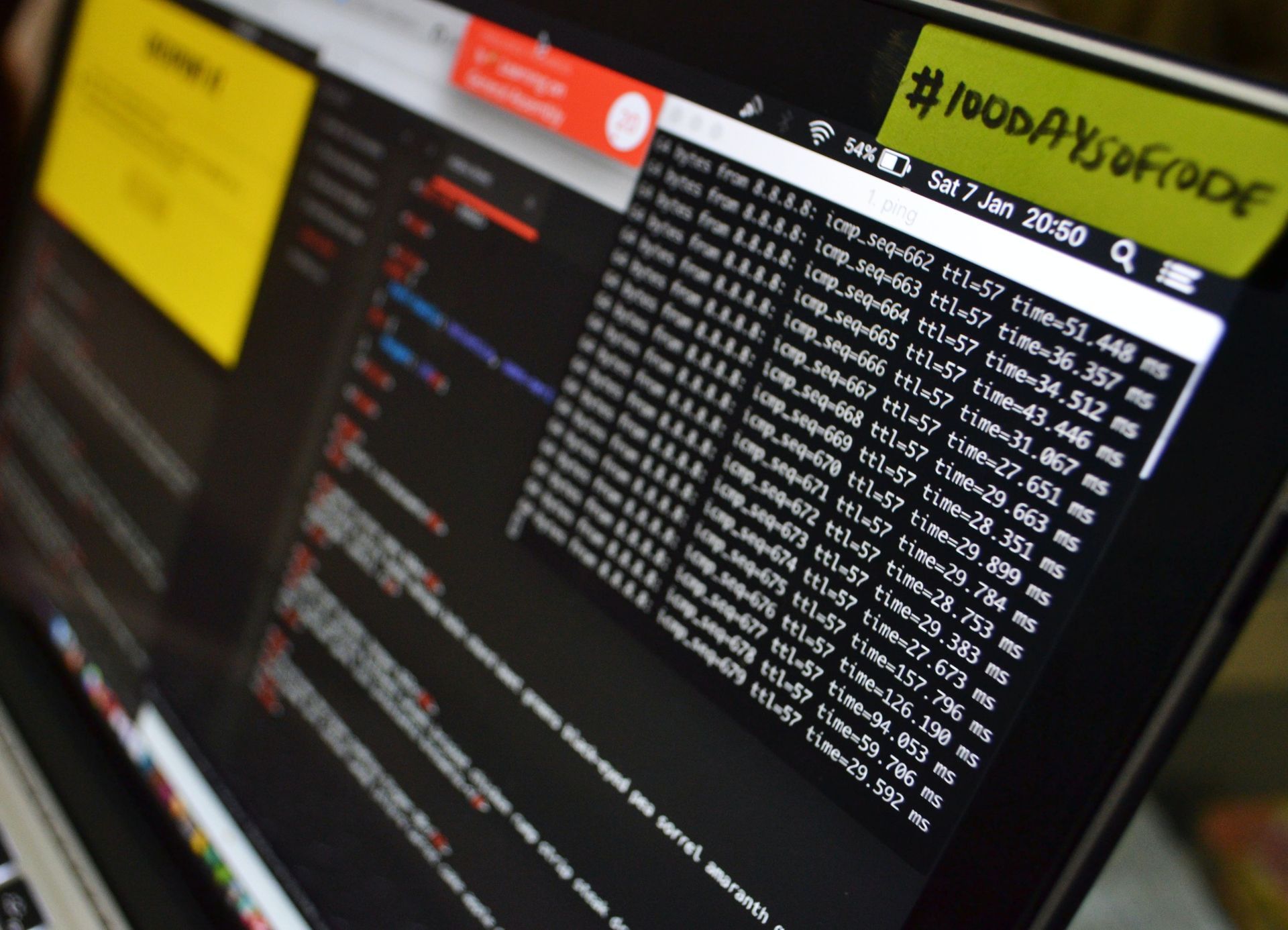

Featured image credit: Clint Patterson on Unsplash.