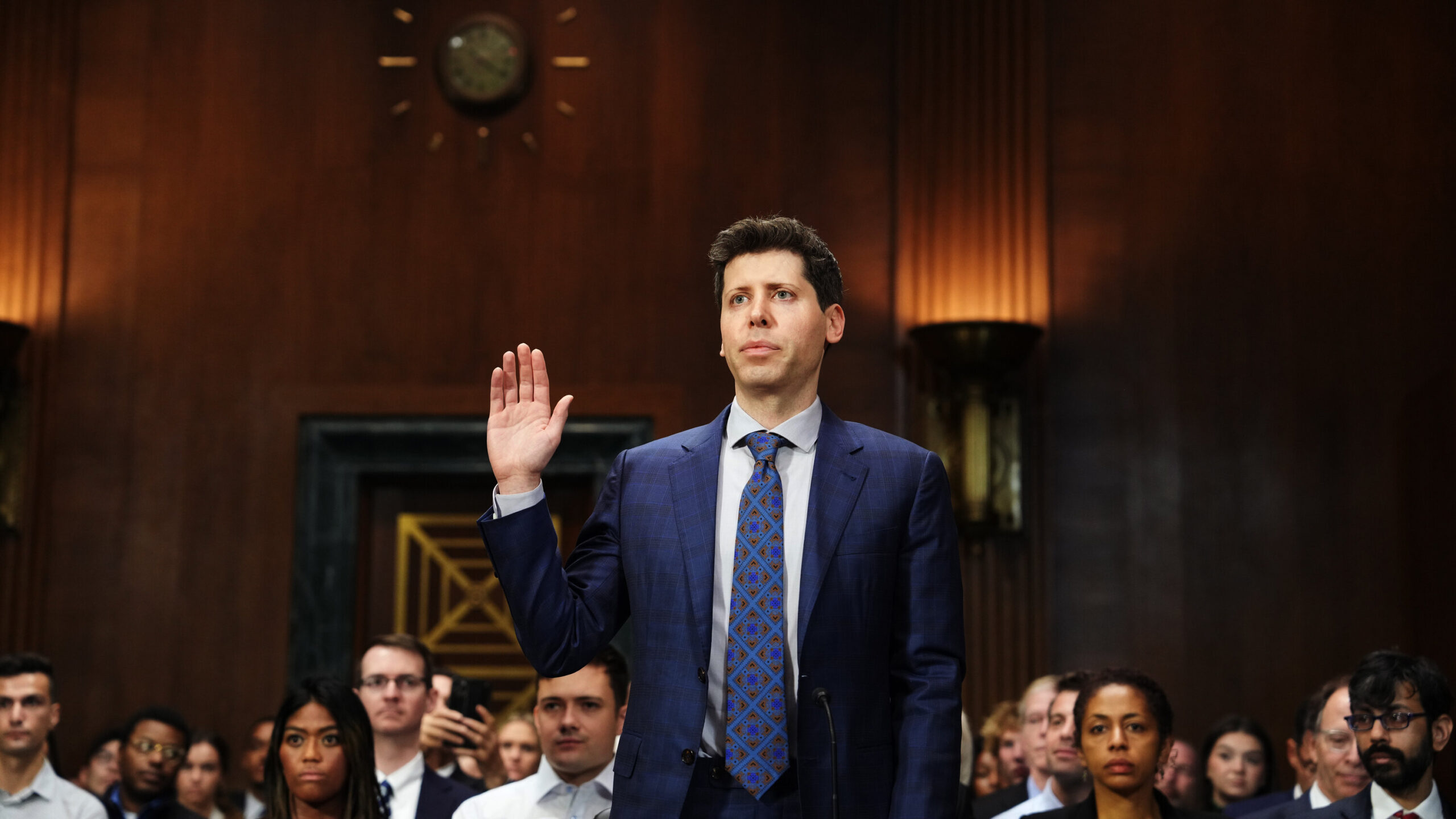

OpenAI CEO Sam Altman continues his international outreach, building on the momentum from his recent appearances before the U.S. Congress. Overseas, however, his tone differs from the one he took in the AI-friendly United States: he has hinted that he could relocate his tech ventures if regulators do not meet his terms.

Altman’s globetrotting journey has taken him from Lagos, Nigeria to various European destinations, culminating in London, UK. Despite facing some protests, he actively engages with prominent figures from the tech industry, businesses, and policymakers, emphasizing the capabilities of OpenAI’s ChatGPT language model. Altman seeks to rally support for pro-AI regulations while expressing discontent over the European Union’s definition of “high-risk” systems, as discussed during a panel at University College London.

The European Union’s proposed AI Act introduces a three-tiered classification for AI systems based on risk levels. Instances of AI that present an “unacceptable risk” by violating fundamental rights include social scoring systems and manipulative social engineering AI. On the other hand, “high-risk AI systems” must adhere to comprehensive standards of transparency and oversight, tailored to their intended use.

Altman expressed concerns about the current draft of the law, stating that both ChatGPT and GPT-4 could fall under the high-risk category, in which case OpenAI would have to comply with specific requirements. He said OpenAI intends to comply but acknowledged technical limitations that may affect its ability to do so. As reported by Time, he stated, “If we can comply, we will, and if we can’t, we’ll cease operating… We will try. But there are technical limits to what’s possible.”

EU’s AI Act gets updated as AI keeps improving

Originally aimed at addressing concerns related to China’s social credit system and facial recognition, the AI Act has encountered new challenges with the emergence of OpenAI and other startups. The EU subsequently introduced provisions in December targeting “foundation models” such as the large language models (LLMs) that power AI chatbots like ChatGPT. Recently, a European Parliament committee approved these updated regulations, which would require safety checks and risk management.

In contrast to the United States, the EU has shown a greater inclination to scrutinize OpenAI. The European Data Protection Board has been actively monitoring ChatGPT’s compliance with privacy laws. However, the AI Act is still subject to revision, which likely explains Altman’s global tour as he seeks to navigate the evolving landscape of AI regulations.

Altman reiterated familiar points from his recent Congressional testimony, expressing both concerns about AI risks and recognition of its potential benefits. He advocated for regulation, including safety requirements and a governing agency for compliance testing. Altman called for a regulatory approach that strikes a balance between European and American traditions.

However, Altman cautioned against regulations that could limit user access, harm smaller companies, or impede the open-source AI movement. OpenAI itself has become less open than it once was, citing competition, which contrasts with its previous practices. It is worth noting that any new regulations would inherently benefit OpenAI by providing a framework for accountability. Compliance checks could also raise the cost of developing new AI models, giving the company an advantage in the competitive AI landscape.

Several countries have imposed bans on ChatGPT, Italy among them. However, after OpenAI enhanced users’ privacy controls, the ban was lifted by Italy’s far-right government. OpenAI may need to keep addressing concerns and making concessions to maintain a favorable relationship with governments worldwide, especially given its user base of over 100 million active ChatGPT users.

Why do governments act cautiously over AI chatbots?

Governments may impose bans on ChatGPT or similar AI models for several reasons:

- Misinformation and Fake News: AI models like ChatGPT can generate misleading or false information, contributing to the spread of misinformation. Governments may impose bans to prevent the dissemination of inaccurate or harmful content.

- Inappropriate or Offensive Content: AI models have the potential to generate content that is inappropriate, offensive, or violates cultural norms and values. Governments may ban ChatGPT to protect citizens from encountering objectionable material.

- Ethical Concerns: AI models raise ethical questions related to privacy, consent, and bias. Governments may impose bans to address concerns about data privacy, the potential misuse of personal information, or the perpetuation of biases in AI-generated content.

- Regulatory Compliance: AI models must adhere to existing laws and regulations. Governments may impose bans if they find that ChatGPT fails to meet regulatory requirements, such as data protection or content standards.

- National Security and Social Stability: Governments may perceive AI models as a potential threat to national security or social stability. They may impose bans to prevent the misuse of AI technology for malicious purposes or to maintain control over information flow.

It is worth noting that the specific reasons for government-imposed bans on ChatGPT can vary across jurisdictions, and decisions are influenced by a combination of legal, ethical, societal, and political factors.