OpenAI, the company behind ChatGPT, has developed the AI Text Classifier to distinguish text written by AI from text written by humans. We call it the “ChatGPT detector.” While it is not possible to identify AI-written text with absolute certainty, OpenAI believes its new tool can help counter false claims that AI-generated text was written by a human.

Understanding AI Text Classifier

In a statement, OpenAI claims that its AI Text Classifier can hinder the spread of automated misinformation, prevent the use of AI for academic deception, and block chatbots from pretending to be human.

OpenAI tested the tool’s accuracy on a set of English texts, where it correctly flagged 26% of AI-written text (true positives). However, it also mistakenly classified human-written text as AI-generated 9% of the time (false positives). According to OpenAI, the tool performs better on longer text, which is why it requires a minimum of 1,000 characters to run a test.
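To put those two rates in perspective, here is a rough Python sketch of what they imply when the classifier flags a text. It applies Bayes' rule to the 26% and 9% figures above. The share of AI-written text among the submissions (`ai_share`) is not something OpenAI reported, so the values tried here are assumptions chosen purely for illustration, and `precision` is a helper name made up for this example.

```python
def precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Chance that a flagged text is really AI-written (Bayes' rule)."""
    flagged_ai = tpr * ai_share              # AI-written texts that get flagged
    flagged_human = fpr * (1.0 - ai_share)   # human-written texts flagged by mistake
    return flagged_ai / (flagged_ai + flagged_human)


TPR = 0.26  # true positive rate reported by OpenAI
FPR = 0.09  # false positive rate reported by OpenAI

# ai_share values are assumptions for illustration, not measured data.
for ai_share in (0.10, 0.50):
    result = precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: precision {result:.1%}")
# AI share 10%: precision 24.3%
# AI share 50%: precision 74.3%
```

Under these assumptions, if only one text in ten is AI-written, roughly three out of four flagged texts would actually be human-written, which is one reason a flag alone should not be treated as proof.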

OpenAI also listed several other limitations, in its own words:

- We recommend using the classifier only for English text. It performs significantly worse in other languages and it is unreliable on code.

- Text that is very predictable cannot be reliably identified. For example, it is impossible to predict whether a list of the first 1,000 prime numbers was written by AI or humans, because the correct answer is always the same.

- AI-written text can be edited to evade the classifier. Classifiers like ours can be updated and retrained based on successful attacks, but it is unclear whether detection has an advantage in the long-term.

- Classifiers based on neural networks are known to be poorly calibrated outside of their training data. For inputs that are very different from text in our training set, the classifier is sometimes extremely confident in a wrong prediction.

How to use OpenAI’s ChatGPT detector?

OpenAI’s AI Text Classifier is easy to use. Simply go to this page, log in, paste the text to be tested, and hit the submit button. The tool then rates how likely it is that the text was generated by AI, using one of five labels:

- Very unlikely

- Unlikely

- Unclear if it is

- Possibly

- Likely

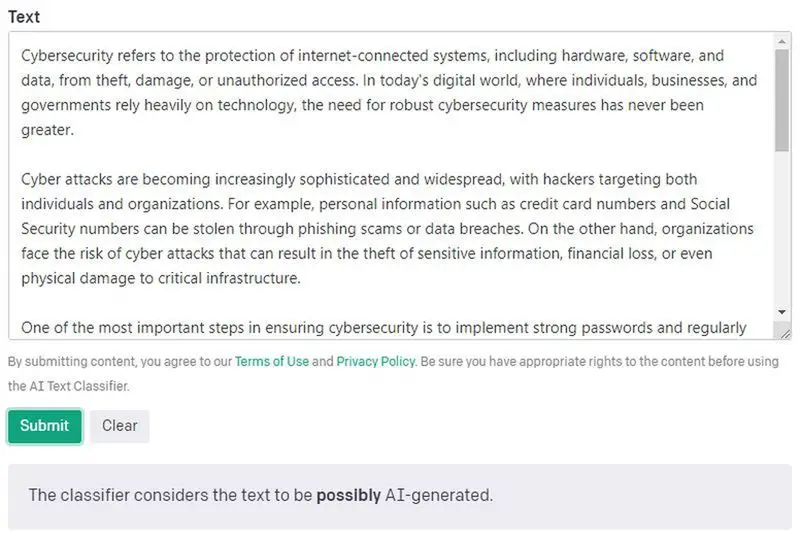
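As a rough illustration of how a score could be turned into one of these five labels, here is a minimal Python sketch. The 1,000-character minimum comes from OpenAI's description above. Everything else is an assumption: the cut-off values in `ILLUSTRATIVE_CUTOFFS` are evenly spaced placeholders, not OpenAI's actual thresholds, and the function names are made up for this example.

```python
from bisect import bisect_right

MIN_CHARS = 1000  # minimum input length stated by OpenAI

LABELS = ["very unlikely", "unlikely", "unclear if it is", "possibly", "likely"]

# Placeholder cut-offs, NOT OpenAI's real thresholds (assumption for illustration).
ILLUSTRATIVE_CUTOFFS = [0.2, 0.4, 0.6, 0.8]


def long_enough(text: str) -> bool:
    """Check the 1,000-character minimum before classifying."""
    return len(text) >= MIN_CHARS


def label_for(score: float, cutoffs=ILLUSTRATIVE_CUTOFFS) -> str:
    """Map a probability-like score in [0, 1] to one of the five labels."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    # bisect_right counts how many cut-offs the score has reached or passed.
    return LABELS[bisect_right(cutoffs, score)]


print(long_enough("too short"))  # False
print(label_for(0.95))           # likely
print(label_for(0.50))           # unclear if it is
```

The point of the sketch is only that the five labels are buckets over a continuous score, so a small change in the text can move the result across a boundary.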

We tested the tool by having ChatGPT write an essay on cybersecurity and then submitting that essay to the AI Text Classifier. The classifier rated the essay as “possibly generated by AI,” the second-highest of the five ratings but still not a definitive verdict.

This highlights the limitations of the tool, as it cannot say with complete certainty that the essay was written by AI. After making slight changes suggested by Grammarly, we lowered the rating from “possibly” to “unclear.” OpenAI is right that the classifier is easy to bypass, but the tool is not meant to be the only proof that AI wrote a text.


OpenAI states in a FAQ section at the bottom of the page:

“Our intended use for the AI Text Classifier is to foster conversation about the distinction between human-written and AI-generated content. The results may help, but should not be the sole evidence when deciding whether a document was generated with AI. The model is trained on human-written text from a variety of sources, which may not be representative of all kinds of human-written text.”

OpenAI mentions that the tool has not undergone extensive testing for detecting text that is a mixture of AI and human writing. While the AI Text Classifier can be useful for identifying text that may have been created by AI, it should not be relied upon as the sole basis for making a final determination.
