Do you know the history of the AI technologies that everyone keeps talking about? Today we will explore the question “How long has AI existed?” together.

Artificial intelligence (AI), a relatively new field with about 60 years of history, is a collection of sciences, theories, and methods that try to replicate human cognitive abilities. These include:

- Mathematical logic

- Statistics and probability

- Computational neuroscience

- Computer science

The field began to take shape amid World War II. Its advances are closely tied to those of computing and have enabled computers to take on increasingly complex tasks that previously only humans could perform.

How long has AI existed?

The ideas behind artificial intelligence (AI) have been with us since the 1940s, although the term itself was coined only in the mid-1950s. Some experts find the term misleading because the technology is still far from true human intelligence: research has not yet advanced to the point where its results can be compared with human capabilities. “Strong” AI, which so far has appeared only in science fiction, would require advances in fundamental science before it could model the world as a whole.

However, since 2010, the field has seen a new surge in popularity, mostly as a result of significant advances in computer processing power and easy access to vast amounts of data. Recurring promises and fears, some of them fanciful, make it hard to view the subject objectively.

In our opinion, a brief review of the discipline’s history can serve to frame present discussions.

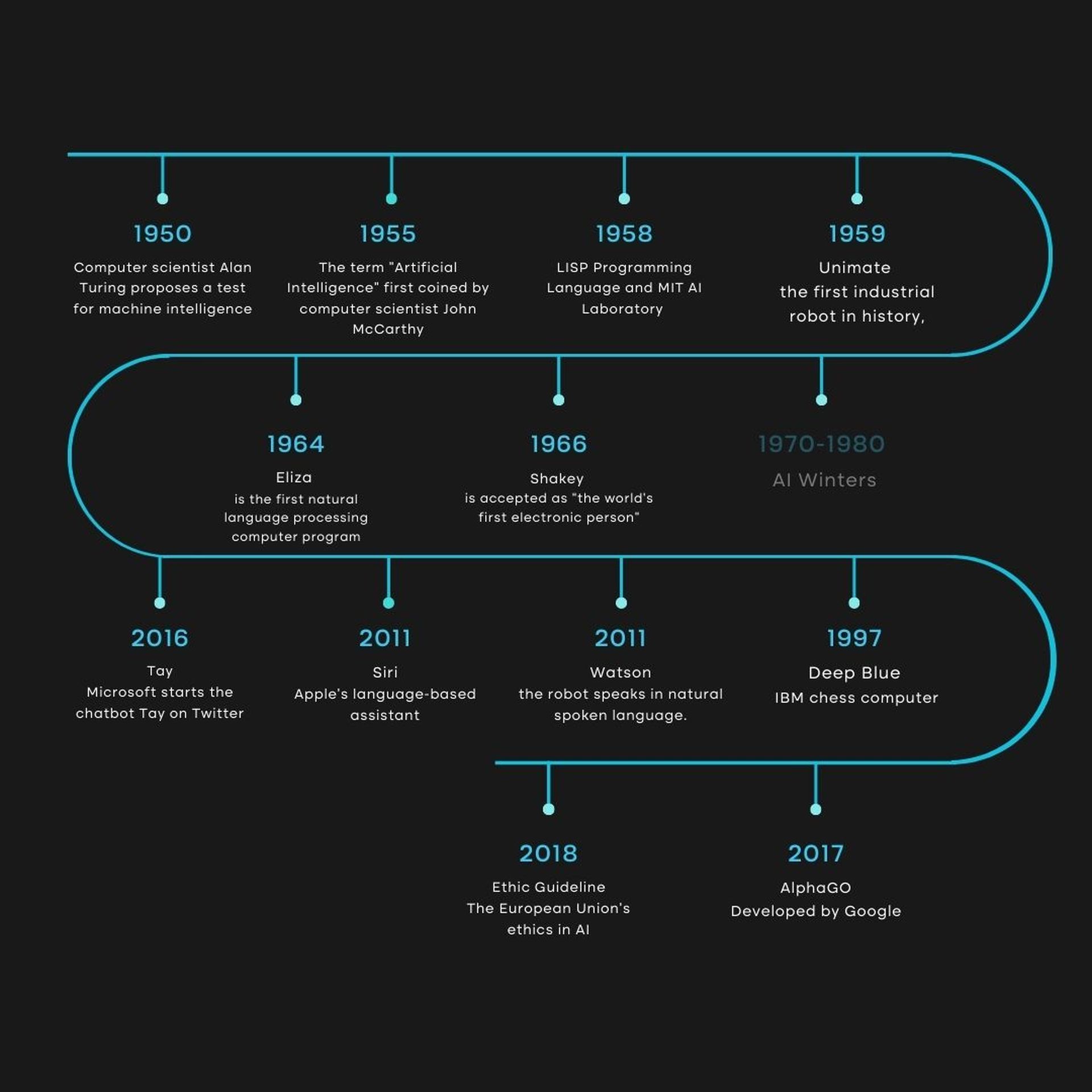

1940–1960: AI’s birth

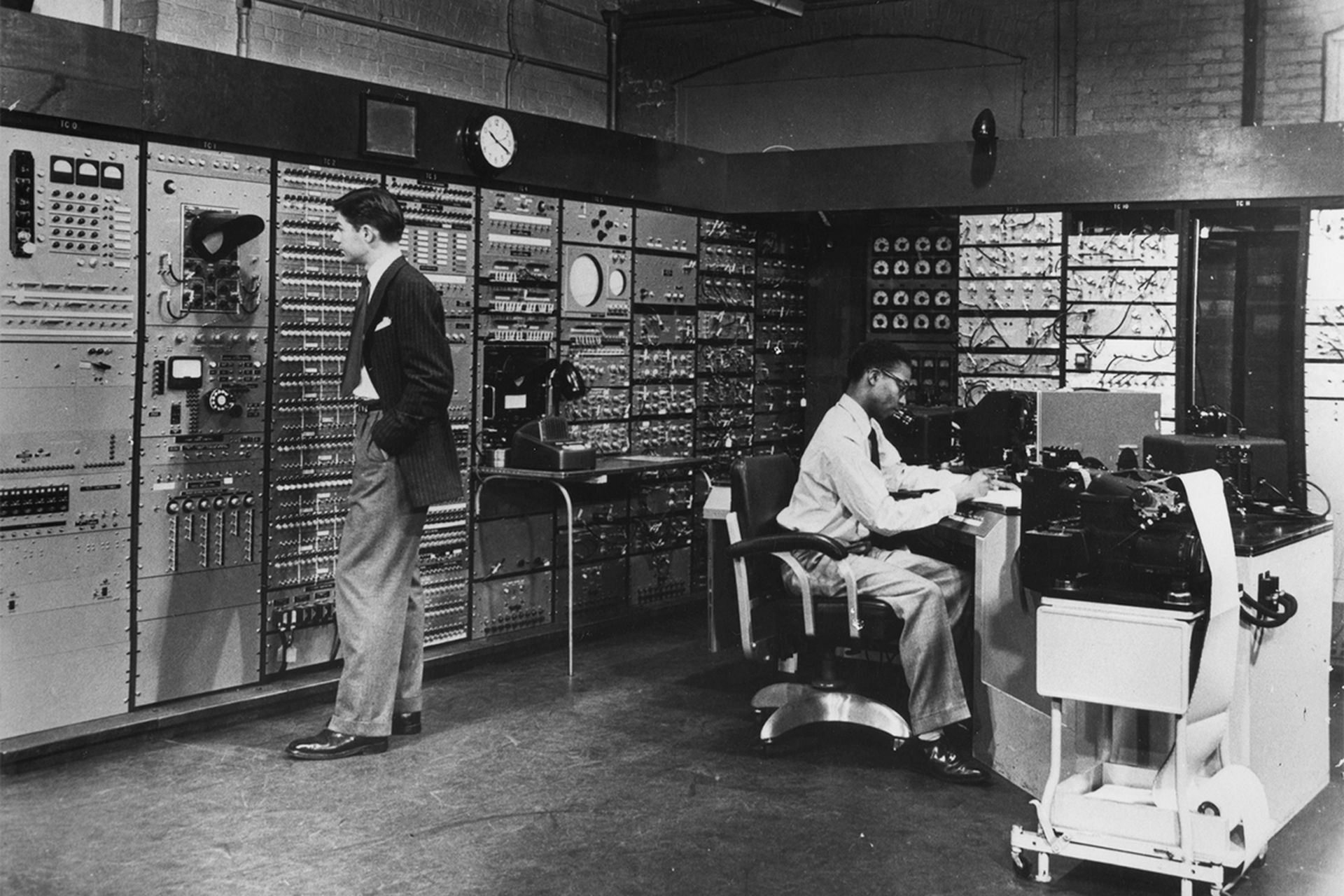

The period between 1940 and 1960 was strongly marked by the convergence of technological advances and the desire to understand how the functioning of machines and organic beings could be brought together.

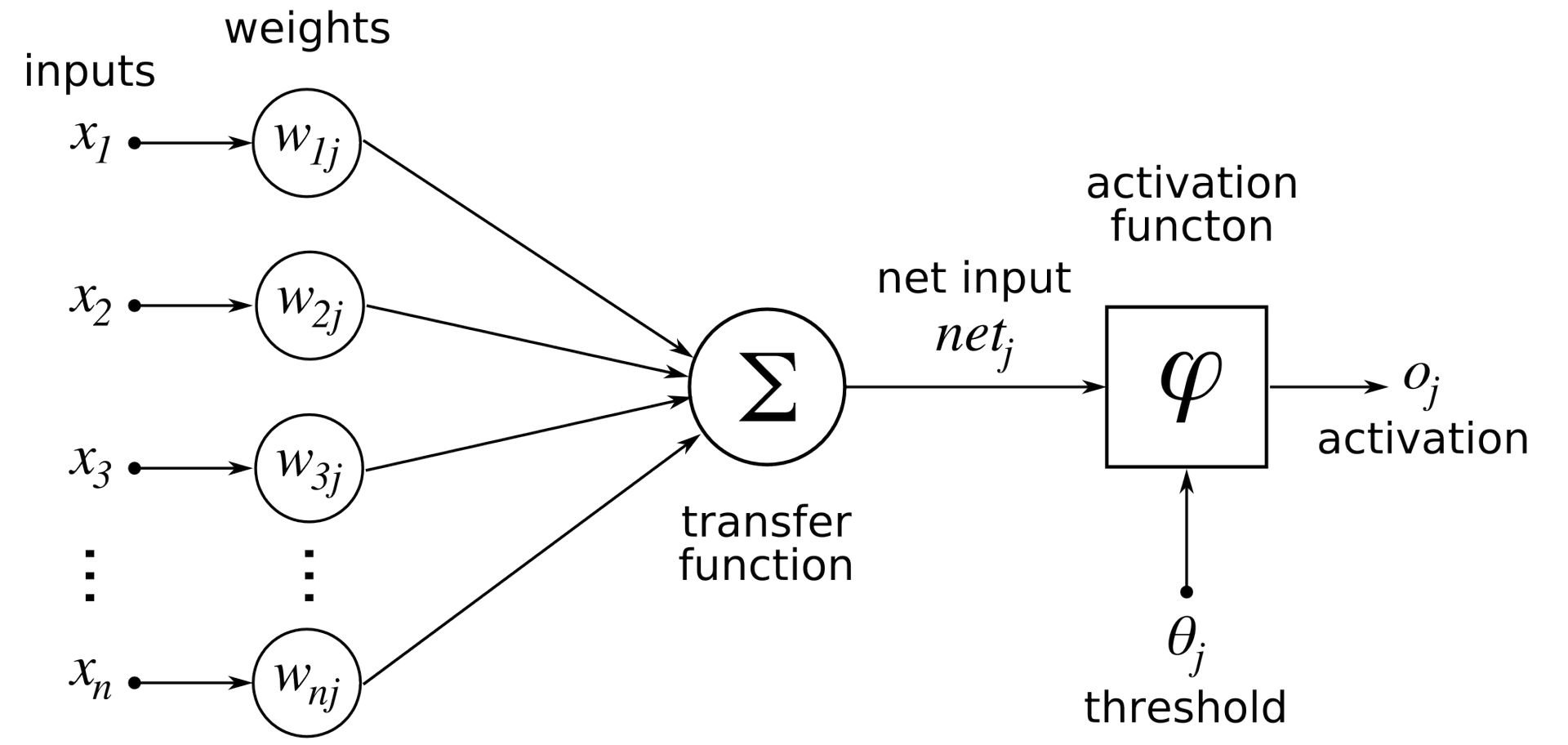

Norbert Wiener, a pioneer of cybernetics, saw the need to unify mathematical theory, electronics, and automation into “a whole theory of control and communication, both in animals and machines.” Shortly before this, in 1943, Warren McCulloch and Walter Pitts had created the first mathematical and computer model of the biological neuron.

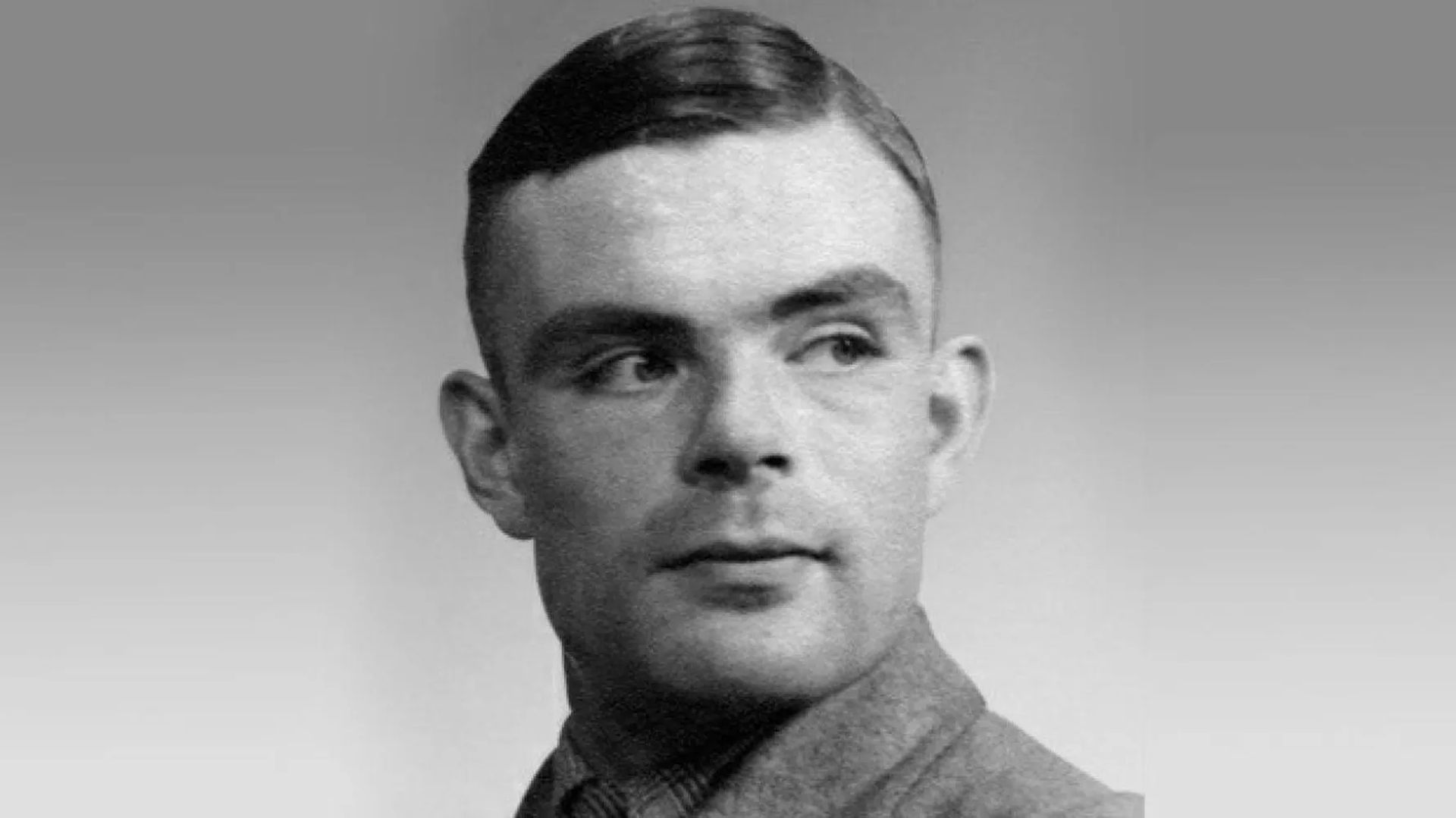

Although they did not coin the term artificial intelligence (AI), John von Neumann and Alan Turing were, at the beginning of the 1950s, the pioneers of the technology that underlies it. They drove the shift from 19th-century machines based on decimal logic to machines based on binary logic. In doing so, the two researchers codified the architecture of today’s computers and showed that the computer is a universal machine, able to carry out whatever it is programmed to do. Turing, for his part, first raised the question of machine intelligence in his renowned 1950 article “Computing Machinery and Intelligence,” where he described an “imitation game” in which a person must try to tell, in a teletype conversation, whether they are talking to a human or a machine.

Regardless of how contentious it may be, this piece is frequently regarded as the starting point for discussions over where the line between humans and machines should be drawn.

John McCarthy of MIT is credited with coining the term “AI,” which Marvin Minsky defined as:

“The development of computer programs that engage in tasks that are currently better done by humans because they require high-level mental processes like perceptual learning, memory organization, and critical reasoning.”

A meeting held at Dartmouth College in the summer of 1956 is generally regarded as the founding event of the discipline. Anecdotally, it is worth noting that it was less a conference than a workshop. McCarthy and Minsky were two of the six people who took part continuously throughout.

Although the technology remained exciting and promising, its appeal declined in the early 1960s. Computers had very little memory, which made it difficult to use a programming language.

Still, some foundations laid at that time remain in use today, such as solution trees for solving problems. As early as 1956, IPL (Information Processing Language) had made it possible to write the LTM (Logic Theorist Machine) program, which attempted to prove mathematical theorems.

1980–1990: Expert systems

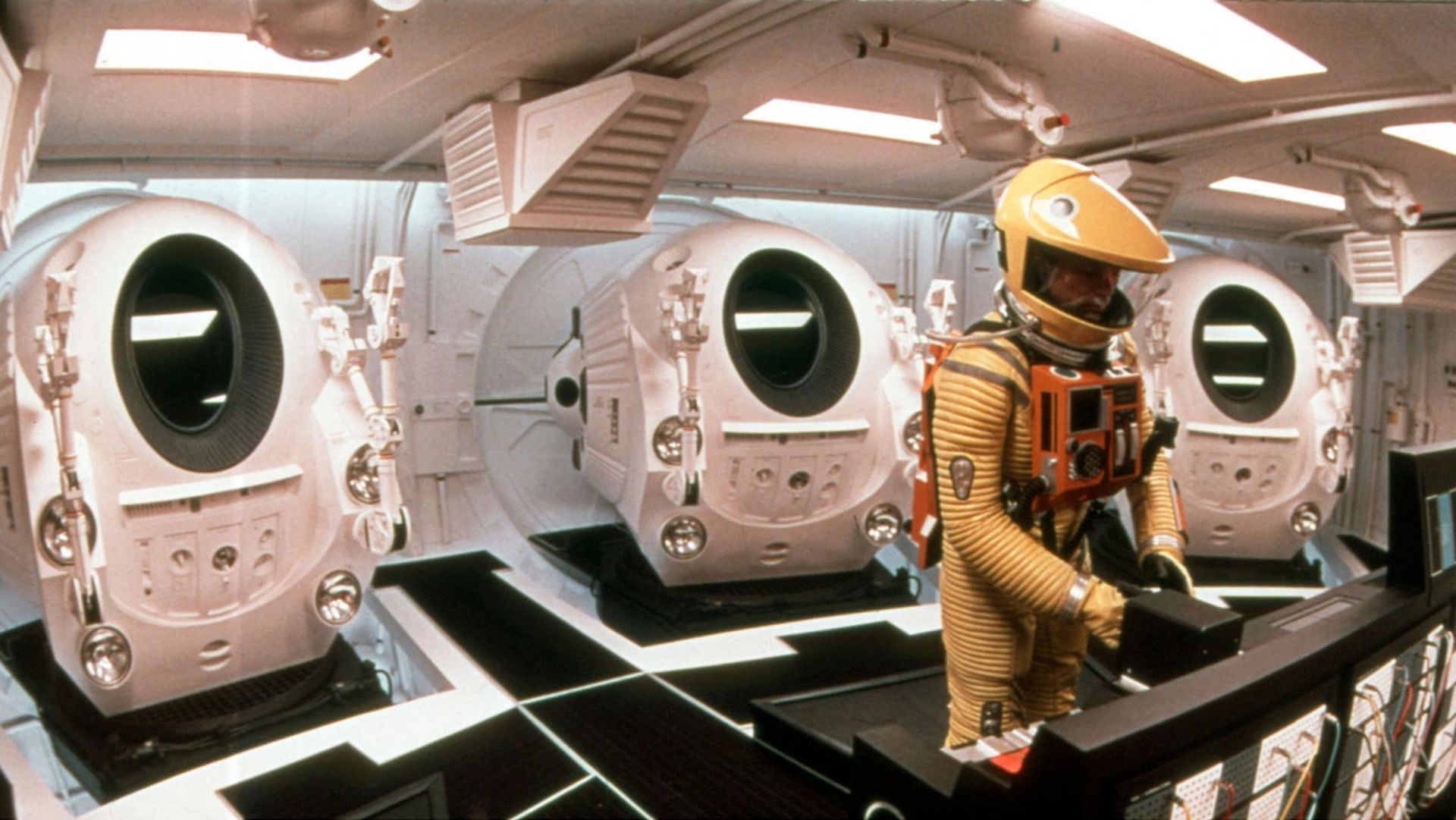

The 1968 film “2001: A Space Odyssey,” directed by Stanley Kubrick, features a computer named HAL 9000 that encapsulates all of the ethical concerns raised by artificial intelligence:

“Will it be very sophisticated, beneficial to humanity, or a danger?”

The film’s influence was obviously not scientific, but it helped popularize the theme, as did the science fiction novelist Philip K. Dick, who never stopped wondering whether machines could one day feel emotions.

The first microprocessors arrived in the early 1970s, and AI took off once more as expert systems entered their heyday. The path had been opened by DENDRAL in 1965 and MYCIN in 1972, both developed at Stanford University. These systems relied on an “inference engine,” which was programmed to be a logical mirror of human reasoning. Given input data, the engine produced answers with a high level of expertise.
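To make the idea of an inference engine more concrete, here is a minimal sketch in Python. It uses forward chaining, which is only one simple strategy and is an assumption made for illustration; it is not a reconstruction of how MYCIN or DENDRAL actually worked. The rules and fact names are hypothetical.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules, invented for this example.
RULES = [
    (("has_feathers",), "is_bird"),
    (("is_bird", "cannot_fly", "swims"), "is_penguin"),
]

derived = forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)
print(sorted(derived))
```

The second rule can only fire after the first one has added `is_bird`, which is the kind of chained reasoning such engines were built to perform. With hundreds of hand-written rules, following these chains becomes much harder.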

The promises foresaw massive progress, but the enthusiasm faded again in the late 1980s and early 1990s. Encoding such knowledge took a great deal of effort, and beyond 200 to 300 rules a “black box” effect set in that obscured the machine’s reasoning. Development and maintenance therefore became extremely difficult and, most importantly, many other quicker, simpler, and cheaper approaches were available. It should be remembered that in the 1990s the phrase “artificial intelligence” had all but disappeared from academic vocabulary, and more modest terms such as “advanced computing” had even taken its place.

In May 1997, IBM’s supercomputer Deep Blue defeated Garry Kasparov in a chess match. However, the victory did not spur funding or further development of this type of AI.

Deep Blue relied on a systematic brute-force approach in which all possible moves were evaluated and weighted. Although Deep Blue could tackle only a very narrow domain, the game of chess, and was far from being able to model the complexity of the world, the defeat of the human champion remained a hugely symbolic event.
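The brute-force idea can be illustrated on a much smaller game than chess. The sketch below is not Deep Blue's algorithm; it is a hypothetical toy example in Python in which two players alternately take 1, 2, or 3 stones from a pile and whoever takes the last stone wins. The program simply tries every legal move at every turn.

```python
def can_win(stones):
    """Return True if the player to move can force a win with `stones` left."""
    # Exhaustively try every legal move; a position is winning if at least
    # one move leaves the opponent in a losing position.
    return any(
        not can_win(stones - take)
        for take in (1, 2, 3)
        if take <= stones
    )


def best_move(stones):
    """Return a winning number of stones to take, or None if none exists."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return None


print(best_move(5))  # take 1 stone, leaving the opponent with 4
print(best_move(4))  # None: every move leaves the opponent a winning position
```

Exhaustive search like this works only because the toy game is tiny. The number of positions to explore grows very quickly with the size of the game, which is why this approach stays confined to narrow, well-defined problems.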

2010–Today: The modern era of AI

AI technologies, which came into the spotlight after Kasparov’s defeat by the supercomputer Deep Blue, reached new heights in the mid-2010s. Two factors help explain the new boom in the discipline around 2010:

- Access to enormous amounts of data

- The discovery that computer graphics card processors are extremely efficient at the calculations that learning algorithms require

The public successes made possible by this new technology increased investment. In 2011, Watson, IBM’s AI, defeated two Jeopardy! champions.

In 2012, Google X built an AI that could recognize cats in videos. This task required more than 16,000 processors, but the potential was astounding: a machine could learn to distinguish between different things.

By 2016, Google’s AI AlphaGo had beaten both Fan Hui, the European Go champion, and Lee Sedol, the world champion.

Where did this miracle come from? From a radical departure from expert systems. The approach became inductive: instead of coding rules by hand, as with expert systems, the idea is to let computers discover them on their own through correlation and classification, based on vast amounts of data.
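The contrast with hand-coded rules can be shown with a deliberately tiny Python sketch. It is an illustration under simple assumptions, not a description of any system mentioned in this article: the data is made up, and the “learning” consists only of picking the cutoff value that misclassifies the fewest labeled examples.

```python
def learn_threshold(examples):
    """Pick the cutoff that classifies the labeled examples with the fewest errors."""
    values = sorted(x for x, _ in examples)
    # Candidate cutoffs are the midpoints between consecutive observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(cutoff):
        # Count examples where the prediction "x > cutoff" disagrees with the label.
        return sum((x > cutoff) != label for x, label in examples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (measurement, belongs_to_category).
DATA = [(2.0, False), (3.5, False), (4.0, False),
        (9.0, True), (12.0, True), (15.0, True)]

threshold = learn_threshold(DATA)
print(threshold)
```

Nobody wrote the rule “values above 6.5 belong to the category”; the program derived it from the examples. Modern systems apply the same principle at a vastly larger scale, with far richer models and far more data.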

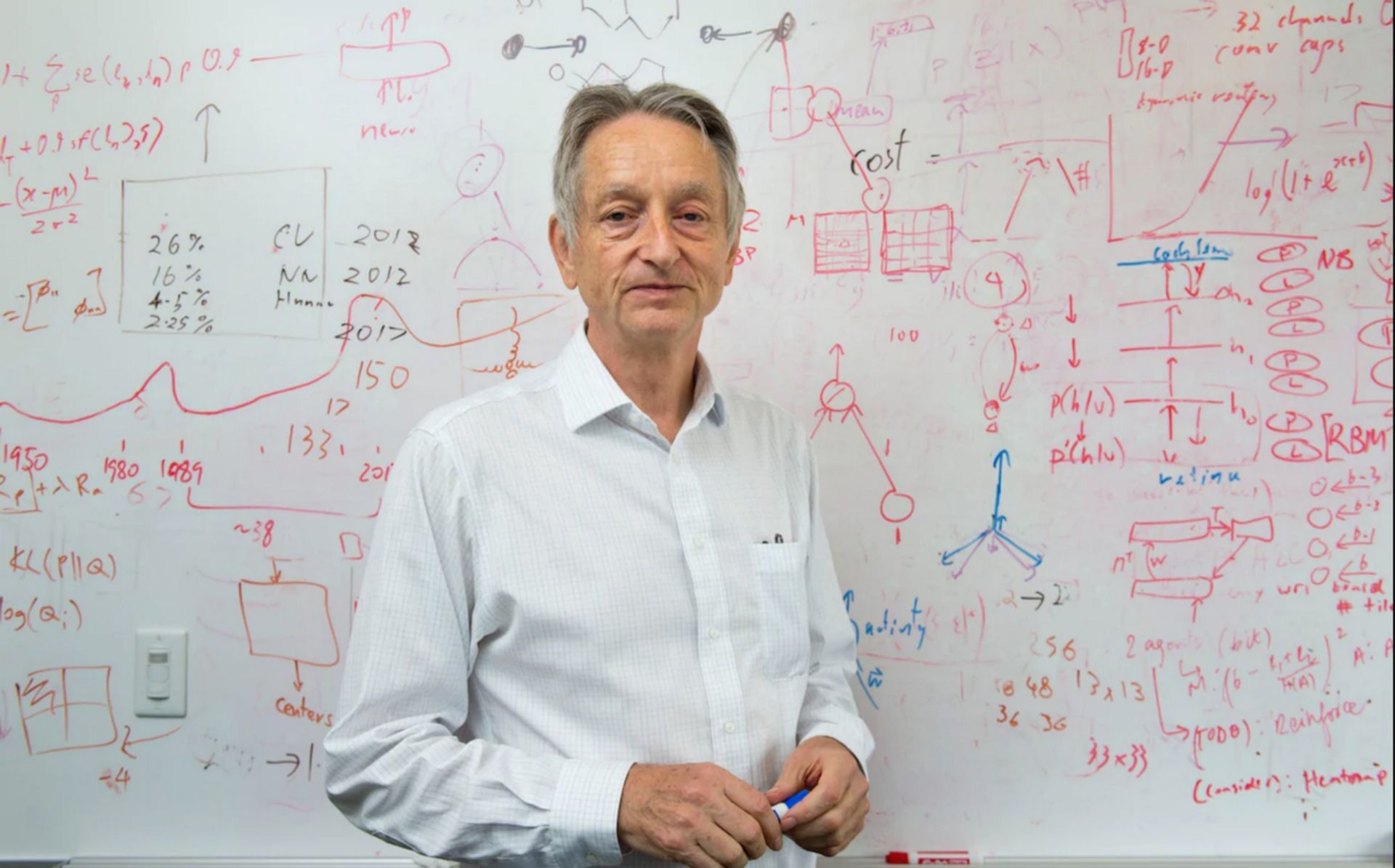

Among machine learning techniques, deep learning appears to be the most promising for a variety of applications. In 2003, Geoffrey Hinton, Yoshua Bengio, and Yann LeCun decided to launch a research program to bring neural networks up to date. With the help of Hinton’s Toronto laboratory, experiments carried out simultaneously at Microsoft, Google, and IBM showed that this type of learning cut error rates for speech recognition in half. Hinton’s image recognition team achieved similar results.

Almost overnight, the vast majority of research teams adopted this technology, whose advantages were undeniable. Although this form of learning has brought significant progress in text recognition, specialists like Yann LeCun note that there is still a long way to go before text understanding systems can be built.

Conversational agents provide a good illustration of this difficulty: while our smartphones are currently capable of transcribing instructions, they are unable to properly contextualize them or discern our intents.

That concludes our summary of the development of AI technologies to date. Keep reading so that you don’t miss the latest news on AI technologies like Notion AI and Meta Galactica AI.