Nvidia revealed the Grace CPU Superchip and the H100 GPU at Nvidia GTC 2022. The company today announced its next-generation Hopper GPU architecture and the Hopper H100 GPU, as well as a new data center chip that pairs two high-performance CPUs, dubbed the “Grace CPU Superchip” (not to be confused with Nvidia’s Grace Hopper Superchip).

Nvidia Grace CPU: Specs, price, and release date

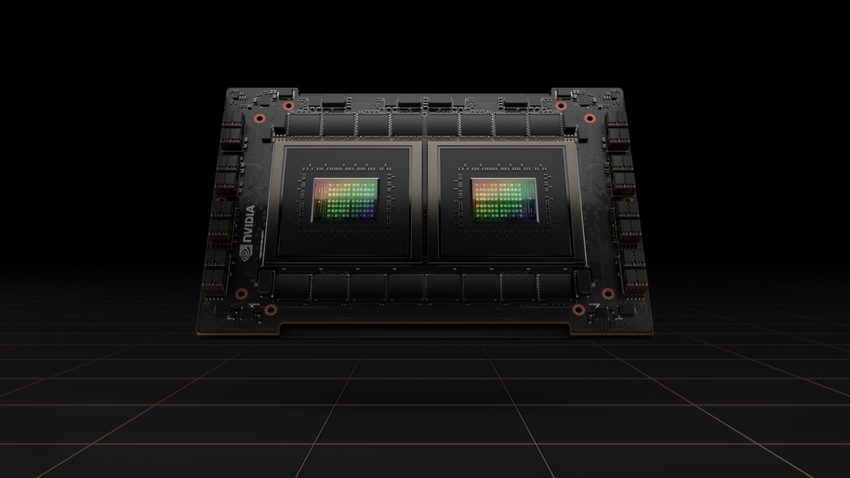

The Grace CPU Superchip is the company’s first attempt at a dedicated data center CPU. The Arm-based chip will feature a staggering 144 cores and 1 terabyte per second of memory bandwidth, according to Nvidia. It actually combines two Grace CPUs connected over Nvidia’s NVLink interconnect, an approach comparable to Apple’s M1 Ultra architecture.

The new CPU, which will use fast LPDDR5X memory, is expected to ship in the first half of 2023, and Nvidia says it will offer 2x the performance of conventional servers. Nvidia estimates that the chip will score 740 on the SPECrate®2017_int_base benchmark, putting it head-to-head with high-end AMD and Intel data center processors (some of which score higher, though with lower performance per watt).
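As a rough back-of-the-envelope check on these figures, the sketch below splits the 144 cores across the two Grace CPUs and divides Nvidia’s estimated benchmark score by the core count. The variable names are illustrative, and the per-core figure is simple arithmetic on the quoted numbers, not something Nvidia has published.

```python
# Figures quoted in the article for the Grace CPU Superchip.
TOTAL_CORES = 144          # cores across the whole superchip
CPUS_PER_SUPERCHIP = 2     # two Grace CPUs linked over NVLink
SPECRATE_ESTIMATE = 740    # Nvidia's estimated SPECrate 2017 int base score

# Cores on each of the two Grace CPUs, assuming an even split.
cores_per_cpu = TOTAL_CORES // CPUS_PER_SUPERCHIP

# Naive per-core share of the estimated score (derived, not an official figure).
score_per_core = SPECRATE_ESTIMATE / TOTAL_CORES

print(f"{cores_per_cpu} cores per CPU, about {score_per_core:.2f} points per core")
```

Dividing a throughput score evenly by core count is only a convenient way to compare chips with different core counts; it says nothing about single-thread performance.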

“A new type of data center has emerged — AI factories that process and refine mountains of data to produce intelligence. The Grace CPU Superchip offers the highest performance, memory bandwidth, and NVIDIA software platforms in one chip and will shine as the CPU of the world’s AI infrastructure.”

-Jensen Huang, founder and CEO of Nvidia

In many ways, this new chip is the natural progression of the Grace Hopper Superchip and Grace CPU announced last year. The Grace Hopper Superchip combines a CPU and GPU into a single system-on-a-chip design. This system, which will also debut in the first half of 2023, will include a GPU with 600GB of memory for large models, and Nvidia claims that its memory bandwidth will be 30x higher than that of a GPU in a conventional server. According to Nvidia, these processors are intended for “gigantic-scale” AI and high-performance computing applications.

The Grace CPU Superchip is an Arm v9-based SoC that can be configured as a standalone CPU system or used in servers with up to eight Hopper-based GPUs.

The company indicates that it is working with “leading HPC, supercomputing, hyperscale and cloud customers,” implying that these systems will eventually be available on a cloud provider near you.

No information about the price has been shared yet.

Nvidia H100 GPU: Specs, price, and release date

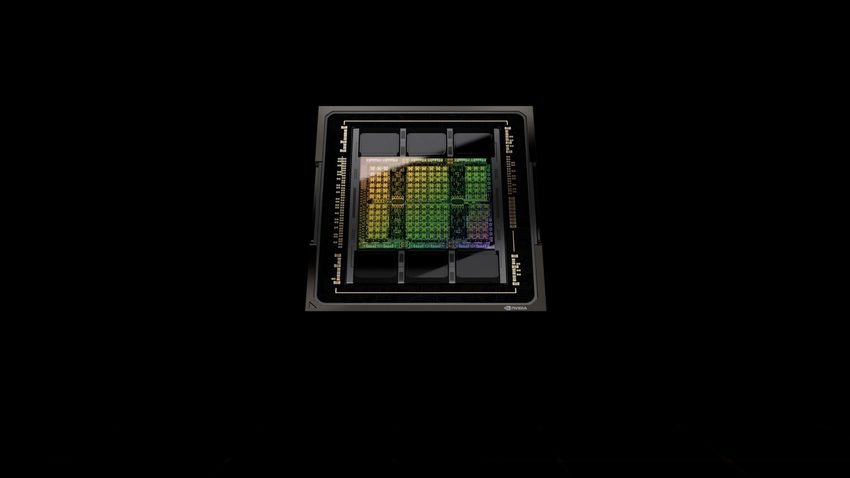

Nvidia is introducing a number of new and improved technologies with Hopper, but the most important may be the architecture’s focus on transformer models, which have become the machine learning technique of choice for many applications and which power models like GPT-3 and BERT.

The H100 chip’s new Transformer Engine promises to speed up model training by up to six times. Because the architecture also includes Nvidia’s new NVLink Switch system for linking multiple nodes, large server clusters powered by these chips will be able to scale up to support huge networks with less overhead.

“The largest AI models can require months to train on today’s computing platforms. That’s too slow for businesses. AI, high performance computing and data analytics are growing in complexity with some models, like large language ones, reaching trillions of parameters. The NVIDIA Hopper architecture is built from the ground up to accelerate these next-generation AI workloads with massive compute power and fast memory to handle growing networks and datasets.”

-Dave Salvator

The new Transformer Engine uses custom Tensor Cores that can mix 8-bit precision and 16-bit half-precision as needed while maintaining accuracy.
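To give a feel for what choosing between 8-bit and 16-bit precision “as needed” could look like, here is a toy sketch in Python. It is not Nvidia’s algorithm, which the announcement does not describe; the function `choose_precision`, its dynamic-range rule, and the `max_ratio_8bit` threshold are all hypothetical and chosen only for illustration.

```python
def choose_precision(values, max_ratio_8bit=2.0 ** 17):
    """Pick '8-bit' or '16-bit' for one tensor using a toy dynamic-range rule.

    Hypothetical heuristic: if the ratio between the largest and smallest
    non-zero magnitudes is small enough, assume an 8-bit format can cover it;
    otherwise fall back to 16-bit. The threshold is an illustrative assumption.
    """
    magnitudes = [abs(v) for v in values if v != 0.0]
    if not magnitudes:
        # An all-zero tensor loses nothing at the lower precision.
        return "8-bit"
    dynamic_range = max(magnitudes) / min(magnitudes)
    return "8-bit" if dynamic_range <= max_ratio_8bit else "16-bit"


# Hypothetical per-layer values: one narrow-range layer, one wide-range layer.
layers = {
    "attention_scores": [0.5, -1.0, 2.0],
    "gradients": [1e-6, 1.0],
}
plan = {name: choose_precision(vals) for name, vals in layers.items()}
print(plan)
```

The point of the sketch is only the shape of the decision: precision is picked per tensor from its observed statistics, so layers that tolerate 8-bit math get the speedup while the rest stay at 16-bit.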

No information about the price has been shared yet.