Today Google introduced a new AI-based upscaling technique, described in a blog post titled High Fidelity Image Generation Using Diffusion Models, that generates high-fidelity images from low-resolution ones. The research from Google’s AI division shows how recent advances in this area make it possible to produce strikingly detailed images. The company’s machine learning model can take a photo with very low resolution and upscale it, filling in plausible fine detail.

Google introduces a new AI-supported upscaling technology

There are several ways to upscale photographs with AI. The one Google uses is called diffusion modeling, a class of generative models first proposed in 2015.

As Google explains, the system takes a low-resolution image as input and builds a high-resolution image from it. To do this, the model is first trained on a corruption process in which noise is progressively added to a high-resolution image until only pure noise remains. From there it “learns to reverse this process, beginning from pure noise and progressively removing noise to reach a target distribution through the guidance of the input low-resolution image.”
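The quoted description, which starts from pure noise and removes it step by step, implies a forward process that gradually buries an image in noise. Below is a minimal NumPy sketch of that forward corruption only. The linear schedule, the step count, and the names `make_alpha_bar` and `add_noise` are illustrative assumptions borrowed from common diffusion-model practice, not details given in Google's post, and no trained network is involved.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal variance left at each step.

    The linear schedule and its endpoints are assumed values, not Google's.
    """
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(image, t, alpha_bar, rng):
    """Sample the corrupted image at step t directly from the clean image."""
    noise = rng.standard_normal(image.shape)
    return np.sqrt(alpha_bar[t]) * image + np.sqrt(1.0 - alpha_bar[t]) * noise

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
clean = rng.uniform(-1.0, 1.0, size=(64, 64, 3))  # stand-in for a training image

for t in (0, 250, 500, 999):
    noisy = add_noise(clean, t, alpha_bar, rng)
    corr = np.corrcoef(clean.ravel(), noisy.ravel())[0, 1]
    print(f"step {t:4d}: signal weight {np.sqrt(alpha_bar[t]):.3f}, "
          f"correlation with original {corr:.3f}")
```

By the last step the signal weight is close to zero, so the sample is essentially pure noise. In a real system a neural network would be trained to undo these steps one at a time, with the low-resolution input supplied as guidance.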

Google’s tool creates high fidelity images using diffusion modeling

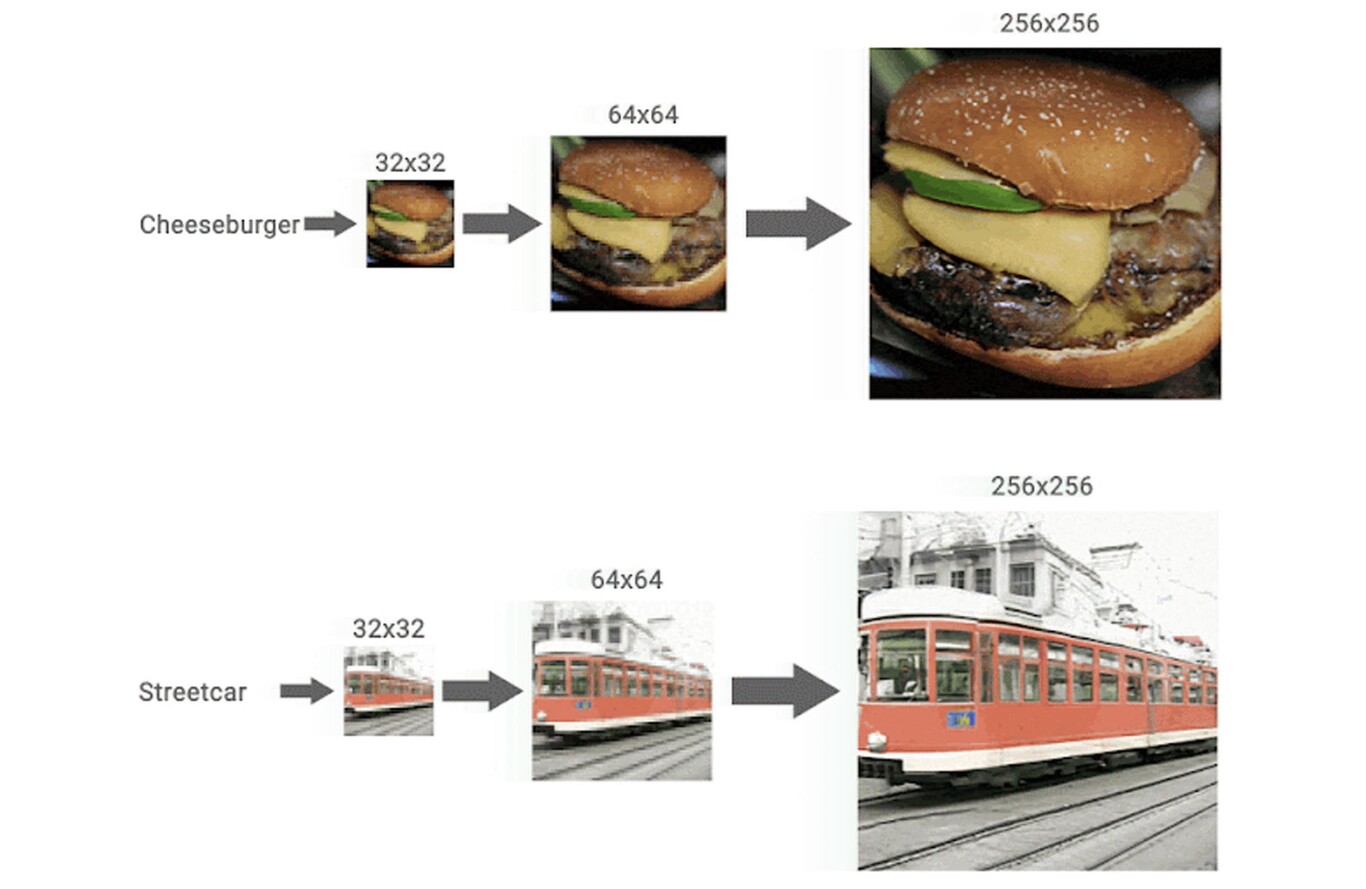

With this method, Google improves portraits of people in particular. It also goes a step further and uses a second model to push the quality even higher. For example, it first upscales a 32 x 32 image to 64 x 64, then takes that new image as the input for the step up to 128 x 128, and so on.
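The step-by-step upscaling described above can be sketched as a simple chain in which each stage consumes the previous stage's output. In this sketch `placeholder_upscaler` is a hypothetical stand-in that merely repeats pixels; in Google's system each stage would be a trained diffusion model. The function names and the 2x factor per stage are assumptions chosen to match the 32 → 64 → 128 example.

```python
import numpy as np

def placeholder_upscaler(image, factor=2):
    """Hypothetical stand-in for one trained model: nearest-neighbor pixel repeat."""
    return np.repeat(np.repeat(image, factor, axis=0), factor, axis=1)

def cascade(image, stages):
    """Run each stage on the previous stage's output, keeping every result."""
    outputs = [image]
    for stage in stages:
        outputs.append(stage(outputs[-1]))
    return outputs

low_res = np.random.default_rng(0).uniform(0.0, 1.0, size=(32, 32, 3))
results = cascade(low_res, [placeholder_upscaler, placeholder_upscaler])
print([r.shape for r in results])
# prints [(32, 32, 3), (64, 64, 3), (128, 128, 3)]
```

Splitting the work this way means no single stage has to invent all of the missing detail at once; each one only bridges a modest jump in resolution.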

The results are striking: the technique can produce genuinely detailed photographs from very little input. Although there are some minor errors, the photographs look convincingly real. In fact, without knowing the context, most people would probably not realize that an AI had upscaled them.