On August 20, Tesla held a live presentation in which it introduced its upcoming humanoid robot, which grabbed all the attention. However, another big announcement went largely unnoticed: the company’s new AI chip, the Tesla D1.

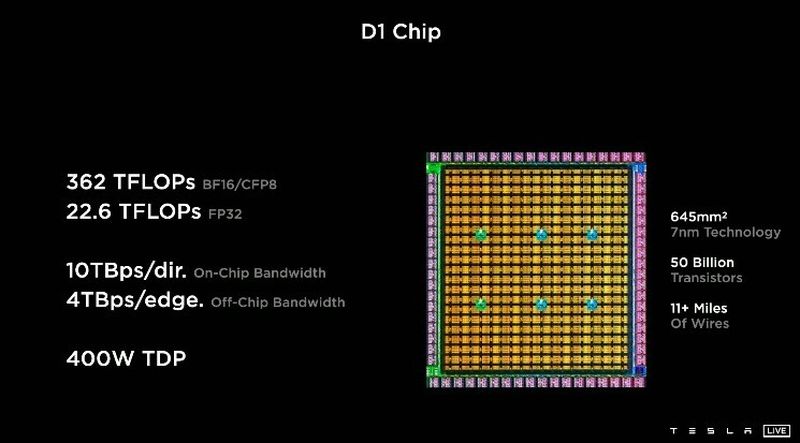

This chip has impressive specifications: it is built on a seven-nanometer process, packs 50 billion transistors into a 645 mm² die, and delivers up to 362 TFLOPS. It is designed to be linked with other D1 chips to build large processing networks.

Tesla’s powerful solution for AI leadership

Designed by Tesla and manufactured by TSMC, the D1 is the new artificial intelligence chip from Elon Musk’s company. Its 645 mm² die holds 50 billion transistors and is intended to handle complex AI computations. For comparison, the NVIDIA A100 GPU packs 54 billion transistors into an 826 mm² die.
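One way to read that comparison is transistor density. The short sketch below simply divides the article’s rounded transistor counts by the quoted die areas; the dictionary and the `density_millions_per_mm2` helper are illustrative names, not anything published by Tesla or NVIDIA. With these figures the D1 comes out at roughly 77.5 million transistors per mm² versus about 65.4 million for the A100, so just under 20% denser, although rounded headline numbers make this only a rough comparison.

```python
# Rough transistor-density comparison using the rounded figures quoted
# in the article (transistor counts, die areas in mm^2).
CHIPS = {
    "Tesla D1": {"transistors": 50e9, "area_mm2": 645},
    "NVIDIA A100": {"transistors": 54e9, "area_mm2": 826},
}


def density_millions_per_mm2(transistors: float, area_mm2: float) -> float:
    """Return transistor density in millions of transistors per mm^2."""
    return transistors / area_mm2 / 1e6


if __name__ == "__main__":
    for name, spec in CHIPS.items():
        density = density_millions_per_mm2(spec["transistors"], spec["area_mm2"])
        print(f"{name}: {density:.1f} M transistors/mm^2")
```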

D1 chips can communicate directly with one another, so they can be combined into supercomputers or other training networks.

According to Tesla, the D1 delivers up to 22.6 TFLOPS in FP32 and 362 TFLOPS in BF16/CFP8, with an on-chip bandwidth of 10 TB/s per direction. These figures are meant to be multiplied by joining several chips into supercomputing units.

Tesla stated that joining 3,000 D1 chips together yields a supercomputer with a computational performance of 1.1 ExaFLOPS, which, according to Tesla, would make it the most powerful AI training supercomputer in the world.
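The 1.1 ExaFLOPS figure lines up with simply multiplying the per-chip BF16/CFP8 peak by 3,000: 3,000 × 362 TFLOPS = 1,086,000 TFLOPS, or about 1.09 EFLOPS. The sketch below reproduces that arithmetic, assuming perfect linear scaling with no interconnect or utilization losses (a theoretical peak, not measured performance). `TFLOPS_PER_CHIP` and `cluster_peak_tflops` are hypothetical names used only for illustration. At FP32, the same 3,000 chips would peak at roughly 67.8 PFLOPS.

```python
# Theoretical peak throughput of a cluster of D1 chips, using Tesla's
# per-chip peak figures. Assumes perfect linear scaling, which real
# systems do not achieve.
TFLOPS_PER_CHIP = {"BF16/CFP8": 362.0, "FP32": 22.6}


def cluster_peak_tflops(num_chips: int, precision: str) -> float:
    """Return the peak TFLOPS of num_chips D1 chips at the given precision."""
    return num_chips * TFLOPS_PER_CHIP[precision]


if __name__ == "__main__":
    chips = 3000
    for precision in TFLOPS_PER_CHIP:
        tflops = cluster_peak_tflops(chips, precision)
        # 1 EFLOPS = 1,000,000 TFLOPS and 1 PFLOPS = 1,000 TFLOPS
        print(f"{precision}: {tflops / 1e6:.3f} EFLOPS ({tflops / 1e3:,.1f} PFLOPS)")
```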

The real application of this chip will be felt in Tesla’s vehicles, though indirectly: the D1 is a training chip, and Tesla plans to use D1-based supercomputers to train the neural networks behind its AI capabilities and Autopilot. The chip itself is meant for Tesla’s data centers rather than for its cars; what reaches the vehicles is the software it helps train.