Twitter’s automatic photo cropping algorithm favored “young, thin, fair-skinned faces,” according to the results of a contest held by the social network.

The company deactivated automatic photo cropping in March 2021. Many users had pointed out that, when a group image was posted, white people were highlighted over Black people.

The contest organized by Twitter corroborated those complaints. The participants, experts in artificial intelligence, highlighted the biases in the network's system.

The winners showed that the algorithm favored “young, slim, fair-skinned faces, smooth skin texture, with stereotypically feminine features.” First place went to Bogdan Kulynych, a graduate student at EPFL: he received $3,500.

The second-place entry showed that the algorithm was biased against people with white or gray hair, implying age discrimination.

The third-place entry showed that it favored English over Arabic writing in images.

Looking for improvements in Twitter’s AI

Let’s remember that the system is in constant development, so it can still be improved. Drawing on the experts’ opinions and findings, Twitter was looking for better guidelines for automatic photo cropping.

Rumman Chowdhury, head of Twitter’s META team, analyzed the results.

3rd place goes to @RoyaPak who experimented with Twitter's saliency algorithm using bilingual memes. This entry shows how the algorithm favors cropping Latin scripts over Arabic scripts and what this means in terms of harms to linguistic diversity online.

— Engineering (@XEng) August 9, 2021

“When we think about biases in our models, it’s not just about the academic or the experimental,” Chowdhury said. “(It’s about) how that also works with the way we think about society.”

“I use the phrase ‘life imitating art, and art imitating life.’ We create these filters because we think that’s what’s beautiful, and that ends up training our models and driving these unrealistic notions of what it means to be attractive.”

Twitter’s META team studies the ethics, transparency, and accountability of machine learning.

How did the winner arrive at his conclusion?

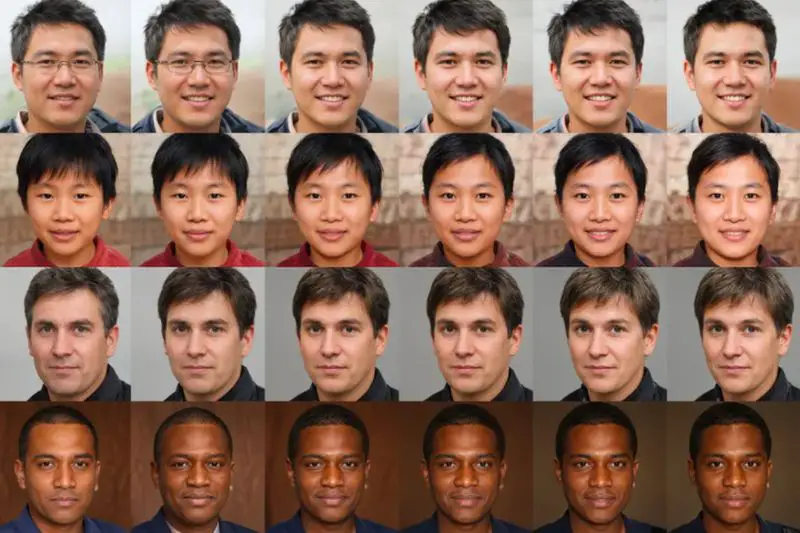

To reach his conclusion about Twitter’s algorithm, Bogdan Kulynych used an AI program called StyleGAN2. With it, he generated a large number of realistic faces that he varied by skin color, by feminine versus masculine facial features, and by thinness.

Wow, this was an unexpected conclusion of the week! My submission was awarded the 1st place in the Twitter's Algorithmic Bias program. Many thanks to @ruchowdh, @TwitterEng META team, and the jury…

— Bogdan Kulynych 🇺🇦 (@hiddenmarkov) August 8, 2021

As Twitter explains, Kulynych fed the variants into the network’s automatic photo cropping algorithm to find which ones it favored.

“(They cropped) those that did not meet the algorithm’s preferences for body weight, age, and skin color,” the expert highlighted in his results.
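The comparison described above can be illustrated with a minimal sketch. It is not Kulynych's code or Twitter's model: the function names are hypothetical, images are reduced to single numbers, and `toy_saliency` is a stand-in for whatever scoring model is under test. It only shows the general idea of pairing each original face with a modified variant and counting how often the model scores the variant higher.

```python
from typing import Callable, Sequence, Tuple, TypeVar

Image = TypeVar("Image")


def preference_rate(
    pairs: Sequence[Tuple[Image, Image]],
    saliency: Callable[[Image], float],
) -> float:
    """Return the fraction of (original, variant) pairs where the variant scores higher."""
    if not pairs:
        raise ValueError("need at least one (original, variant) pair")
    wins = sum(
        1 for original, variant in pairs if saliency(variant) > saliency(original)
    )
    return wins / len(pairs)


def toy_saliency(image: float) -> float:
    """Hypothetical stand-in: each 'image' is just a number used as its own score."""
    return image


# Hypothetical data: each pair is (original, variant with one attribute changed).
toy_pairs = [(0.2, 0.5), (0.6, 0.4), (0.1, 0.9), (0.3, 0.8)]
rate = preference_rate(toy_pairs, toy_saliency)
print(f"variant preferred in {rate:.0%} of pairs")
```

A rate well above 50 percent across many pairs would suggest that the model prefers the modified attribute, while a rate near 50 percent would suggest no clear preference.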

Companies and racial bias: how do they deal with it?

Twitter, with its contest, confirmed the pervasive nature of social bias in algorithmic systems. Now comes a new challenge: how to fight these biases?

“AI and machine learning are just the Wild West, no matter how skilled you think your data science team is,” noted Patrick Hall, an AI researcher.

“If you’re not finding your mistakes, or the bug bounties aren’t finding your mistakes, who is finding your mistakes? Because you have errors.”

His words recall how other companies have responded to similar failures. The Verge recalls that when an MIT team found racial and gender bias in Amazon’s facial recognition algorithms, the company tried to discredit the researchers.

It later placed a temporary ban on the use of those algorithms.