Apple has decided to go further in its fight against child pornography. The company has created a new scanning and encryption system with which it will check its users' photos for suspicious content. The new machine-learning-based feature will not reach every country for the moment. It will be tested first in the United States, but it has already aroused suspicion among cybersecurity experts.

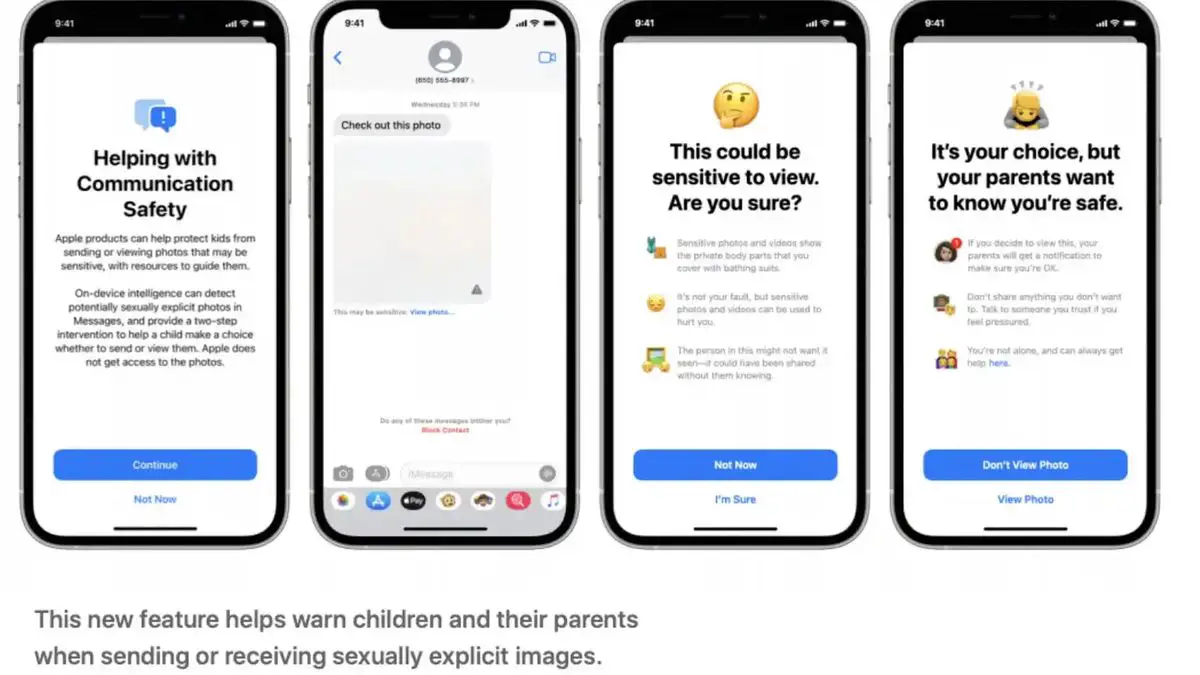

With this, the company confirmed the information that Matthew Green, professor of cryptography at Johns Hopkins University, had shared on Twitter on Thursday. From now on, iPhone users will receive an alert if any of the images they try to send via iMessage is suspected of depicting the sexual abuse of minors.

“Messages uses machine learning on the device to analyze image attachments and determine if a photo is sexually explicit. The feature is designed so that Apple does not have access to Messages,” Apple explains in a document outlining how the system works, which is not as new as it sounds.

How does it work?

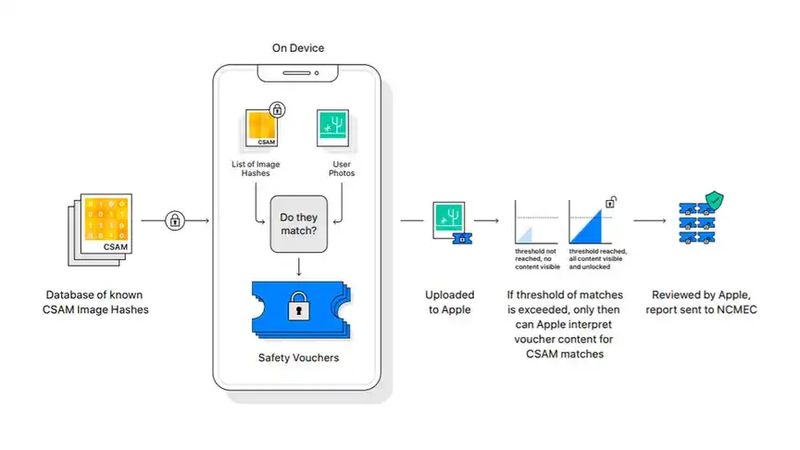

The technology will be used in iOS, macOS, watchOS, and iMessage, where all images that users intend to share will be analyzed. To do this, Apple has turned to a machine learning algorithm known as NeuralHash.

The hashing technology analyzes the image without anyone needing to view it or open the file. It converts each photograph into a unique number that describes its content, like a digital fingerprint, but one that no user could interpret.
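To make the fingerprint idea concrete, here is a minimal sketch of a much simpler perceptual hash, the classic "average hash". It is not NeuralHash, which the article describes only at a high level; the function names and the tiny 4x4 sample images are assumptions made up for illustration. The point it shows is that a slightly altered copy of an image can produce the same number, while a different image produces a different one.

```python
def average_hash(pixels):
    """Fingerprint a grayscale image given as rows of 0-255 integers.

    Illustrative stand-in only: each pixel contributes one bit,
    set to 1 when the pixel is brighter than the image's mean.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a, b):
    """Count the bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


# Hypothetical 4x4 "images" (real systems work on far larger inputs).
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [220, 230, 30, 40],
    [225, 235, 35, 45],
]
# The same picture, slightly brightened, as a re-encoded copy might be.
brightened = [[min(255, p + 12) for p in row] for row in original]
# An unrelated checkerboard pattern.
different = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

h_original = average_hash(original)
h_brightened = average_hash(brightened)
h_different = average_hash(different)

print(hamming_distance(h_original, h_brightened))  # small for near-duplicates
print(hamming_distance(h_original, h_different))   # larger for unrelated images
```

The fingerprint is just an integer, so two images can be compared without either one being displayed. That tolerance to small changes is also what Green points to as a source of false positives.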

That code is then compared with the codes the company has designated as suspicious content. If enough matches are detected, an alert is created that blurs the image and blocks it from being shared.
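The comparison step can be sketched as a simple threshold check. This is only an assumption-laden illustration: the function names, the sample hash values, and the threshold of 2 are all hypothetical, and the article does not say how many matches Apple requires or how its comparison is actually implemented.

```python
def count_matches(candidate_hashes, flagged_hashes):
    """Count how many candidate fingerprints appear in the flagged set."""
    flagged = set(flagged_hashes)
    return sum(1 for h in candidate_hashes if h in flagged)


def should_alert(candidate_hashes, flagged_hashes, threshold):
    """Return True once the number of matches reaches the threshold.

    The threshold is a made-up parameter for this sketch.
    """
    return count_matches(candidate_hashes, flagged_hashes) >= threshold


# Hypothetical fingerprints; real ones would come from a hashing step.
flagged = [0x33CC, 0xA5A5, 0x0F0F]
few_matches = [0x1234, 0x33CC, 0x9999]
many_matches = [0x33CC, 0xA5A5, 0x7777]

print(should_alert(few_matches, flagged, threshold=2))   # one match only
print(should_alert(many_matches, flagged, threshold=2))  # two matches
```

Requiring several matches before raising an alert, rather than acting on a single one, is one way such a system can reduce the impact of an occasional false positive.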

Apple says that parents will be able to receive alerts if their children try to send content classified as suspicious. The authorities could also be alerted to these matches so that a human team could review the images.

Matthew Green explains on his Twitter account that this technology can produce false positives because these hashes are not very accurate: “This is on purpose. They are designed to find images that look like bad images, even if they have been resized, compressed, etc.” The company disputes this claim: “The risk of the system incorrectly flagging an account is extremely low. In addition, Apple manually reviews all reports made to NCMEC to ensure the accuracy of the reports.”

The privacy risks

What could be a key tool for pursuing criminals, pedophiles and terrorists is at the same time a threat to the privacy of innocent citizens and a very useful weapon for totalitarian regimes, the cybersecurity expert warns.

The problem with this new tool lies rather in where it is going to look for those similarities. Photos kept on devices and in iCloud are stored encrypted on Apple servers but, as experts explain, Apple holds the keys needed to decrypt them.

The only content with end-to-end encryption (E2E) is what is sent through iMessage, which, as with WhatsApp, is protected from one device to the other so that no one but the users can see it. This is where, in theory, Apple’s new system would come in.

This kind of content analysis is not so new. Facebook scans posts for nudity on its social networks (Facebook and Instagram). Apple has also used hashing systems to look for child abuse images sent via email, similar to the systems used by Gmail and other cloud email providers, which are not protected by end-to-end encryption.

Green believes this technology sends a troubling message. “Regardless of Apple’s long-term plans, they have sent a very clear signal. (…) it is safe to build systems that scan users’ phones for prohibited content,” he explains on his Twitter profile.

However, not all cybersecurity experts have been so critical. Nicholas Weaver, a senior research fellow at the International Computer Science Institute at UC Berkeley, told Motherboard that Apple’s new Messages feature “seems like a VERY good idea.” “Overall, Apple’s approach seems well thought out to be effective and maximize privacy,” he adds.