Intel fights back against Apple M1 by choosing its benchmarks.

If you thought that Intel was going to stand idly by while the media and users praised Macs with Apple Silicon, you were wrong. Intel has decided to get down in the mud to blunt the surprise effect of these processors. It has done so with its own set of benchmarks, carefully chosen to slam Apple’s MacBook Air and Pro with M1.

The problem is that in many cases the comparison is dubious. In others, Intel changes the processor being compared in order to improve its results. Let’s see how.

PCWorld reported on the presentation prepared by Intel, in which two computers are compared. On one side is a MacBook Pro M1 with 16GB of RAM; on the other, an unspecified PC with an 11th generation Intel Core i7 (1185G7) and 16GB of RAM. Because Intel does not name the PC model that uses this chip and memory, replicating the tests to verify them is going to be complicated.

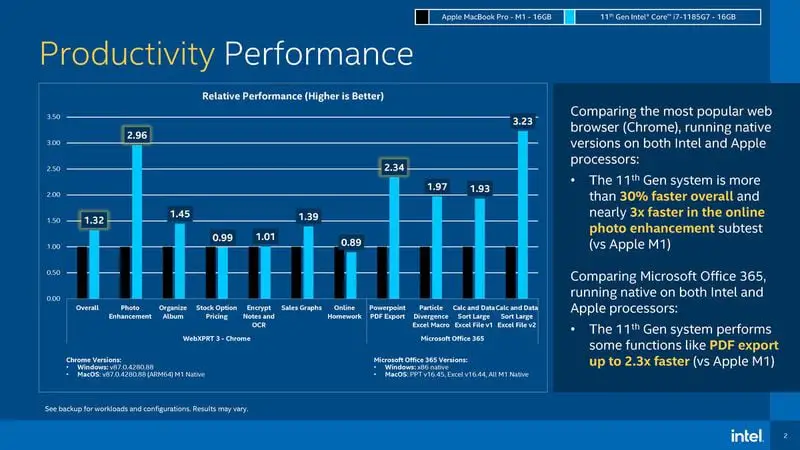

Looking at the tests, we see that Intel has chosen to compare very specific functions. For the productivity tests, it used Chrome and Office on both platforms, in their native versions. According to Intel, its processor outperforms the M1 by a wide margin when it comes to enhancing photos, exporting a PowerPoint to PDF, and sorting large amounts of data in Excel.

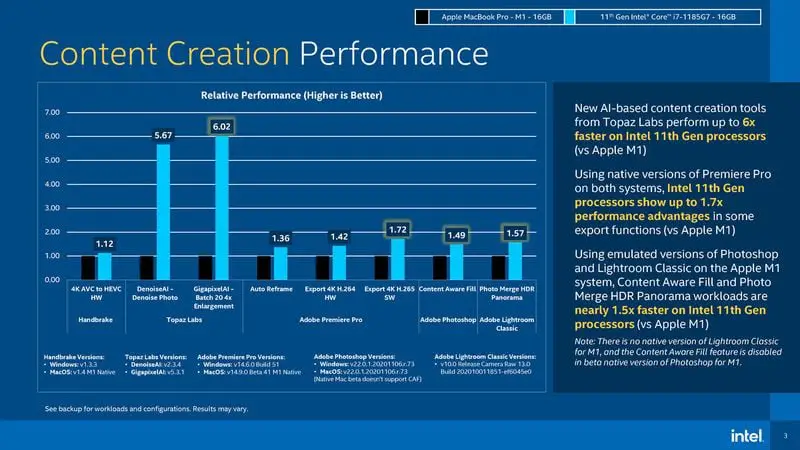

The biggest performance surprise of the 11th generation Intel Core comes in the Topaz Labs tests. Here, Intel says its processor is six times better than Apple’s M1. We should point out that Topaz Labs designs its apps specifically to take advantage of Intel hardware acceleration. This is a very important point in the comparison, because the tests on the M1 Mac do not use apps that take similar advantage of Apple’s hardware. As for Adobe, it should be remembered that its software is still in beta for M1 Macs and some features are not yet present.

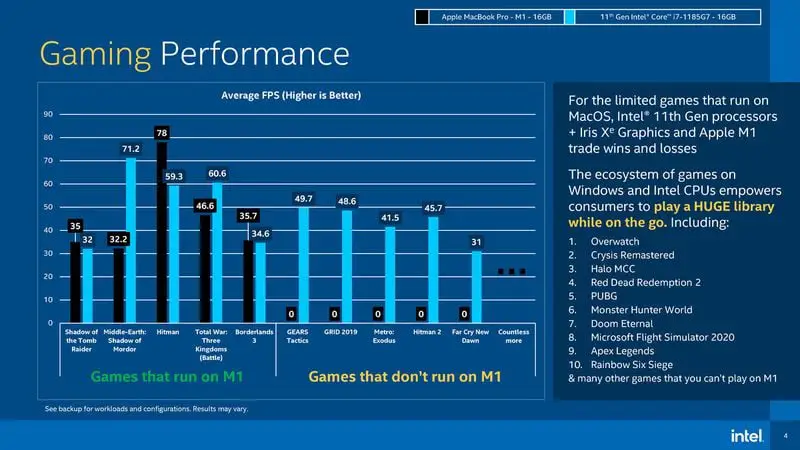

It’s common knowledge that Macs are not the ideal platform if gaming is your thing. Numerous games such as Shadow of the Tomb Raider are not yet ready for Apple Silicon. Despite this, the M1s score quite well on those games that are present on the platform (with Intel pointing out those that are not).

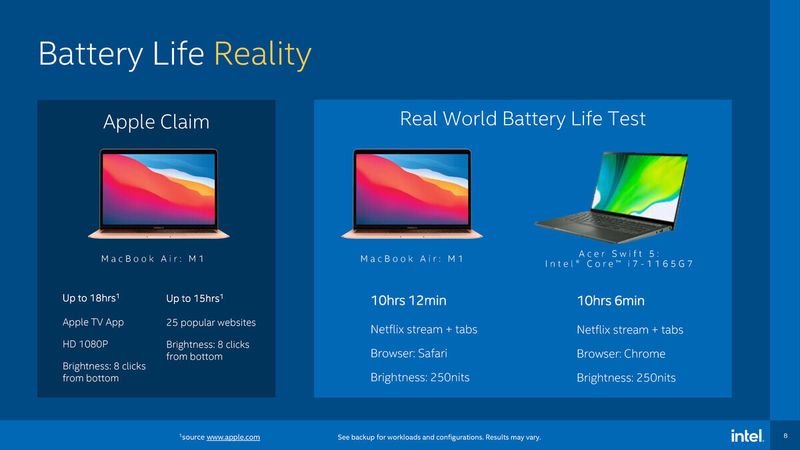

For the battery test, Intel changes both Apple’s machine and its own. It switches to a MacBook Air, whose battery life is shorter than that of the MacBook Pro compared so far, while on the Intel side we have an Acer machine with an i7 1165G7. The test uses Safari and Chrome, respectively, with both machines set to 250 nits of brightness.

The result is very similar for both devices, with just over 10 hours of battery life and the Mac slightly ahead. The catch is that Intel chose the M1 Mac with the shorter battery life and used Safari to stream Netflix instead of the Apple TV app, for which Apple claims 18 hours (at 250 nits the figure would probably be lower, but still more than those 10).

Benchmarks chosen to reinforce Intel’s bias

In general, claims of this kind need to be corroborated by users and the specialized press in real-world use. While the M1 Macs have received very good reviews in all these areas, there are still not enough models with these processors to compare them in real-world use.

It is also striking that Intel picks apps and functions that take better advantage of the Santa Clara company’s processors, but does not do the same for Apple’s M1. That is the case when it uses Chrome instead of Safari, Safari instead of the Apple TV app, or the Adobe suite, which is still in beta and has features that are not even activated. Not to mention the change of model for the battery test, where it uses the Mac with the shortest battery life.

Intel is at a delicate moment in its history, with a transition to a new CEO underway, problems in chip design and manufacturing, and major customers such as Apple creating their own solutions.

Interestingly, when it switches to the MacBook Air for the battery test, Intel makes no mention at all of that machine’s lack of a fan (or of the quiet operation of the MacBook Pro). It is a point well worth taking into account, yet it plays no part in the manufacturer’s tests.

Each manufacturer is within its rights to compare what it excels at with the rest. But it must also be aware that its claims have to be well-founded. Otherwise, once its products reach the hands of users and the specialized media, those claims can end up putting it to shame.