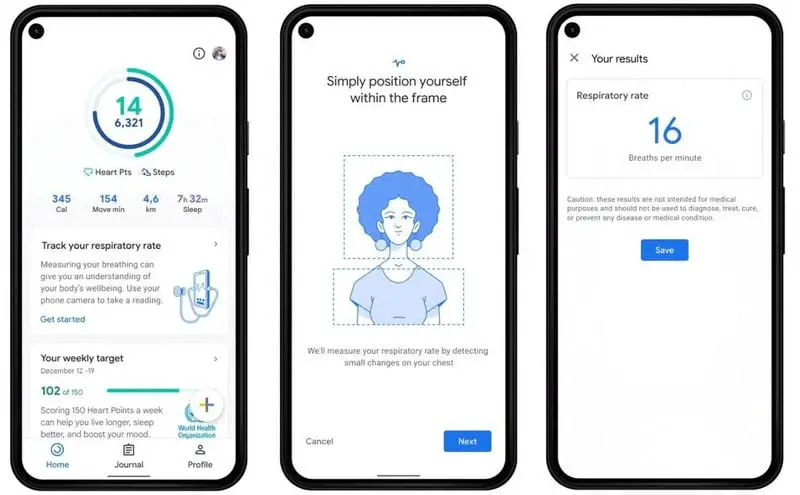

Google has announced a major update to Google Fit, its activity and health tracking app: starting next month, users will be able to measure both their heart rate and respiration rate using only their smartphone’s camera.

Usually, this type of measurement requires dedicated hardware, such as the purpose-built sensors found in wearables, fitness bands, smartwatches, or heart rate monitors. Many users buy these devices to track sports activity or fitness in general. The novelty here is obtaining the same measurements with nothing more than a phone’s camera and the Google Fit app, which the Internet giant, like Apple and Samsung with their own apps, has continued to improve.

Google Fit: More functions

The feature comes from the Google Health group led by Shwetak Patel, a professor of computer science at the University of Washington who has been recognized with several awards for his work in digital health. The team has developed a method based on computer vision to take these measurements using only cameras, with results they say are comparable to clinical ones. A study has been conducted to validate these results, and the corresponding research, which is undergoing external peer review, is to be published in an academic journal.

To obtain respiration rate, the app uses a technique known as “optical flow,” which tracks the movements of a person’s chest as they breathe. In the clinical validation study, which covered both typical people in good health and people with existing respiratory conditions, Google’s data indicates high accuracy: to within 1 breath per minute.
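As a rough illustration of the last step of such a pipeline, the sketch below turns a chest-motion signal into a breaths-per-minute figure. It is not Google's method, which has not been published in detail: the function name `respiration_rate_bpm` and its `displacement` input (one chest-position value per video frame, as an optical-flow stage might produce) are assumptions made for this example, and the breath counting uses simple zero crossings.

```python
import math


def respiration_rate_bpm(displacement, fps):
    """Estimate breaths per minute from a chest-displacement signal.

    displacement: one value per frame (hypothetical input, e.g. the
        chest position recovered by an optical-flow stage).
    fps: frames per second of the video.
    """
    n = len(displacement)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two samples and a positive fps")

    # Remove the constant offset so the signal oscillates around zero.
    mean = sum(displacement) / n
    centered = [x - mean for x in displacement]

    # Each upward zero crossing marks the start of one breath cycle.
    crossings = [
        i for i in range(1, n) if centered[i - 1] < 0 <= centered[i]
    ]
    if len(crossings) < 2:
        return 0.0  # not enough cycles to estimate a rate

    # Whole cycles between the first and last crossing, over elapsed time.
    cycles = len(crossings) - 1
    seconds = (crossings[-1] - crossings[0]) / fps
    return 60.0 * cycles / seconds


# Usage: a synthetic 60-second clip at 30 fps, breathing at 0.25 Hz.
fps = 30
signal = [math.sin(2 * math.pi * 0.25 * i / fps + 0.3) for i in range(60 * fps)]
rate = respiration_rate_bpm(signal, fps)
```

A real recording would be noisy, so a practical version would smooth or band-pass the signal before counting crossings.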

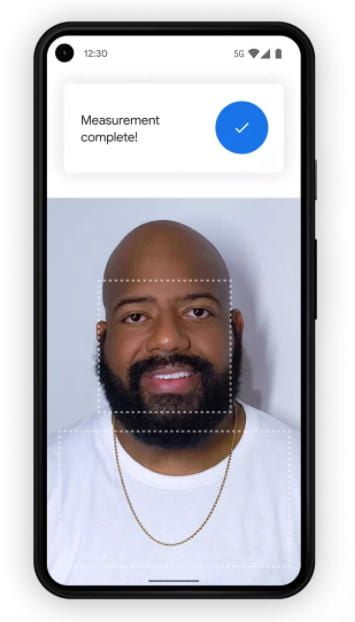

For heart rate, Google Fit uses the camera to detect “subtle color changes” in a user’s fingertip. These changes indicate when oxygenated blood is flowing from the heart to the rest of the body. The company’s validation data (still subject to external review, as noted above) also shows high accuracy: a margin of error of 2% on average. Google is working to apply the same technique to color changes in a person’s face, although that feature is still in the exploratory phase.
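The general idea of reading a pulse from color changes can be sketched in a few lines. This is an illustration only, not Google's implementation: the function name `heart_rate_bpm`, the `red_means` input (the average red-channel value of each fingertip frame), and the 40 to 200 bpm search range are all assumptions made for the example. It scans candidate heart rates and picks the one whose frequency is strongest in the signal.

```python
import math


def heart_rate_bpm(red_means, fps, lo_bpm=40, hi_bpm=200):
    """Estimate heart rate from per-frame mean red-channel intensity.

    red_means: one brightness value per frame (hypothetical input).
    fps: frames per second of the video.
    lo_bpm, hi_bpm: assumed plausible range of heart rates to search.
    """
    n = len(red_means)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two samples and a positive fps")

    # Remove the average brightness, leaving only the pulsing component.
    mean = sum(red_means) / n
    centered = [x - mean for x in red_means]

    best_bpm, best_power = lo_bpm, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq = bpm / 60.0  # candidate heart rate in Hz
        # Correlate the signal with a cosine and a sine at this frequency
        # (a single-frequency discrete Fourier transform).
        re = sum(
            c * math.cos(2 * math.pi * freq * i / fps)
            for i, c in enumerate(centered)
        )
        im = sum(
            c * math.sin(2 * math.pi * freq * i / fps)
            for i, c in enumerate(centered)
        )
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Usage: a synthetic 10-second clip at 30 fps with a 72 bpm (1.2 Hz) pulse.
fps = 30
frames = [128 + 2 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(10 * fps)]
bpm = heart_rate_bpm(frames, fps)
```

Restricting the search to a plausible range keeps slow drifts in lighting or finger pressure from being mistaken for a heartbeat.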

Google will make these new measurement features available to users during March. While they will initially only be available on Pixel smartphones, the goal is to expand them “in the coming months” to any device running Android 6 or later.

Google notes that “these measurements are not intended for medical diagnostics or to assess medical conditions,” but they may be of interest to the general public, all the more so because they don’t need dedicated hardware. We have seen similar apps in the past, but modern phones have increasingly capable cameras and support for hardware-accelerated computer vision algorithms, which allows greater accuracy.

As for privacy, Google promises that none of the data obtained will be sold to third-party companies or used by Google for any of its other services, such as targeted ads.