Google is experimenting with an AI project that turns web pages into videos. Called URL2Video, it is not yet available to the public, but it is an interesting project. It works by extracting text, images, fonts, colors and other key elements from a page, which the application then selects and edits into a video on its own.

Google designed the tool's underlying mechanism around the knowledge of designers experienced in both video production and web design. From that input, the team defined a set of general editing styles, including criteria for which content to select and how to rank it by importance.

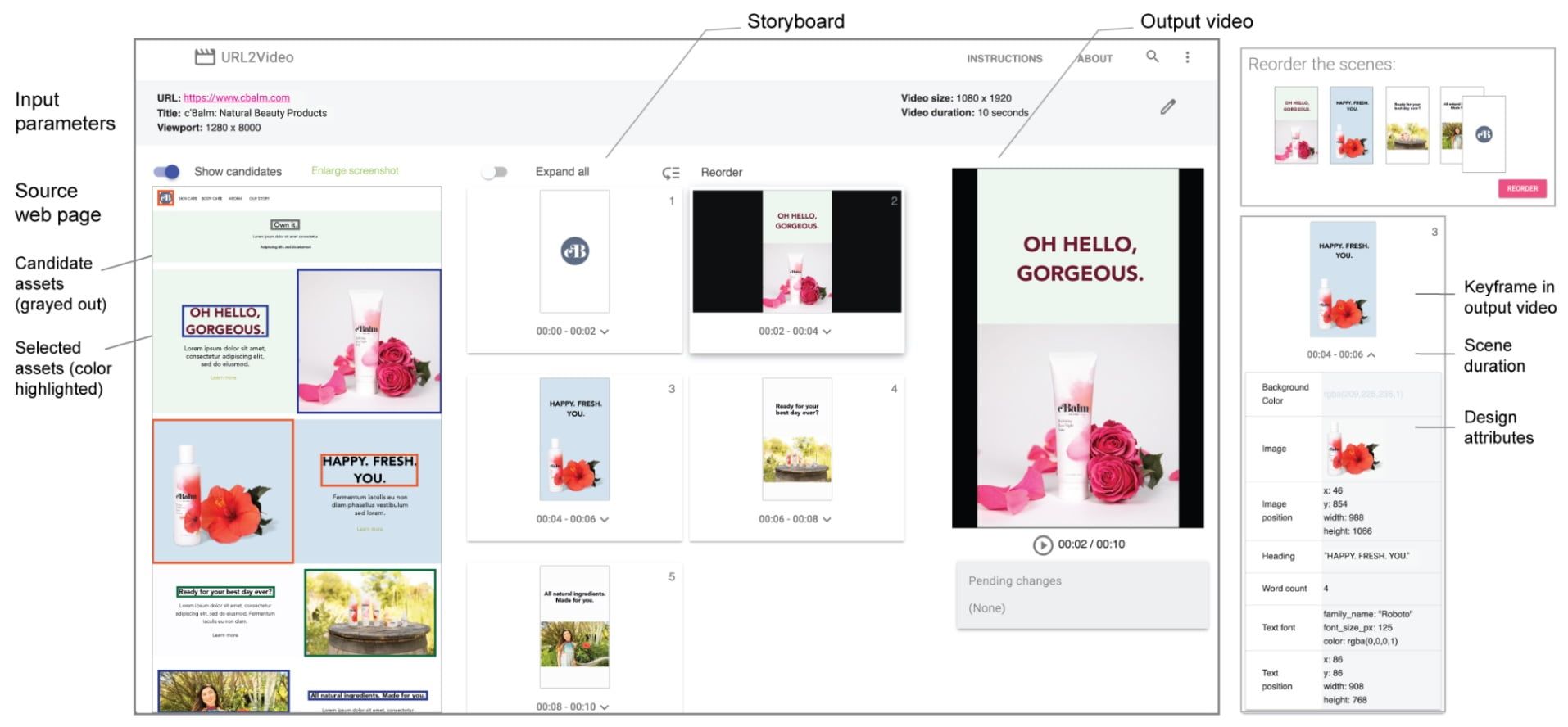

To use URL2Video, which is still a research prototype, you supply the URL of the page you want to work with. The automatic mechanism described above then selects the elements from that page, and the design of the generated clip stays consistent with the website's look.
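The exact selection rules URL2Video uses are not spelled out in this article. As a rough illustration of ranking extracted elements by importance, the following Python sketch assumes a hypothetical `PageElement` record and an invented priority order: headings, then images, then paragraphs, with shallower and larger elements ranked first within each type. None of these names or weights come from URL2Video itself.

```python
from dataclasses import dataclass

# Hypothetical priority per element type: lower number = more important.
# These weights are illustrative assumptions, not URL2Video's actual rules.
KIND_PRIORITY = {"heading": 0, "image": 1, "paragraph": 2}


@dataclass
class PageElement:
    kind: str      # "heading", "image" or "paragraph"
    content: str   # text, or an image URL
    area: int      # rendered area on the page, in square pixels
    depth: int     # nesting depth in the DOM tree


def rank_elements(elements: list[PageElement], limit: int) -> list[PageElement]:
    """Return at most `limit` elements, most important first.

    Sort key: element type priority, then shallower DOM depth,
    then larger on-screen area. Unknown types sort last.
    """
    ranked = sorted(
        elements,
        key=lambda e: (KIND_PRIORITY.get(e.kind, len(KIND_PRIORITY)), e.depth, -e.area),
    )
    return ranked[:limit]
```

With one heading, two images and one paragraph and `limit=3`, the paragraph is the element that gets dropped. A real system would derive these priorities from the designer-defined styles rather than hard-coding them.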

After this initial processing, the user is asked to set some parameters, such as the target duration of the video and its aspect ratio. Before the final clip is rendered, the interface lets the user adjust certain design attributes (colors, fonts, etc.). Based on early tests, Google reports that it is pleased with the results URL2Video has produced so far.
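The aspect-ratio parameter becomes a concrete spatial constraint on the output. The following is a minimal sketch of how a ratio such as 16:9 or 9:16 could be mapped to a frame size in pixels. It assumes a hypothetical `frame_size` helper and a 1080-pixel short side; neither is taken from URL2Video.

```python
from fractions import Fraction


def parse_aspect_ratio(ratio: str) -> Fraction:
    """Parse a ratio such as "16:9" into width/height as an exact fraction."""
    width, height = (int(part) for part in ratio.split(":"))
    if width <= 0 or height <= 0:
        raise ValueError(f"invalid aspect ratio: {ratio!r}")
    return Fraction(width, height)


def frame_size(ratio: str, short_side: int = 1080) -> tuple[int, int]:
    """Return (width, height) in pixels, with the shorter side fixed at `short_side`.

    Hypothetical helper for illustration. Both dimensions are rounded to
    even numbers, since common encoders using 4:2:0 chroma subsampling
    require even frame sizes.
    """
    aspect = parse_aspect_ratio(ratio)
    if aspect >= 1:  # landscape or square: height is the short side
        width, height = short_side * aspect, Fraction(short_side)
    else:            # portrait: width is the short side
        width, height = Fraction(short_side), short_side / aspect
    return (round(width / 2) * 2, round(height / 2) * 2)
```

Using exact fractions avoids floating-point drift when the ratio does not divide evenly, and rounding happens only once at the end.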

“We are developing new techniques that support the audio track and a voiceover in video editing. All in all, we envision a future where creators focus on making high-level decisions and an ML model interactively suggests detailed temporal and graphical edits for a final video creation on multiple platforms,” said Peggy Chi and Irfan Essa of Google’s research staff.

In the URL2Video creation interface, users specify the URL, the size of the target page view, and the video output parameters. URL2Video analyzes the site and extracts its main visual components. It then creates a series of scenes and displays their keyframes as a storyboard. These components are rendered into an output video that meets the specified temporal and spatial constraints. Users can play back the video, examine the design attributes, and make adjustments, such as rearranging the scenes, to generate variations of the video.
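To illustrate what meeting a temporal constraint could involve, here is a hedged sketch of a hypothetical `plan_storyboard` function. It assumes that scenes arrive already ordered by importance, that each has a positive weight, and that every scene needs a minimum on-screen time. Scenes that do not fit are dropped from the end, and leftover time is shared in proportion to the weights. This is an illustrative allocation scheme, not the algorithm described in the URL2Video paper.

```python
# Hypothetical scene-timing helper; an illustrative scheme, not URL2Video's algorithm.


def plan_storyboard(
    scenes: list[tuple[str, float]], total_s: float, min_scene_s: float = 1.5
) -> list[tuple[str, float]]:
    """Assign a duration to each scene so the durations sum to total_s.

    scenes: (name, weight) pairs, most important first; weights must be positive.
    Scenes that cannot get at least min_scene_s seconds are dropped from the end.
    """
    if total_s <= 0 or min_scene_s <= 0:
        raise ValueError("durations must be positive")
    if any(weight <= 0 for _, weight in scenes):
        raise ValueError("scene weights must be positive")

    # Keep only as many top-priority scenes as the minimum duration allows.
    max_scenes = int(total_s // min_scene_s)
    kept = scenes[:max_scenes]
    if not kept:
        return []

    # Every kept scene gets the minimum; spare time is split by weight.
    spare = total_s - min_scene_s * len(kept)
    total_weight = sum(weight for _, weight in kept)
    return [(name, min_scene_s + spare * weight / total_weight) for name, weight in kept]
```

For example, `plan_storyboard([("title", 3), ("hero image", 2), ("features", 1)], total_s=12, min_scene_s=2)` returns `[("title", 5.0), ("hero image", 4.0), ("features", 3.0)]`. Each scene gets its 2-second minimum, and the remaining 6 seconds are split 3:2:1.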

URL2Video was presented at UIST 2020, the ACM Symposium on User Interface Software and Technology. An accompanying research paper describes the main technical details of the prototype.

Beyond being a remarkable demonstration of what artificial intelligence can do, the tool could eventually become a practical way to automatically generate video material that promotes a website's content.