Microsoft researchers have introduced a new development in the field of language models: the 1-bit LLM. Building on the earlier BitNet research, this work marks a notable shift in the way language models are constructed and optimized. At its heart is a strikingly efficient way of representing model parameters, or weights: each one takes roughly 1.58 bits, as opposed to the 16-bit floating-point (FP16) format prevalent in earlier models.

The first-of-its-kind 1-bit LLM

Dubbed BitNet b1.58, this approach limits each weight to one of just three values: -1, 0, or +1. Three possible values carry log2(3) ≈ 1.58 bits of information, which is where the "1.58" in the name comes from. This sharp reduction in the number of bits required per parameter is the foundation of the technology. Surprisingly, despite its lean bit consumption, BitNet b1.58 is reported to match traditional full-precision models in both perplexity and end-task performance when the two are compared at the same model size and with the same training data.
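To make the three-value idea concrete, here is a minimal sketch in plain Python. It assumes a simple scheme in which weights are divided by their mean absolute value, then rounded and clipped to -1, 0, or +1. The function name `ternarize` and the sample weights are illustrative only, and this is not Microsoft's implementation.

```python
import math

# Three possible values per weight carry log2(3) bits of information.
BITS_PER_TERNARY_WEIGHT = math.log2(3)

def ternarize(weights, eps=1e-8):
    """Map floats to {-1, 0, +1}.

    Assumed scheme for illustration: scale by the mean absolute value,
    round to the nearest integer, then clip to the range [-1, 1].
    """
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale

# Hypothetical full-precision weights.
example_weights = [0.42, -0.07, -0.91, 0.03, 0.55, -0.38]
ternary, scale = ternarize(example_weights)

print(f"{BITS_PER_TERNARY_WEIGHT:.2f} bits per weight")  # 1.58 bits per weight
print(ternary)  # [1, 0, -1, 0, 1, -1]
```

Small weights collapse to 0 and larger ones to -1 or +1, so only the sign and a coarse notion of magnitude survive. The single `scale` value is kept so outputs can be rescaled afterwards.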

What are 1-bit LLMs?

The generative AI scene is evolving day by day, and the latest breakthrough in this dynamic arena is the advent of 1-bit Large Language Models. It might sound surprising, but this development has the potential to transform the field by addressing one of the most significant hurdles LLMs face today: their gargantuan size.

Typically, the weights of a Machine Learning model, whether it’s an LLM or something as straightforward as Logistic Regression, are stored as 32-bit or 16-bit floating-point numbers. This standard approach is a double-edged sword: it allows for high precision in the model’s calculations, but it also makes every weight expensive to store and move around.

This bloat is precisely why deploying heavyweights like the GPT family on local systems or in production environments becomes a logistical nightmare. A model's storage footprint is roughly its number of weights multiplied by the bits used per weight, so billions of weights stored at 16 or 32 bits each balloon its size to unmanageable proportions.

In stark contrast to traditional models, 1-bit LLMs use just a single bit per weight, so each weight can take only one of two values (in the original BitNet, -1 or +1). This seemingly minor tweak has major implications: compared with FP16, it cuts the storage needed for the weights by up to a factor of 16.

Such a reduction in size opens the way for the deployment of LLMs on much smaller devices, making advanced AI applications more accessible and feasible across a wider range of platforms.
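A back-of-the-envelope calculation shows how much the bit width matters. The sketch below assumes a hypothetical 7-billion-parameter model and counts weight storage only. It ignores activations, any layers kept in higher precision, and packing overhead (ternary weights are often stored in 2 bits in practice), so the figures are rough lower bounds rather than measurements.

```python
import math

def weight_storage_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

NUM_PARAMS = 7_000_000_000  # hypothetical model size, chosen for illustration

formats = [
    ("FP32", 32),
    ("FP16", 16),
    ("ternary", math.log2(3)),  # about 1.58 bits, the information-theoretic minimum
    ("1-bit", 1),
]

sizes = {label: weight_storage_gb(NUM_PARAMS, bits) for label, bits in formats}

for label, size in sizes.items():
    print(f"{label:>8}: {size:6.2f} GB")
```

Under these assumptions the FP16 weights occupy 14 GB, while the ternary and 1-bit versions come in at well under 2 GB, small enough for many consumer devices.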

Back to BitNet b1.58

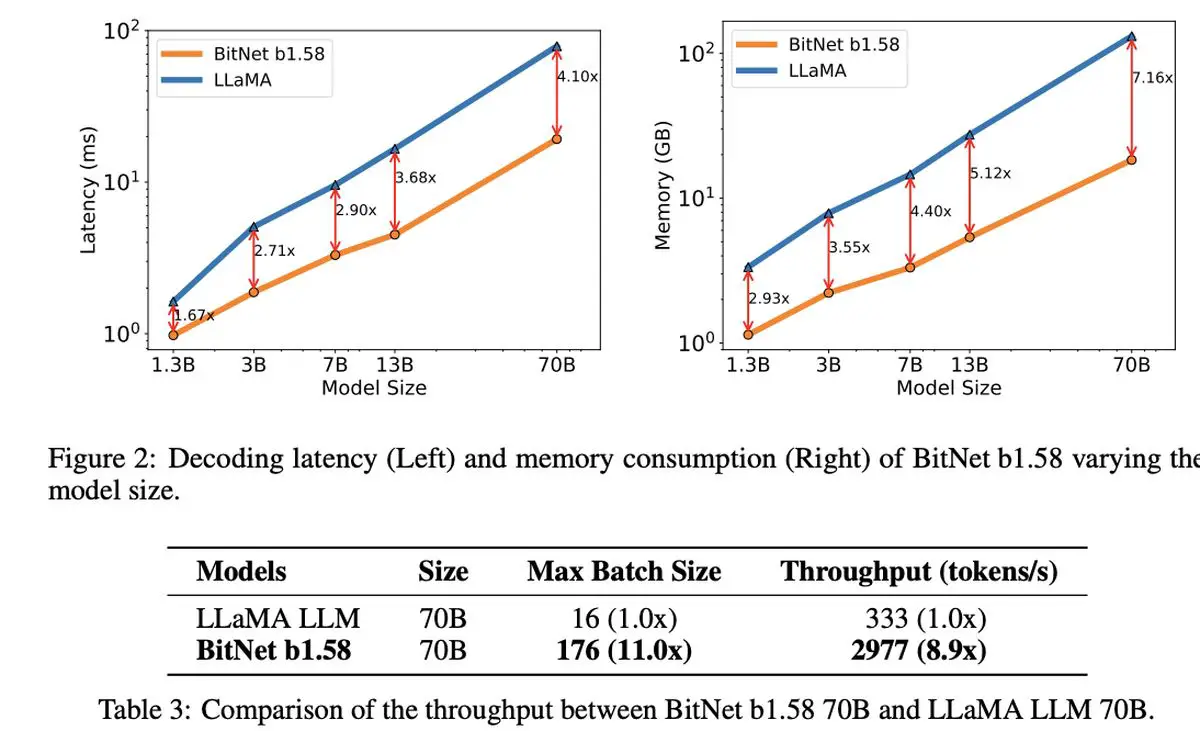

What’s truly remarkable about the 1.58-bit LLM is its cost-effectiveness. The model shines in terms of reduced latency, lower memory usage, enhanced throughput, and decreased energy consumption, presenting a sustainable option in the computationally intensive world of AI.
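One reason such savings are plausible is that multiplying by -1, 0, or +1 needs no real multiplication: each term is added, subtracted, or skipped. The sketch below illustrates this with a hypothetical `ternary_dot` helper in plain Python. It shows the idea only and is not how an optimized kernel would be written.

```python
def ternary_dot(ternary_weights, activations):
    """Dot product where every weight is -1, 0, or +1.

    Only additions and subtractions are performed; zero weights are skipped.
    """
    total = 0.0
    for w, x in zip(ternary_weights, activations):
        if w == 1:
            total += x
        elif w == -1:
            total -= x
        # w == 0 contributes nothing
    return total

# Hypothetical values for illustration.
weights = [1, 0, -1, 1]
activations = [0.5, 2.0, 1.5, -0.25]

result = ternary_dot(weights, activations)
reference = sum(w * x for w, x in zip(weights, activations))

print(result)     # -1.25
print(reference)  # -1.25
```

The add-only result matches the ordinary multiply-and-sum reference, which is why hardware built around additions could, in principle, run such models more cheaply.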

Microsoft’s 1-bit LLM doesn’t just stand out for its efficiency. It represents a fresh perspective on scaling and training language models, balancing top-notch performance with economic viability. It hints at the dawn of new computing paradigms and the possibility of creating specialized hardware tailored to run these leaner, more efficient models.

The discussion around BitNet b1.58 also opens up intriguing possibilities for handling long sequences more efficiently in LLMs, and it points to lossless compression techniques as an area for further research that could boost efficiency even more.

Alongside this innovation, Microsoft has also been making waves with its latest small language model, Phi-2. This 2.7 billion-parameter model has shown strong capabilities in understanding and reasoning, further evidence of Microsoft’s ongoing commitment to pushing the boundaries of AI technology. The introduction of the 1-bit LLM, along with the success of Phi-2, highlights an exciting era of innovation and efficiency in language model development.

Featured image credit: Drew Beamer/Unsplash